botorch

botorch copied to clipboard

botorch copied to clipboard

Problem with Polytope Sampler

Hi,

I encountered a problem with the get_polytope_samples when the individual variables live in different dimensions. Here is a small didactic example:

from torch import tensor

from botorch.utils.sampling import get_polytope_samples

import matplotlib.pyplot as plt

samples = get_polytope_samples(

n=100,

bounds = tensor([[0.1,0.3,0.1,30.],[0.6,0.7,0.7,700.]]),

equality_constraints=[(tensor([1, 2, 0]), tensor([1., 1., 1.]), 1.0)]

)

fig, ax = plt.subplots()

ax.hist(samples.numpy()[:,-1])

plt.show()

The bounds are as follows:

- 0.1 - 0.6

- 0.3 - 0.7

- 0.1 - 0.7

- 30 - 700

In addition an equality constraint is applied on the first three variables with all coefficients equal to 1 and an RHS of 1.

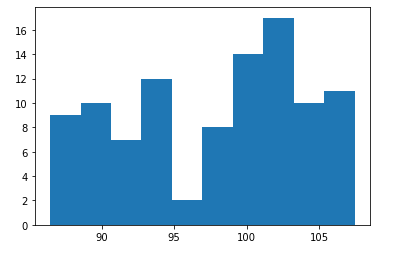

Sampling 100 samples leads to the following histogram for the fourth variables (bounds: 30-700). The distribution is not uniform at all and the values stay in a very slim interval.

In the next step, I scaled all variables to into the 0 - 1 range, including the equality constraint by using the formula below:

where

where xi* is the scaled variable and s_i is the difference between upper and lower bound for the variable. The code below generates 100 samples for this case including scaling back into the original bounds:

lower = tensor([0.1,0.3,0.1,30.])

upper = tensor([0.6,0.7,0.7,700.])

space = upper - lower

scaled_samples = get_polytope_samples(

n=100,

bounds = tensor([[0., 0., 0., 0.],[1., 1., 1., 1.]]),

equality_constraints=[(tensor([1, 2, 0]), tensor([0.4000, 0.6000, 0.5000]), 0.5)]

)

samples = lower + scaled_samples*space

fig, ax = plt.subplots()

ax.hist(samples.numpy()[:,-1])

plt.show()

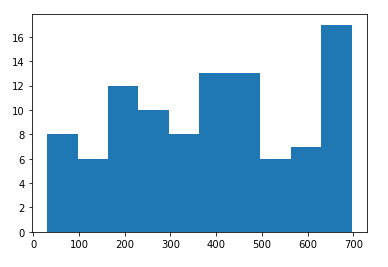

Now, the fourth variables is sampled in much more uniform way:

I think the reason for this behavior is that the Hit and Run sampler needs only a small step to reach the boundary of the polytope (defined by the equality constraint) and that this step is to small to guarantee a proper sampling of the fourth variable.

I think the reason for this behavior is that the Hit and Run sampler needs only a small step to reach the boundary of the polytope (defined by the equality constraint) and that this step is to small to guarantee a proper sampling of the fourth variable.

I would recommend to build this scaling directly into the get_polytope_samples method (PR could be provided by me), as it is also used by gen_batch_initial_conditions when linear constraints are present. In the case that people are not running the optimization in the scaled variable space but using a Normalize InputTransform instead, this behavior can lead to problems in the optimization process.

What do you think?

Best,

Johannes

Thanks for flagging, this is a great observation. It is indeed a problem that "small step" means different things in different directions, we really want this to be isotropic. I'm not quite sure I fully understand where things go wrong in the code, I'll have to take a closer look to better understand that.

But I think your suggestion sounds great, and we'd very much welcome a PR! I think we can go even a bit more low-level than build it just into the get_polytope_samples method. Ideally we could actually make this part of the PolytopeSampler class, do the normalization on __init__, save the scaling factors there, and then change draw to call an abstract _draw method and then automatically rescale the outputs. This might be a bit more work than your suggestion though, so I guess we can start with the simpler approach.

One of the things we may want to think about a bit more is how we deal with cases in which we don't have bounds, and the polytope is defined purely via inequality constraints. I guess we could just normalize the coefficients in each column of A to have a range comparable to those in the other columns and then record those scaling factors?

I will first file a PR with the simpler solution in get_polytope_samples for the case that we have actually bounds. For the case without having bounds, I am not yet 100% sure.

I hope to have a first PR until end of the week ;)

I approved #1341, but I'm going to leave this open since there are a few minor improvements we can make:

- #1341 introduces a minor break to backwards compatibility by raising an error when non-integer indices are passed to

inequality_constraintsorequality_constraints. In a way this is good because non-integer indices don't make sense and aren't usually allowed, but it would be good to warn rather than error at least for the next release. We could eventually deprecate support for the dtypes that don't work here. - Since this problem likely arises deeper in the stack than in

get_polytope_samples, the fix should live where the issue starts (likelyHitAndRunPolytopeSampler.draworPolytopeSampler). - Ideally, we'd have tests for

get_polytope_samplesandPolytopeSamplersubclasses that fail given the old behavior and pass given the new behavior. We should have been more specific about what polytope samples ought to look like in the first place to prevent this bug from arising. - It would be nice to have a clear understanding of where the flawed behavior came from in the first place!