Slow CPU performance results in frustrating user experience

I've noticed that over the past few months, the experience of using TorchBench to test TorchDynamo for correctness has gotten much worse.

TorchBench used to run quickly, and I could check all models in minutes. Now doing the same thing seems to take hours.

From some bisecting, the slowdown seems to come from increased batch sizes. The new batch sizes appear tuned for GPU and are reasonable there, but they make running on CPU painful.

I'd propose we use different batch sizes on CPU and GPU, especially for inference where batch size is rather arbitrary. We should target execution times <10 seconds, and ideally <1 second.
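One way to hit time targets like these is to shrink the CPU batch size until a single timed iteration fits the budget. The following is a minimal sketch of that idea, not something TorchBench does today: `run_once` is a hypothetical callable that runs one model iteration at a given batch size, and halving is an arbitrary search strategy chosen for simplicity.

```python
import time
from typing import Callable


def shrink_to_budget(
    run_once: Callable[[int], None],
    batch_size: int,
    budget_s: float = 10.0,
    timer: Callable[[], float] = time.perf_counter,
) -> int:
    """Halve batch_size until one timed call of run_once fits in budget_s.

    run_once is a hypothetical callable (not a TorchBench API) that runs a
    single iteration of a model at the given batch size. The batch size is
    never reduced below 1.
    """
    while batch_size > 1:
        start = timer()
        run_once(batch_size)
        elapsed = timer() - start
        if elapsed <= budget_s:
            break  # this batch size is fast enough
        batch_size //= 2  # too slow: try half the batch
    return batch_size
```

In practice you would warm up before timing, since the first iteration of a TorchDynamo-compiled model also includes compilation time.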

We should check whether these smaller batch sizes change a model's performance characteristics, for example by turning a compute-bound model into an overhead-bound one. If they don't, giving TorchBench users a faster time-to-signal would be a huge benefit.
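A rough way to check whether a smaller batch size makes a model overhead-bound is to time a few batch sizes and fit `time ≈ overhead + per_sample * batch_size`. The linear model is an assumption (real models can deviate from it), and the function names below are illustrative, not part of TorchBench. A large overhead fraction at the proposed CPU batch size would suggest that fixed per-iteration costs dominate.

```python
from typing import Sequence, Tuple


def fit_overhead(
    batch_sizes: Sequence[float], times_s: Sequence[float]
) -> Tuple[float, float]:
    """Least-squares fit of times_s ~= overhead + per_sample * batch_size.

    Returns (overhead, per_sample), both in seconds.
    """
    n = len(batch_sizes)
    if n < 2 or n != len(times_s):
        raise ValueError("need at least two (batch_size, time) pairs")
    mean_b = sum(batch_sizes) / n
    mean_t = sum(times_s) / n
    var_b = sum((b - mean_b) ** 2 for b in batch_sizes)
    if var_b == 0:
        raise ValueError("batch sizes must not all be equal")
    cov = sum((b - mean_b) * (t - mean_t) for b, t in zip(batch_sizes, times_s))
    per_sample = cov / var_b
    overhead = mean_t - per_sample * mean_b
    return overhead, per_sample


def overhead_fraction(overhead: float, per_sample: float, batch_size: float) -> float:
    """Share of one iteration's predicted time spent on batch-independent cost."""
    return overhead / (overhead + per_sample * batch_size)
```

Comparing the fraction at the current default batch size with the fraction at the proposed CPU batch size shows how much the balance shifts toward overhead.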

Perhaps we could also have a non-default "large batch size" mode, for extra testing.

cc @xuzhao9

I agree that we should use small batch sizes for CPU inference. In addition, we should have different scales for GPU inference, because some users have smaller GPUs on which the default test batch size causes CUDA OOM. For extra-large testing, we should support multi-GPU test cases.

However, for training we usually just use the batch size from the example repository or the original paper, so it can't be adjusted arbitrarily.
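The split described here (inference batch sizes scaled per hardware tier, a fixed training batch size) could be expressed as per-model metadata. This is a minimal sketch; the tier names, the `BatchSizes` class, and the `"train"`/`"eval"` strings are hypothetical and not TorchBench's actual configuration format.

```python
from dataclasses import dataclass, field
from typing import Dict

# Hypothetical hardware tiers for inference; TorchBench does not define these.
INFERENCE_TIERS = ("cpu", "small_gpu", "gpu", "multi_gpu")


@dataclass
class BatchSizes:
    # Training batch size from the example repo or paper; never scaled.
    train: int
    # Inference batch size used when a tier has no override.
    eval_default: int
    # Per-tier inference overrides, e.g. a much smaller value for "cpu".
    eval_overrides: Dict[str, int] = field(default_factory=dict)

    def pick(self, test: str, tier: str) -> int:
        if tier not in INFERENCE_TIERS:
            raise ValueError(f"unknown tier {tier!r}")
        if test == "train":
            return self.train  # same on every tier
        if test == "eval":
            return self.eval_overrides.get(tier, self.eval_default)
        raise ValueError(f"unknown test {test!r}")
```

For example, with placeholder numbers, `BatchSizes(train=32, eval_default=256, eval_overrides={"cpu": 8}).pick("eval", "cpu")` returns 8, while `pick("train", "cpu")` still returns 32.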

I'm also noticing very low CPU utilization. Usually only 1 thread active. Did something change in how we setup threading?

I don't think anything changed in the threading setup. Can you give me a command to reproduce this problem?

./torchbench.py --nothing -n100 -k alexnet

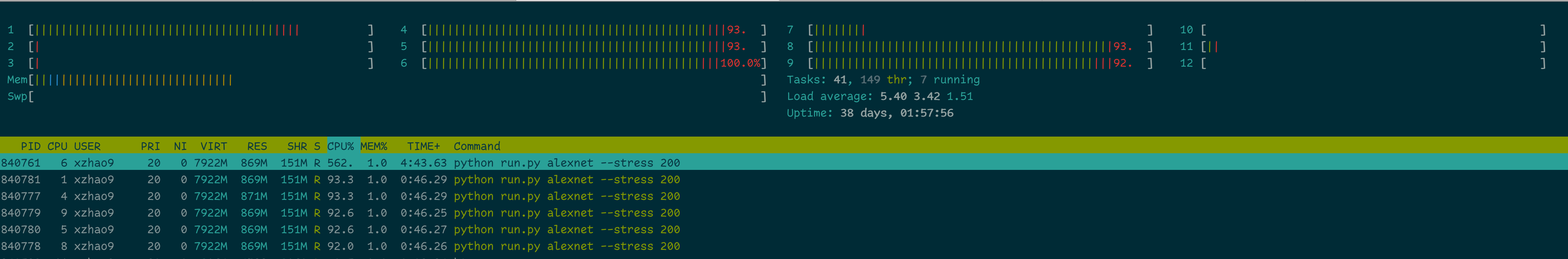

@jansel Regarding the low CPU utilization, I tried to stress-test alexnet using the newly added --stress option (https://github.com/pytorch/benchmark/pull/1002):

$ python run.py alexnet --stress 200

From htop, it looks like all cores are being used:

So I believe this is an environment setup issue if you only see 1 thread in use. For example, if you are using taskset, you also need to set the GOMP_CPU_AFFINITY environment variable to enable multi-threading.
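To rule out this kind of environment issue, a quick check is to compare the CPUs the process is allowed to run on with the machine's CPU count and look at the OpenMP variables. This is a minimal sketch: the warning heuristic (restricted affinity with GOMP_CPU_AFFINITY unset) simply mirrors the taskset advice above, and `os.sched_getaffinity` is only available on some platforms, such as Linux.

```python
import os
from typing import Any, Dict, Optional


def threading_report() -> Dict[str, Any]:
    """Collect settings that commonly limit how many CPU threads get used."""
    if hasattr(os, "sched_getaffinity"):
        # CPUs this process may run on (narrowed by e.g. taskset).
        allowed = len(os.sched_getaffinity(0))
    else:
        allowed = os.cpu_count()
    return {
        "cpu_count": os.cpu_count(),
        "allowed_cpus": allowed,
        "OMP_NUM_THREADS": os.environ.get("OMP_NUM_THREADS"),
        "GOMP_CPU_AFFINITY": os.environ.get("GOMP_CPU_AFFINITY"),
    }


def pinning_warning(report: Dict[str, Any]) -> Optional[str]:
    """Return a warning if affinity is restricted but GOMP_CPU_AFFINITY is unset."""
    total, allowed = report["cpu_count"], report["allowed_cpus"]
    pinned = total is not None and allowed is not None and allowed < total
    if pinned and not report["GOMP_CPU_AFFINITY"]:
        return "CPU affinity is restricted but GOMP_CPU_AFFINITY is not set"
    return None


if __name__ == "__main__":
    report = threading_report()
    print(report)
    print(pinning_warning(report) or "no affinity/OpenMP mismatch detected")
```

Inside the benchmark process, `torch.get_num_threads()` additionally reports how many intra-op threads PyTorch will use.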

Closed by https://github.com/pytorch/benchmark/pull/1003