TensorRT

TensorRT copied to clipboard

TensorRT copied to clipboard

Convert YoloV5 models

It is my understanding that the new stable release should be able to convert any PyTorch model with fallback to PyTorch when operations cannot be directly converted to TensorRT. I am trying to convert

I am trying to convert YoloV5s6 to TensorRT using the code that you can find below. I believe that it would be great to be able to convert this particular model given its popularity.

During the conversion I am encountering some errors. Is this because the model cannot be converted to TorchScript? I also noticed that the model is composed of classes which extends nn.Module.

Of course, YoloV5 code can be found here: https://github.com/ultralytics/yolov5

Thank you!

To Reproduce

import torch

import torch_tensorrt

model = torch.hub.load("ultralytics/yolov5", "yolov5s6")

model.eval()

compile_settings = {

"inputs": [torch_tensorrt.Input(

# For static size

shape=[1, 3, 640, 640], # TODO: depends on the model size

# For dynamic size

# min_shape=[1, 3, 224, 224],

# opt_shape=[1, 3, 512, 512],

# max_shape=[1, 3, 1024, 1024],

dtype=torch.half, # Datatype of input tensor. Allowed options torch.(float|half|int8|int32|bool)

)],

# "require_full_compilation": False,

"enabled_precisions": {torch.half}, # Run with FP16

"torch_fallback": {

"enabled": True, # Turn on or turn off falling back to PyTorch if operations are not supported in TensorRT

}

}

trt_ts_module = torch_tensorrt.compile(model, **compile_settings)

Output:

Using cache found in /home/ubuntu/.cache/torch/hub/ultralytics_yolov5_master

YOLOv5 🚀 2021-11-17 torch 1.10.0+cu113 CUDA:0 (Tesla T4, 15110MiB)

Fusing layers...

Model Summary: 280 layers, 12612508 parameters, 0 gradients

Adding AutoShape...

Traceback (most recent call last):

File "/usr/lib/python3.6/code.py", line 91, in runcode

exec(code, self.locals)

File "<input>", line 21, in <module>

File "/home/ubuntu/pycharm/venv/lib/python3.6/site-packages/torch_tensorrt/_compile.py", line 96, in compile

ts_mod = torch.jit.script(module)

File "/home/ubuntu/pycharm/venv/lib/python3.6/site-packages/torch/jit/_script.py", line 1258, in script

obj, torch.jit._recursive.infer_methods_to_compile

File "/home/ubuntu/pycharm/venv/lib/python3.6/site-packages/torch/jit/_recursive.py", line 451, in create_script_module

return create_script_module_impl(nn_module, concrete_type, stubs_fn)

File "/home/ubuntu/pycharm/venv/lib/python3.6/site-packages/torch/jit/_recursive.py", line 513, in create_script_module_impl

script_module = torch.jit.RecursiveScriptModule._construct(cpp_module, init_fn)

File "/home/ubuntu/pycharm/venv/lib/python3.6/site-packages/torch/jit/_script.py", line 587, in _construct

init_fn(script_module)

File "/home/ubuntu/pycharm/venv/lib/python3.6/site-packages/torch/jit/_recursive.py", line 491, in init_fn

scripted = create_script_module_impl(orig_value, sub_concrete_type, stubs_fn)

File "/home/ubuntu/pycharm/venv/lib/python3.6/site-packages/torch/jit/_recursive.py", line 517, in create_script_module_impl

create_methods_and_properties_from_stubs(concrete_type, method_stubs, property_stubs)

File "/home/ubuntu/pycharm/venv/lib/python3.6/site-packages/torch/jit/_recursive.py", line 368, in create_methods_and_properties_from_stubs

concrete_type._create_methods_and_properties(property_defs, property_rcbs, method_defs, method_rcbs, method_defaults)

File "/home/ubuntu/pycharm/venv/lib/python3.6/site-packages/torch/jit/_script.py", line 1433, in _recursive_compile_class

return _compile_and_register_class(obj, rcb, _qual_name)

File "/home/ubuntu/pycharm/venv/lib/python3.6/site-packages/torch/jit/_recursive.py", line 42, in _compile_and_register_class

ast = get_jit_class_def(obj, obj.__name__)

File "/home/ubuntu/pycharm/venv/lib/python3.6/site-packages/torch/jit/frontend.py", line 201, in get_jit_class_def

is_classmethod=is_classmethod(obj)) for (name, obj) in methods]

File "/home/ubuntu/pycharm/venv/lib/python3.6/site-packages/torch/jit/frontend.py", line 201, in <listcomp>

is_classmethod=is_classmethod(obj)) for (name, obj) in methods]

File "/home/ubuntu/pycharm/venv/lib/python3.6/site-packages/torch/jit/frontend.py", line 264, in get_jit_def

return build_def(parsed_def.ctx, fn_def, type_line, def_name, self_name=self_name, pdt_arg_types=pdt_arg_types)

File "/home/ubuntu/pycharm/venv/lib/python3.6/site-packages/torch/jit/frontend.py", line 302, in build_def

param_list = build_param_list(ctx, py_def.args, self_name, pdt_arg_types)

File "/home/ubuntu/pycharm/venv/lib/python3.6/site-packages/torch/jit/frontend.py", line 330, in build_param_list

raise NotSupportedError(ctx_range, _vararg_kwarg_err)

torch.jit.frontend.NotSupportedError: Compiled functions can't take variable number of arguments or use keyword-only arguments with defaults:

File "/usr/lib/python3.6/warnings.py", line 468

def __exit__(self, *exc_info):

~~~~~~~~~ <--- HERE

if not self._entered:

raise RuntimeError("Cannot exit %r without entering first" % self)

'__torch__.warnings.catch_warnings' is being compiled since it was called from 'SPPF.forward'

File "/home/ubuntu/.cache/torch/hub/ultralytics_yolov5_master/models/common.py", line 191

def forward(self, x):

x = self.cv1(x)

with warnings.catch_warnings():

~~~~~~~~~~~~~~~~~~~~~~~ <--- HERE

warnings.simplefilter('ignore') # suppress torch 1.9.0 max_pool2d() warning

y1 = self.m(x)

Expected behavior

The model gets converted.

Environment

Build information about Torch-TensorRT can be found by turning on debug messages

- Torch-TensorRT Version (e.g. 1.0.0): 8.0.1.6

- PyTorch Version (e.g. 1.0): 1.10

- CPU Architecture: Intel i7

- OS (e.g., Linux): Ubuntu 18.04

- How you installed PyTorch (

conda,pip,libtorch, source): pip - Build command you used (if compiling from source): N/A

- Are you using local sources or building from archives:

- Python version: 3.6

- CUDA version: 11.3

- GPU models and configuration: T4

- torch==1.10.0+cu113

- torch-tensorrt==1.0.0

- torchvision==0.11.1+cu113

EDIT: I am going to try to update yolov5 code to remove the warnings that seem to cause the issue, but it would be nice if this wasn't necessary. Let me know if you have suggestions on how to proceed.

I had been able to fix that issue manually but I am still struggling with this error:

RuntimeError:

Expected integer literal for index. ModuleList/Sequential indexing is only supported with integer literals. Enumeration is supported, e.g. 'for index, v in enumerate(self): ...':

File "/home/ubuntu/pycharm/projects/ai-model-yolov5-new/models/yolo.py", line 54

for i in range(self.nl):

i = int(i)

x[i] = self.m[i](x[i]) # conv

~~~~~~~~~ <--- HERE

bs, _, ny, nx = x[i].shape # x(bs,255,20,20) to x(bs,3,20,20,85)

x[i] = x[i].view(bs, self.na, self.no, ny, nx).permute(0, 1, 3, 4, 2).contiguous()

You can find the corresponding code in the YoloV5 repository.

Hi @mfoglio , Seems that it's caused by a limitation of torchscript, you can handle this piece like I did with a similar change in another repository (I did some experiments to make YOLOv5's torch.jit.script happier in this repo).

https://github.com/zhiqwang/yolov5-rt-stack/blob/4cba043/yolort/models/box_head.py#L46-L62

@zhiqwang following the notebooks in your repository I have been able to successfully create a torchscript model. However, when converting to TensorRT, I get the error:

RuntimeError:

temporary: the only valid use of a module is looking up an attribute but found = prim::SetAttr[name="_has_warned"](%self, %self.model.backbone.body.2.m.0.add)

:

Full code: script1

# Intuition for yolort

import torch_tensorrt

import cv2

import torch

from yolort.utils import cv2_imshow, get_image_from_url, read_image_to_tensor

from yolort.utils.image_utils import color_list, plot_one_box

import os

os.environ["CUDA_DEVICE_ORDER"]="PCI_BUS_ID"

os.environ["CUDA_VISIBLE_DEVICES"]="5"

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

# Read an image

# Let's request and pre-process the images that to be detected.

img_raw = get_image_from_url("https://gitee.com/zhiqwang/yolov5-rt-stack/raw/master/test/assets/bus.jpg")

# img_raw = cv2.imread('../test/assets/bus.jpg')

img = read_image_to_tensor(img_raw)

img = img.to(device)

# Model Definition and Initialization

from yolort.models import yolov5s

model = yolov5s(pretrained=True, score_thresh=0.55)

model.eval()

model = model.to(device)

# Perform inference on an image tensor

model_out = model.predict(img)

# Verify the PyTorch backend inference results

print(model_out[0]['boxes'].cpu().detach())

print(model_out[0]['scores'].cpu().detach())

print(model_out[0]['labels'].cpu().detach())

# Detection output visualization

# Get label names

import requests

labels = []

response = requests.get("https://gitee.com/zhiqwang/yolov5-rt-stack/raw/master/notebooks/assets/coco.names")

names = response.text

for label in names.strip().split('\n'):

labels.append(label)

from yolort.utils.image_utils import load_names

labels = load_names('notebooks/assets/coco.names')

colors = color_list()

for box, label in zip(model_out[0]['boxes'].tolist(), model_out[0]['labels'].tolist()):

img_raw = plot_one_box(box, img_raw, color=colors[label % len(colors)], label=labels[label])

cv2_imshow(img_raw, imshow_scale=0.5)

# Scripting YOLOv5

# TorchScript export

print(f'Starting TorchScript export with torch {torch.__version__}...')

export_script_name = 'yolov5s.torchscript.pt' # filename

model_script = torch.jit.script(model)

model_script.eval()

model_script = model_script.to(device)

# Save the scripted model file for subsequent use (Optional)

model_script.save(export_script_name)

script 2

import torch_tensorrt

import cv2

import torch

from yolort.utils import cv2_imshow, get_image_from_url, read_image_to_tensor

from yolort.utils.image_utils import color_list, plot_one_box

import os

# Scripting YOLOv5

# TorchScript export

print(f'Starting TorchScript export with torch {torch.__version__}...')

export_script_name = 'yolov5s.torchscript.pt' # filename

model_script = torch.jit.load(export_script_name)

model_script.eval()

model_script = model_script.to("cuda")

# Save the scripted model file for subsequent use (Optional)

compile_settings = {

"inputs": [torch_tensorrt.Input(

# For static size

shape=[1, 3, 640, 640], # TODO: depends on the model size

# For dynamic size

# min_shape=[1, 3, 224, 224],

# opt_shape=[1, 3, 512, 512],

# max_shape=[1, 3, 1024, 1024],

dtype=torch.half, # Datatype of input tensor. Allowed options torch.(float|half|int8|int32|bool)

)],

# "require_full_compilation": False,

"enabled_precisions": {torch.half}, # Run with FP16

# "complie_spec": {

# "torch_fallback": {

# "enabled": True, # Turn on or turn off falling back to PyTorch if operations are not supported in TensorRT

# "force_fallback_ops": [

# "aten::max_pool2d" # List of specific ops to require running in PyTorch

# ],

# "force_fallback_modules": [

# "mypymod.mytorchmod" # List of specific torch modules to require running in PyTorch

# ],

# "min_block_size": 3 # Minimum number of ops an engine must incapsulate to be run in TensorRT

# }

# }

}

trt_ts_module = torch_tensorrt.compile(model_script, **compile_settings)

Hi @mfoglio

I didn't test torch_tensorrt now, I check the SSD tutorial here, they use the torch.jit.trace mode in this example, maybe you can test torch.jit.trace with yolort also, the exported graph will be more clean

from yolort.models import yolov5s

from yolort.relaying import get_trace_module

input_shape = (640, 640)

model_func = yolov5s(pretrained=True)

trace_model = get_trace_module(model_func, input_shape=input_shape) # do the torch.jit.trace here

Hello @zhiqwang I seem to have the same issue even tracing the model:

RuntimeError:

temporary: the only valid use of a module is looking up an attribute but found = prim::SetAttr[name="_has_warned"](%self, %self.model.backbone.body.2.m.0.add)

:

@zhiqwang , my bad, I had an error in the code. I am making progress with your suggestion. Now, I have a:

RuntimeError: [Error thrown at core/partitioning/shape_analysis.cpp:116] Unable to process subgraph input type of at::kLong/at::kDouble, try to compile model with truncate_long_and_double enabled

despite enabling truncate_long_and_double. I will look for some documentation and update you.

Well, I am not sure what other settings could be used to convert the model. I feel like this could possibly be a bug, and in any case, the error is quite different from the one that I had at the beginning of this post. I opened a new thread here https://github.com/NVIDIA/Torch-TensorRT/issues/731#issue-1057563108 to spare some times to the new readers.

@mfoglio I wonder if any of your issues are due to the fact that you're trying to export the AutoShape() wrapper with a YOLOv5 model inside it.

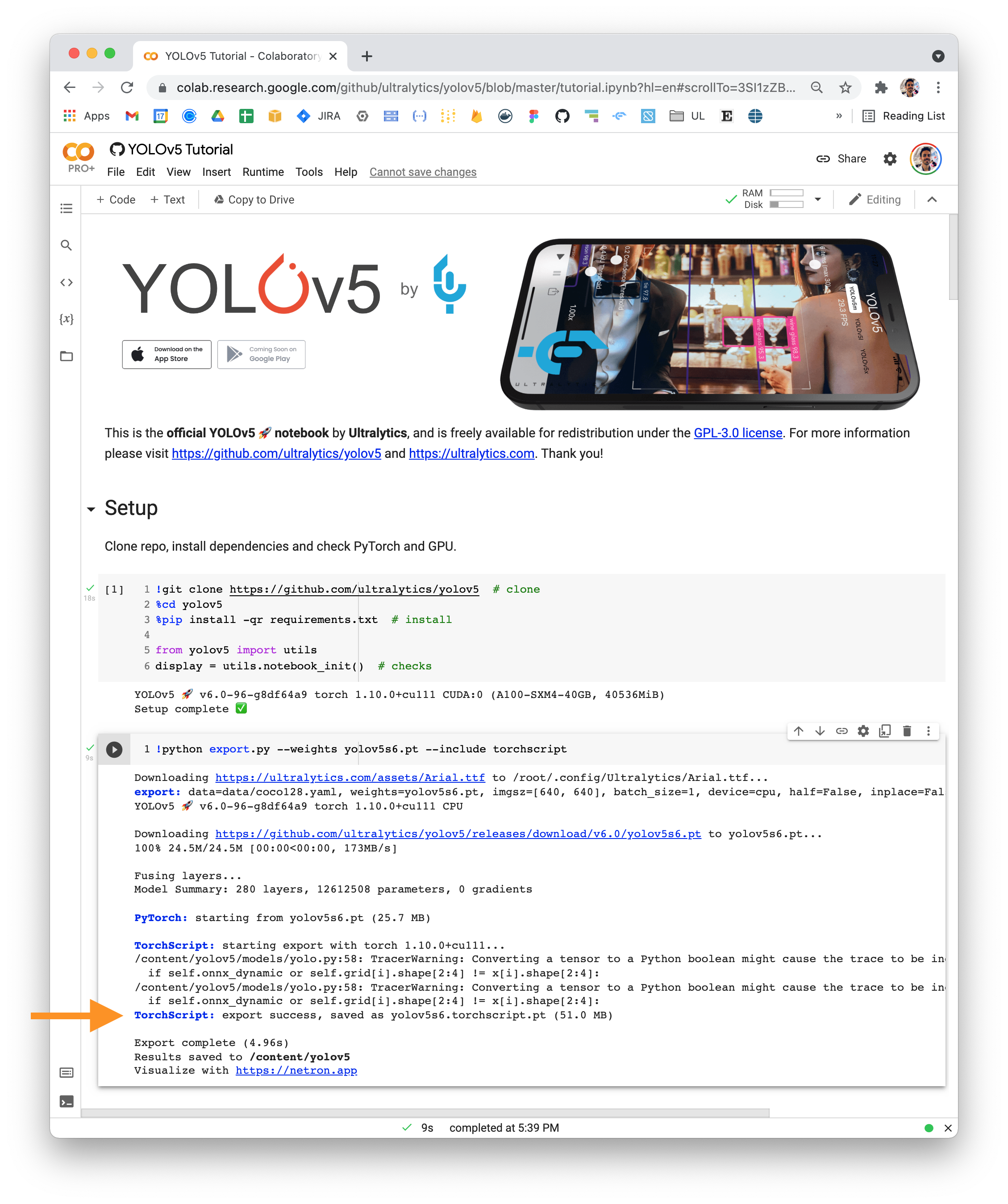

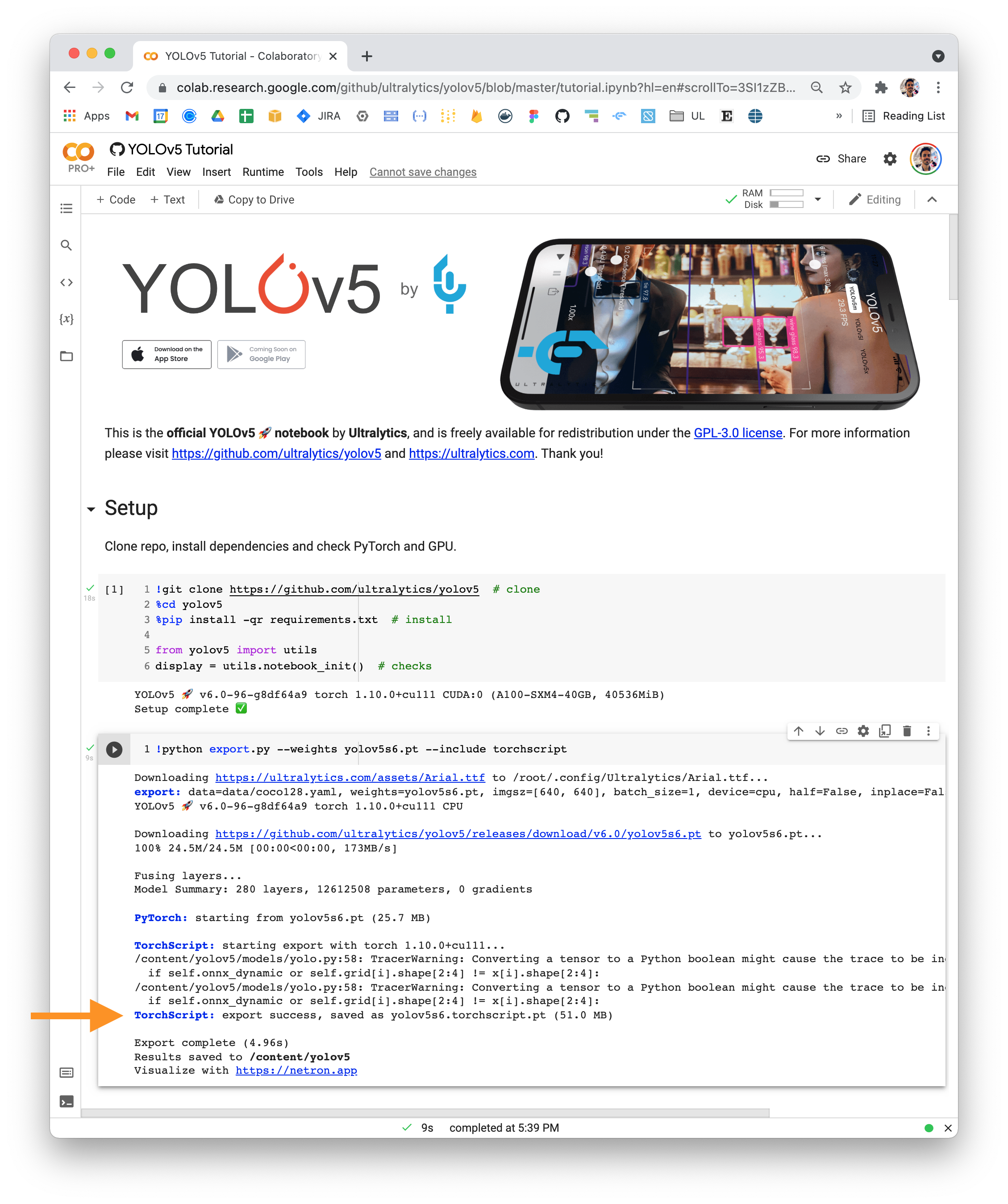

The default YOLOv5 export code works with TorchScript without issue. This is a YOLOv5 export by itself, we don't try to export AutoShape() wrapped models.

@glenn-jocher let me try

@mfoglio I wonder if any of your issues are due to the fact that you're trying to export the AutoShape() wrapper with a YOLOv5 model inside it.

The default YOLOv5 export code works with TorchScript without issue. This is a YOLOv5 export by itself, we don't try to export AutoShape() wrapped models.

I have tried to convert yolov5 from the original ts export a few days ago, but without any luck :/

If we export the model with:

python3 export.py --weights yolov5s6.pt --include torchscript

and then run:

import torch

import torch_tensorrt

model = torch.jit.load("yolov5s6.torchscript.pt")

compile_settings = {

"inputs": [torch_tensorrt.Input(

# For static size

shape=[1, 3, 640, 640], # TODO: depends on the model size

# For dynamic size

# min_shape=[1, 3, 224, 224],

# opt_shape=[1, 3, 512, 512],

# max_shape=[1, 3, 1024, 1024],

dtype=torch.half, # Datatype of input tensor. Allowed options torch.(float|half|int8|int32|bool)

)],

# "require_full_compilation": False,

"enabled_precisions": {torch.half}, # Run with FP16

}

trt_ts_module = torch_tensorrt.compile(model.model, **compile_settings)

The conversion works. Note that I am converting model.model. @glenn-jocher would that makes sense?

Also, I still need to figure out how to run inference on it.

Do I need to export the model to torch script differently if I want to run it on gpu?

If I add the the code above:

model = model.cuda()

import cv2

import numpy as np

from PIL import Image

import requests

url = "https://www.pexels.com/photo/170811/download/?search_query=car&tracking_id=jjn4hcch60g"

img = Image.open(requests.get(url, stream=True).raw)

img = np.array(img)

# img = Frame(manager_id, numpy_frame=img)

img = cv2.resize(img, (640, 640))

img = torch.from_numpy(img)

img = img.unsqueeze(0)

img = img.permute((0, 3, 1, 2))

img = img.to("cuda")

img = img.float()

print(model(img))

I get the error:

Traceback (most recent call last):

File "/home/ubuntu/pycharm/venv/lib/python3.6/site-packages/IPython/core/interactiveshell.py", line 3343, in run_code

exec(code_obj, self.user_global_ns, self.user_ns)

File "<ipython-input-12-35a548baa09d>", line 15, in <module>

print(model(img))

File "/home/ubuntu/pycharm/venv/lib/python3.6/site-packages/torch/nn/modules/module.py", line 1102, in _call_impl

return forward_call(*input, **kwargs)

RuntimeError: The following operation failed in the TorchScript interpreter.

Traceback of TorchScript, serialized code (most recent call last):

File "code/__torch__/models/yolo.py", line 94, in forward

_49 = (_29).forward(_48, )

_50 = (_31).forward((_30).forward(_49, ), _39, )

_51 = (_33).forward(_45, _47, _49, (_32).forward(_50, ), )

~~~~~~~~~~~~ <--- HERE

_52, _53, _54, _55, _56, = _51

return (_56, [_52, _53, _54, _55])

File "code/__torch__/models/yolo.py", line 128, in forward

y = torch.sigmoid(_28)

_29 = torch.mul(torch.slice(y, 4, 0, 2), CONSTANTS.c0)

_30 = torch.add(torch.sub(_29, CONSTANTS.c1), CONSTANTS.c2)

~~~~~~~~~ <--- HERE

xy = torch.mul(_30, torch.select(CONSTANTS.c3, 0, 0))

_31 = torch.mul(torch.slice(y, 4, 2, 4), CONSTANTS.c0)

Traceback of TorchScript, original code (most recent call last):

/home/ubuntu/pycharm/projects/ai-model-yolov5-new/models/yolo.py(67): forward

/home/ubuntu/pycharm/venv/lib/python3.6/site-packages/torch/nn/modules/module.py(1090): _slow_forward

/home/ubuntu/pycharm/venv/lib/python3.6/site-packages/torch/nn/modules/module.py(1102): _call_impl

/home/ubuntu/pycharm/projects/ai-model-yolov5-new/models/yolo.py(151): _forward_once

/home/ubuntu/pycharm/projects/ai-model-yolov5-new/models/yolo.py(128): forward

/home/ubuntu/pycharm/venv/lib/python3.6/site-packages/torch/nn/modules/module.py(1090): _slow_forward

/home/ubuntu/pycharm/venv/lib/python3.6/site-packages/torch/nn/modules/module.py(1102): _call_impl

/home/ubuntu/pycharm/venv/lib/python3.6/site-packages/torch/jit/_trace.py(965): trace_module

/home/ubuntu/pycharm/venv/lib/python3.6/site-packages/torch/jit/_trace.py(750): trace

export.py(69): export_torchscript

export.py(322): run

/home/ubuntu/pycharm/venv/lib/python3.6/site-packages/torch/autograd/grad_mode.py(28): decorate_context

export.py(376): main

export.py(381): <module>

RuntimeError: Expected all tensors to be on the same device, but found at least two devices, cuda:0 and cpu!

If I try to converted the torch script model in its entirety:

import torch

import torch_tensorrt

model = torch.jit.load("yolov5s6.torchscript.pt")

compile_settings = {

"inputs": [torch_tensorrt.Input(

# For static size

shape=[1, 3, 640, 640], # TODO: depends on the model size

# For dynamic size

# min_shape=[1, 3, 224, 224],

# opt_shape=[1, 3, 512, 512],

# max_shape=[1, 3, 1024, 1024],

dtype=torch.half, # Datatype of input tensor. Allowed options torch.(float|half|int8|int32|bool)

)],

"require_full_compilation": False,

"enabled_precisions": {torch.half}, # Run with FP16

"truncate_long_and_double": True

}

trt_ts_module = torch_tensorrt.compile(model, **compile_settings)

I get the error:

Traceback (most recent call last):

File "/home/ubuntu/pycharm/venv/lib/python3.6/site-packages/IPython/core/interactiveshell.py", line 3343, in run_code

exec(code_obj, self.user_global_ns, self.user_ns)

File "<ipython-input-2-09c3eeb6d6ed>", line 1, in <module>

runfile('/home/ubuntu/pycharm/projects/ai-model-yolov5-new/convert_to_tensorrt_2.py', wdir='/home/ubuntu/pycharm/projects/ai-model-yolov5-new')

File "/home/ubuntu/.pycharm_helpers/pydev/_pydev_bundle/pydev_umd.py", line 198, in runfile

pydev_imports.execfile(filename, global_vars, local_vars) # execute the script

File "/home/ubuntu/.pycharm_helpers/pydev/_pydev_imps/_pydev_execfile.py", line 18, in execfile

exec(compile(contents+"\n", file, 'exec'), glob, loc)

File "/home/ubuntu/pycharm/projects/ai-model-yolov5-new/convert_to_tensorrt_2.py", line 32, in <module>

trt_ts_module = torch_tensorrt.compile(model, **compile_settings)

File "/home/ubuntu/pycharm/venv/lib/python3.6/site-packages/torch_tensorrt/_compile.py", line 97, in compile

return torch_tensorrt.ts.compile(ts_mod, inputs=inputs, enabled_precisions=enabled_precisions, **kwargs)

File "/home/ubuntu/pycharm/venv/lib/python3.6/site-packages/torch_tensorrt/ts/_compiler.py", line 119, in compile

compiled_cpp_mod = _C.compile_graph(module._c, _parse_compile_spec(spec))

RuntimeError: upper bound and larger bound inconsistent with step sign

@mfoglio This will not solve your exact problem, but if your goal is to have a TRT version of yolo, you can try this repo: https://github.com/alxmamaev/jetson_yolov5_tensorrt . It uses onnx and trtexec to create the trt engine. Works fine

@mfoglio Did you end up succeeding eventually? I am trying to load a YOLOv5-torchscript module into libtorch-tensorrt right now and also failing.

Example error messages:

ERROR: [Torch-TensorRT TorchScript Conversion Context] - 4: [layers.cpp::validate::2385] Error Code 4: Internal Error (%3264 : Tensor = aten::mul(%3263, %3257) # /home/..../yolov5/models/yolo.py:66:0: operation PROD has incompatible input types Float and Int32)

...

ERROR: [Torch-TensorRT TorchScript Conversion Context] - 2: [elementWiseNode.cpp::computeOutputExtents::14] Error Code 2: Internal Error (Assertion x.nbDims == y.nbDims failed. )

This issue has not seen activity for 90 days, Remove stale label or comment or this will be closed in 10 days

@mfoglio Did you end up succeeding eventually? I am trying to load a YOLOv5-torchscript module into libtorch-tensorrt right now and also failing.

Example error messages:

ERROR: [Torch-TensorRT TorchScript Conversion Context] - 4: [layers.cpp::validate::2385] Error Code 4: Internal Error (%3264 : Tensor = aten::mul(%3263, %3257) # /home/..../yolov5/models/yolo.py:66:0: operation PROD has incompatible input types Float and Int32) ... ERROR: [Torch-TensorRT TorchScript Conversion Context] - 2: [elementWiseNode.cpp::computeOutputExtents::14] Error Code 2: Internal Error (Assertion x.nbDims == y.nbDims failed. )

If you insist on using TRT Backend to accelerate inference speed, I suggest you to convert this model to Tensorflow format and then convert to tensorrt. I failed to convert from torchscript to tensorrt too. Maybe there is some mismatches in floating point between torchscript and tensorrt which generates this error

This issue has not seen activity for 90 days, Remove stale label or comment or this will be closed in 10 days

This issue has not seen activity for 90 days, Remove stale label or comment or this will be closed in 10 days