Cannot authenticate with SASL_SSL and SCRAM-SHA-512 (comma in password)

Describe the bug

I am attempting to authenticate via docker-compose with the following configuration:

version: "3"
services:
  kafka-ui:
    image: provectuslabs/kafka-ui
    container_name: kafka-ui
    ports:
      - 18080:8080
    restart: always
    environment:
      KAFKA_CLUSTERS_0_NAME: test
      KAFKA_CLUSTERS_0_BOOTSTRAPSERVERS: kafka:9096
      KAFKA_CLUSTERS_0_ZOOKEEPER: zookeper:2181
      KAFKA_CLUSTERS_0_PROPERTIES_SECURITY_PROTOCOL: SASL_SSL
      KAFKA_CLUSTERS_0_PROPERTIES_SASL_MECHANISM: SCRAM-SHA-512
      KAFKA_CLUSTERS_0_PROPERTIES_SASL_JAAS_CONFIG: 'org.apache.kafka.common.security.scram.ScramLoginModule required username="ABC" password="123";'

However, I get the following errors when running the container:

kafka-ui | 2022-06-03 21:23:18,329 ERROR [kafka-admin-client-thread | adminclient-1] o.a.k.c.NetworkClient: [AdminClient clientId=adminclient-1] Connection to node -1 (redacted-url.zone:9096) failed authentication due to: Authentication failed during authentication due to invalid credentials with SASL mechanism SCRAM-SHA-512

My kafka cluster is set up to use SASL/SCRAM. The same configuration works from the kafka CLI and from various other apps hosted on the same machine, written in different languages (Go, C#, Python).

Set up

Run the above docker-compose yaml against a cluster configured for SASL_SSL and SCRAM-SHA-512 authentication.

Steps to Reproduce

Try the above.

Expected behavior

I should be able to authenticate with the specified configuration.

Additional context

I am also open to the possibility that I am missing some environment variable in my docker-compose.

Hi, don't you have any special characters in your password, like commas?

It has special characters, though no commas. I created a new user for the UI with just uppercase and lowercase letters and numbers and it authenticates just fine.

Thank you for the help.

I think this issue should be addressed, however. Does anyone else agree?

Yeah, it seems like it has to be, since this isn't the first time we've encountered such a problem. Thanks! #1928 (#911?)

@andrewm42 could you provide a kafka setup example to reproduce the issue? Docker-compose would be fine.

This issue has been automatically marked as stale because no requested feedback has been provided. It will be closed if no further activity occurs. Thank you for your contributions.

@andrewm42 @Haarolean hello 👋

Today I tested authentication with some special characters (abc1212!,asd) in a password and couldn't reproduce the issue.

KAFKA_CLUSTERS_0_PROPERTIES_SASL_JAAS_CONFIG: 'org.apache.kafka.common.security.plain.PlainLoginModule required username="enzo" password="abc1212!,asd";'

@andrewm42 Are you still facing the issue? Could you check on the latest version, please?

By and large, I couldn't reproduce the issue with different special characters in a password :( Please, let us know if you encounter such an error again.

@Haarolean You closed my issue as a duplicate of this one and asked me to provide an example docker-compose file. Here it is:

version: '2.6'
services:
  kafka-ui:
    image: provectuslabs/kafka-ui
    container_name: kafka-ui
    ports:
      - "8001:8080"
    restart: always
    environment:
      KAFKA_CLUSTERS_0_NAME: kafka1
      KAFKA_CLUSTERS_0_BOOTSTRAPSERVERS: xxxx:9092,xxyy:9092
      KAFKA_CLUSTERS_0_PROPERTIES_SECURITY_PROTOCOL: SASL_SSL
      KAFKA_CLUSTERS_0_PROPERTIES_SASL_MECHANISM: SCRAM-SHA-512
      KAFKA_CLUSTERS_0_PROPERTIES_SASL_JAAS_CONFIG: 'org.apache.kafka.common.security.scram.ScramLoginModule required username="username" password="password";'
      KAFKA_CLUSTERS_0_PROPERTIES_SSL_TRUSTSTORE_LOCATION: /ca-dv.ks
      KAFKA_CLUSTERS_0_PROPERTIES_SSL_TRUSTSTORE_PASSWORD: password
    volumes:
      - ./ssl/ca-dv.ks:/ca-dv.ks

The password has characters like "#", "*", and "$". The login has "_". The server name has "-".

@KozyrevychYaroslav thanks, but the problem is that we probably need a full example that includes kafka itself. As you can see in the comments above, we weren't able to reproduce the issue.

I have the same issue when I try to authenticate with SCRAM-SHA-512. My password doesn't have any special characters.

My config:

bootstrap.servers = [kafka-1:9096, kafka-2:9096]

client.dns.lookup = use_all_dns_ips

client.id = kafka-ui-admin-client-1677145586653

connections.max.idle.ms = 300000

default.api.timeout.ms = 60000

metadata.max.age.ms = 300000

metric.reporters = []

metrics.num.samples = 2

metrics.recording.level = INFO

metrics.sample.window.ms = 30000

receive.buffer.bytes = 65536

reconnect.backoff.max.ms = 1000

reconnect.backoff.ms = 50

request.timeout.ms = 30000

retries = 2147483647

retry.backoff.ms = 100

sasl.client.callback.handler.class = null

sasl.jaas.config = [hidden]

sasl.kerberos.kinit.cmd = /usr/bin/kinit

sasl.kerberos.min.time.before.relogin = 60000

sasl.kerberos.service.name = null

sasl.kerberos.ticket.renew.jitter = 0.05

sasl.kerberos.ticket.renew.window.factor = 0.8

sasl.login.callback.handler.class = null

sasl.login.class = null

sasl.login.connect.timeout.ms = null

sasl.login.read.timeout.ms = null

sasl.login.refresh.buffer.seconds = 300

sasl.login.refresh.min.period.seconds = 60

sasl.login.refresh.window.factor = 0.8

sasl.login.refresh.window.jitter = 0.05

sasl.login.retry.backoff.max.ms = 10000

sasl.login.retry.backoff.ms = 100

sasl.mechanism = SCARM-SHA-512

sasl.oauthbearer.clock.skew.seconds = 30

sasl.oauthbearer.expected.audience = null

sasl.oauthbearer.expected.issuer = null

sasl.oauthbearer.jwks.endpoint.refresh.ms = 3600000

sasl.oauthbearer.jwks.endpoint.retry.backoff.max.ms = 10000

sasl.oauthbearer.jwks.endpoint.retry.backoff.ms = 100

sasl.oauthbearer.jwks.endpoint.url = null

sasl.oauthbearer.scope.claim.name = scope

sasl.oauthbearer.sub.claim.name = sub

sasl.oauthbearer.token.endpoint.url = null

security.protocol = SASL_SSL

security.providers = null

send.buffer.bytes = 131072

socket.connection.setup.timeout.max.ms = 30000

socket.connection.setup.timeout.ms = 10000

ssl.cipher.suites = null

ssl.enabled.protocols = [TLSv1.2, TLSv1.3]

ssl.endpoint.identification.algorithm = https

ssl.engine.factory.class = null

ssl.key.password = null

ssl.keymanager.algorithm = SunX509

ssl.keystore.certificate.chain = null

ssl.keystore.key = null

ssl.keystore.location = null

ssl.keystore.password = null

ssl.keystore.type = JKS

ssl.protocol = TLSv1.3

ssl.provider = null

ssl.secure.random.implementation = null

ssl.trustmanager.algorithm = PKIX

ssl.truststore.certificates = null

ssl.truststore.location = null

ssl.truststore.password = null

ssl.truststore.type = JKS

JAAS config

KAFKA_CLUSTERS_0_PROPERTIES_SASL_JAAS_CONFIG='org.apache.kafka.common.security.scram.ScramLoginModule required username="username" password="password";'

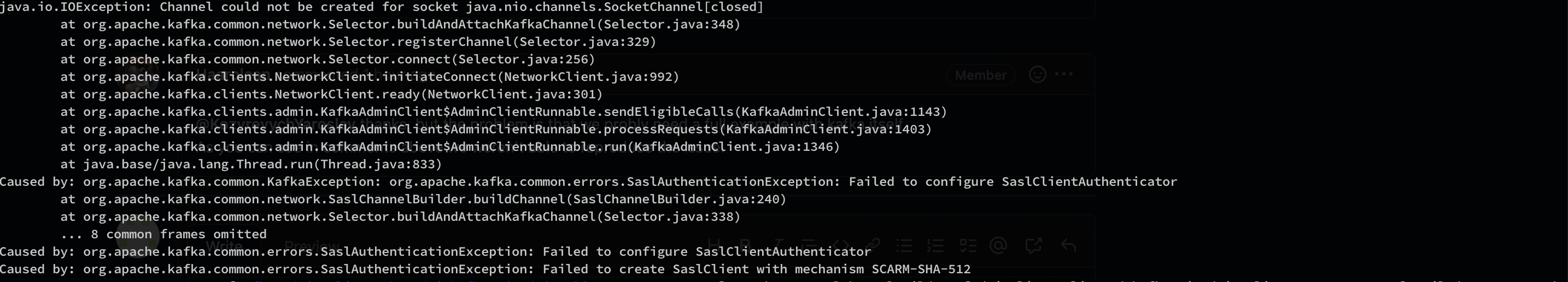

Error logs

@Haarolean

kafka-ui | bootstrap.servers = [xx-xx-xxxx-dv-01.xx.tech:9092, xx-xxx-xxx-dv-05.xxx.tech:9092]

kafka-ui | client.dns.lookup = use_all_dns_ips

kafka-ui | client.id = kafka-ui-admin-client-1677150243681

kafka-ui | connections.max.idle.ms = 300000

kafka-ui | default.api.timeout.ms = 60000

kafka-ui | metadata.max.age.ms = 300000

kafka-ui | metric.reporters = []

kafka-ui | metrics.num.samples = 2

kafka-ui | metrics.recording.level = INFO

kafka-ui | metrics.sample.window.ms = 30000

kafka-ui | receive.buffer.bytes = 65536

kafka-ui | reconnect.backoff.max.ms = 1000

kafka-ui | reconnect.backoff.ms = 50

kafka-ui | request.timeout.ms = 30000

kafka-ui | retries = 2147483647

kafka-ui | retry.backoff.ms = 100

kafka-ui | sasl.client.callback.handler.class = null

kafka-ui | sasl.jaas.config = [hidden]

kafka-ui | sasl.kerberos.kinit.cmd = /usr/bin/kinit

kafka-ui | sasl.kerberos.min.time.before.relogin = 60000

kafka-ui | sasl.kerberos.service.name = null

kafka-ui | sasl.kerberos.ticket.renew.jitter = 0.05

kafka-ui | sasl.kerberos.ticket.renew.window.factor = 0.8

kafka-ui | sasl.login.callback.handler.class = null

kafka-ui | sasl.login.class = null

kafka-ui | sasl.login.connect.timeout.ms = null

kafka-ui | sasl.login.read.timeout.ms = null

kafka-ui | sasl.login.refresh.buffer.seconds = 300

kafka-ui | sasl.login.refresh.min.period.seconds = 60

kafka-ui | sasl.login.refresh.window.factor = 0.8

kafka-ui | sasl.login.refresh.window.jitter = 0.05

kafka-ui | sasl.login.retry.backoff.max.ms = 10000

kafka-ui | sasl.login.retry.backoff.ms = 100

kafka-ui | sasl.mechanism = SCRAM-SHA-512

kafka-ui | sasl.oauthbearer.clock.skew.seconds = 30

kafka-ui | sasl.oauthbearer.expected.audience = null

kafka-ui | sasl.oauthbearer.expected.issuer = null

kafka-ui | sasl.oauthbearer.jwks.endpoint.refresh.ms = 3600000

kafka-ui | sasl.oauthbearer.jwks.endpoint.retry.backoff.max.ms = 10000

kafka-ui | sasl.oauthbearer.jwks.endpoint.retry.backoff.ms = 100

kafka-ui | sasl.oauthbearer.jwks.endpoint.url = null

kafka-ui | sasl.oauthbearer.scope.claim.name = scope

kafka-ui | sasl.oauthbearer.sub.claim.name = sub

kafka-ui | sasl.oauthbearer.token.endpoint.url = null

kafka-ui | security.protocol = SASL_SSL

kafka-ui | security.providers = null

kafka-ui | send.buffer.bytes = 131072

kafka-ui | socket.connection.setup.timeout.max.ms = 30000

kafka-ui | socket.connection.setup.timeout.ms = 10000

kafka-ui | ssl.cipher.suites = null

kafka-ui | ssl.enabled.protocols = [TLSv1.2, TLSv1.3]

kafka-ui | ssl.endpoint.identification.algorithm = https

kafka-ui | ssl.engine.factory.class = null

kafka-ui | ssl.key.password = null

kafka-ui | ssl.keymanager.algorithm = SunX509

kafka-ui | ssl.keystore.certificate.chain = null

kafka-ui | ssl.keystore.key = null

kafka-ui | ssl.keystore.location = null

kafka-ui | ssl.keystore.password = null

kafka-ui | ssl.keystore.type = JKS

kafka-ui | ssl.protocol = TLSv1.3

kafka-ui | ssl.provider = null

kafka-ui | ssl.secure.random.implementation = null

kafka-ui | ssl.trustmanager.algorithm = PKIX

kafka-ui | ssl.truststore.certificates = null

kafka-ui | ssl.truststore.location = /ca-dv.ks

kafka-ui | ssl.truststore.password = [hidden]

kafka-ui | ssl.truststore.type = JKS

@KozyrevychYaroslav thanks, but the problem is that we probably need a full example that includes kafka itself. As you can see in the comments above, we weren't able to reproduce the issue.

Have you tried to use all characters that I use in login/password/server name?

@vunamphuong17 that's unrelated, a different error message. Your sasl mechanism is "SCARM...", there's a typo.
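
A misspelled mechanism name like the one above is easy to miss in a long config dump. As a rough illustration only, the following Python sketch compares a configured value against a short list of mechanism names and suggests the closest one. The list `KNOWN_MECHANISMS` and the helper `suggest_mechanism` are assumptions made for this example; they are not part of kafka-ui or the Kafka client, and a given broker may enable a different set.

```python
import difflib
from typing import Optional

# Mechanism names commonly accepted by Kafka clients. This list is an
# assumption for the sketch; a broker may enable only a subset or others.
KNOWN_MECHANISMS = ("GSSAPI", "PLAIN", "SCRAM-SHA-256", "SCRAM-SHA-512", "OAUTHBEARER")


def suggest_mechanism(value: str) -> Optional[str]:
    """Return value if it is a known name, else the closest known name, else None."""
    if value in KNOWN_MECHANISMS:
        return value
    # get_close_matches returns the best candidates first; keep only one.
    matches = difflib.get_close_matches(value, KNOWN_MECHANISMS, n=1, cutoff=0.6)
    return matches[0] if matches else None


if __name__ == "__main__":
    configured = "SCARM-SHA-512"  # the value from the config dump above
    suggestion = suggest_mechanism(configured)
    if suggestion != configured:
        print(f"sasl.mechanism {configured!r} is not recognized; did you mean {suggestion!r}?")
```

Running a check like this against the value of `KAFKA_CLUSTERS_0_PROPERTIES_SASL_MECHANISM` before starting the container would flag the typo without needing a broker.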

@KozyrevychYaroslav yep, just tried with KAFKA_CLUSTERS_0_PROPERTIES_SASL_JAAS_CONFIG: 'org.apache.kafka.common.security.plain.PlainLoginModule required username="admin_" password="admin-secret#*$$";' within kafka-ui-sasl.yaml example, works fine.
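
Note the `$$` in the value above. Docker Compose treats `$` in a compose file as the start of variable interpolation, and `$$` is how a literal `$` is written, so the password the container actually receives here ends in a single `$`. Separately, inside the JAAS string a double quote or backslash in the password would, as far as I know, need a backslash escape. The Python sketch below shows both steps. The helper names `jaas_scram_config` and `escape_for_compose` are hypothetical, and this is only one way to prepare the value, not something kafka-ui provides.

```python
def jaas_scram_config(username: str, password: str) -> str:
    """Build a ScramLoginModule JAAS line, escaping backslashes and double quotes."""

    def quote(value: str) -> str:
        # Escape backslashes first so the escapes added for quotes are not doubled.
        return '"' + value.replace("\\", "\\\\").replace('"', '\\"') + '"'

    return (
        "org.apache.kafka.common.security.scram.ScramLoginModule required "
        f"username={quote(username)} password={quote(password)};"
    )


def escape_for_compose(value: str) -> str:
    """Double every `$` so Docker Compose does not try to interpolate it."""
    return value.replace("$", "$$")


if __name__ == "__main__":
    # Hypothetical credentials, matching the shape of the example above.
    jaas = jaas_scram_config("admin_", "admin-secret#*$")
    print(escape_for_compose(jaas))
```

The printed line is what would go between the single quotes of `KAFKA_CLUSTERS_0_PROPERTIES_SASL_JAAS_CONFIG` in the compose file. A password that itself contains a single quote would need further care, because the YAML value is single-quoted.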

@Haarolean now I get a new error when I try to view topics in the UI. Note that I have read-only access. Caused by: org.apache.kafka.common.errors.ClusterAuthorizationException: Cluster authorization failed.

@KozyrevychYaroslav https://provectus.gitbook.io/kafka-ui/configuration/configuration/required-acls Please, let's keep the discussion focused on the original issue. Feel free to join us on Discord if you need additional help.

This issue requires a minimal, reproducible example in the form of a docker-compose file with both kafka and kafka-ui for us to look into it.

Is there a docker-compose example using SASL/SCRAM-512 that integrates kafka, kafka-ui, schema registry, and ksqldb? It would be very useful.

@andreyolv https://github.com/provectus/kafka-ui/blob/master/documentation/compose/kafka-ui-sasl.yaml

@Haarolean This example uses PLAIN, not SCRAM-512

I too would love to see a working example of SCRAM-512