Alert grouping in PagerDuty is not very useful

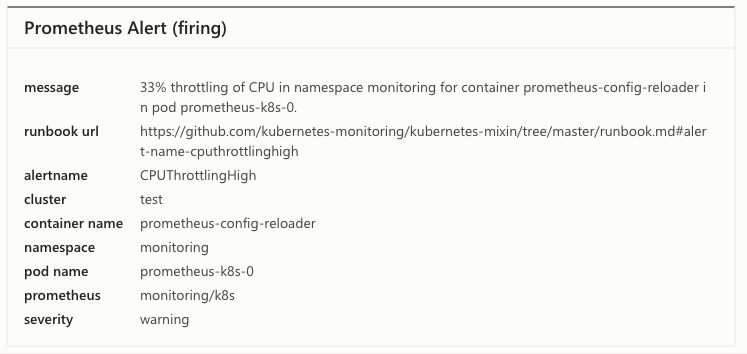

When triggering multiple alerts that get grouped together, the result in PagerDuty winds up being like this:

Only the alert summary gets updated, and subsequent messages get logged. The alert details are not updated (it still reflects the original message and summary). You have to click through the detailed log to see each individual API message sent from Alertmanager.

Ideally I'd want grouped alerts to show up individually in PagerDuty, and then be associated with the same incident, but AIUI there isn't an API for this.

The main issue here is that as further alerts are grouped together, new notifications are not triggered. This is dangerous, because it means that alerts can go unnoticed quite silently (until the operator manually polls Prometheus and/or Alertmanager). Basically, Prometheus is "editing" an existing alert instead of creating new ones. The only workaround is to disable grouping altogether, but that also gives up the grouping of simultaneous alerts, which means alert storms when several things break due to one root cause.

I'm honestly not sure what the right solution here is, but maybe something like generate new Alerts per group with a new dedup_key whenever additional alerts are grouped together, and try to do something smart about resolution events, like track when sent events have each of their root alerts resolved and send the resolution event then? With the current behavior, the only clean setup I can see is to just disable alert grouping altogether.

- Alertmanager version:

alertmanager, version 0.15.2 (branch: HEAD, revision: d19fae3bae451940b8470abb680cfdd59bfa7cfa)

Alternatively, and this I think is useful as a generic option, maybe a new grouping behavior can be introduced where alerts are only grouped inside the initial group_wait period. After notifications are sent for a group, it is effectively frozen, and new alerts matching the group key would create a new group instead. Does that make sense? I think that would work better in the context of PagerDuty, and perhaps other alert mechanisms.

And apparently Alertmanager doesn't actually support disabling grouping, which makes this even worse. I suppose I can just throw in a bunch of labels I don't expect to ever collide (like alertname/instance/yada), but this is kind of silly.

> The main issue here is that as further alerts are grouped together, new notifications are not triggered.

This is the entire concept behind Alertmanager's grouping. After the initial notification (comprising whatever alerts are received within group_wait) is sent, new notifications are only sent if:

- the group changes (e.g. a new alert is received or an old alert is resolved) within group_interval

- repeat_interval elapses

> This is dangerous, because it means that alerts can go unnoticed quite silently

group_interval can be reduced so that you're alerted more frequently about changes to the alert group, while still avoiding a pager storm. For example, setting an initial group_wait of 20s, and then group_interval of 1m. How are your intervals currently configured?
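As a rough sketch, the intervals suggested above would sit on a route like this. The receiver name, the group_by labels, the repeat_interval value, and the placeholder key are assumptions for illustration only, not anyone's actual configuration.

```yaml
route:
  receiver: pagerduty          # hypothetical receiver name
  group_by: ['alertname']      # assumed grouping labels
  group_wait: 20s              # how long to batch alerts before the first notification for a new group
  group_interval: 1m           # how long to wait before notifying about changes to an existing group
  repeat_interval: 4h          # assumed value; re-send a still-firing, unchanged group after this long

receivers:
  - name: pagerduty
    pagerduty_configs:
      - service_key: '<your-integration-key>'   # placeholder
```

Shorter intervals only change how quickly Alertmanager sends updates; what the receiving service does with those updates is a separate matter.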

What I mean is that no notifications are actually delivered even after group_interval expires. They make it to PagerDuty, but they update the existing alert only, in a very non-helpful way. Effectively, once Alertmanager sends the initial notification to PagerDuty for a given alert group, no further escalations are triggered, and no further actual notifications in PagerDuty are triggered, until that incident is manually or automatically resolved (alerts cleared) and triggered again. After the initial notification and alert, you get zero subsequent updates through the escalation path. The subsequent notifications are only visible as the alert title changing in the Alert view (but not the details, nor the incident title), and as log entries.

For example, if I set both group_wait and group_interval to 5 seconds, this happens:

- 0s: Alertmanager receives an alert

- 2s: Alertmanager receives another alert in the same group criteria

- 5s: Alertmanager sends a notification to PagerDuty comprising the two alerts (e.g. subject: [FIRING:2] ...)

- 5s: PagerDuty creates an alert, creates an incident for that alert, and notifies the on-call user

- 10s: the on-call user acks the escalation

- 60s: Alertmanager receives another alert in the same group criteria

- 60s (or 65s?): Alertmanager sends a notification to PagerDuty comprising the three alerts (e.g. subject: [FIRING:3] ...) but with the same dedup_key as before

- 60s: Because it got the same dedup_key, PagerDuty updates the existing alert:

  - The overall alert subject changes to [FIRING:3] ...

  - The details do NOT change: only the original alert subject, message, and label info is visible.

  - There is a new log entry and if you click through, that is the only place where the now 3 alert details are shown

  - The incident title is NOT updated

  - The incident does NOT re-escalate and nobody is notified again

- As alerts resolve, further notifications are sent to PagerDuty, changing the alert subject to [FIRING:2] ..., [FIRING:1] ..., etc.

- Once the last alert in the group expires, the alert resolution message is sent to PagerDuty and that closes the alert and the incident.

Basically, the new notifications are all but useless. I haven't tested repeat_interval but I expect it to be completely useless with the PagerDuty integration, as it would just result in sending the same message, which would update the subject to the same text, thus doing nothing other than adding a log entry. Its only use would be if a human closed the PagerDuty incident while the alert was still active (in which case a new alert/incident would be created).

Basically the problem is that PagerDuty has no API to say "Hey! I have new stuff for that previous alert I sent you, notify the user again!". You have to create an entirely new alert. So as far as I can tell the options are:

- (Current status): Send updates to the existing event, user does not get notified. Bad.

- Send a new PD event with all the current alerts, resolve the previous event with now-superseded info. Bad, causes incident churn.

- Send a new event with all the current alerts, leave the previous event around, send resolved messages for both when the relevant grouped alerts are all resolved. Not great, now you have two PD alerts/incidents but one is a superset of the other.

- Do not further group alerts together with the previous group after a notification; just create a new event comprising whatever new alerts fired, and keep track of it as a separate group. I think this is the best option. Users should manually merge incidents in PD to keep everything related together (which might cross the boundaries of what Alertmanager considers grouped alerts too, and that is okay).

Users might see it the other way round: “Once I have ack'd the page, I don't want to get re-paged on the same alert group. It's all the same issue anyway, and I told PD I'm working on it.”

If you want to get re-paged, you could simply Resolve a PD incident rather than merely Ack'ing it. That should result in a new page as soon as Alertmanager sends an update to the alert.

In different news: I talked to a PD engineer long ago about the problem that all those additional updates to an alert are hidden quite deeply in the alert. They told me they would think about improving that, but apparently that hasn't happened.

Right, this depends on the use case. The issue is the possibility that a problem is aggravated but the user is not aware of it because they did not receive further (grouped) alerts. Some users may not want to be notified, some might.

Personally, I'd like to have notifications reach me for every alert firing; batching is good to avoid page storms, but I still want to know about any additional alerts that fire. This is how it works with e.g. Pushover (which I use personally), which does not do any grouping itself. As an alert group grows, I get additional notifications. In that case, since it's a stateless service, I don't care about "resolution" events mapping to older alerts; I'd like to keep the current behavior where just one resolution event is sent at the end, which I basically use as the "all clear" signal. This is why I suggest this should be a core setting inside alert grouping: whether alert groups should be "frozen" after notification and a new group created, or appended to.

I think this is something to be solved on PD's side. And arguably it already is. Wouldn't it solve your problem if you just always Resolve in PD instead of Ack? That's what I tell the engineers at SoundCloud, and it seems to work quite well.

That seems kind of a hack, in that it negates any incident management you might want to do in PD (basically you wind up using it only for escalation and notification), and there's also a race condition there. I mean, it works, but wouldn't what I suggested make more sense?

Part of the problem certainly stems from an overlap of concerns between AM and PD. If there is an easy way to untangle that, sure, but it seems we would need to introduce conceptual inconsistencies in AM to cater for the subset of people that happen to use PD for incident management. Personally, I'm not sure what the best course of action is here. I leave that to the AM maintainers. :o)

To me the idea of "one-shot" alert grouping (wait, collect alerts, notify, start from scratch for any further alerts) makes sense as a general feature potentially useful for other notification mechanisms too, but maybe that's just me :-)

Personally, I think the AM configuration options are already very confusing. I often get caught in them myself. Adding yet another way of managing groups (like the one you suggest) would confuse everybody even more. Also, I do think it will lead to "group spam" with the right pattern, e.g. imagine a slow death of a 1000-instance microservice where you essentially create updates to a group of "instance down" alerts every couple of seconds over many minutes. You would create many, many alert groups not only in PD but also in AM. And a page every group_wait, i.e. a page every 30s.

Fundamentally, we are working against a missing feature in PD. It would be very easy for them to represent updates to an incident in a more visible form, and that's what I discussed with them back then. Perhaps you could file a feature request with them (we all pay them, after all, in contrast to the AM developers). Also, it would be nice if users of PD could configure whether they want to get re-notified on updates to an incident. That way, every user could decide what their preferred paging pattern is.

Just to add to this discussion: I find myself here with a similar grouping issue, but using OpsGenie.

The issue I have is exactly the same, so not ideal. I can see that group_by is good, especially when feeding alerts through Slack or email etc.

However, I think grouping should be configurable at the route level, so that I can keep grouping for Slack etc., but for OpsGenie / PD I'd want individual alerts to be fired through, not grouped together.

Grouping is configurable at the route level. Each route can define its own grouping.

https://prometheus.io/docs/alerting/configuration/
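A minimal sketch of per-route grouping, assuming a Slack default route and a child route for pages. The receiver names, label names, the severity="page" matcher, and the placeholder credentials are all hypothetical; the matchers syntax assumes a reasonably recent Alertmanager (older releases use match instead).

```yaml
route:
  receiver: slack                          # hypothetical default receiver
  group_by: ['alertname', 'cluster']       # coarse grouping for chat notifications
  routes:
    - matchers:
        - severity="page"                  # assumed label used to select paging alerts
      receiver: pagerduty
      group_by: ['alertname', 'instance']  # finer grouping for pages only

receivers:
  - name: slack
    slack_configs:
      - api_url: '<slack-webhook-url>'     # placeholder
        channel: '#alerts'                 # hypothetical channel
  - name: pagerduty
    pagerduty_configs:
      - service_key: '<your-integration-key>'   # placeholder
```

A child route inherits group_by and the timing settings from its parent unless it overrides them, so only the paging route needs to differ here.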

Ok - That is good, but I guess same problem applies - grouping cannot be disabled easily.

Someone from @PagerDuty should weigh in on this issue...

I opened a support case at @PagerDuty pointing to this issue.

Hi Sylvain, thanks for directing us to the thread ongoing in Github!

When reviewing what different customers are desiring with this integration, our Product team noted 3 common requests:

1. When triggering multiple alerts via the API and those alerts get grouped, only the alert summary gets updated, and subsequent messages get logged. The alert details are not updated (it still reflects the original message and summary). At this time, you have to click through the detailed log to see each individual API message sent from AlertManager. Ideally you would want grouped alerts to show up individually in PagerDuty, and then be associated with the same incident, but AIUI there isn't an API for this.

2. There is a feature request to implement "one-shot" alert grouping (wait, collect alerts, notify, start from scratch for any further alerts).

3. It’s tough to visually distinguish updates to an incident (i.e. whether it has alerts grouped to it, when the last alert was added to the incident, etc.).

Regarding points one and two, our Product teams responsible for Alerts and Events are currently in a planning cycle and are reviewing these two requests.

Regarding point three, this is on our radar and we plan to make an improvement to this experience soon.

If you have any additional feedback you would like to provide that wasn't shared on this thread, we would love to hear you out! You can always add context to this thread or reach out to PagerDuty Support with your particular use case for us to forward to the Product team.

Cheers!

@geethpd There's one thing I mentioned above but used the wrong acronym (AM instead of PD, I have corrected that now): It would be neat if, as a user of PagerDuty, I could configure my account such that I get notified if my incident gets updated, too (not just acknowledged, resolved, or escalated, as it is configurable right now).

Given that we have group_by: [...] now (see https://github.com/prometheus/alertmanager#example ), can we close this issue as we probably won't do anything else from the AM side? (Having a better view of the timeline and current state of an incident in PD is still desirable, but would not be tracked in an AM issue but rather on the PD side.)

I'm stumbling upon a similar problem at my organization.

> When triggering multiple alerts via the API and those alerts get grouped, only the alert summary gets updated, and subsequent messages get logged. The alert details are not updated (it still reflects the original message and summary). At this time, you have to click through the detailed log to see each individual API message sent from AlertManager. Ideally you would want grouped alerts to show up individually in PagerDuty, and then be associated with the same incident, but AIUI there isn't an API for this.

To get around this problem, I'm trying to add the current time to the PagerDuty incident's description, assuming that PagerDuty uses the incident description as the dedup_key.

    receivers:
      - name: 'Pager'
        pagerduty_configs:
          - service_key: 'xxxxxxxxxxxxx'
            description: '{{ template "pagerduty.default.description" .}}'

I'm new to Alertmanager and Golang and finding it difficult to give this a try. Any help or suggestion is highly appreciated.

Hi,

I have a similar problem with Prometheus-Alertmanager and the Microsoft Teams Receiver.

I would like to not group. Every notification looks like this now:

It would be nicer to have each alert as a single notification, with the alert message in the title instead of a generic "Prometheus Alert(firing)" or "Prometheus Alert(resolved)".

Idea: Is it possible to group by the alert message? This would solve my issue.

@shibumi it's possible to use group_by: [...] to disable grouping.
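A minimal sketch of that setting, assuming an Alertmanager release that supports the special '...' value (the 0.15.2 release quoted at the top of this thread predates it, judging by the earlier comments). The receiver name is hypothetical.

```yaml
route:
  receiver: pagerduty     # hypothetical receiver name
  # The special value '...' must be the only entry. It groups by all labels,
  # so alerts with different label sets never share a group.
  group_by: ['...']
```

With this in place each distinct alert should form its own group and be sent as its own notification, which also gives up the protection against alert storms discussed earlier in the thread.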

Side-note: please use the mailing list or IRC for such questions rather than commenting an existing issue...

@simonpasquier hi, thanks for your reply. Is ... some sort of special literal? Why is this not documented somewhere? I tried using IRC and Slack, but got no answer, so I thought I'd share my thoughts here.

It is documented in the alertmanager documentation

@roidelapluie ah I see, it's hidden in a comment: https://prometheus.io/docs/alerting/configuration/ thanks!

@geethpd It is 6 years from your comment, and I don't see any significant progress on the PagerDuty side 🧐. Any news?

Here's what I got sharing this GitHub issue and my use case with PagerDuty Support yesterday:

Thanks for your patience while I gathered feedback on this. While we understand your feedback regarding how this can seem intuitive, it is by all intents working as expected. I have shared your feedback, including the GitHub link as a feature suggestion with our team. These are reviewed regularly, and while the product team cannot provide specific responses to all feature suggestions, your case here 00755406 is linked internally with these reports, should our product team wish to gather any more information.

Do any of the major alternatives to PagerDuty have better handling of Alertmanager's notifications and grouping?

Only being able to see the original notification's details under the incident is quite useless, especially if there are new alerts added or old ones resolved.

@akvadrako, my team hasn't actually implemented it yet, but one of the things we've been looking at to work around this issue is using Keep as a middleman between Alertmanager and PagerDuty, since it seems to be able to handle updating PagerDuty incidents instead of just sending to its Events API.

- https://docs.keephq.dev/providers/documentation/pagerduty-provider

- https://docs.keephq.dev/providers/documentation/prometheus-provider#connecting-via-webhook-omnidirectional