Query Engine High Memory Usage

Hi there,

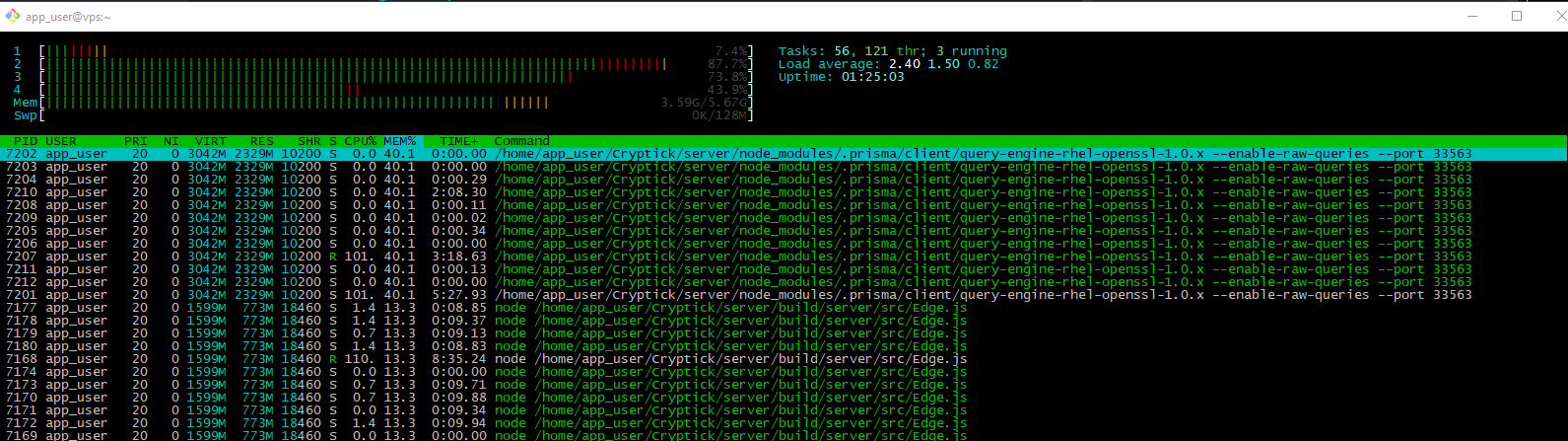

I am running into an issue where the prisma query engine is using an excessive amount of memory on CentOS 7.

The application is rather intensive. It collects data, and inserts into a table. It also combs through the same table, migrates segments of data, then deletes the from the table. At least currently, it's not possible to streamline this process.

On my production VPS, the query engine will use whatever memory is available over time. The VPS has 6 GB of RAM.

This will eventually result in very poor performance as the machine has no memory to handle even basic functionality.

I just implemented a "queue" for the queries that are hitting the table with hopes that slowing down query execution would resolve the matter, however it had no effect.

I am lost here on how to solve this. Is there a way to limit the memory usage of the query engine?

The select, delete and insert statements will all return / delete / create thousands of records for each query. This is by design, and I really hope to continue to use this quantity.

Let me know if I can help by supplying any additional information.

Thanks!

Can you share a bit more details:

- Prisma version

- The connection string (all password/user/hostname info hidden)

- Maybe even your data model, if possible!

No, this doesn't look right to me.

Hi @pimeys, thanks for the quick response!

Prisma: 2.26.0 Node: 14.17.2 Connection String: mysql://username:password@host:3306/database

where "username", "password", "host" and "database" are hidden.

All queries relate to this table: https://pastebin.com/RZ58H67T. Let me know if I can provide any more detail.

Thanks!

Could you try setting a smaller statement_cache_size and see if it helps reducing the memory usage? Something like statement_cache_size=50 and let it run with that.

Hey @pimeys, I guess I found what worked for me was enabling the node api that was released as a preview feature as part of the 2.26 release. The app has been running today without issue so far. I'll leave it up to you if this should be closed or not.

Oh nice. We will for sure use the HTTP API in the future, so an investigation is still needed...

@pimeys I wanted to circle back as I ran into the issue again on 2.29.1 and set statement_cache_size to a small value which solved my problem. Can you go into some detail about how statements are being cached? Also, is there documentation on the various flags that can be passed in that connection string?

Thanks again for your help. I don't believe further investigation is necessary as I have run several versions of prisma with and without nApi enabled, all having the same results.

Documentation of this feature might be good though...

So. Trying not to go too far into details, here is how it works:

In certain databases, the statement caching should be handled in the client (SQL Server being different here, server handles the caching there). What that means is, normally when querying the procedure goes like:

PREPARE SELECT $1, $2;

This is one roundtrip. The server parses the statement for valid SQL and stores a statement with two parameters, giving it a name s1.

Now when we send parameters 1 and 2 to the given statement, we get the actual query results back. The optimization here is to store the statement in the client, so we can reuse it between requests that utilize the same query, but with different (or same) parameters.

This caching is handled by a least-recently used cache (LRU). It's a bit like a hashmap, where the key is the query string and the value is a statement object we can use and make subsequent queries to perform much faster. A LRU is per connection, meaning if you have a connection limit higher than 1, every connection needs to cache the same queries. LRU will kick out the least recently used queries when the cache gets full, but here comes the part where we did wrong in Prisma.

Originally the cache value was chosen to be quite large so we can spend some of the user's memory for faster queries. We just didn't consider how much it will eventually be. Then changing that would be a breaking change, and we don't like to do breaking changes that often if we don't have to. Now when moving to proper semver, there might be a case for that.

Maybe we should, what do you think @janpio?

Thanks for the information @pimeys.