mpich


hydra: GPU visibility control revamp

Pull Request Description

- Add an MPL interface for querying the GPU device list and the subdevice list. MPL returns an array of integers, each of which represents an individual GPU device or subdevice.

- Implement this interface for ZE, CUDA, and HIP.

- Add the hydra option `-gpu-subdevs-per-proc`, which allows GPU visibility to be controlled at the subdevice level.

- Update hydra's round-robin GPU assignment algorithm for visibility control.
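As a rough illustration of round-robin assignment over a flat list of device or subdevice ids, here is a minimal Python sketch. It is not hydra's code: the function name `assign_round_robin` and its parameters are hypothetical, and it assumes the units are simply numbered `0..num_units-1` and handed out in rank order, wrapping around when they run out.

```python
def assign_round_robin(num_procs, num_units, units_per_proc):
    """Map each local process rank to the unit ids it is allowed to see.

    A "unit" is a whole GPU or a subdevice, depending on which list the
    launcher queried. Ids wrap around when the processes need more units
    than the node provides. (Hypothetical helper, not a hydra function.)
    """
    if num_procs < 0:
        raise ValueError("num_procs must be non-negative")
    if num_units <= 0 or units_per_proc <= 0:
        # Nothing to hand out: every process sees no GPU.
        return [[] for _ in range(num_procs)]

    # A process cannot usefully see the same unit twice.
    per_proc = min(units_per_proc, num_units)

    assignment = []
    next_unit = 0
    for _ in range(num_procs):
        visible = [(next_unit + i) % num_units for i in range(per_proc)]
        next_unit += per_proc
        assignment.append(visible)
    return assignment


if __name__ == "__main__":
    # 4 processes, 2 GPUs with 2 subdevices each (4 units), 1 unit per process.
    for rank, units in enumerate(assign_round_robin(4, 4, 1)):
        print(rank, units)
```

The same function covers both granularities: pass the number of devices for device-level visibility, or the number of subdevices for subdevice-level visibility.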

Author Checklist

- [ ] Provide Description Particularly focus on why, not what. Reference background, issues, test failures, xfail entries, etc.

- [ ] Commits Follow Good Practice Commits are self-contained and do not do two things at once. Commit message is of the form: `module: short description`. Commit message explains what's in the commit.

- [ ] Passes All Tests Whitespace checker. Warnings test. Additional tests via comments.

- [ ] Contribution Agreement For non-Argonne authors, check contribution agreement. If necessary, request an explicit comment from your company's PR approval manager.

(from Yanfei)

Overview

- CPU Affinity

- Process bind to CPU core(s)

`mpiexec -bind-to core:4` // bind each proc to 4 cores, round-robin

- Different binding policies

`mpiexec -bind-to gpu` // bind each proc to the cores closest to a GPU

- GPU locality focused policy

`mpiexec -bind-to gpu:2`


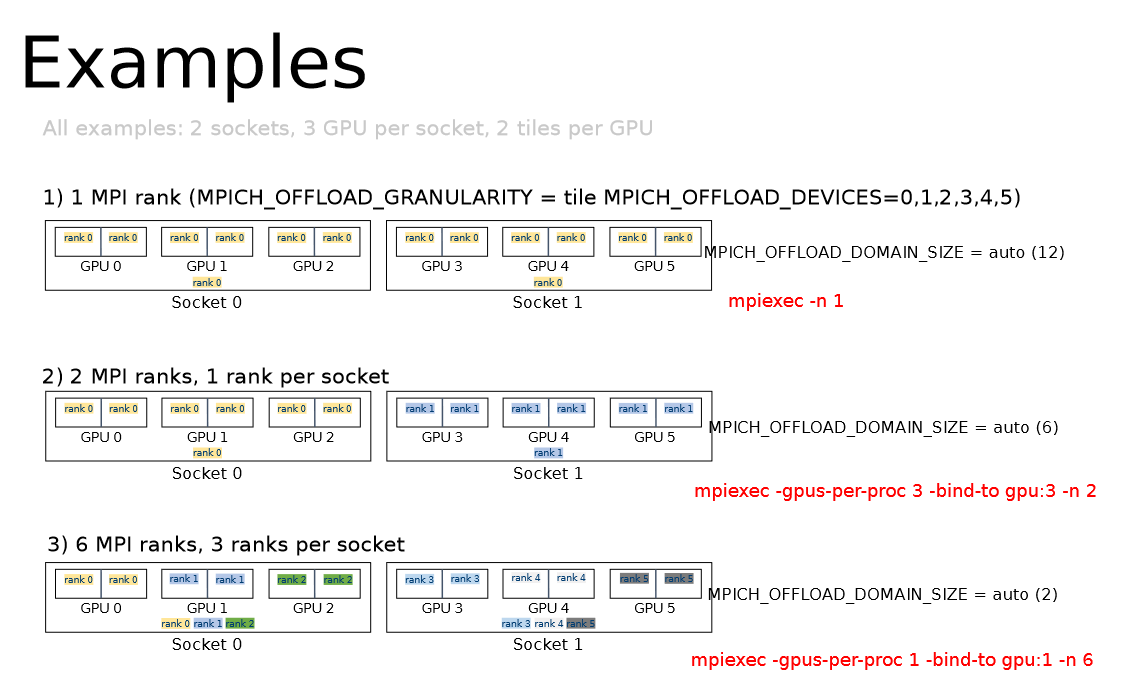

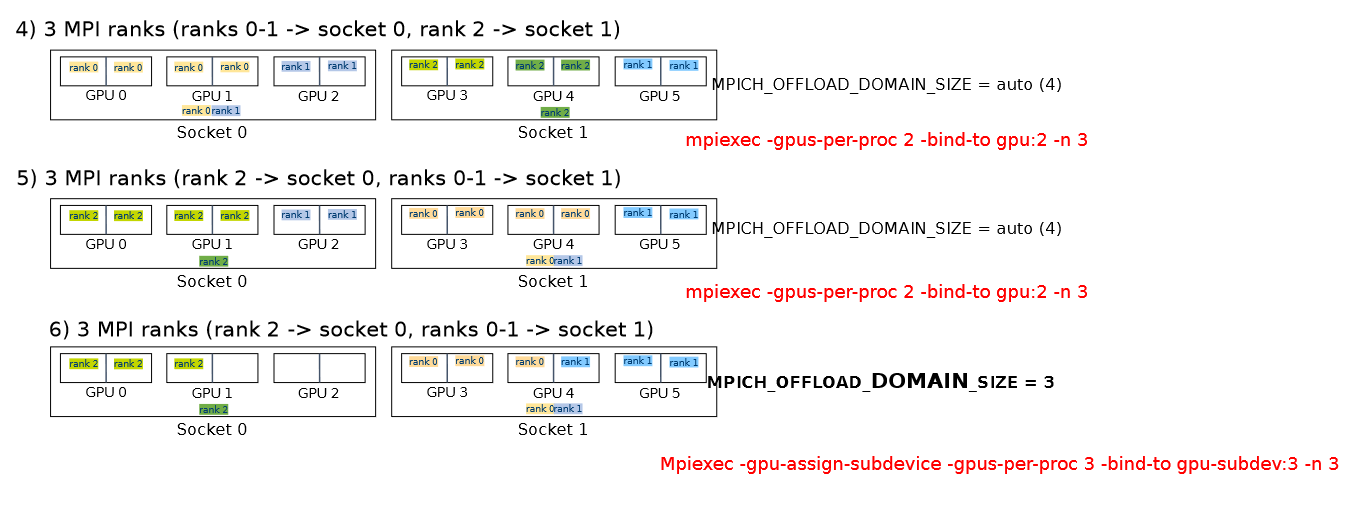

- GPU Visibility

- Which GPUs are visible to which processes

- Does not imply CPU affinity by default

`mpiexec -gpus-per-proc 2` // each proc sees 2 GPUs, round-robin

- GPU sub-device extension

`mpiexec -gpus-per-proc 1 -gpu-assign-subdevice` // 1 tile visible to each proc

`mpiexec -bind-to gpu-subdev{<id>|:n}`

(from Yanfei)
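To make "which GPUs are visible to which processes" concrete, the sketch below turns a per-process list of devices or subdevices into the environment variable that a GPU runtime reads at startup. The variable names `CUDA_VISIBLE_DEVICES`, `HIP_VISIBLE_DEVICES`, and `ZE_AFFINITY_MASK` are real runtime settings, but whether hydra sets exactly these, and in this form, is an assumption here. The helper `visibility_env` and the `(device, subdevice)` tuple layout are hypothetical.

```python
# Environment variable read by each GPU runtime. The names are real;
# that the launcher uses exactly these is an assumption of this sketch.
ENV_NAMES = {
    "cuda": "CUDA_VISIBLE_DEVICES",
    "hip": "HIP_VISIBLE_DEVICES",
    "ze": "ZE_AFFINITY_MASK",
}


def visibility_env(backend, units):
    """Return (name, value) for a list of (device, subdevice) pairs.

    subdevice is None when the whole device should be visible.
    (Hypothetical helper, not part of MPICH.)
    """
    if backend not in ENV_NAMES:
        raise ValueError(f"unknown backend: {backend}")
    parts = []
    for device, subdevice in units:
        if subdevice is None:
            parts.append(str(device))
        elif backend == "ze":
            # Level Zero masks accept the "device.subdevice" form.
            parts.append(f"{device}.{subdevice}")
        else:
            raise ValueError(f"{backend}: no subdevice form in this sketch")
    return ENV_NAMES[backend], ",".join(parts)


if __name__ == "__main__":
    # One tile (device 0, subdevice 1) visible to a process on a ZE system.
    print(visibility_env("ze", [(0, 1)]))
    # Two whole GPUs visible to a process on a CUDA system.
    print(visibility_env("cuda", [(0, None), (1, None)]))
```

Because visibility is expressed only through the environment, it stays independent of CPU binding, which matches the point above that GPU visibility does not imply CPU affinity by default.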

@yfguo Do all the examples work with this PR?

test:mpich/ch4/ofi

test:mpich/ch4/gpu

test:mpich/ch4/gpu

test:mpich/ch4/gpu

test:mpich/ch4/most

GPU failures seem unrelated.