sklearn-evaluation

sklearn-evaluation copied to clipboard

sklearn-evaluation copied to clipboard

SKLearnEvaluationLogger added

Describe your changes

SKLearnEvaluationLogger decorator wraps telemetry log_api functionality and allows to generate logs for sklearn-evaluation as follows:

@SKLearnEvaluationLogger.log(feature='plot')

def confusion_matrix(

y_true,

y_pred,

target_names=None,

normalize=False,

cmap=None,

ax=None,

**kwargs):

pass

this will generate the following log:

{

"metadata": {

"action": "confusion_matrix"

"feature": "plot",

"args": {

"target_names": "None",

"normalize": "False",

"cmap": "None",

"ax": "None"

}

}

}

** since y_true and y_pred are positional arguments without default values it won't log them

we can also use pre-defined flags when calling a function

return plot.confusion_matrix(self.y_true, self.y_pred, self.target_names, ax=_gen_ax(), is_report=True )

which will generate the following log:

"metadata": {

"action": "confusion_matrix"

"feature": "plot",

"args": {

"target_names": "['setosa', 'versicolor', 'virginica']",

"normalize": "False",

"cmap": "None",

"ax": "AxesSubplot(0.125,0.11;0.775x0.77)"

},

"flags": {

"is_report": true

}

},

Queries

Run queries and filter out sklearn-evaluation events by the event name: sklearn-evaluation

Break these events by feature ('plot', 'report', 'SQLiteTracker', 'NotebookCollection')

Break events by actions (i.e: 'confusion_matrix', 'roc', etc...) and/or flags ('is_report')

Checklist before requesting a review

- [X] I have performed a self-review of my code

- [X] I have added thorough tests (when necessary).

- [] I have added the right documentation (when needed). Product update? If yes, write one line about this update.

Pull Request Test Coverage Report for Build 3330294919

- 90 of 90 (100.0%) changed or added relevant lines in 12 files are covered.

- No unchanged relevant lines lost coverage.

- Overall coverage increased (+0.5%) to 90.816%

| Totals | |

|---|---|

| Change from base Build 3064963625: | 0.5% |

| Covered Lines: | 1503 |

| Relevant Lines: | 1655 |

💛 - Coveralls

are you logging the actual values?

things like y_pred and y_true should not be logged for a few reasons. They might contain sensitive data, but because they might be large arrays, logging them will take a lot of time, affecting the user experience.

I think it'd be best only to log function parameters that have default values since those are configuration/flags and give us enough helpful information. although we'd have to check if any of them might log sensitive information.

also, I see that for classes, you're adding a decorator to each method, I think it'd be better to create a Mixin that grabs calls to the methods so we don't have to decorate each one.

something like this:

class LoggerMixin:

def __getattr__(self,name):

# modify the method here so it returns something that has the logger

I think this could be even more efficient:

class LoggerMixin:

def __init__(self, ....):

# iterate over the methods and modify them so they are logged when called

for method in ...:

...

and then to use it:

class SomeClass(SomeSubclass, LoggerMixin):

...

the other thing to keep in mind is that we have not tested how the logging API behaves when internet is bad or there is no connection. since we'll start logging a lot of things, it's worth running some experiences, to ensure minimal disturbance to users.

@yafimvo I see some tests are failing

I noticed test_reprs was failing due to the following behavior:

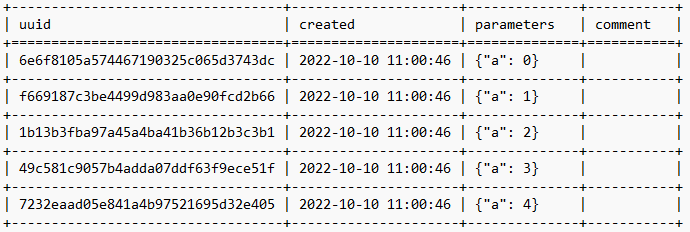

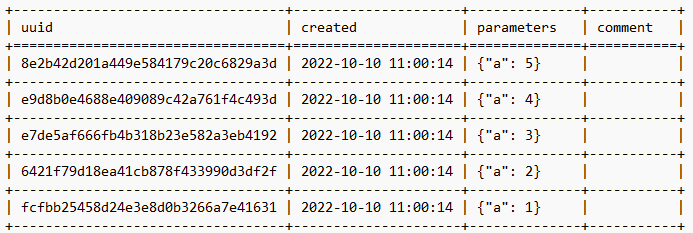

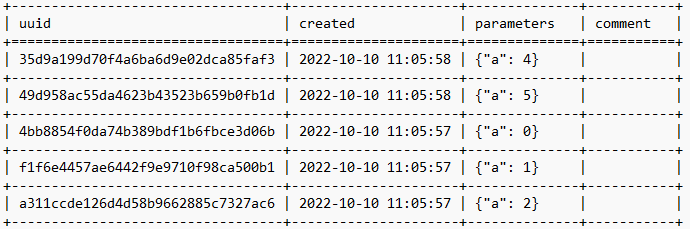

We generate 6 uuids, insert each one in a loop, print the table, and expect the last insert won't appear in this table.

Since the inserted value's timestamp doesn't have milliseconds the DESC query returns an ordered result which isn't true

i.e:

Inserted value is {"a" : i}

Inseted value is {"a": 5 - i}

Once I ran telemetry.log_api from insert method, it created a different timestamp for each value and DESC returned unexpected results

So, I modified test_reprs by adding a 3 seconds delay after the 1st insert so we can expect this item won't appear in our results table.