pino

pino copied to clipboard

pino copied to clipboard

Logs buffering/batching output to stdout despite sync: true

I'm seeing logs batch-written into the syslog and trying to figure out what's going on.

Runtime is a fairly stock Ubuntu image on EC2, nothing fancy.

The app is very low-log volume, producing only about 6-10 log messages per minute. It's running as a standard service in systemctl, and logs to stdout and therefore to syslog.

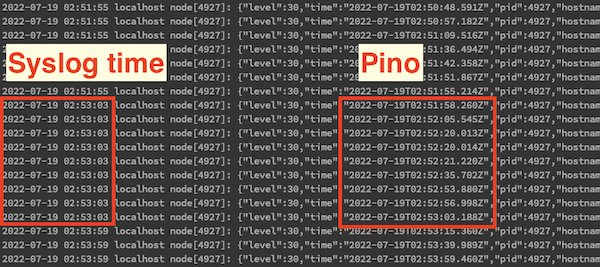

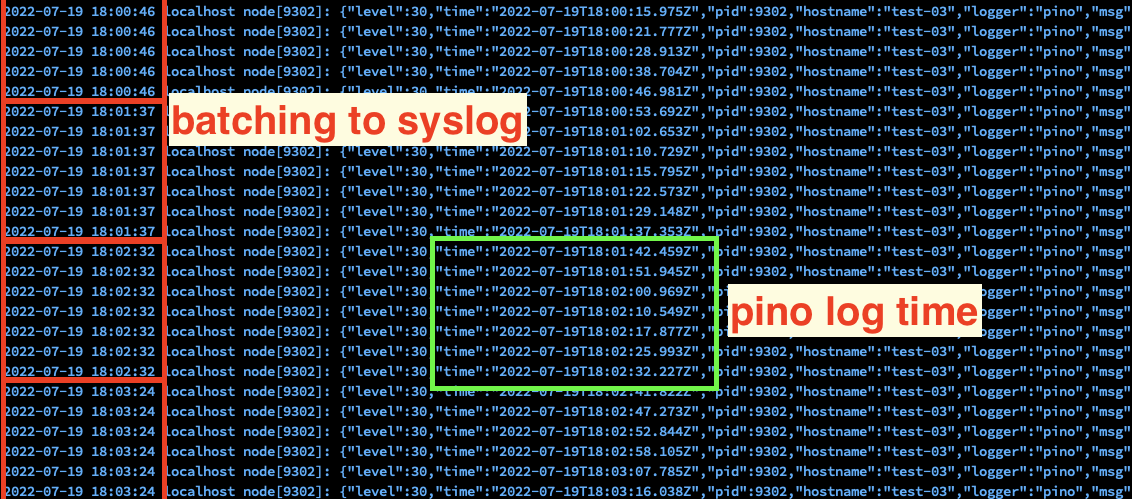

The behavior I'm seeing is that log messages seem to buffer for some number of minutes, (sometimes as many as 3 or more minutes) and then a batch of logs will arrive to /var/log/syslog all at once. The syslog timestamps of these will all match the time of the batch arrival, but the pino timestamps show the messages were originally logged fairly evenly throughout the buffering period.

Here is my pino config, where I've tried explicitly setting synchronous mode:

const pino = require('pino') // v 8.1.0

const logger = pino({

timestamp: pino.stdTimeFunctions.isoTime,

}, pino.destination({ sync: true }))

I've also tried this, with no difference in behavior.

const logger = pino({

timestamp: pino.stdTimeFunctions.isoTime,

})

The problem seems to get worse the longer the app runs, as at first startup there is no buffering at all.

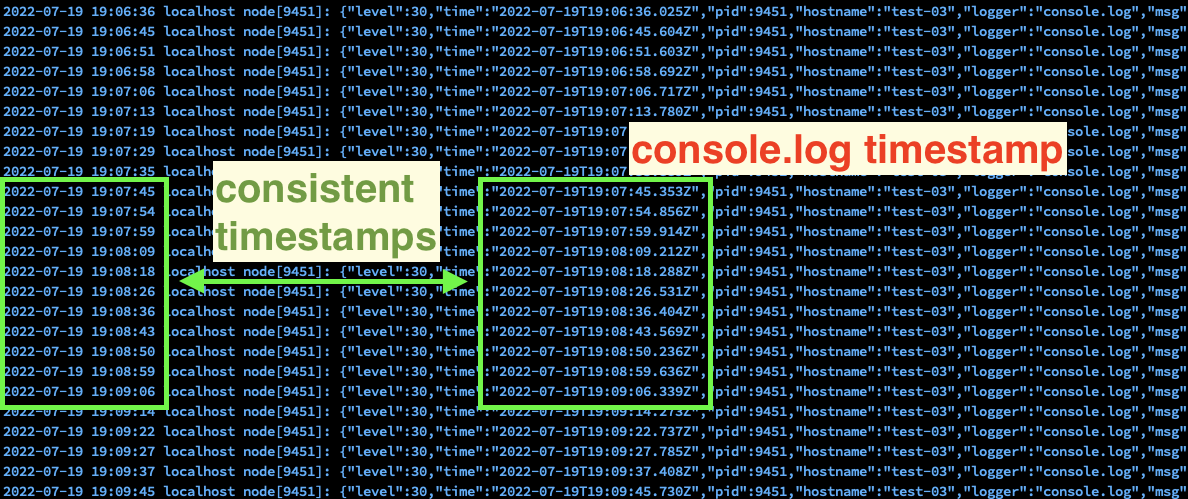

I do not see the buffering behavior when using console.log on the same machine.

Any help appreciated!

UPDATE As noted in https://github.com/pinojs/pino/issues/1497#issuecomment-1195037176, this was due to use of an emoji in the log statement, which resulted in the byte length written being incorrectly calculated, a known issue in sonic-boom and referenced below.

Would you mind trying passing process.stdout instead? I don't expect much difference, but let me know.

My running theory is that systemd is playing havoc. Systemd journal has a rate limit mechanism for all log lines, so it's possible another service is logging a lot and it's limiting you. I would try sending the data straight to syslog instead.

You mean like this? Will give it a try

const logger = pino({

name: 'bot-sales',

timestamp: pino.stdTimeFunctions.isoTime,

}, pino.destination({ dest: process.stddout, sync: true }))

My running theory is that systemd is playing havoc.

Wouldn't I see the same behavior with console.log?

I would try sending the data straight to syslog instead.

Do you mean by configuring pino to write directly to syslog as a file, or do you mean something different?

Thanks!

No, I meant something like this:

const logger = pino({

name: 'bot-sales',

timestamp: pino.stdTimeFunctions.isoTime,

}, process.stddout)

I was able to reproduce using: https://github.com/codersmith/pino-log-test

This allows switching out the logger between pino and console.log using an ENV variable.

Here is the result towards the end of running each for 1 hour:

Pino:

console.log:

And full log outputs attached: syslog-pino.txt syslog-console.txt

Also, this service represents 80% of the log volume going into syslog as there is nothing else really running on the host, and as you can see it is overall a very low-log volume app, producing just 6-10 messages / minute.

Try if this helps at all in your current setup: https://github.com/pinojs/pino/pull/1499.

(I don't have a throwaway machine at my disposal, I'd need to set it up for this case)

If this is what you mean, then I did use that for these tests based on your previous suggestion: https://github.com/codersmith/pino-log-test/blob/main/logger-pino.js#L5

const _l = pino({

timestamp: pino.stdTimeFunctions.isoTime,

}, process.stdout)

No, I recommended you to test a patch in pino to verify a theory as I won't have time soon to set up a custom linux system for this test.

Got it, running some tests with the patch now.

Good news, can confirm the patch addresses the issue I am having.

Now as long as I provide the process.stdout stream in the pino config, then it is used and the logging to syslog doesn't lag behind.

const _l = pino({

timestamp: pino.stdTimeFunctions.isoTime,

}, process.stdout)

On the other hand, if I run with the patch without specifying process.stdout, (and so the stream is instantiated asbuildSafeSonicBoom({ fd: process.stdout.fd }), then I'm able to easily reproduce the problem, and it continues to get worse the longer it runs.

Good thing: #1499 will provide a quick-and-dirty solution for you. I wonder a bit why this works at all.

https://fhackts.wordpress.com/2014/11/27/systemd-journal-not-showing-python-3-print/ shows a similar problem. In pino.destination(), we are not calling https://nodejs.org/api/fs.html#fsfsyncsyncfd after writing.

The idea behaind https://github.com/pinojs/sonic-boom/pull/153 is that somebody that is interested in avoiding any delay they can force an fsync() after every write.

The following should now work and fix this problem:

const logger = pino({

name: 'bot-sales',

timestamp: pino.stdTimeFunctions.isoTime,

}, pino.destination({ dest: process.stddout, sync: true, fsync: true }))

Thanks for the quick fix!! Will upgrade to 8.3.0 as soon as I can and verify.

@mcollina, in testing 8.3.0 to verify this fix, I am now getting an error on my Linux host: "Error: EINVAL: invalid argument, fsync" (also verified with 8.3.1, since I saw that had a quasi-related stdout fix, but get same error as below)

node:fs:1285

handleErrorFromBinding(ctx);

^

Error: EINVAL: invalid argument, fsync

at Object.fsyncSync (node:fs:1285:3)

at SonicBoom.release (/home/ubuntu/test/pino-log-test/node_modules/sonic-boom/index.js:179:10)

at actualWrite (/home/ubuntu/test/pino-log-test/node_modules/sonic-boom/index.js:385:7)

at SonicBoom.write (/home/ubuntu/test/pino-log-test/node_modules/sonic-boom/index.js:250:5)

at Pino.write (/home/ubuntu/test/pino-log-test/node_modules/pino/lib/proto.js:208:10)

at Pino.LOG [as info] (/home/ubuntu/test/pino-log-test/node_modules/pino/lib/tools.js:56:21)

at onEvent (/home/ubuntu/test/pino-log-test/index.js:11:10)

at /home/ubuntu/test/pino-log-test/index.js:16:11

at Object.<anonymous> (/home/ubuntu/test/pino-log-test/index.js:20:3)

at Module._compile (node:internal/modules/cjs/loader:1105:14)

Emitted 'error' event on SonicBoom instance at:

at SonicBoom.filterBrokenPipe (/home/ubuntu/test/pino-log-test/node_modules/pino/lib/tools.js:240:12)

at SonicBoom.emit (node:events:527:28)

at SonicBoom.release (/home/ubuntu/test/pino-log-test/node_modules/sonic-boom/index.js:150:14)

at actualWrite (/home/ubuntu/test/pino-log-test/node_modules/sonic-boom/index.js:387:7)

at SonicBoom.write (/home/ubuntu/test/pino-log-test/node_modules/sonic-boom/index.js:250:5)

[... lines matching original stack trace ...]

at Object.<anonymous> (/home/ubuntu/test/pino-log-test/index.js:20:3) {

errno: -22,

syscall: 'fsync',

code: 'EINVAL'

}

I upgraded the test project (https://github.com/codersmith/pino-log-test) with pino 8.3.0 and with this config:

const _l = pino({

timestamp: pino.stdTimeFunctions.isoTime,

}, pino.destination({ sync: true, fsync: true }))

Note that on my Mac, this works fine, but on my Linux host below I get the fsync error.

Runtime/Host info:

- AWS EC2 micro instance with standard gp2 SSD

- Operating system:

Ubuntu 22.04 LTS (GNU/Linux 5.15.0-1015-aws x86_64) - Node.js version:

v16.15.1(also verified the same error happens inv18.6.0)

Let me know if you'd rather I open a new issue. Thanks!

I'm reopening and tagging as "help wanted". Unfortunately, I've exhausted my time in chasing systemd quirks. A PR that solved this would be awesome.

The fix would be:

- identify what kind of socket Systemd is using for stdout and why it's special

- understand why Node.js

process.stdoutis flushing and sonic-boom is not even if we are passing through mostly the same code paths - either fix sonic-boom or throw as unsupported for that kind of socket

I don't think this is a systemd thing, as it can be reproduced outside of systemd just by directly running the app and observing the output.

I've also reproduced on my Mac now using the steps below.

But the low-level system i/o involved here is beyond my reach to dig in further.

Updated steps to reproduce:

-

Get the

pino-log-testtest app: https://github.com/codersmith/pino-log-test. This app writes a log message about once every 5-10 seconds, and allows you to compare againstconsole.logby changing an environment variable. -

In one terminal, run the project with pino logger:

$ USE_LOGGER=pino node index.js -

In another terminal, run the project with console logger:

$ USE_LOGGER=console node index.js -

Wait. After about 20-30 minutes, you'll start to see the log lines in the

pinoterminal batching in groups of two or three. You may need to press 'Enter' a few times to manually add space in the log output to be able to see the log batches appear at once. -

As time goes on (1 hour, or more), the batches will get larger, 5 lines at a time, 10 lines, etc.

-

Compare to the output behavior of the console terminal -- even after 2 hours, there is no batching of output when logging with console.

In a 30 minutes test I just did I experienced the behavior on both. I think it's just how OS works.

You mean with the console logger you experienced it? There is a very obvious difference I see.

I have never observed this type of behavior with any OS stdout.

Any caching/cache flush behavior for I/O should be based on time and amount cached.

This test app is intentionally logging very slowly to highlight that there is something else going on here.

The OS should never hold onto cached stdout output for up to several minutes. At most, I could imagine the OS having a flush timeout of a few seconds, where a flush occurs after a certain amount of output size has been reached, or the cache timer has triggered.

I captured a video of my terminal output. The left-hand terminal is console.log, the right is pino, and these had been running for nearly an hour when I took the video capture.

https://user-images.githubusercontent.com/4931554/180872511-75245069-ee1c-42c8-9248-3ff110a09c20.mov

Hi @mcollina, I spent some more time in the debugger tonight and found an interesting bit that I think explains the behavior I'm seeing, but I'm not familiar enough with the underlying sonic-boom internals to be able to speculate as to what the fix might be.

In SonicBoom, write(data):

// line 249

if (!this._writing && this._len >= this.minLength) {

actualWrite(this)

}

After writing a batch of data, this._len ends up being negative, and the statement evaluates to false the next time through, where the log line is buffered. The next time a message is logged, its length is added to this._len, and this continues until eventually this._len is large enough to be greater than 0, and the buffered log messages are actually written.

What I noticed is that as time goes on, this._len ends up becoming more and more negative, so that it takes longer (more messages) to get it back above zero (this matches the behavior I observed, where the buffering becomes larger over time).

Just to confirm I am seeing this behavior with:

const _l = pino({

timestamp: pino.stdTimeFunctions.isoTime,

}, pino.destination({ sync: true }))

From a client perspective, the behavior I would expect based on setting of the sync: true flag is that actualWrite would be called for every log message, and not buffered at all.

Then it's a duplicate of: https://github.com/pinojs/sonic-boom/issues/139. Very likely due to the emoji.

Checking!

Confirmed! Damn emoji.

Here is a PR with a partial fix: https://github.com/pinojs/sonic-boom/pull/154

Ah sorry, yeah I'll give it a try.

On Jul 19, 2022, at 11:25 PM, Matteo Collina @.***> wrote:

No, I recommended you to test a patch in pino to verify a theory as I won't have time soon to set up a custom linux system for this test.

— Reply to this email directly, view it on GitHub, or unsubscribe. You are receiving this because you authored the thread.