tidb-dashboard

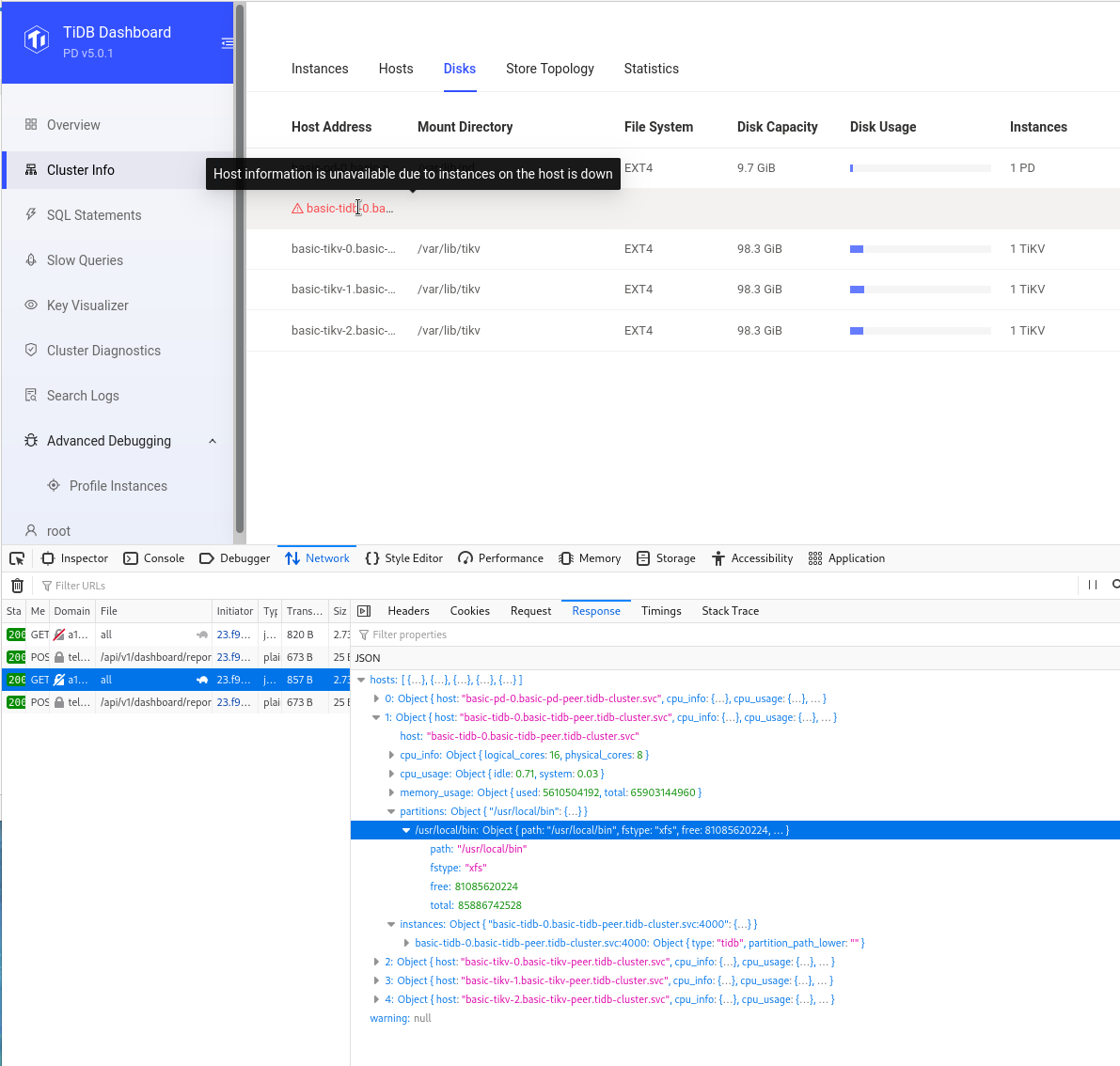

cluster-info: disks showing host down message for tidb

Bug Report

What did you do?

Deploy TiDB with the TiDB Operator on AWS EKS. Then use the dashboard.

What did you expect to see?

Disk info for all node types

What did you see instead?

"Host information is unavailable due to instances on the host is down"

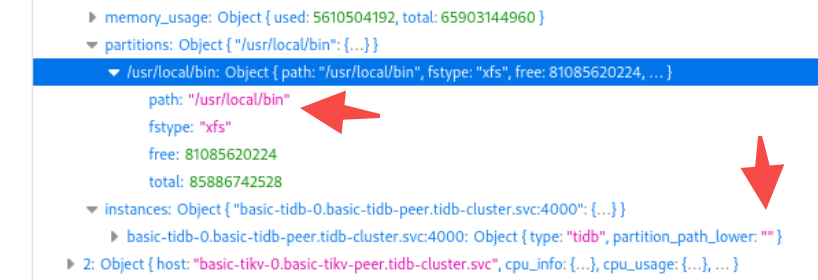

In the debugger it does show partitions. So the host is not down.

What version of TiDB Dashboard are you using (./tidb-dashboard --version)?

The top left corner shows "PD v5.0.1"

@baurine Would you like to take a look?

Sorry for only just seeing this issue; let me have a look.

Hi @dveeden, sorry for the late reply. I have recently been handling a similar issue.

In this case, according to the console information and the code logic, the message appears because no partitions.path matches any instances.partition_path_lower. The code expects at least one pair whose values are the same.
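To illustrate the matching described above, here is a minimal sketch in Python. It is not the dashboard's actual code: the field names `path` and `partition_path_lower` come from the comment, while the function name, the dictionary layout, and the sample values are assumptions made for illustration.

```python
from typing import Dict, List, Optional


def find_matched_partition(
    partitions: Dict[str, dict], instances: List[dict]
) -> Optional[dict]:
    """Return the first partition whose path equals an instance's
    partition_path_lower, or None when no pair matches.

    Hypothetical layout: `partitions` is keyed by (lowercased) path.
    """
    for inst in instances:
        key = inst.get("partition_path_lower", "")
        if key and key in partitions:
            return partitions[key]
    return None


# Hypothetical sample data, not taken from a real cluster.
partitions = {"/data": {"path": "/data", "total": 100, "free": 40}}
ok_instances = [{"type": "tidb", "partition_path_lower": "/data"}]
bad_instances = [{"type": "tidb", "partition_path_lower": ""}]

matched = find_matched_partition(partitions, ok_instances)
unmatched = find_matched_partition(partitions, bad_instances)
```

In this sketch, `unmatched` is `None` even though the partition data exists, which is the situation where a UI would fall back to an "unavailable" message despite the host being up.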

I will investigate why this happens. Would you like to collect some information for us if the environment is still there?

Connect to the database and run the following two SQL statements:

select * from INFORMATION_SCHEMA.CLUSTER_LOAD where TYPE='tidb';

select * from INFORMATION_SCHEMA.CLUSTER_HARDWARE where TYPE='tidb';

Deploy TiDB with the TiDB Operator on AWS EKS.

What are the detailed steps? Maybe we can try to reproduce it.

Thanks!

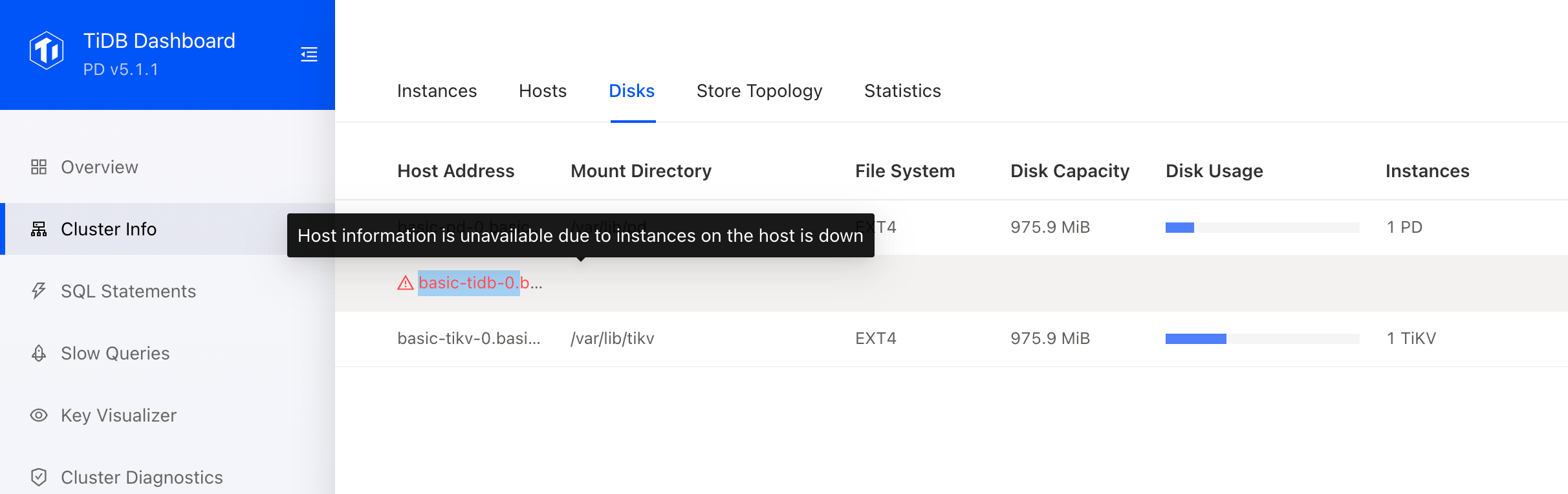

@baurine I see the same error message. I deployed a minimum cluster following this: https://docs.pingcap.com/tidb-in-kubernetes/stable/get-started.

Hi @xpepermint, yes, I found the root cause. When we deploy in the cloud, we don't pass the --log-file command-line parameter to tidb-server. It sounds odd, but the current implementation of disk info depends on this parameter, and it goes wrong when the value is empty. Because this information is also available in Grafana, the fix is not urgent, but we plan to refactor the implementation.

Good. I'm happy to see that you've found the bug. Is there a chance to get it to a Milestone?

We haven't decided yet.

Sounds like this needs some change in TiDB Operator? @baurine

I am curious why disk info needs to depend on the --log-file parameter. Can we remove this dependency? @breeswish

@baurine I guess it is because the disk info contains info for ALL disks, while we only want to display (the most suitable) one in this case.
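As a rough illustration of why a file path could be used to choose one disk out of many, the sketch below picks the mount point that is the longest prefix of a given path. This is an assumption about how a "most suitable" disk might be selected, not the dashboard's actual implementation; the function name and sample paths are hypothetical.

```python
from typing import List, Optional


def pick_partition(mount_points: List[str], file_path: str) -> Optional[str]:
    """Return the mount point that most specifically contains file_path.

    An empty file_path yields None, mirroring the case where no log file
    path is available to select a disk.
    """
    if not file_path:
        return None
    best: Optional[str] = None
    for mp in mount_points:
        # Compare on a directory boundary so "/data" does not match "/database".
        prefix = mp.rstrip("/") + "/"
        if file_path == mp or file_path.startswith(prefix):
            if best is None or len(mp) > len(best):
                best = mp
    return best


# Hypothetical mount points and paths.
mounts = ["/", "/var/lib/tidb"]
with_log_file = pick_partition(mounts, "/var/lib/tidb/log/tidb.log")
without_log_file = pick_partition(mounts, "")
```

With a path, the sketch selects a single mount point; with an empty path it selects none, so a caller that relies on the result has nothing to display.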

Do we have further updates? @baurine

Not yet. Maybe we can arrange a task for this issue in the next sprint.

@baurine @breezewish any updates?

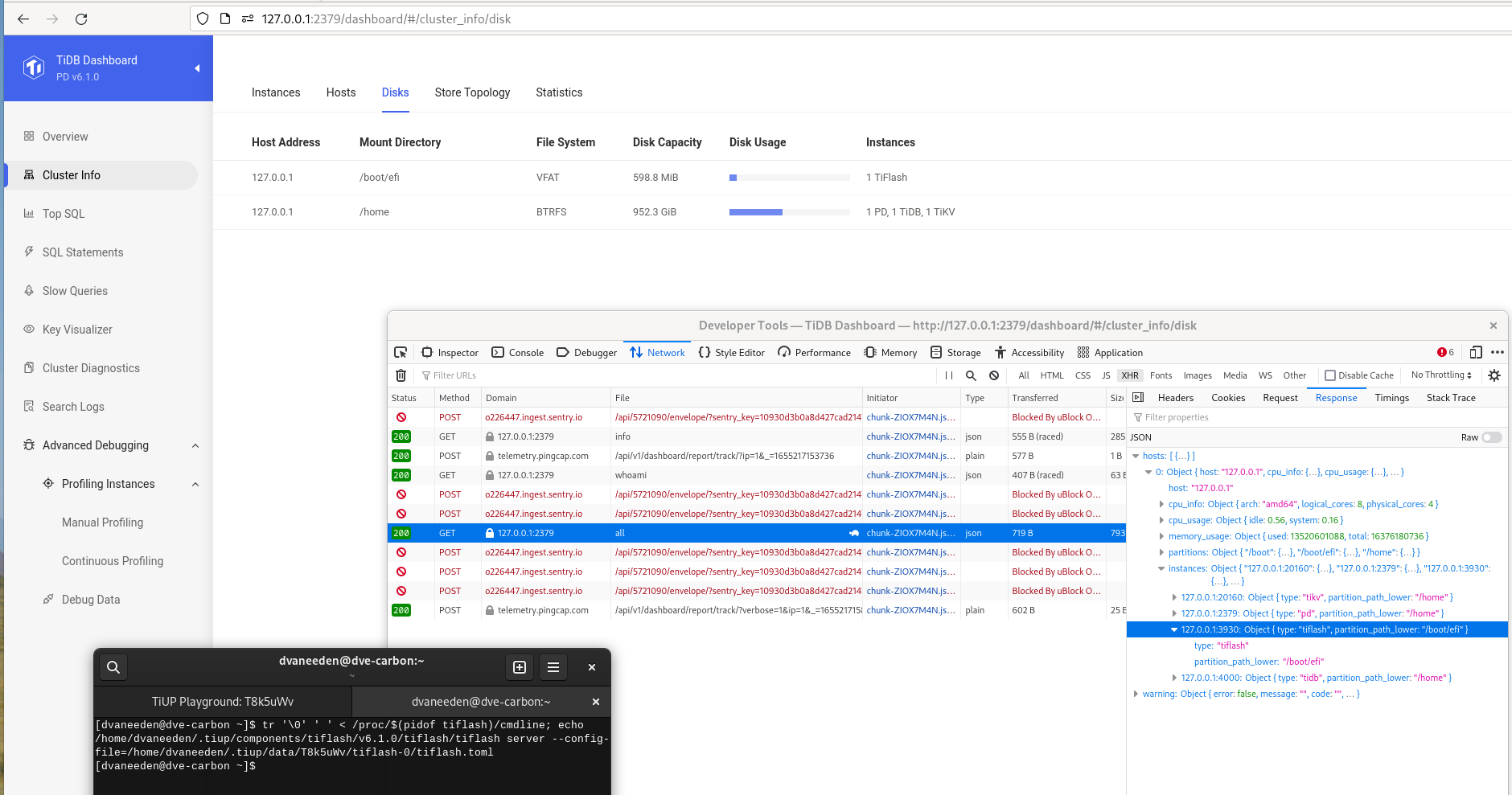

This is with v6.1.0 and it shows the wrong mountpoint for TiFlash when running with tiup playground

Hi @dveeden, I will try to resolve it in the next release.

/cc @lilyjazz

As discussed with the PM, we decided to fix the incorrect tooltip first, in PR #1469.