zfs

zfs copied to clipboard

zfs copied to clipboard

Huge CPU usage on system when used IOV_ITER

System information

| Linux | self-build linux |

|---|---|

| Distribution Name | Debian based |

| Kernel Version | 4.9.232 |

| Architecture | x86_64 |

| OpenZFS Version | 2.0.x -> 2.1.x |

Describe the problem you're observing

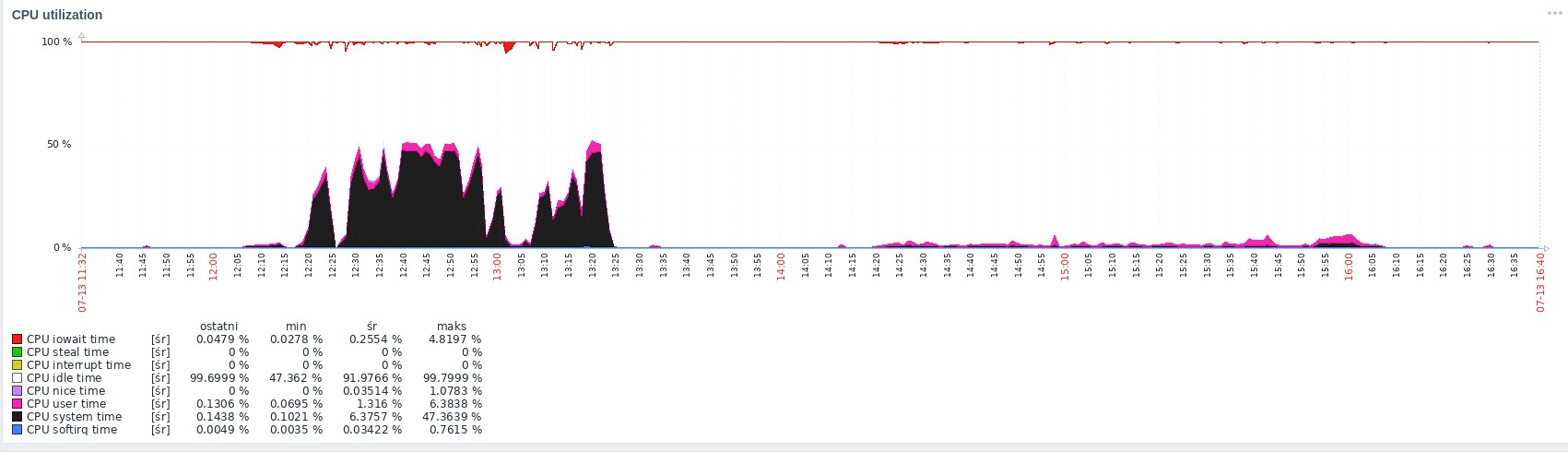

After update zfs from 0.8.6 to 2.x, observs huge CPU system used (attached cpu graph between 12:30 -> 13:20)

when strace processes that use a lot of CPU I noticed that they were looping like this:

sendfile(178, 179, [0], 447733) = -1 EAGAIN (Resource temporarily unavailable)

poll([{fd=178, events=POLLOUT}], 1, 300000) = 1 ([{fd=178, revents=POLLOUT}])

sendfile(178, 179, [0], 447733) = -1 EAGAIN (Resource temporarily unavailable)

poll([{fd=178, events=POLLOUT}], 1, 300000) = 1 ([{fd=178, revents=POLLOUT}])

sendfile(178, 179, [0], 447733) = -1 EAGAIN (Resource temporarily unavailable)

poll([{fd=178, events=POLLOUT}], 1, 300000) = 1 ([{fd=178, revents=POLLOUT}])

sendfile(178, 179, [0], 447733) = -1 EAGAIN (Resource temporarily unavailable)

poll([{fd=178, events=POLLOUT}], 1, 300000) = 1 ([{fd=178, revents=POLLOUT}])

sendfile(178, 179, [0], 447733) = -1 EAGAIN (Resource temporarily unavailable)

poll([{fd=178, events=POLLOUT}], 1, 300000) = 1 ([{fd=178, revents=POLLOUT}])

sendfile(178, 179, [0], 447733) = -1 EAGAIN (Resource temporarily unavailable)

poll([{fd=178, events=POLLOUT}], 1, 300000) = 1 ([{fd=178, revents=POLLOUT}])

sendfile(178, 179, [0], 447733) = -1 EAGAIN (Resource temporarily unavailable)

poll([{fd=178, events=POLLOUT}], 1, 300000) = 1 ([{fd=178, revents=POLLOUT}])

sendfile(178, 179, [0], 447733) = -1 EAGAIN (Resource temporarily unavailable)

poll([{fd=178, events=POLLOUT}], 1, 300000) = 1 ([{fd=178, revents=POLLOUT}])

While debugging the problem, I started to browse the changelog and found information that it was added from version 2.x iov_iter in uio structure. Additionally I changed the kernel 4.4 -> 4.9 Bingo 4.9 started to support iov, and zfs added iov. Following this lead I turned IOV_ITER off in my zfs config

--- /root/chmura/zbudowane/zfs/zfs-2.1.5/zfs_config.h 2022-07-13 11:38:01.041129918 +0200

+++ /root/chmura/zbudowane/kernel-old-vservers/kernel/linux-4.9/include/zfs/zfs_config.h 2022-07-13 14:04:28.996182192 +0200

@@ -379,22 +379,22 @@

/* #undef HAVE_IOPS_SYMLINK_USERNS */

/* iov_iter_advance() is available */

-#define HAVE_IOV_ITER_ADVANCE 1

+/* #undef HAVE_IOV_ITER_ADVANCE */

/* iov_iter_count() is available */

-#define HAVE_IOV_ITER_COUNT 1

+/* #undef HAVE_IOV_ITER_COUNT */

/* iov_iter_fault_in_readable() is available */

-#define HAVE_IOV_ITER_FAULT_IN_READABLE 1

+/* #undef HAVE_IOV_ITER_FAULT_IN_READABLE */

/* iov_iter_revert() is available */

-#define HAVE_IOV_ITER_REVERT 1

+/* #undef HAVE_IOV_ITER_REVERT */

/* iov_iter_type() is available */

/* #undef HAVE_IOV_ITER_TYPE */

/* iov_iter types are available */

-#define HAVE_IOV_ITER_TYPES 1

+/* #undef HAVE_IOV_ITER_TYPES */

/* yes */

#define HAVE_IO_SCHEDULE_TIMEOUT 1

@@ -736,7 +736,7 @@

/* #undef HAVE_VFS_FILEMAP_DIRTY_FOLIO */

/* All required iov_iter interfaces are available */

-#define HAVE_VFS_IOV_ITER 1

+/* #undef HAVE_VFS_IOV_ITER */

/* fops->iterate() is available */

/* #undef HAVE_VFS_ITERATE */

After apply new build zfs module a miracle has come ;) , processes started working normally, no loops, low CPU load on the system (same attached cpu graph after restart 14:10)

From my observations, looped only php (mod_apache) processes in version 5.x. It is possible that they cannot interpret messages from IOV (I'm guessing because I have no other suspicions)... Yes, I know php 5.x is medieval, EOL and should be migrated to minimum 7.x, but it doesn't depend on me ;(

Inquiry

Is it possible to add disable used IOV_ITER to ./configure. When i looking at the configure test code, it analyzes the kernel sources and enables support IOV when kernel supports it.

So, first of all, I have no idea how to try reproducing this from your report - it's extremely disjointed, I have no idea what you changed between situation A and B, or even what programs were misbehaving.

Second, I think the odds of adding a disable for that check would be slim to none compared to figuring out what's actually going wrong.

Maybe to simplify my post:

- kernel upgrade 4.4 -> 4.9 + keep zfs 0.8.6

- no problems, no high cpu system time usage, the server worked fine for several weeks

- no kernel change, update zfs module from 0.8.6 -> 2.x

- high CPU load system time (immediately after enabling php scripts)

- tests with different versions of zfs 2.0.x 2.1.x

- the issue still exists

- back to kernel 4.4 + zfs 2.1.5

- no problem

Investigation why the newer kernel 4.9 + zfs 2.x is consuming very high CPU system time. It happened that kernel 4.9 added iov support and new zfs 2.x started using it if the kernel supports iov.

- tested disabling iov support in zfs.2.1.5 + kernel 4.9

- problem disappears, everything works fine like kernel 4.9 + zfs 0.8.6 or 4.4 with zfs 2.x

the attached picture shows the behavior on:

- kernel 4.9.323 + zfs 2.5.1 (default ./configure with define IOV_ITER)

- server reset (14:00)

- kernel 4.9.323 + zfs 2.5.1 (IOV disabled with my patch)

If you have any ideas how I can cause the problem to be obtainable in the test environment, I'm listening. I have a very old environment (I must have to use it for some reasons), old glibc, old system libraries - generally everything is based on debian wheezy ;(. Maybe that's the problem. It is also strange that only PHP 5.x scripts looping (EAGAIN - Resource temporarily unavailable). PHP 7.x or 8.x do not generate problems with the CPU system time.

It is possible that everything is a matter of my archaic environment (some additional system libraries). Like e.g. the problem I had with the new zfs zpool create (out of memory) and I was debugging this problem in this thread:

https://github.com/openzfs/zfs/issues/13538

It does seem like ZFS is incorrectly returning EAGAIN for sendfile(2). Would it be possible for you to see if this is reproducible with the latest code from the master branch. Or better yet cherry-pick the master commit 59eab1093a361ca76849ca76b2ec079c8736e8e3 on top of 2.1.5. It should apply cleanly and I believe this may explain the issue you're seeing. If so we'll want to get this backported.

Or better yet cherry-pick the master commit 59eab10 on top of 2.1.5. It should apply cleanly and I believe this may explain the issue you're seeing. If so we'll want to get this backported.

I have been testing the proposed solution on zfs 2.1.5 + kernel 4.9 + my environment and all working fine, it seems this patch solve my problem. Scripts don't get into loops, no huge cpu system time.