openvino

[Bug]maskrcnn.onnx converted to IR

System information (version)

- OpenVINO => 2022.1

- Operating System / Platform => Windows 64 Bit

- Compiler => Visual Studio 2019

- Problem classification: Model Conversion

- Framework: pytorch

- Model name:maskrcnn_resnet50_fpn_coco

Detailed description

The inference result of maskrcnn_resnet50_fpn_coco.pth is correct after it is converted to ONNX. However, after converting the ONNX model to IR with the mo tool provided by OpenVINO 2022.1, the number of labels, boxes and scores is correct, but the masks output contains only one result. That is, the batch dimension of masks is 1, so only one mask can be obtained.
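To make the symptom concrete, here is a minimal sketch of a check that compares the leading (detection-count) dimension of each output against the boxes output. The output names follow the description above; the shapes are hypothetical values chosen for illustration, not measurements from the attached model.

```python
from typing import Dict, Tuple


def find_count_mismatches(
    shapes: Dict[str, Tuple[int, ...]], reference: str = "boxes"
) -> Dict[str, int]:
    """Return outputs whose leading dimension differs from the reference output."""
    expected = shapes[reference][0]
    return {
        name: shape[0] for name, shape in shapes.items() if shape[0] != expected
    }


# Hypothetical shapes for illustration only (5 detections assumed).
onnx_shapes = {
    "boxes": (5, 4),
    "labels": (5,),
    "scores": (5,),
    "masks": (5, 1, 512, 512),
}
ir_shapes = {
    "boxes": (5, 4),
    "labels": (5,),
    "scores": (5,),
    "masks": (1, 1, 512, 512),  # the reported symptom: only one mask
}

print(find_count_mismatches(onnx_shapes))  # {}
print(find_count_mismatches(ir_shapes))    # {'masks': 1}
```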

onnx:

IR:

@cheny985 Could you provide these:

- Your model files for us to validate from our side

- The commands and steps that you used to convert model into IR

1. model_169.pth is my training weight; mask_rcnn9.onnx is the ONNX model.

2. The commands and steps: mo --input_model C:/Users/chenyang/Desktop/1/mask_rcnn9.onnx --input_shape [1,3,512,512]
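For anyone reproducing the conversion from a script, the command above could be assembled roughly like this. It is only a sketch: `build_mo_command` and `convert` are hypothetical helper names, and only the `--input_model` and `--input_shape` flags quoted in this thread are used.

```python
import subprocess
from typing import List, Sequence


def build_mo_command(onnx_path: str, input_shape: Sequence[int]) -> List[str]:
    """Build the argument list for the mo call quoted in this thread."""
    # mo expects the shape as a bracketed, comma-separated list without spaces.
    shape_arg = "[" + ",".join(str(dim) for dim in input_shape) + "]"
    return ["mo", "--input_model", onnx_path, "--input_shape", shape_arg]


def convert(onnx_path: str, input_shape: Sequence[int]) -> None:
    """Run the conversion; assumes the mo executable is on PATH."""
    subprocess.run(build_mo_command(onnx_path, input_shape), check=True)


cmd = build_mo_command(
    "C:/Users/chenyang/Desktop/1/mask_rcnn9.onnx", [1, 3, 512, 512]
)
print(" ".join(cmd))
```

Calling `convert(...)` with the same arguments would run the conversion itself; it is left out of the snippet so that nothing is executed on import.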

Large attachments sent from QQ Mail

mask_rcnn9.onnx (167.78M, expires 2022-10-15 13:52) Download page: http://mail.qq.com/cgi-bin/ftnExs_download?t=exs_ftn_download&k=7635313090bee796252dfbae4437564c57030655550750551f535207541a505a05561c525054554e0106080452530256545706516238640e53465a6f10540a0d0b1b5e5e0c4f645e&code=2510b7dc

maskrcnn_resnet50_fpn.pth (169.84M, expires 2022-10-15 13:53) Download page: http://mail.qq.com/cgi-bin/ftnExs_download?t=exs_ftn_download&k=2b34643290bf5b90722caeac17300b4a5c01525650530d074804560a001d0d52000c490a54010f4854065c5305005804065101043129390804470f40525e573a1751175c54440c553a52145c1f404d0d6509&code=e4d2109e

model_169.pth (335.02M, expires 2022-10-15 13:53) Download page: http://mail.qq.com/cgi-bin/ftnExs_download?t=exs_ftn_download&k=76656663fcd03f89237dacfd4565024d00070400055053521954075a004804010d5c4b01075c034f01505555075c0607010703526368300f5b01030f3c54065b1a15120b6358&code=4efcce0b

@cheny985 OpenVINO does support the native ONNX format. If the model is supported by OpenVINO, both the native ONNX and the IR format should be inferable with the OpenVINO Benchmark Application (benchmark_app).

However, your ONNX model failed to be inferred with the benchmark_app:

Looking at the error, it seems that your native ONNX model uses dynamic shapes.

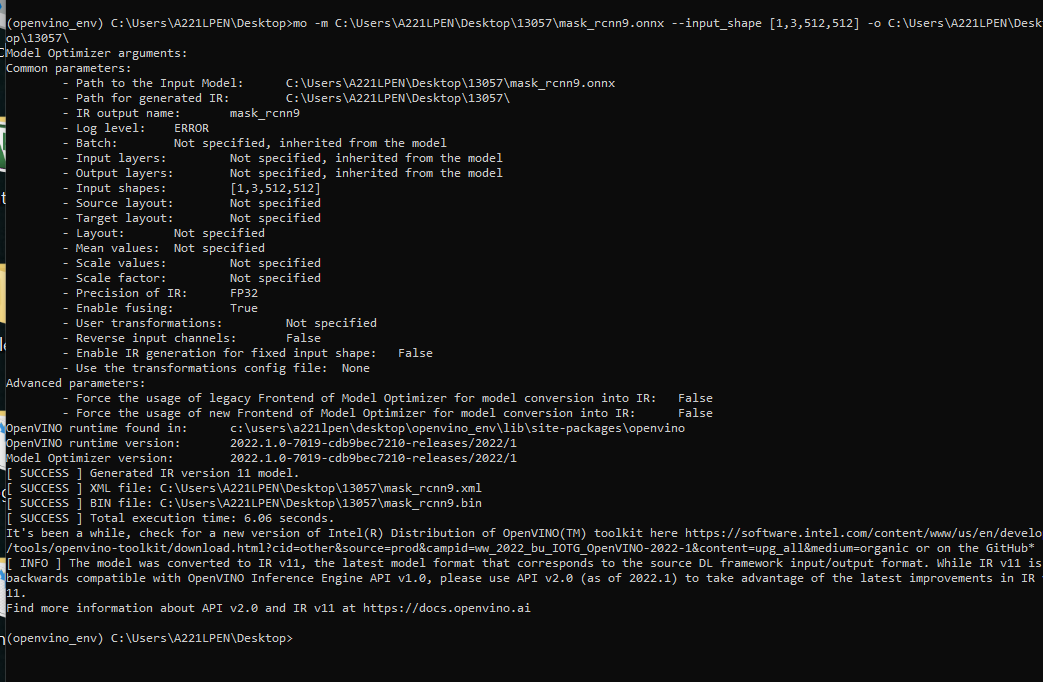

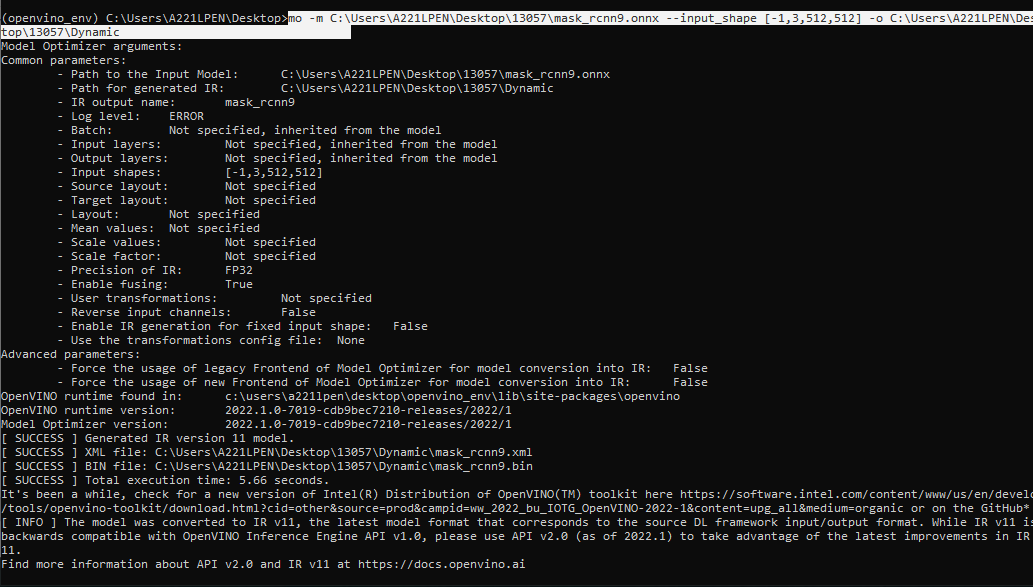

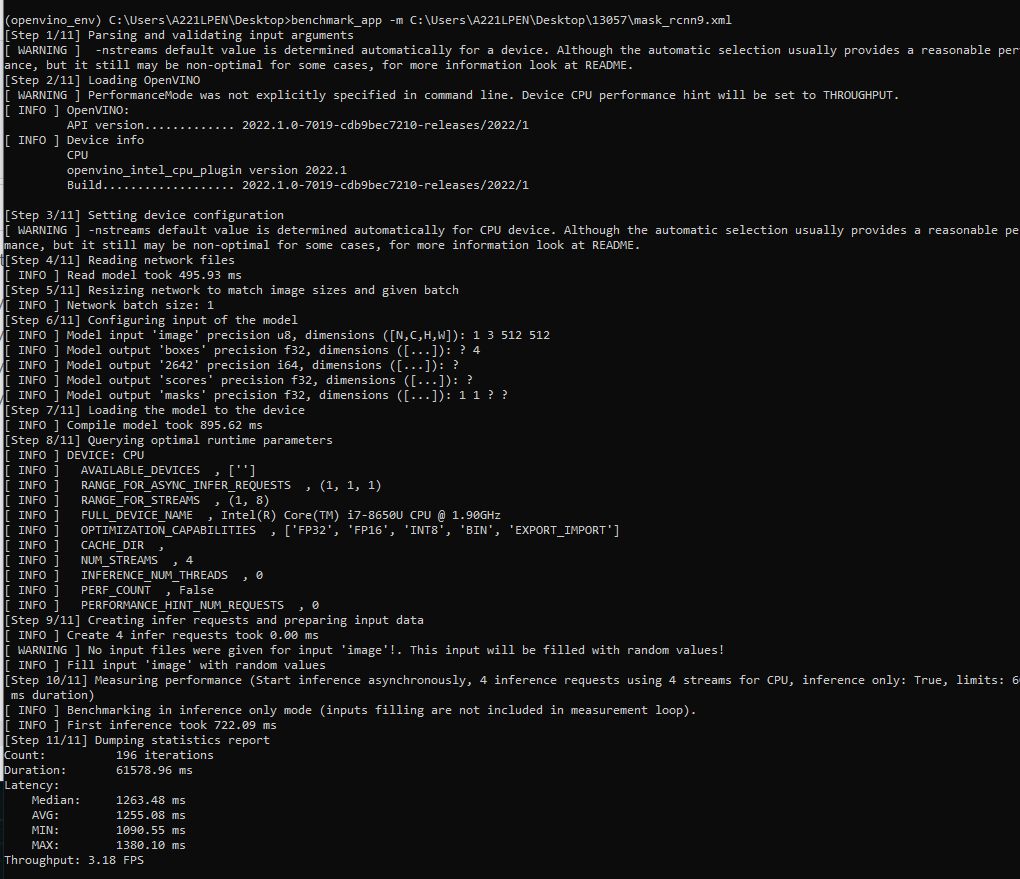

However, I managed to convert your ONNX model into IR using Model Optimizer and run it with benchmark_app:

Conversion into IR:

Static

Dynamic

benchmark_app

As I mentioned above, both the ONNX and IR formats should be inferable if they are supported by OpenVINO. In your case, the native ONNX model failed while the IR succeeded.

One of the possible reasons is that some layers in your model might be cut off by the Model Optimizer during the IR conversion. This also explains your less accurate result after converting into IR format.

We'll further investigate this and get back to you with a possible workaround.

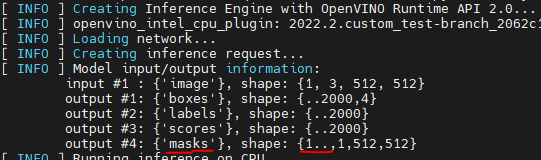

@cheny985 a similar issue has been reported and is currently under investigation. It seems that some information about dynamic shapes is lost during conversion from ONNX to IR. I will share more details as they become available.

Ref. 90554

@cheny985 this PR https://github.com/openvinotoolkit/openvino/pull/10684 might fix the issue you are observing, please check it out and give it a try on your side.

FYI I've performed a quick run with your provided onnx model and I can see the masks output layer is now dynamic. Let me know if you have any issues.
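If you want to confirm this on your side, a rough sketch with the OpenVINO Python runtime is below. It assumes `openvino.runtime` is installed and that every output has a tensor name; `load_model` and `dynamic_outputs` are hypothetical helper names, and the IR file name in the usage note is only an example.

```python
from typing import Any, Dict


def load_model(model_path: str) -> Any:
    """Read an IR (.xml) or ONNX model with the OpenVINO runtime."""
    # Imported lazily so dynamic_outputs() can be used without OpenVINO installed.
    from openvino.runtime import Core

    return Core().read_model(model_path)


def dynamic_outputs(model: Any) -> Dict[str, bool]:
    """Map each output name to whether its partial shape is dynamic."""
    result = {}
    for output in model.outputs:
        shape = output.get_partial_shape()
        result[output.get_any_name()] = bool(shape.is_dynamic)
    return result
```

For example, `dynamic_outputs(load_model("mask_rcnn9.xml"))` returns a dict keyed by output name. With the fix applied, the entry for the masks output would be expected to be `True`.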

The PR has been merged to the master branch, so I am closing this issue. Feel free to reopen it and ask additional questions related to this topic.