mayastor

allocated 2M hugepages, but 1G used during init

Describe the bug mayastor detected 1024 x 2M hugepages, but tries to reserve hugepages of size 1073741824 (1G), causing a failure.

To Reproduce

- find a node that supports multiple hugepage pools

- snap install microk8s from edge channel

- microk8s enable core/mayastor

- kubectl -n mayastor get pods <--- identify the failing mayastor-$ID pod

- get logs

Expected behavior Ready

Screenshots

> kubectl -n mayastor logs --since=2m mayastor-t278c

[2022-05-11T04:54:55.812470509+00:00 INFO mayastor:mayastor.rs:94] free_pages: 1024 nr_pages: 1024

[2022-05-11T04:54:55.812531636+00:00 INFO mayastor:mayastor.rs:133] Starting Mayastor version: v1.0.0-119-ge5475575ea3e

[2022-05-11T04:54:55.812597744+00:00 INFO mayastor:mayastor.rs:134] kernel io_uring support: yes

[2022-05-11T04:54:55.812610107+00:00 INFO mayastor:mayastor.rs:138] kernel nvme initiator multipath support: yes

[2022-05-11T04:54:55.812632989+00:00 INFO mayastor::core::env:env.rs:600] loading mayastor config YAML file /var/local/mayastor/config.yaml

[2022-05-11T04:54:55.812652179+00:00 INFO mayastor::subsys::config:mod.rs:168] Config file /var/local/mayastor/config.yaml is empty, reverting to default config

[2022-05-11T04:54:55.812668723+00:00 INFO mayastor::subsys::config::opts:opts.rs:155] Overriding NVMF_TCP_MAX_QUEUE_DEPTH value to '32'

[2022-05-11T04:54:55.812687806+00:00 INFO mayastor::subsys::config:mod.rs:216] Applying Mayastor configuration settings

EAL: 4 hugepages of size 1073741824 reserved, but no mounted hugetlbfs found for that size

EAL: alloc_pages_on_heap(): couldn't allocate memory due to IOVA exceeding limits of current DMA mask

EAL: alloc_pages_on_heap(): Please try initializing EAL with --iova-mode=pa parameter

EAL: error allocating rte services array

EAL: FATAL: rte_service_init() failed

EAL: rte_service_init() failed

thread 'main' panicked at 'Failed to init EAL', mayastor/src/core/env.rs:543:13

stack backtrace:

0: std::panicking::begin_panic

1: mayastor::core::env::MayastorEnvironment::init

2: mayastor::main

note: Some details are omitted, run with `RUST_BACKTRACE=full` for a verbose backtrace.

OS info (please complete the following information):

- Distro: Ubuntu

- Kernel version: Linux $(hostname) 5.16.0-0.bpo.4-amd64 #1 SMP PREEMPT Debian 5.16.12-1~bpo11+1 (2022-03-08) x86_64 GNU/Linux

- MayaStor revision or container image v1.0.0-119-ge5475575ea3e inside 1.0.1-microk8s-1

Additional context

This looks like a duplicate of #494, but that one was closed without any progress.

Hi @luginbash, we don't really test on microk8s AFAIK. I'm trying to set it up on a VM, but this doesn't seem to do anything:

tiago@vmt:~$ microk8s enable core/mayastor

Nothing to do for `core/mayastor`.

Any clues on what I might be missing?

@tiagolobocastro you need to switch the channel to the version 1.24+

e.g.

sudo snap install microk8s --classic --channel=1.24/stable

Are we sure that rte_eal_init() does the right thing? I.e., does it try 2MB pages first and, if none are found, fall back to 1GB pages? When I wrote the code -- back in the day -- I recall needing to pass the page size explicitly. I can't see it now, but I'm not up to date on what happens if both page sizes are found.

Any news on this one? (I'm stuck installing a microk8s cluster on bare-metal servers because of it.)

Just tried with latest everything, and got

lug@sin9 ~> microk8s.kubectl logs -n mayastor mayastor-cnnd9

Defaulted container "mayastor" out of: mayastor, registration-probe (init), etcd-probe (init), initialize-pool (init)

[2022-06-02T03:31:28.271337047+00:00 ERROR mayastor:mayastor.rs:84] insufficient free pages available PAGES_NEEDED=1024 nr_pages=1024

The error has become a bit more vague.
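One way to read that shorter message: it prints `nr_pages` but not the free count, so a pool that is large enough but partly in use looks identical to a healthy one. The sketch below is only an assumption about what such a check might look like, not the actual mayastor code. `check_2m_pool` is a hypothetical name, and `PAGES_NEEDED` simply mirrors the value in the log line.

```rust
/// Number of 2M pages requested, mirroring PAGES_NEEDED=1024 from the log line.
const PAGES_NEEDED: u32 = 1024;

/// Hypothetical check: the pool must be large enough AND have enough free pages.
/// Reporting both counters makes the failure reason explicit.
fn check_2m_pool(nr_pages: u32, free_pages: u32) -> Result<(), String> {
    if nr_pages < PAGES_NEEDED {
        return Err(format!(
            "pool too small: nr_pages={} PAGES_NEEDED={}",
            nr_pages, PAGES_NEEDED
        ));
    }
    if free_pages < PAGES_NEEDED {
        return Err(format!(
            "pool large enough but partly in use: free_pages={} nr_pages={} PAGES_NEEDED={}",
            free_pages, nr_pages, PAGES_NEEDED
        ));
    }
    Ok(())
}
```

Under this reading, `nr_pages=1024` with even a handful of pages already in use would fail the second condition.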

Not sure if it's the same issue, but I started with:

[2022-05-11T04:54:55.812687806+00:00 INFO mayastor::subsys::config:mod.rs:216] Applying Mayastor configuration settings

EAL: 4 hugepages of size 1073741824 reserved, but no mounted hugetlbfs found for that size

EAL: alloc_pages_on_heap(): couldn't allocate memory due to IOVA exceeding limits of current DMA mask

EAL: alloc_pages_on_heap(): Please try initializing EAL with --iova-mode=pa parameter

EAL: error allocating rte services array

EAL: FATAL: rte_service_init() failed

EAL: rte_service_init() failed

thread 'main' panicked at 'Failed to init EAL', mayastor/src/core/env.rs:543:13

Eventually I moved to the latest helm chart in mayastor-extensions (and probably the develop tag of the docker image), and now I get this:

io-engine

[2022-06-04T10:01:07.170524572+00:00 INFO io_engine:io-engine.rs:132] Engine responsible for managing I/Os version 1.0.0, revision a6424b565ea4 (v1.0.0)

thread 'main' panicked at 'failed to read the number of pages: Os { code: 2, kind: NotFound, message: "No such file or directory" }', io-engine/src/bin/io-engine.rs:74:10

stack backtrace:

0: rust_begin_unwind

at ./rustc/59eed8a2aac0230a8b53e89d4e99d55912ba6b35/library/std/src/panicking.rs:517:5

1: core::panicking::panic_fmt

at ./rustc/59eed8a2aac0230a8b53e89d4e99d55912ba6b35/library/core/src/panicking.rs:101:14

2: core::result::unwrap_failed

at ./rustc/59eed8a2aac0230a8b53e89d4e99d55912ba6b35/library/core/src/result.rs:1617:5

3: io_engine::hugepage_get_nr

4: io_engine::main

note: Some details are omitted, run with `RUST_BACKTRACE=full` for a verbose backtrace.

Obviously huge pages are enabled (I tried running a privileged pod with both centos and nixos):

[root@centos /]# grep HugePages /proc/meminfo

HugePages_Total: 2048

HugePages_Free: 2033

HugePages_Rsvd: 9

HugePages_Surp: 0
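For reading these counters programmatically, a minimal sketch follows. The names `HugePageStats`, `parse_hugepage_stats` and `available` are illustrative, not part of mayastor. It parses the `HugePages_*` lines from meminfo-style text. It also subtracts `HugePages_Rsvd` from `HugePages_Free`, because reserved pages are still counted as free but are already promised to another process.

```rust
/// Hugepage counters for the default page size, as listed in /proc/meminfo.
#[derive(Debug, Default, PartialEq)]
struct HugePageStats {
    total: u64,
    free: u64,
    reserved: u64,
    surplus: u64,
}

/// Parse the `HugePages_*` lines from meminfo-style text.
/// Lines with other keys or non-integer values (e.g. "Hugepagesize: 2048 kB")
/// are ignored.
fn parse_hugepage_stats(meminfo: &str) -> HugePageStats {
    let mut stats = HugePageStats::default();
    for line in meminfo.lines() {
        if let Some((key, value)) = line.split_once(':') {
            if let Ok(n) = value.trim().parse::<u64>() {
                match key.trim() {
                    "HugePages_Total" => stats.total = n,
                    "HugePages_Free" => stats.free = n,
                    "HugePages_Rsvd" => stats.reserved = n,
                    "HugePages_Surp" => stats.surplus = n,
                    _ => {}
                }
            }
        }
    }
    stats
}

/// Pages that are free and not already reserved by another process.
fn available(stats: &HugePageStats) -> u64 {
    stats.free.saturating_sub(stats.reserved)
}
```

Applied to the output above, this gives 2033 free pages of which 9 are reserved, so 2024 remain available for a new consumer.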

I also have 1 node with just 2M hugepages and 1 node with both 2M and 1G, so that I can always check both cases. I don't understand the issue. I checked the paths in centos/nixos:

[root@centos /]# cat /sys/kernel/mm/hugepages/hugepages-2048kB/nr_hugepages

2048

[root@centos /]# cat /sys/kernel/mm/hugepages/hugepages-2048kB/free_hugepages

2032

I even changed the entrypoint of the pod to log what's happening there:

command: [ "cat" ]

args: [ "/sys/kernel/mm/hugepages/hugepages-2048kB/nr_hugepages", "/sys/kernel/mm/hugepages/hugepages-2048kB/free_hugepages", "/sys/kernel/mm/hugepages/hugepages-1048576kB/nr_hugepages", "/sys/kernel/mm/hugepages/hugepages-1048576kB/free_hugepages" ]

The log reflects exactly how the nodes are set up and my experimentation (some with more or fewer 2M hugepages, some with 1G enabled as well). 3 nodes:

2048

2043

cat: can't open '/sys/kernel/mm/hugepages/hugepages-1048576kB/nr_hugepages': No such file or directory

cat: can't open '/sys/kernel/mm/hugepages/hugepages-1048576kB/free_hugepages': No such file or directory

1024

1024

2

2

2048

2032

cat: can't open '/sys/kernel/mm/hugepages/hugepages-1048576kB/nr_hugepages': No such file or directory

cat: can't open '/sys/kernel/mm/hugepages/hugepages-1048576kB/free_hugepages': No such file or directory

They are correct and the values are there, so what is failing here?

fn hugepage_get_nr(hugepage_path: &Path) -> (u32, u32) {
    let nr_pages: u32 = sysfs::parse_value(hugepage_path, "nr_hugepages")
        .expect("failed to read the number of pages");
    let free_pages: u32 = sysfs::parse_value(hugepage_path, "free_hugepages")
        .expect("failed to read the number of free pages");
    (nr_pages, free_pages)
}

fn hugepage_check() {
    let (nr_pages, free_pages) =
        hugepage_get_nr(Path::new("/sys/kernel/mm/hugepages/hugepages-2048kB"));
    let (nr_1g_pages, free_1g_pages) = hugepage_get_nr(Path::new(
        "/sys/kernel/mm/hugepages/hugepages-1048576kB",
    ));
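The snippet above calls `.expect(...)` on both pools, so a node without a `hugepages-1048576kB` directory would panic with exactly the `NotFound` error in the backtrace. A minimal sketch of a more tolerant reader is shown below. It uses only `std` rather than the project's `sysfs::parse_value` helper, and `read_counter` and `hugepage_get_nr_or_zero` are hypothetical names, not functions from the io-engine source. It treats a missing pool as having zero pages.

```rust
use std::fs;
use std::io;
use std::path::Path;

/// Read one integer counter (e.g. `nr_hugepages`) from a hugepage pool directory.
/// Returns Ok(None) when the file does not exist, i.e. the pool is absent.
fn read_counter(dir: &Path, name: &str) -> io::Result<Option<u32>> {
    match fs::read_to_string(dir.join(name)) {
        Ok(text) => text
            .trim()
            .parse::<u32>()
            .map(Some)
            .map_err(|e| io::Error::new(io::ErrorKind::InvalidData, e)),
        Err(e) if e.kind() == io::ErrorKind::NotFound => Ok(None),
        Err(e) => Err(e),
    }
}

/// Return (nr_pages, free_pages) for a pool, treating a missing pool as (0, 0)
/// instead of panicking. Other I/O or parse errors are still reported.
fn hugepage_get_nr_or_zero(dir: &Path) -> io::Result<(u32, u32)> {
    let nr_pages = read_counter(dir, "nr_hugepages")?.unwrap_or(0);
    let free_pages = read_counter(dir, "free_hugepages")?.unwrap_or(0);
    Ok((nr_pages, free_pages))
}
```

With this shape, a caller could pass `/sys/kernel/mm/hugepages/hugepages-1048576kB` on a 2M-only node and get `(0, 0)` back rather than a panic.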

I got past this issue by adding:

resources:
  limits:
    ....
    hugepages-1Gi: {{ .Values.mayastor.resources.limits.hugepages1Gi | quote }}
  requests:
    ....
    hugepages-1Gi: {{ .Values.mayastor.resources.limits.hugepages1Gi | quote }}

in io-engine-daemonset.yaml

And the corresponding:

requests:
  ...
  hugepages1Gi: "2Gi"

in values.yaml.

Now back to

EAL: 2 hugepages of size 1073741824 reserved, but no mounted hugetlbfs found for that size

EAL: alloc_pages_on_heap(): couldn't allocate memory due to IOVA exceeding limits of current DMA mask

EAL: alloc_pages_on_heap(): Please try initializing EAL with --iova-mode=pa parameter

EAL: error allocating rte services array

EAL: FATAL: rte_service_init() failed

EAL: rte_service_init() failed

thread 'main' panicked at 'Failed to init EAL', io-engine/src/core/env.rs:556:13

@robert83ft, what happens if you try adding --env-context='--iova-mode=pa' to the mayastor container arguments?

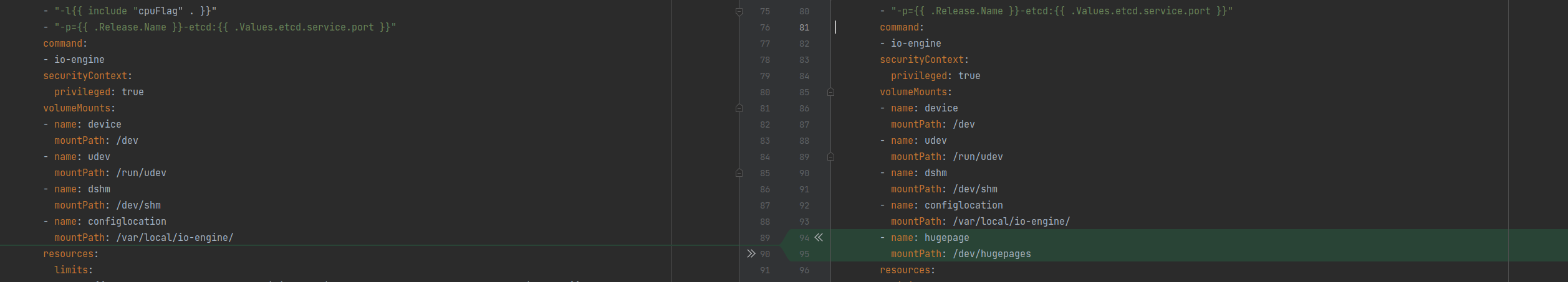

@tiagolobocastro Eventually I solved it without changing "iova-mode" to pa. I found that the io-engine deployment (yaml) is missing a volume mount. The volume is declared (at the bottom of the yaml) but not mounted.

When I made the last change that helped (above), I still had both 2M and 1G hugepages enabled on the node. My default is 2M. I learned that when 2 types of hugepages are enabled, the mounts should be differentiated:

https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/6/html/virtualization_tuning_and_optimization_guide/sect-virtualization_tuning_optimization_guide-memory-huge_pages-1gb-runtime

However, my running setup now has only a single mount in the io-engine deployment. This seems to suggest that it now starts with 2M only. I will probably roll back my nodes to 2M only and try to confirm.

I'm using microk8s with the mayastor addon on 3 nodes, each running Ubuntu 22.04. I followed these instructions (https://microk8s.io/docs/addon-mayastor) and noticed I almost had enough free pages, so I decided to change the suggested:

sudo sysctl vm.nr_hugepages=1024

echo 'vm.nr_hugepages=1024' | sudo tee -a /etc/sysctl.conf

to:

sudo sysctl vm.nr_hugepages=1048

echo 'vm.nr_hugepages=1048' | sudo tee -a /etc/sysctl.conf

sudo nvim /etc/sysctl.conf

and that seemed to do the trick.
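The arithmetic behind that workaround can be sketched as follows, assuming 2 MiB pages and assuming that the engine needs 1024 of them free (the `PAGES_NEEDED` value seen in the logs earlier in this thread). The function names are illustrative only. If other processes already hold some pages, `vm.nr_hugepages` has to be at least the needed count plus the pages in use.

```rust
/// Size of one default hugepage in MiB (assumes the 2M pool).
const PAGE_SIZE_MIB: u64 = 2;

/// Total memory set aside by a given vm.nr_hugepages value, in MiB.
fn pool_size_mib(nr_hugepages: u64) -> u64 {
    nr_hugepages * PAGE_SIZE_MIB
}

/// Smallest vm.nr_hugepages that leaves `pages_needed` free when
/// `pages_in_use` pages are already held by other processes.
fn required_nr_hugepages(pages_needed: u64, pages_in_use: u64) -> u64 {
    pages_needed + pages_in_use
}
```

Going from 1024 to 1048 pages adds 24 pages, or 48 MiB of headroom, which would cover up to 24 pages held elsewhere on the node.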

I've added the sysctl and can verify that vm.nr_hugepages is 1024 and I still get the same error. Restarted kubelet and all the nodes multiple times.