whisper

Per Token Confidence + Color terminal example

Hello! I implemented per-token confidence scores and also added a small example under examples/confidence_per_token.py, which prints colored text output reflecting the confidence score of each token:

(image incorrect, look at last commented image)

Example WAV: https://www.voiptroubleshooter.com/open_speech/american/OSR_us_000_0010_8k.wav
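
For readers who want to see the coloring idea in isolation, here is a minimal sketch, not the code from examples/confidence_per_token.py. The function names, the red-to-green mapping, and the sample tokens and probabilities are all made up for illustration; it only assumes a terminal that understands 24-bit ANSI color escapes.

```python
RESET = "\033[0m"  # ANSI sequence that restores the default terminal color


def confidence_to_ansi(prob: float) -> str:
    """Map a probability in [0, 1] to a 24-bit ANSI foreground color.

    Low confidence is rendered red, high confidence green (hypothetical mapping).
    """
    p = min(max(prob, 0.0), 1.0)  # clamp out-of-range values
    red = round(255 * (1.0 - p))
    green = round(255 * p)
    return f"\033[38;2;{red};{green};0m"


def colorize(tokens, probs):
    """Wrap each token in the color that corresponds to its probability."""
    return "".join(
        f"{confidence_to_ansi(p)}{tok}{RESET}" for tok, p in zip(tokens, probs)
    )


if __name__ == "__main__":
    # Made-up tokens and probabilities, not real model output.
    tokens = [" Hello", " wor", "ld", "!"]
    probs = [0.98, 0.61, 0.35, 0.90]
    print(colorize(tokens, probs))
```

Keeping the color mapping outside the decoding code means the same per-token probabilities could also be written to a file or a subtitle format instead of the terminal.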

I am aware of others' work on this topic, but as I needed this myself, I thought I might give the PR a shot.

(The README.md is identical; I initially added some lines locally but then replaced it again with the full original README.) Works with GreedyDecoder for now.

EDIT: Done

TODO: correct the probability display when supplying prompts (prompt tokens seem to get assigned a prob of 0; if anyone can help, I'd appreciate it)

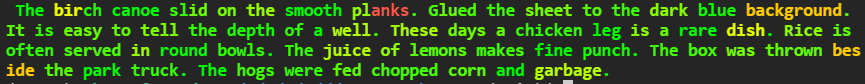

Fixed probs offset (and prompt offset)

New (correct) output:

+1

This can be really useful for proofing the output via something like Subtitle Edit.

It would really need a command-line option to output an additional subtitle though, right?

I get the impression @jongwook doesn't want to stuff too many features in though, so how does such a useful feature get added without having a fork?

> I get the impression @jongwook doesn't want to stuff too many features in

Although the colour terminal stuff might be questionable, I think adding per-token timestamps and confidence to the raw JSON results could itself be useful to a wider range of use cases. Exposing the raw data from Whisper would then make it possible to write your own external script on top of that to do the colour terminal stuff.

One example of the wider potential uses of per-token data is that German is known to have very long compound words, and if you wanted to break them up (e.g. to compute a line break timestamp, or for sub-word highlighting, etc.), it would be helpful to have access to the raw data per token.
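
To make the compound-word case concrete, here is a rough sketch of what an external script could do with per-token data. Everything in it is an assumption for illustration: the Token fields (text, start, prob), the helper names, and the sample tokenization are hypothetical and do not describe Whisper's actual JSON output. It relies only on the convention that a token starting with a space begins a new word.

```python
from dataclasses import dataclass


@dataclass
class Token:
    text: str     # decoded token text; a leading space marks a new word
    start: float  # hypothetical per-token start time in seconds
    prob: float   # hypothetical per-token probability


def group_into_words(tokens):
    """Group consecutive tokens into words, starting a new word at each leading space."""
    words = []
    for tok in tokens:
        if tok.text.startswith(" ") or not words:
            words.append([tok])
        else:
            words[-1].append(tok)
    return words


def break_candidates(word):
    """Return (text, start) for every non-initial token, i.e. possible sub-word split points."""
    return [(tok.text, tok.start) for tok in word[1:]]


if __name__ == "__main__":
    # Made-up tokenization and timings for a German compound word.
    tokens = [
        Token(" Das", 0.0, 0.99),
        Token(" Donau", 0.4, 0.90),
        Token("dampf", 0.8, 0.70),
        Token("schiff", 1.2, 0.80),
    ]
    for word in group_into_words(tokens):
        print("".join(t.text for t in word).strip(), break_candidates(word))
```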

Hello!

> Although the colour terminal stuff might be questionable

I implemented the per-token confidence as is and implemented the colorful CLI output only in an example. The main whisper code does not contain anything related to color.

@jongwook is there anything I should modify or change for you to accept the PR?

I'm hesitant to add this because the incremental utility of this compared to the probabilities returned by word_timestamps=True is quite niche, versus the added complexity & latency due to the additional GPU operations. The decoding logic is already taking as much time as the forward pass, and I'm hoping to reduce this overhead. The subword token probabilities are not very useful anyway, because they're usually influenced more by language modeling than by speech recognition.
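
As a sketch of the word-level alternative: with word_timestamps=True, each segment of the transcription result carries a "words" list whose entries include "word", "start", "end", and "probability". The helper below and its threshold are hypothetical, written only to show how those values could be used to flag words for proofreading.

```python
def low_confidence_words(result, threshold=0.5):
    """Collect (word, start, end, probability) for words below the threshold.

    `result` is expected to look like the dict returned by
    model.transcribe(..., word_timestamps=True); segments without a
    "words" entry are skipped.
    """
    flagged = []
    for segment in result.get("segments", []):
        for word in segment.get("words", []):
            if word["probability"] < threshold:
                flagged.append(
                    (word["word"].strip(), word["start"], word["end"], word["probability"])
                )
    return flagged


# Usage sketch (needs the whisper package and a local audio file, so it is left commented out):
# import whisper
# model = whisper.load_model("small")
# result = model.transcribe("audio.wav", word_timestamps=True)
# print(low_confidence_words(result, threshold=0.5))
```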

For the case you need per-token probs, you can add another forward pass without modifying decoding.py (similar to how it's done in timing.py) without incurring too much additional latency. It may even be faster than adding GPU operations for every autoregressive step.
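
The idea of a separate pass can be sketched without any model code. The snippet below is not taken from timing.py or from the PR; it is a pure-Python illustration that assumes you already have the logits from one teacher-forced forward pass over the full token sequence (prompt plus decoded tokens). The function names and the toy numbers are made up. The point it shows is the offset: the logits at position i describe the token at position i + 1, so prompt tokens have to be skipped and every lookup shifted by one.

```python
import math


def log_softmax(row):
    """Numerically stable log-softmax over one row of logits."""
    m = max(row)
    log_z = m + math.log(sum(math.exp(x - m) for x in row))
    return [x - log_z for x in row]


def token_probs(logits, tokens, prompt_len):
    """Probability the model assigned to each non-prompt token.

    logits[i] is assumed to be the output at position i, i.e. the
    distribution over tokens[i + 1]. prompt_len counts the leading tokens
    (start-of-transcript, language, prompt, ...) that should not be scored.
    """
    if prompt_len < 1:
        raise ValueError("need at least one leading token to condition on")
    probs = []
    for pos in range(prompt_len, len(tokens)):
        log_probs = log_softmax(logits[pos - 1])  # shift by one position
        probs.append(math.exp(log_probs[tokens[pos]]))
    return probs


if __name__ == "__main__":
    # Toy vocabulary of 3 tokens; all numbers are made up.
    tokens = [0, 2, 1]  # token 0 stands in for the prompt
    logits = [
        [0.0, 0.0, math.log(2.0)],  # predicts tokens[1]
        [0.0, math.log(3.0), 0.0],  # predicts tokens[2]
        [0.0, 0.0, 0.0],            # predicts the token after the sequence (unused)
    ]
    print(token_probs(logits, tokens, prompt_len=1))
```

Getting this shift wrong is one way to end up with misaligned or zero probabilities for some tokens.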

The example script looks nifty, but I'd prefer it in the show and tell section.

I see, thank you for the comprehensive response!

@SinanAkkoyun thanks for your contribution. I'm not sure, but it seems to work incorrectly.

I made a distorted speech example: https://drive.google.com/file/d/12zGWllJg6edftcnwuHX_ZHMuwk7PlVjg/view?usp=sharing

If I don't set the decoding language, i.e. options = whisper.DecodingOptions(), the output is correct in terms of locating mispronunciations (I can read this Slavic language), though it translates the text into a random language.

But if I set 'en' for decoding, i.e. options = whisper.DecodingOptions(language="en"), the picture is wrong.

The rest of the code is the same as in your PR, except that I used the "small" model.

@Rtut654 Hi, I don't quite understand the issue you are having. The "I like to play badminton and football." seems to be correct; the "football" especially sounds vague in the audio you provided. Could you please tell me more about your issue?

Apart from that, the PR is not going to get merged, so I stopped working on it and now use the modification in my own work, which does not include translation.

If the random translation is the problem you are referring to, I believe that my PR did not change the output prediction in any way; it just grabs the logits and displays them as confidence.

@SinanAkkoyun The issue is in the accuracy of token_probs. The first version (with translation to Ukrainian) gives a very accurate result, since "like" was also badly mispronounced. The word "football" was also mispronounced in its last part, which is correctly shown in the first picture.

I did the same test with other audio, setting the decoding language to English. The picture was the same. Somehow it lowers the prob of the last word even when it is pronounced correctly. At the same time, the probs of mispronunciations were high, which is strange. So something is wrong in the way it predicts probs when the language is set to English.

Hello, @jongwook! Could you please look at the issue?

@Rtut654 Ah, I see. I am sorry, but in my testing I was not able to reproduce that behaviour. For me it worked fine at distinguishing unclearly spoken words from clearly spoken ones, even in German transcriptions. To me, the "football" sounded less clear than the rest, and my code does not do much except take the unmodified logits. Maybe the model has a different sense of mispronunciation than you? It has been trained on many accents.

I am currently working on other projects. You can take a look at the file changes yourself and work your way through them; if you have a question, feel free to ask and I will do my best to clarify.