mmtracking

mmtracking copied to clipboard

mmtracking copied to clipboard

Balanced multiple datasets with variable sizes (for train)?

Assume I have several datasets (CocoVID format and CocoVideoDataset wrapper) however the number of samples between the datasets differs a lot. Is there a way (even if I will have to implement a custom module) to make sure that when training, train samples are picked from all datasets fairly?

So, even if I have Dataset A with 10000 frames and Dataset B with only 100, train samples will consist of %50 Dataset A and %50 Dataset B.

Thank you.

Oh, figured it out. Using a combination of RepeatDataset and ConcatDataset, we can manually balance out the final train set using ratios.

Example: Dataset A has 100 samples, Dataset B has 10 and Dataset C has 1.

By setting the "times" parameter (of RepeatDataset wrapper) for Dataset A as 1, Dataset B as 10 and Dataset C as 100 we can in the end balance things out.

Keeping it here, in case anyone else googles for it.

Yes, we provide some dataset warpper to mix the dataset or modify the dataset distribution for training.

Oh, figured it out. Using a combination of RepeatDataset and ConcatDataset, we can manually balance out the final train set using ratios.

Example: Dataset A has 100 samples, Dataset B has 10 and Dataset C has 1.

By setting the "times" parameter (of RepeatDataset wrapper) for Dataset A as 1, Dataset B as 10 and Dataset C as 100 we can in the end balance things out.

Keeping it here, in case anyone else googles for it.

Hello, I had a question about how to use the RepeatDataset. @askarbozcan

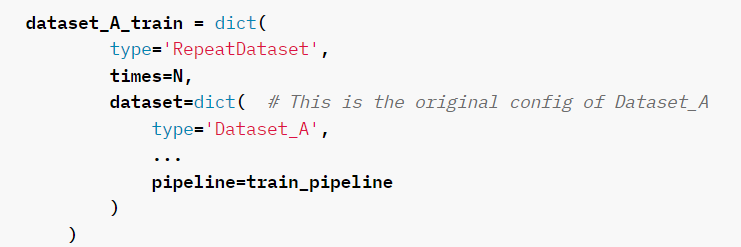

If i use the dataset_type is 'CocoVideoDataset' ,so i need to rewrite the config like :

**train** = dict(

type='RepeatDataset',

times=10,

dataset=dict( # This is the original config of Dataset_A

type=**'CocoVideoDataset'**,

classes=classes,

ann_file='path/to/your/train/data',

pipeline=train_pipeline

)

)

Is that right? Could you please give me some advice? Thank you so much!