mmdetection3d

[Feature] Support MinkowskiUnet for semantic segmentation

Thanks for your contribution; we appreciate it a lot. The following instructions will make your pull request healthier and help it get feedback more easily. If you do not understand some items, don't worry: just open the pull request and ask the maintainers for help.

Motivation

This PR continues the integration of the MinkowskiEngine framework for sparse tensor processing (#1422). It implements the semantic segmentation methods and color augmentations described in "4D Spatio-Temporal ConvNets: Minkowski Convolutional Neural Networks", CVPR'19.

Modification

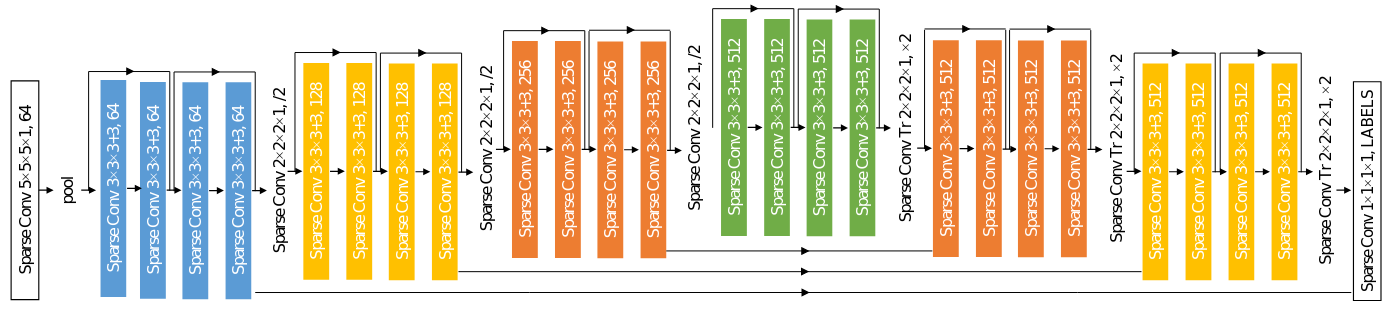

Add the MinkowskiUnet semantic segmentation module and its submodules:

- segmentors/sparse_encoder_decoder.py --> SparseEncoderDecoder3D [inherited from the EncoderDecoder3D module]
- backbones/mink_unet.py --> MinkUNetBase [inherited from the nn.Module module]
- decode_heads/mink_unet_head.py --> MinkUNetHead [inherited from the Base3DDecodeHead module]

Add color augmentations for point clouds:

- pipelines/transform_3d.py --> YOLOXHSVPointsRandomAug [inherited from the YOLOXHSVRandomAug module]
- pipelines/transform_3d.py --> ChromaticJitter (originally taken from the code for the paper)
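
For readers unfamiliar with chromatic jitter, the idea can be sketched in a few lines: add zero-mean Gaussian noise to every color channel and clip the result back into the valid range. This is only an illustration in plain Python. The function name `chromatic_jitter`, the `std` default, and the 0..255 color range are assumptions made for this sketch and are not the signature of the `ChromaticJitter` class added in this PR.

```python
import random


def chromatic_jitter(colors, std=0.01, rng=None):
    """Add Gaussian noise to RGB colors and clip them to [0, 255].

    `colors` is a list of (r, g, b) tuples in the 0..255 range.
    `std` is the noise scale as a fraction of the full color range
    (an assumption of this sketch, not a value taken from the PR).
    """
    rng = rng or random.Random()
    sigma = std * 255.0  # convert the fractional std to color units
    jittered = []
    for point_color in colors:
        noisy = tuple(
            min(255.0, max(0.0, channel + rng.gauss(0.0, sigma)))
            for channel in point_color)
        jittered.append(noisy)
    return jittered


# Usage: jitter three point colors with a fixed seed for repeatability.
example = chromatic_jitter(
    [(0, 0, 0), (255, 255, 255), (128, 64, 32)],
    std=0.05,
    rng=random.Random(0))
```

Clipping after adding the noise keeps every channel inside the range that a later color normalization step would expect.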

BC-breaking (Optional)

MinkowskiEngine support is added, along with an updated Dockerfile.

Use cases (Optional)

Model declaration looks like this:

    model = dict(
        type='SparseEncoderDecoder3D',
        voxel_size=0.05,
        backbone=dict(
            type='MinkUNetBase',
            depth=18,
            in_channels=3,
            planes='A',
            D=3,
        ),
        decode_head=dict(
            type='MinkUNetHead',
            channels=96,
            num_classes=20,
            loss_decode=dict(
                type='CrossEntropyLoss',
                class_weight=None,  # should be modified with dataset
                loss_weight=1.0,
            )))
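
To give a feel for what `voxel_size=0.05` means, here is a rough sketch of how continuous point coordinates can be quantized onto an integer voxel grid, which is the kind of input sparse convolutions operate on. It is plain Python and does not use MinkowskiEngine. The helper name `voxelize` and its return format are hypothetical, and the segmentor in this PR may quantize points differently.

```python
import math
from collections import defaultdict


def voxelize(points, voxel_size=0.05):
    """Group point indices by the integer voxel cell containing them.

    `points` is a list of (x, y, z) tuples in metres. Returns a dict
    mapping (i, j, k) voxel coordinates to the indices of the points
    that fall inside that cell. Hypothetical helper for illustration.
    """
    voxels = defaultdict(list)
    for index, (x, y, z) in enumerate(points):
        # Floor division by the voxel size yields the cell coordinate,
        # including for negative coordinates.
        key = (math.floor(x / voxel_size),
               math.floor(y / voxel_size),
               math.floor(z / voxel_size))
        voxels[key].append(index)
    return dict(voxels)


# Usage: the first two points share a 5 cm cell, the others do not.
cells = voxelize([
    (0.01, 0.01, 0.01),
    (0.04, 0.02, 0.03),
    (0.06, 0.00, 0.00),
    (-0.01, 0.00, 0.00),
])
```

A smaller voxel size preserves more geometric detail but produces more occupied cells, so memory and compute grow accordingly.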

Augmentations can be included into a common pipeline:

    # scannet semantic segmentation dataset example
    train_pipeline = [
        dict(
            type='LoadPointsFromFile',
            coord_type='DEPTH',
            shift_height=False,
            use_color=True,
            load_dim=6,
            use_dim=[0, 1, 2, 3, 4, 5],
        ),
        dict(
            type='LoadAnnotations3D',
            with_bbox_3d=False,
            with_label_3d=False,
            with_mask_3d=False,
            with_seg_3d=True,
        ),
        dict(
            type='PointSegClassMapping',
            valid_cat_ids=(1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 14, 16, 24, 28,
                           33, 34, 36, 39),
            max_cat_id=40,
        ),
        dict(
            type='IndoorPatchPointSample',
            num_points=num_points,
            block_size=1.5,
            ignore_index=len(class_names),
            use_normalized_coord=False,
            enlarge_size=0.2,
            min_unique_num=None,
        ),
        dict(
            type='YOLOXHSVPointsRandomAug',  # example of new augmentation
            hue_delta=2,
            saturation_delta=15,
            value_delta=15,
        ),
        dict(
            type='NormalizePointsColor',
            color_mean=None,
        ),
        dict(
            type='GlobalRotScaleTrans',
            rot_range=[-0.087266, 0.087266],
            scale_ratio_range=[.9, 1.1],
            translation_std=[.1, .1, .1],
            shift_height=False,
        ),
        dict(
            type='RandomFlip3D',
            sync_2d=False,
            flip_ratio_bev_horizontal=0.5,
            flip_ratio_bev_vertical=0.5,
        ),
        dict(type='DefaultFormatBundle3D', class_names=class_names),
        dict(type='Collect3D', keys=['points', 'pts_semantic_mask']),
    ]
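
The `PointSegClassMapping` step and the `ignore_index=len(class_names)` setting fit together: raw ScanNet category ids are remapped to consecutive training labels, and every id outside `valid_cat_ids` is sent to one extra "ignore" label. The sketch below shows that relationship in plain Python, as I understand the transform. The helper names `build_class_lookup` and `map_labels` are hypothetical and are not part of mmdetection3d.

```python
VALID_CAT_IDS = (1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 14, 16, 24, 28,
                 33, 34, 36, 39)
MAX_CAT_ID = 40


def build_class_lookup(valid_cat_ids, max_cat_id):
    """Build a table that maps raw category ids to training labels.

    Valid ids map to 0..len(valid_cat_ids) - 1 in the listed order.
    Every other id maps to len(valid_cat_ids), the ignore label.
    """
    ignore_index = len(valid_cat_ids)
    lookup = [ignore_index] * (max_cat_id + 1)
    for class_index, cat_id in enumerate(valid_cat_ids):
        lookup[cat_id] = class_index
    return lookup


def map_labels(raw_labels, lookup):
    """Translate a sequence of raw category ids into training labels."""
    return [lookup[label] for label in raw_labels]


# Usage: ids 1 and 39 are valid, while 0, 13 and 40 fall into the
# ignore label (20 for the 20 ScanNet classes listed above).
lookup = build_class_lookup(VALID_CAT_IDS, MAX_CAT_ID)
mapped = map_labels([1, 13, 39, 0, 40], lookup)
```

With 20 valid categories the ignore label is 20, which lines up with `num_classes=20` in the decode head: the head predicts labels 0 to 19, and points labelled 20 are meant to be excluded from the loss.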

Checklist

- Pre-commit or other linting tools are used to fix the potential lint issues.

- The modification is covered by complete unit tests. If not, please add more unit tests to ensure correctness.

- If the modification has potential influence on downstream projects, this PR should be tested with downstream projects.

- The documentation has been modified accordingly, like docstring or example tutorials.

@HuangJunJie2017 @ZwwWayne please help me with the failed readthedocs build. I don't know what to do about it.

Hi @Ilyabasharov, thanks for your awesome work! The doc build error is really strange. We will fix the mmcv version problem in the next release and then look into the doc error. Please address the preliminary comments and resolve the conflict first.

Codecov Report

:exclamation: No coverage uploaded for pull request base (dev@14c5ded). Patch has no changes to coverable lines.

:exclamation: Current head bb20469 differs from pull request most recent head 2874a2a. Consider uploading reports for the commit 2874a2a to get more accurate results.

Additional details and impacted files

    @@           Coverage Diff           @@
    ##             dev    #1646   +/-   ##
    ======================================
      Coverage       ?   50.16%
    ======================================
      Files          ?      223
      Lines          ?    19104
      Branches       ?     3134
    ======================================
      Hits           ?     9584
      Misses         ?     8940
      Partials       ?      580

| Flag | Coverage Δ | |
|---|---|---|
| unittests | 50.16% <0.00%> (?) | |

Hi @Ilyabasharov! We are grateful for your efforts in helping improve the mmdetection3d open-source project during your personal time.

Welcome to join the OpenMMLab Special Interest Group (SIG) private channel on Discord, where you can share your experiences and ideas and build connections with like-minded peers. To join the SIG channel, simply message the moderator, OpenMMLab, on Discord or briefly share your open-source contributions in the #introductions channel and we will assist you. We look forward to seeing you there! Join us: https://discord.gg/UjgXkPWNqA

If you have a WeChat account, you are welcome to join our community on WeChat. You can add our assistant: openmmlabwx. Please add "mmsig + Github ID" as a remark when adding friends :)

Thank you again for your contribution ❤