onnx-tensorrt

Loading ONNX models converted using `--large_model` flag

Description

I converted a large TensorFlow SavedModel (3.3 GB) to ONNX. Now, when I try to prepare the TensorRT backend, I run into the following error:

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/usr/local/lib/python3.8/dist-packages/onnx_tensorrt-8.5.1-py3.8.egg/onnx_tensorrt/backend.py", line 237, in prepare

File "/usr/local/lib/python3.8/dist-packages/onnx/backend/base.py", line 92, in prepare

onnx.checker.check_model(model)

File "/usr/local/lib/python3.8/dist-packages/onnx/checker.py", line 111, in check_model

model if isinstance(model, bytes) else model.SerializeToString()

ValueError: Message onnx.ModelProto exceeds maximum protobuf size of 2GB: 3442329055
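To put the number at the end of the `ValueError` in context, here is a small arithmetic sketch. It assumes the "2GB" in the message corresponds to a cap of `2**31 - 1` bytes on a single serialized protobuf message; the helper name `exceeds_protobuf_limit` is made up for illustration.

```python
# Size reported at the end of the ValueError, in bytes.
REPORTED_SIZE = 3442329055

# Assumption: the "2GB" protobuf cap is 2**31 - 1 bytes per serialized message.
PROTOBUF_LIMIT = 2**31 - 1


def exceeds_protobuf_limit(num_bytes, limit=PROTOBUF_LIMIT):
    """Return True if a message of num_bytes cannot be serialized in one piece."""
    return num_bytes > limit


# How far over the assumed cap the in-memory ModelProto is.
excess_bytes = REPORTED_SIZE - PROTOBUF_LIMIT

print(exceeds_protobuf_limit(REPORTED_SIZE))
print(f"model: {REPORTED_SIZE / 2**30:.2f} GiB, over the cap by {excess_bytes} bytes")
```

Under that assumption, any code path that calls `SerializeToString()` on the fully loaded model will fail, regardless of how the weights were stored on disk.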

Environment

TensorRT Version: 8.5.1

ONNX-TensorRT Version / Branch: 8.5.1

GPU Type: Tesla V100

Nvidia Driver Version: 3090

CUDA Version: 11.6

CUDNN Version:

Operating System + Version: Ubuntu 20.08

Python Version (if applicable): 3.8.10

TensorFlow + TF2ONNX Version (if applicable): TensorFlow 2.10.0 and tf2onnx 1.13.0

PyTorch Version (if applicable):

Baremetal or Container (if container which image + tag): nvcr.io/nvidia/tensorflow:22.12-tf2-py3

Relevant Files

The SavedModel I am converting can be found here: https://huggingface.co/keras-sd/tfs-diffusion-model/tree/main

Steps To Reproduce

- Convert to ONNX:

python -m tf2onnx.convert --saved-model tfs-diffusion-model --output diffusion_model.onnx --large_model

You should see output like the following, after which the conversion finishes:

...

2022-12-29 06:41:09,395 - INFO - After optimization: Cast -1423 (2311->888), Concat -512 (1049->537), Const -1554 (7561->6007), Gather -390 (642->252), GlobalAveragePool +192 (0->192), Identity -2337 (2337->0), Less -1 (9->8), Placeholder -1 (707->706), ReduceMean -192 (436->244), ReduceProd -640 (640->0), Reshape +47 (1835->1882), Shape -192 (450->258), Squeeze -122 (371->249), Transpose -512 (1176->664), Unsqueeze -1377 (1798->421)

2022-12-29 06:41:10,553 - WARNING - missing output shape for map/while/TensorArrayV2Write/TensorListSetItem:0

2022-12-29 06:41:24,606 - INFO -

2022-12-29 06:41:24,606 - INFO - Successfully converted TensorFlow model diffusion_model to ONNX

2022-12-29 06:41:24,606 - INFO - Model inputs: ['batch_size', 'context', 'num_steps', 'unconditional_context']

2022-12-29 06:41:24,606 - INFO - Model outputs: ['latent']

2022-12-29 06:41:24,606 - INFO - Zipped ONNX model is saved at diffusion_model.onnx. Unzip before opening in onnxruntime.

- Unzip the ONNX model:

unzip diffusion_model.onnx

- Load in ONNX:

import onnx

import onnx_tensorrt.backend as backend

model = onnx.load("__MODEL_PROTO.onnx")

engine = backend.prepare(model, device='CUDA:1')

It should lead to:

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/usr/local/lib/python3.8/dist-packages/onnx_tensorrt-8.5.1-py3.8.egg/onnx_tensorrt/backend.py", line 237, in prepare

File "/usr/local/lib/python3.8/dist-packages/onnx/backend/base.py", line 92, in prepare

onnx.checker.check_model(model)

File "/usr/local/lib/python3.8/dist-packages/onnx/checker.py", line 111, in check_model

model if isinstance(model, bytes) else model.SerializeToString()

ValueError: Message onnx.ModelProto exceeds maximum protobuf size of 2GB: 3442329055

Hi @sayakpaul, can you show what external data tf2onnx generated? It looks like either the model is invalid or onnx.checker can't find the path to the external data.

In PyTorch, torch.onnx.export uses external data automatically: https://github.com/pytorch/pytorch/pull/62257 & https://github.com/pytorch/pytorch/pull/67080 . So far I haven't had any issues using onnx-tensorrt with ONNX models that were generated by torch.onnx.export and use external data.

> Hi @sayakpaul, can you show what external data tf2onnx generated?

tf2onnx produces diffusion_model.onnx and logs the following message:

2022-12-29 06:41:24,606 - INFO - Zipped ONNX model is saved at diffusion_model.onnx. Unzip before opening in onnxruntime.

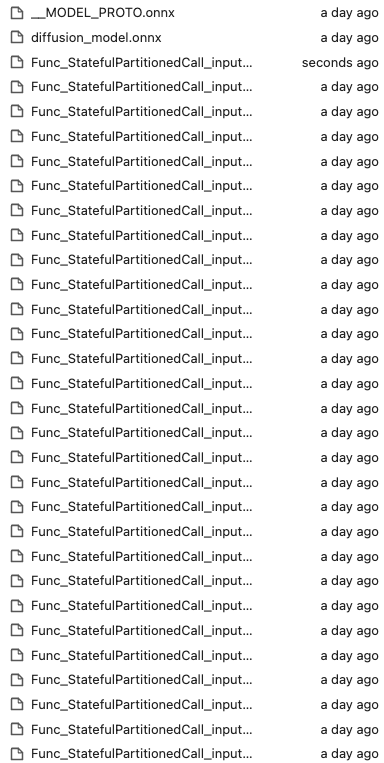

When unzipped, diffusion_model.onnx produces a number of other files along with __MODEL_PROTO.onnx.
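One way to see exactly what the archive contains, without extracting it, is to list its entries with Python's standard `zipfile` module. This is only a sketch: the helper name `list_archive_members` is hypothetical, and it assumes that diffusion_model.onnx is an ordinary zip archive, as the tf2onnx log message suggests.

```python
import zipfile


def list_archive_members(archive_path):
    """Return (name, uncompressed_size_in_bytes) for every entry in a zip archive."""
    with zipfile.ZipFile(archive_path) as archive:
        return [(info.filename, info.file_size) for info in archive.infolist()]


def print_archive_members(archive_path):
    """Print one line per archive entry: size in bytes, then the entry name."""
    for name, size in list_archive_members(archive_path):
        print(f"{size:>14,d}  {name}")
```

Calling `print_archive_members("diffusion_model.onnx")` should show __MODEL_PROTO.onnx next to the other entries, which makes it easier to tell whether the weights were written as separate external-data files.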

Actually, I have always used onnx-tensorrt through trtexec or ONNX Runtime, so I'm not sure about the TensorRTBackend route. What are the other files alongside __MODEL_PROTO.onnx?

You could try

import onnx

import onnx_tensorrt.backend as backend

model = onnx.load("__MODEL_PROTO.onnx", load_external_data=False)

engine = backend.prepare(model, device='CUDA:1')

and see what happens, but I guess it's not intended to be used this way.

For models larger than 2 GB, I've learned that simply passing the file path to onnx.checker.check_model() does the job.
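A minimal sketch of that path-based check follows. The wrapper `check_large_model` and its `check_fn` parameter are hypothetical, added only so the example can be exercised without onnx installed; by default it calls `onnx.checker.check_model` with the path string rather than a loaded `ModelProto`. It also assumes the external-data files sit in the same directory as the unzipped __MODEL_PROTO.onnx.

```python
import os


def check_large_model(model_path, check_fn=None):
    """Validate an ONNX model by file path instead of by in-memory ModelProto.

    Passing the path lets the checker read the file itself, so this code never
    serializes the whole model into a single protobuf message.
    """
    if check_fn is None:
        # Imported lazily so the module can be imported without onnx installed.
        import onnx
        check_fn = onnx.checker.check_model
    if not os.path.isfile(model_path):
        raise FileNotFoundError(model_path)
    check_fn(model_path)  # a str path, not the loaded model object
    return model_path
```

For the model in this thread the call would be `check_large_model("__MODEL_PROTO.onnx")`. Note that this only validates the file; `backend.prepare(model, ...)` still runs its own check on the in-memory model, as the traceback shows.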

> What are the other files alongside __MODEL_PROTO.onnx?

Here's the diffusion_model.onnx file in case you want to investigate: https://storage.googleapis.com/demo-experiments/diffusion_model.onnx.