onnx-tensorrt

Invalid Node - model.0.conv.bias_DequantizeLinear

I am using onnx-tensorrt to convert a model and ran into this error. The ONNX file was generated with onnxruntime.quantization.quantize_static.

onnx-tensorrt supports the "DequantizeLinear" operator, but I still hit this error.

Are you able to share the model? Dequantize nodes should have floating point input types (activation types in TRT), and this error suggests that there is a type mismatch.

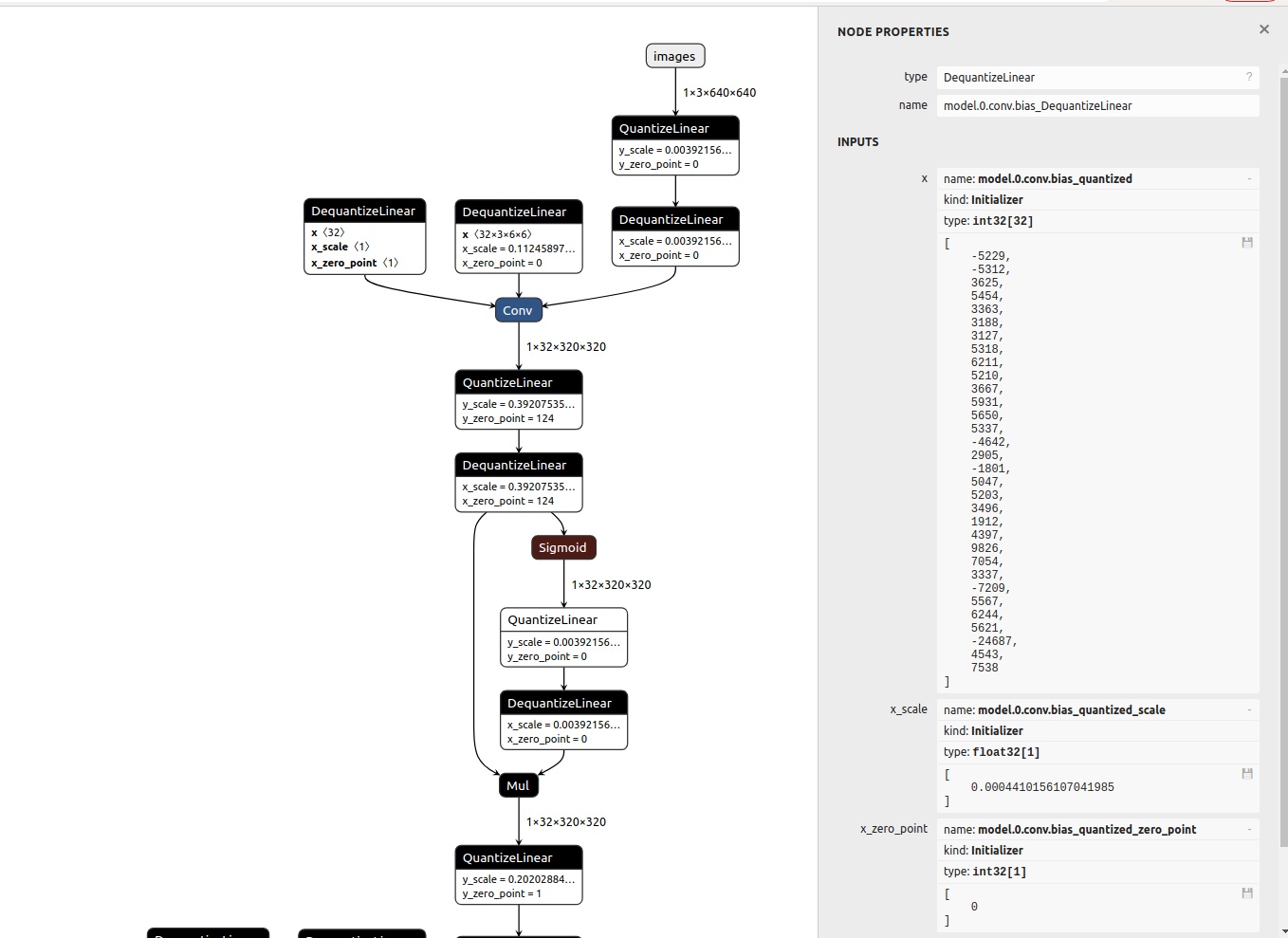

I'm not sure whether you can access Baidu Netdisk. The link to the model is https://pan.baidu.com/s/1GYyfi2-dWEhSZIp_cyo5Kw?at=1655446711172 and the extraction code is vgqp. I have also attached a screenshot of the parameters of the node in question. Thank you for your reply.

The model is attached here; you will need to unzip it: model.onnx.zip

@kevinch-nv According to https://github.com/onnx/onnx/blob/main/docs/Operators.md#DequantizeLinear, the input type of DequantizeLinear is constrained to T : tensor(int8), tensor(uint8), tensor(int32). So it should have an integer input type, right? Am I understanding this correctly?
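To make the type question concrete, here is a minimal pure-Python sketch of what DequantizeLinear computes under the ONNX definition, y = (x - x_zero_point) * x_scale, for the per-tensor case only. The function name `dequantize_linear`, the `dtype` argument, and the `INT_RANGES` table are hypothetical names for illustration; they are not part of onnx, onnxruntime, or onnx-tensorrt, and this says nothing about which of these input types TensorRT itself accepts.

```python
# Illustrative sketch only: per-tensor DequantizeLinear semantics in plain Python.
# All names here are hypothetical and not part of any library API.

# Value ranges of the integer input types listed in the ONNX operator docs.
INT_RANGES = {
    "int8": (-128, 127),
    "uint8": (0, 255),
    "int32": (-2**31, 2**31 - 1),
}


def dequantize_linear(x, scale, zero_point=0, dtype="int8"):
    """Return [(v - zero_point) * scale for v in x] after checking integer inputs."""
    lo, hi = INT_RANGES[dtype]
    for v in list(x) + [zero_point]:
        # The quantized input and zero point must be integers, not floats.
        if isinstance(v, bool) or not isinstance(v, int):
            raise TypeError(f"expected integer {dtype} value, got {v!r}")
        if not lo <= v <= hi:
            raise ValueError(f"{v} is out of range for {dtype}")
    return [(v - zero_point) * scale for v in x]


# int8 weights with scale 0.5 and zero point 0
print(dequantize_linear([-128, 0, 127], 0.5))

# an int32-quantized bias, as in a node like model.0.conv.bias_DequantizeLinear
print(dequantize_linear([1000, -2000], 0.25, dtype="int32"))
```

Under this reading of the operator docs, the quantized input x is an integer tensor and only the output y is floating point, which is the point the question above is asking to confirm.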