onnx-tensorrt

TopK not supporting INT32 data type for input tensor

Hello,

I am having trouble converting an ONNX model that contains a TopK node. In my model, the TopK input tensor data type is INT32.

However, the TensorRT OnnxParser gives the following error:

In node 413 (importTopK): UNSUPPORTED_NODE: Assertion failed: tensorPtr->getType() != nvinfer1::DataType::kINT32

I found the assertion code in https://github.com/onnx/onnx-tensorrt/blob/fe081b2fa358759a1f43899162d134f62f989971/builtin_op_importers.cpp#L3251 so this seems to be on purpose.

I am wondering why there is such a constraint. I tried using INT64 data type for my input tensor, but during the conversion to TRT it is converted down to INT32 due to the following:

Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

The only workaround I see is casting my input tensor to a float data type and casting the output back to INT32, which seems neither clean nor efficient.

Is there a particular reason why the INT32 datatype is not allowed for the input tensor?

I use:

- TensorRT 7.0.0.11

- ONNX==1.6.0

- ONNX opset 11

- Everything is done using Python APIs
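A note on the float workaround mentioned above: casting INT32 values to FLOAT and back is exact only while every value fits in float32's 24-bit significand. The sketch below uses only the Python standard library to emulate that round trip; it does not call TensorRT or ONNX, and the helper names (`to_float32`, `round_trip`, `is_round_trip_safe`) are made up for illustration.

```python
import struct

# float32 has a 24-bit significand, so every integer with
# |value| <= 2**24 is exactly representable.
FLOAT32_EXACT_INT_LIMIT = 2 ** 24


def to_float32(value: int) -> float:
    """Round an integer to the nearest float32 (emulates a cast to FLOAT)."""
    return struct.unpack("<f", struct.pack("<f", float(value)))[0]


def round_trip(values):
    """Emulate INT32 -> FLOAT -> INT32 for a list of integers."""
    return [int(to_float32(v)) for v in values]


def is_round_trip_safe(values):
    """Sufficient (not necessary) check that the round trip is lossless."""
    return all(abs(v) <= FLOAT32_EXACT_INT_LIMIT for v in values)


small = [3, -7, 1_000_000, 16_777_216]
large = [16_777_217, 2_000_000_001]

print(round_trip(small) == small)  # values within the limit survive
print(round_trip(large) == large)  # values beyond it get rounded
```

If the tensor can hold values beyond that limit, distinct integers may collapse to the same float, which can change both the values TopK returns and how it orders ties.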

I have a similar problem. The parser gives this error:

In node -1 (importTopK): UNSUPPORTED_NODE: Assertion failed: inputs.at(1).is_weights()

Thanks for the issue report. Our TopK layer doesn't support the INT32 type yet. I'll open an issue internally to see if it can be worked on.

Did you solve this error? I ran into it as well. My input is also INT32, which is not a type the TopK importer accepts.

TopK should support int32 inputs since TensorRT 8.5. @nicolasgorrity @scuizhibin please check and let us know if you are still facing issues. Thanks.

@kevinch-nv @rajeevsrao I had this same issue months ago, and recently came back and saw the comment saying it was resolved in TensorRT 8.5. I'm converting the model for Jetson Xavier NX, so I used the NGC Docker container with TensorRT 8.5.2 to try again.

However, when I run the conversion, I still hit an INT32 error at the TopK node:

[04/20/2023-18:52:48] [E] [TRT] ModelImporter.cpp:726: While parsing node number 892 [TopK -> "/TopK_1_output_0"]:

[04/20/2023-18:52:48] [E] [TRT] ModelImporter.cpp:727: --- Begin node ---

[04/20/2023-18:52:48] [E] [TRT] ModelImporter.cpp:728: input: "/Slice_188_output_0"

input: "/Constant_924_output_0"

output: "/TopK_1_output_0"

output: "/TopK_1_output_1"

name: "/TopK_1"

op_type: "TopK"

attribute {

name: "axis"

i: 2

type: INT

}

attribute {

name: "largest"

i: 0

type: INT

}

[04/20/2023-18:52:48] [E] [TRT] ModelImporter.cpp:729: --- End node ---

[04/20/2023-18:52:48] [E] [TRT] ModelImporter.cpp:731: ERROR: onnx2trt_utils.cpp:23 In function notInvalidType:

[8] Found invalid input type of INT32

I am on JetPack 5.0.2, which ships with TensorRT 8.4.1. When I run the conversion there, it fails on the same layer but with a different error message, coming from the same builtin_op_importers.cpp file mentioned above:

[04/20/2023-15:14:51] [E] [TRT] ModelImporter.cpp:773: While parsing node number 892 [TopK -> "/TopK_1_output_0"]:

[04/20/2023-15:14:51] [E] [TRT] ModelImporter.cpp:774: --- Begin node ---

[04/20/2023-15:14:51] [E] [TRT] ModelImporter.cpp:775: input: "/Slice_188_output_0"

input: "/Constant_924_output_0"

output: "/TopK_1_output_0"

output: "/TopK_1_output_1"

name: "/TopK_1"

op_type: "TopK"

attribute {

name: "axis"

i: 2

type: INT

}

attribute {

name: "largest"

i: 0

type: INT

}

[04/20/2023-15:14:51] [E] [TRT] ModelImporter.cpp:776: --- End node ---

[04/20/2023-15:14:51] [E] [TRT] ModelImporter.cpp:778: ERROR: builtin_op_importers.cpp:4424 In function importTopK:

[8] Assertion failed: (tensorPtr->getType() != nvinfer1::DataType::kINT32) && "This version of TensorRT does not support INT32 input for the TopK operator."

Is it because INT32 is still listed as an incompatible type for the operator (i.e. in the invalidTypes argument passed to notInvalidType())? Or is it because the values were originally INT64 and were clamped down to INT32?
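One way to rule out the second possibility is to check whether any of the original INT64 values fall outside the INT32 range. The sketch below is plain Python and assumes the parser narrows by clamping to the INT32 limits, which may differ between versions. The helper names are hypothetical. It checks values only and says nothing about the data type restriction itself.

```python
INT32_MIN = -(2 ** 31)
INT32_MAX = 2 ** 31 - 1


def clamp_to_int32(values):
    """Clamp each integer into the INT32 range (assumed narrowing behavior)."""
    return [max(INT32_MIN, min(INT32_MAX, v)) for v in values]


def changed_by_narrowing(values):
    """Return the values that would not survive narrowing unchanged."""
    return [v for v in values if not INT32_MIN <= v <= INT32_MAX]


# Hypothetical INT64 data, e.g. dumped from an initializer.
weights = [0, 42, -5, 2 ** 31, -(2 ** 40)]

print(clamp_to_int32(weights))
print(changed_by_narrowing(weights))
```

If `changed_by_narrowing` returns an empty list, the narrowing is lossless for that data, and the error is more likely caused by the type check than by the values.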

ONNX model file (compressed): fast_acvnet_plus_generalization_opset16_256x320.zip

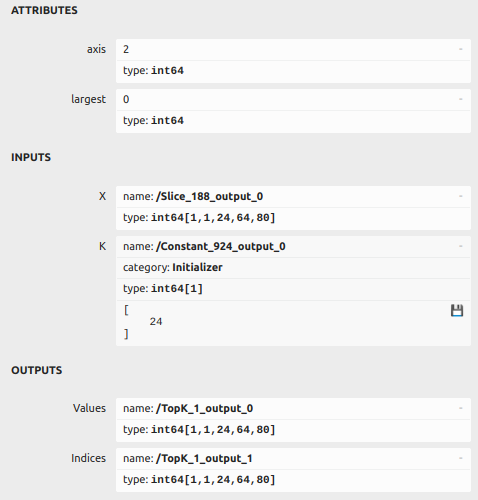

Information on layer in question: