onnx-tensorrt

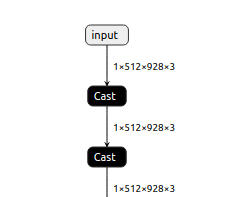

Crash when model with cast

I get a crash when the model casts its input from int8 to float32. Are there any suggestions?

Thanks

(int8->float32 and int8->int32->float32 both cause the crash; int32->float32 is OK.)

Input filename: xxx.onnx

ONNX IR version: 0.0.3

Opset version: 9

Producer name: pytorch

Producer version: 0.4

Domain:

Model version: 0

Doc string:

Parsing model

[2020-02-28 13:28:41 WARNING] onnx-tensorrt/onnx2trt_utils.cpp:232: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

Building TensorRT engine, FP16 available:1

Max batch size: 32

Max workspace size: 1024 MiB

[2020-02-28 13:28:43 BUG] Assertion failed: regionRanges != nullptr

../builder/cudnnBuilder2.cpp:1884

Aborting...

[2020-02-28 13:28:43 ERROR] ../builder/cudnnBuilder2.cpp (1884) - Assertion Error in makePaddedScale: 0 (regionRanges != nullptr)

terminate called after throwing an instance of 'std::runtime_error'

what(): Failed to create object

Aborted (core dumped)

@BobDLA Have you found a solution to this? I encountered exactly the same error when casting int8 to float32.

I tried both TensorRT 6 and 7, and neither worked for me.

I have the same problem.

Same problem.

I have the same problem. What puzzles me is that trtexec successfully runs inference with the same ONNX file! The verbose output is identical for my code (adapted from sampleOnnxMNIST) and for trtexec, up to the error mentioned above.

Well, actually, trtexec was able to run inference only because it uses fp32 inputs by default.

When forced to use int8 inputs via the --inputIOFormats parameter, it stops with the exact same error.

Same here

What's the use case for casting INT8->FP32 in this way outside of quantization? From the OP, it looks like the input is just being cast between datatypes, so the model can be simplified by making the input type the final cast datatype to begin with.

@kevinch-nv In my case, the goal was to reduce the total processing latency by sending 8-bit data instead of fp32 to the GPU.

Same here

Unfortunately, we currently do not support int8->float32 casting outside of quantization. You will have to use full floating point precision to provide the data to TensorRT.

This seems to be a long-standing issue that hasn't been resolved yet. I found a workaround last year and hope it helps.

I wrote a CUDA kernel that accepts int8 data from the CPU and casts it to float on the GPU, then proceeds with TensorRT as usual.

Anyway, I think this would be a useful enhancement for cases where copy latency is really a concern. TensorRT is a powerful tool, but think about how frustrating it is when TensorRT inference takes only around 10 ms while the memory copy takes another 10 ms. Using int8 as input without full quantization can be an acceptable trade-off.
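For readers who want to try the workaround described above, here is a minimal sketch of what such a kernel might look like. The original kernel was not posted, so everything here is an assumption: the names `int8_to_float_kernel` and `upload_int8_as_float` are hypothetical, and the sketch assumes the engine is built with an ordinary FP32 input whose device buffer is `d_out`.

```cuda
#include <cuda_runtime.h>
#include <cstddef>
#include <cstdint>

// Element-wise cast from int8 to float.
// A grid-stride loop lets any launch configuration cover all n elements.
__global__ void int8_to_float_kernel(const int8_t* in, float* out, size_t n) {
    const size_t stride = static_cast<size_t>(blockDim.x) * gridDim.x;
    for (size_t i = static_cast<size_t>(blockIdx.x) * blockDim.x + threadIdx.x;
         i < n; i += stride) {
        out[i] = static_cast<float>(in[i]);
    }
}

// Hypothetical helper (not part of TensorRT or onnx-tensorrt).
// Copies n int8 values to the GPU, so 1 byte per element crosses the bus
// instead of 4, then expands them into d_out on the device.
//   h_in      : host int8 data
//   d_staging : device buffer of at least n bytes (caller-allocated)
//   d_out     : device float buffer of at least n elements, assumed to be
//               the buffer bound as the engine's FP32 input
cudaError_t upload_int8_as_float(const int8_t* h_in, int8_t* d_staging,
                                 float* d_out, size_t n, cudaStream_t stream) {
    if (n == 0) return cudaSuccess;

    cudaError_t err = cudaMemcpyAsync(d_staging, h_in, n * sizeof(int8_t),
                                      cudaMemcpyHostToDevice, stream);
    if (err != cudaSuccess) return err;

    const int threads = 256;
    const size_t needed = (n + threads - 1) / threads;
    // Cap the grid size; the grid-stride loop handles the remainder.
    const int blocks = static_cast<int>(needed < 65535 ? needed : 65535);

    int8_to_float_kernel<<<blocks, threads, 0, stream>>>(d_staging, d_out, n);
    return cudaGetLastError();  // reports launch errors only
}
```

Enqueuing the copy, the cast, and the inference on the same stream keeps them ordered without extra synchronization. If the model also normalizes its input, that scaling could probably be fused into the same kernel, though that goes beyond what was described in this thread.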

It's 2022 now, and still no progress?

How about int8->int8?