gazelle_plugin

Native SQL Engine plugin for Spark SQL with vectorized SIMD optimizations.

**Describe the bug** Dynamic partition pruning (DPP) is broken; TPC-DS Q1 is an example. **To Reproduce** Run TPC-DS Q1. **Expected behavior** The DPP feature is fixed. **Additional context** Add any other context...

## What changes were proposed in this pull request? Add developer, license, and plugin information for publishing the jar. ## How was this patch tested? Passed locally.

**Describe the bug** Repartitioning changes the null count:
`dfw = spark.read.format("arrow").load("/ss_customer_sk.parquet")`
`dfw.where("ss_customer_sk is null").count()` returns 129583501
`dfw.repartition(144).where("ss_customer_sk is null").count()` returns 64804994
**To Reproduce** **Expected behavior** The same number as without repartition...

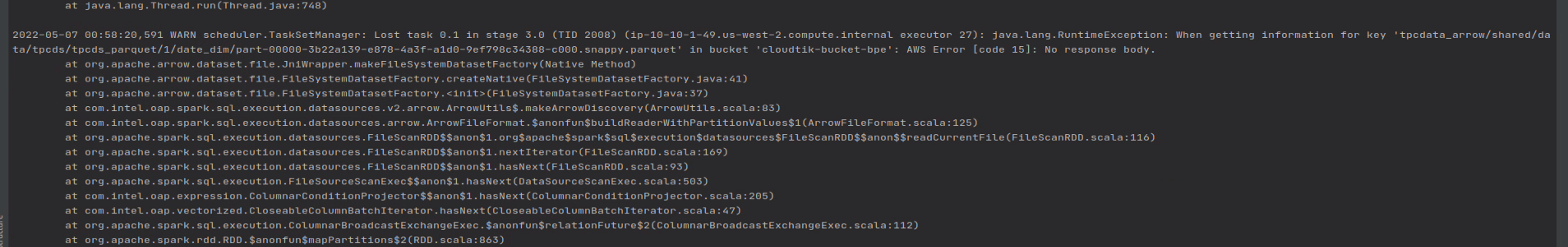

**Describe the bug** We are trying to use gazelle on an AWS EC2 instance with S3 storage. It encounters a java.lang.RuntimeException when getting information for key 'tpcdata_arrow/shared/data/tpcds/tpcds_parquet/1/date_dim/part-00000-3b22a139-e878-4a3f-a1d0-9ef798c34388-c000.snappy.parquet' in bucket 'cloudtik-****':...

**Describe the bug** Calling get_physical_plan in gazelle_analysis raises "error: unbalanced parenthesis at position 1". **To Reproduce** Call the get_physical_plan function from gazelle_analysis. **Expected...

**Describe the bug** The current framework cannot share the following three jar files: 1. spark-arrow-datasource-standard, 2. spark-columnar-core, 3. spark-sql-columnar-shims-common. I would suggest that we add the Spark version to the artifact ID and create...

We will need to support multiple Spark versions on a single code base. The ideal solution is to use Shims to separate version specific code into a Shim layer specific...
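As a rough illustration of the shim idea described above, the following Python sketch keeps version-specific behaviour behind one interface and picks an implementation from the running Spark version. It is only a sketch under stated assumptions: `SparkShim`, `Spark31Shim`, `Spark32Shim`, `load_shim`, and the version keys are hypothetical names chosen for this example, not the plugin's actual classes or supported versions.

```python
from abc import ABC, abstractmethod


class SparkShim(ABC):
    """Common interface; version-specific code lives only in subclasses (hypothetical)."""

    @abstractmethod
    def shim_name(self) -> str:
        """Return an identifier for the Spark line this shim targets."""


class Spark31Shim(SparkShim):
    def shim_name(self) -> str:
        return "spark-3.1"


class Spark32Shim(SparkShim):
    def shim_name(self) -> str:
        return "spark-3.2"


# Registry keyed by (major, minor); the entries here are illustrative only.
_SHIMS = {
    (3, 1): Spark31Shim,
    (3, 2): Spark32Shim,
}


def load_shim(spark_version: str) -> SparkShim:
    """Select the shim matching the major.minor part of a version string."""
    parts = spark_version.split(".")
    key = (int(parts[0]), int(parts[1]))
    try:
        return _SHIMS[key]()
    except KeyError:
        raise ValueError(f"no shim registered for Spark {spark_version}") from None
```

Shared code would call `load_shim(version)` once and use only the `SparkShim` interface afterwards, so supporting another Spark release means adding one subclass and one registry entry.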

**Describe the bug** TPC-DS Q2, columnar exchange is not reused. ``` == Physical Plan == AdaptiveSparkPlan (95) +- == Final Plan == ArrowColumnarToRow (56) +- ColumnarSort (55) +- ColumnarCustomShuffleReader (54)...

**Describe the bug** [ERROR] [Error] : Symbol 'type org.apache.log4j.AppenderSkeleton' is missing from the classpath. This symbol is required by 'class org.apache.spark.SparkFunSuite.LogAppender'. Make sure that type AppenderSkeleton is in your classpath...

**Is your feature request related to a problem or challenge? Please describe what you are trying to do.** I have tried to use JDK 11 to compile and run the project. However...