notebooks

poker-dvs_classifier - error

I get this error in cell [11] when trying to run poker-dvs_classifier.ipynb: only Tensors of floating point and complex dtype can require gradients.

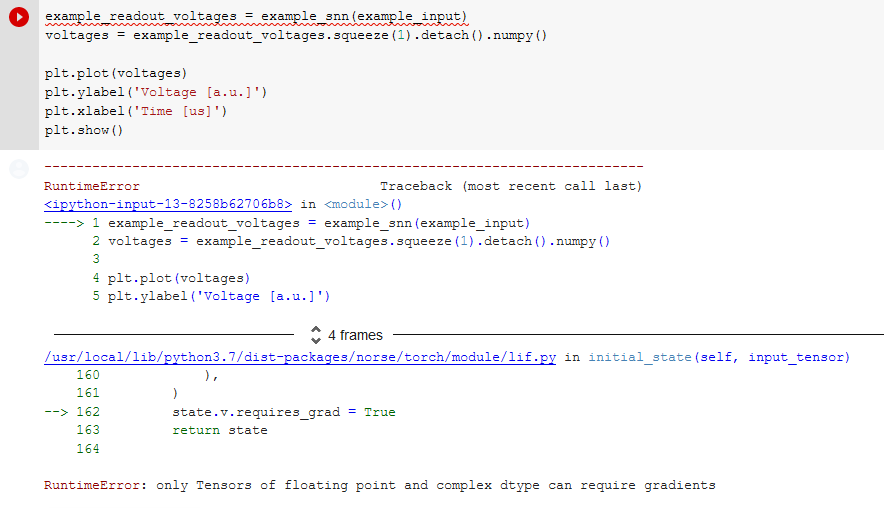

I get the same error when running the unmodified notebook in Google Colab. Specifically, the line "example_readout_voltages = example_snn(example_input)" fails with the following error in the method initial_state of the class LIFRecurrentCell:

    RuntimeError                              Traceback (most recent call last)

    [4 frames]

    /usr/local/lib/python3.7/dist-packages/norse/torch/module/lif.py in initial_state(self, input_tensor)
        160             ),
        161         )
    --> 162         state.v.requires_grad = True
        163         return state
        164

    RuntimeError: only Tensors of floating point and complex dtype can require gradients

Based on a quick online search, I figured it might be related to the PyTorch version, as Google Colab currently uses PyTorch 1.9, which was released around the same time this notebook was last updated. I uninstalled PyTorch 1.9 and installed PyTorch 1.8.0 instead, but it threw the same error.
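For reference, the error can be reproduced without Norse or Tonic. The snippet below is a minimal sketch, assuming only that PyTorch is installed; the tensor `v` is a stand-in for the state voltage and is not taken from the notebook.

```python
import torch

# Integer (Long) tensor, standing in for a state built from integer event data.
v = torch.zeros(3, dtype=torch.long)

try:
    v.requires_grad = True  # same kind of assignment as in the traceback
    message = None
except RuntimeError as err:
    message = str(err)

print(message)

# Casting to a floating-point dtype first makes the assignment legal.
v_float = v.float()
v_float.requires_grad = True
```

The exact wording of the message may differ between PyTorch versions, but the assignment fails for any integer dtype.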

Hello, this was caused by the sparse tensor not having float values. I fixed it in https://github.com/neuromorphs/tonic/commit/85b6968ae499c0d5220f1892347c946b64b1d7ff. Feel free to ping me directly (@biphasic) for issues regarding tonic, so that I can see them earlier.

I'm grateful for your quick reaction @biphasic, but I wonder whether this could also be fixed on the Norse side.

As it appears to me, the problem is that the Long-typed voltage can't be used to calculate gradients. That voltage tensor is right now naïvely copied in Norse, so the type is simply inherited. Meaning, if the input tensor is a Long, the voltage tensor becomes a Long.
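To illustrate the dtype inheritance described above, here is a hedged sketch. Both helper functions are hypothetical and are not Norse's actual code; they only contrast a state that copies the input dtype with one that falls back to a floating-point dtype.

```python
import torch


def initial_voltage_inherited(input_tensor):
    # Hypothetical: the state simply copies the input's dtype.
    return torch.zeros_like(input_tensor)


def initial_voltage_float(input_tensor):
    # Hypothetical fix: keep floating-point dtypes, otherwise use the default float dtype.
    if input_tensor.is_floating_point():
        dtype = input_tensor.dtype
    else:
        dtype = torch.get_default_dtype()
    return torch.zeros(input_tensor.shape, dtype=dtype, device=input_tensor.device)


events = torch.randint(0, 2, (4, 8))  # integer input, dtype is torch.int64 (Long)

v_bad = initial_voltage_inherited(events)  # Long, cannot require gradients
v_ok = initial_voltage_float(events)       # float, can require gradients
v_ok.requires_grad = True
```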

We might want to keep event-based tensors as natural numbers rather than floats to avoid unnecessary overhead such as extra memory use and rounding errors. That would require a bit of work on the Norse side to force conversions into floating point numbers, but that's negligible and can be done over the weekend.

I added a Norse issue for this here: https://github.com/norse/norse/issues/228

I think it might make sense to have a compact representation as bit vectors, but if we want to use the standard PyTorch infrastructure, we should probably stick to float values (or half-precision floats) for now.
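As a rough point of comparison for the storage trade-off, the sketch below measures how many bytes a dense tensor of each dtype occupies. The tensor length `n` is an arbitrary choice for illustration. Note that `torch.bool` stores one byte per element, not one bit, so a true bit-vector representation would need custom packing.

```python
import torch

n = 1000  # arbitrary number of elements for illustration
sizes = {}
for dtype in (torch.bool, torch.float16, torch.float32, torch.int64):
    t = torch.zeros(n, dtype=dtype)
    # Total bytes used by the tensor's elements.
    sizes[dtype] = t.element_size() * t.nelement()

for dtype, n_bytes in sizes.items():
    print(dtype, n_bytes)
```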

I definitely see your point @Jegp, but I think I agree with @cpehle here. Let's make our lives easy for the moment and if we need to optimize, we can do that later.

That's a valid point, and I agree we should keep Tonic out of it for now.

I don't, though, believe the two things are mutually exclusive. We can use the standard PyTorch infrastructure with boolean vectors. We just need to ensure that the states are floating point, so the gradients can flow.
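A minimal sketch of boolean inputs combined with floating-point states follows. The leaky integrator, the `decay` constant, and the tensor shapes are assumptions made for illustration and do not reflect Norse's neuron models; the point is only that the cast happens where the spikes meet the weights.

```python
import torch

torch.manual_seed(0)

# Boolean spike train with shape (time steps, inputs).
spikes = torch.rand(10, 5) < 0.3
weights = torch.randn(3, 5, requires_grad=True)

v = torch.zeros(3)  # floating-point state
decay = 0.9         # hypothetical leak factor

for z in spikes:
    # Cast the boolean spikes to the weight dtype only at the point of use.
    current = torch.nn.functional.linear(z.to(weights.dtype), weights)
    v = decay * v + current

loss = v.sum()
loss.backward()  # gradients flow to the weights through the float state
```

The input stays boolean in memory, while everything that takes part in the backward pass is floating point.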

I agree that premature optimization is the root of all evil (thanks Knuth), but, unfortunately, I'm running into some real performance problems. We're having a hackathon over the weekend to dive into this a bit, so I'll try to explore some options and perhaps benchmark the significance of the datatype. You're of course more than welcome to join us @biphasic ;-)

sounds like a great plan to me :)

https://github.com/norse/notebooks/pull/18 shows how to use binned (integer) frames, which speeds up the whole thing by a lot.
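For readers unfamiliar with binned frames, the following is a rough sketch of the general idea, not the code from the pull request. The function `bin_events` and its arguments are hypothetical; it counts events per time bin and pixel, and the result is cast to float only before it would be fed to a network.

```python
import torch


def bin_events(t, x, y, n_bins, height, width):
    # t, x, y: 1-D Long tensors with one entry per event (hypothetical layout).
    t0, t1 = t.min(), t.max()
    span = torch.clamp(t1 - t0, min=1)
    # Map each timestamp to a bin index in [0, n_bins - 1].
    bin_idx = torch.div((t - t0) * n_bins, span, rounding_mode="floor")
    bin_idx = torch.clamp(bin_idx, max=n_bins - 1)
    # Flatten (bin, y, x) into a single index and count the events per cell.
    flat = (bin_idx * height + y) * width + x
    counts = torch.bincount(flat, minlength=n_bins * height * width)
    return counts.reshape(n_bins, height, width)


t = torch.tensor([0, 1, 2, 3])
x = torch.tensor([0, 1, 0, 1])
y = torch.tensor([0, 0, 1, 1])

frames = bin_events(t, x, y, n_bins=2, height=2, width=2)  # integer event counts
network_input = frames.float()  # cast to float before feeding a network
```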