SHARK

512x512 Causes Black Images or Memory Error on AMD RX580

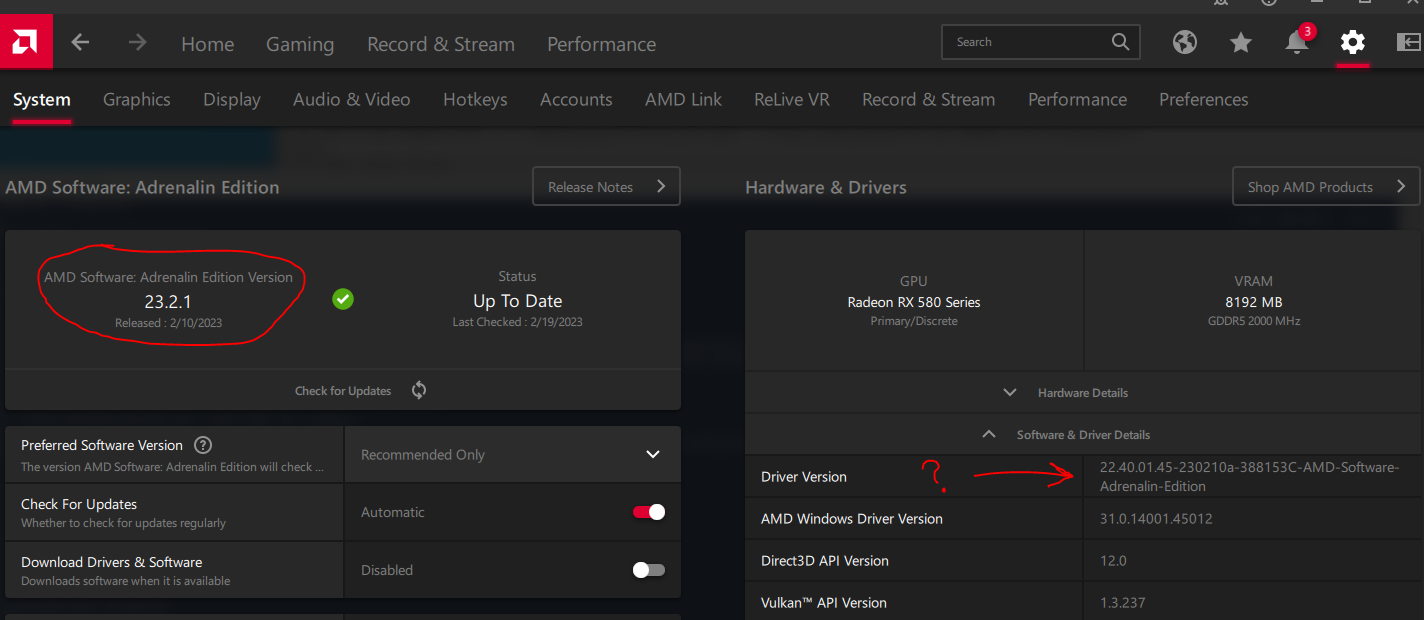

- OS: Windows 10 Pro x64 (Version 10.0.19044 Build 19044)
- RAM: 64 GB
- CPU: Intel i7-10700K
- GPU: Radeon RX580 (8 GB VRAM)
- Shark version: v539 (used the Quick Start .exe from https://github.com/nod-ai/SHARK)
- Driver: Adrenalin Edition Version 23.2.1 (released 2/10/2023). Under the Software and Driver Details dropdown in the AMD app, the driver version is instead listed as "22.40.01.45-230210a-388153C-AMD-Software-Adrenalin-Edition" (see the attached image).

Problem: I'm a new user to Shark and Stable Diffusion in general, and tried all the options I've seen to fix this but I'm still getting either black images being generated, or I'm getting memory errors (RESOURCE_EXHAUSTED; VK_ERROR_OUT_OF_DEVICE_MEMORY).

Before listing what I've tried, I want to mention that even after Shark gives me an error or generates a black image, my VRAM usage stays in the 7,000 MB range (according to the AMD app > Performance > VRAM Metrics). It looks as if Shark isn't releasing the VRAM for later generations and is holding on to it instead, which could be what leads to the memory errors. VRAM usage doesn't go down until I press Ctrl+C in PowerShell to exit Shark. I'm not sure whether this is how it's supposed to work.

What I've tried so far, and what I typed into PowerShell:

- Setting the system-wide environment variable AMD_ENABLE_LLPC to 0

- Running Shark from PowerShell with --no-use_tuned:

  & "D:\AI\SHARK\v539\shark_sd_20230216_539.exe" --local_tank_cache="D:\AI\SHARK\v539\localcache\" --no-use_tuned

- Running Shark with --use_base_vae --vulkan_large_heap_block_size=0:

  & "D:\AI\SHARK\v539\shark_sd_20230216_539.exe" --local_tank_cache="D:\AI\SHARK\v539\localcache\" --use_base_vae --vulkan_large_heap_block_size=0

- Running Shark with all three:

  & "D:\AI\SHARK\v539\shark_sd_20230216_539.exe" --local_tank_cache="D:\AI\SHARK\v539\localcache\" --no-use_tuned --use_base_vae --vulkan_large_heap_block_size=0

I generate with the default options and prompt in the localhost:8080 GUI. The device is listed as "Radeon RX580 Series => vulkan://0".

I have tried the following models: stabilityai/stable-diffusion-2-1-base, stabilityai/stable-diffusion-2-1, and CompVis/stable-diffusion-v1-4.

Lower resolution (384x384) works: Out of curiosity, I reduced the Height and Width to 384x384 and kept everything else at the defaults, and it finally worked. It generated an image (reporting around 2.66s/it while generating), and I was able to generate a few images in a row.

But when I raised it back to 512x512, it would either give errors or produce only black images (also at around 2.50s/it).

Also of note: while I didn't notice any system lag at 384x384 or 512x512, when I tried 416x416 my system started lagging very badly, and it said over 50.29s/it (I'm unsure what those figures mean). Everything slowed down and generation took forever. It did complete, though, and produced a non-black image, but it took ~21 minutes ("Total image generation time: 1294.1131sec").

Errors: Black images don't produce an error; a black image simply appears.

When it does report an error, PowerShell shows:

Traceback (most recent call last):
  File "gradio\routes.py", line 374, in run_predict
  File "gradio\blocks.py", line 1017, in process_api
  File "gradio\blocks.py", line 835, in call_function
  File "anyio\to_thread.py", line 31, in run_sync
  File "anyio\_backends\_asyncio.py", line 937, in run_sync_in_worker_thread
  File "anyio\_backends\_asyncio.py", line 867, in run
  File "apps\stable_diffusion\scripts\txt2img.py", line 144, in txt2img_inf
  File "apps\stable_diffusion\src\pipelines\pipeline_shark_stable_diffusion_txt2img.py", line 128, in generate_images
  File "apps\stable_diffusion\src\pipelines\pipeline_shark_stable_diffusion_utils.py", line 95, in decode_latents
  File "shark\shark_inference.py", line 138, in __call__
  File "shark\shark_runner.py", line 93, in run
  File "shark\iree_utils\compile_utils.py", line 381, in get_results
  File "iree\runtime\function.py", line 130, in __call__
  File "iree\runtime\function.py", line 154, in _invoke
RuntimeError: Error invoking function: D:\a\SHARK-Runtime\SHARK-Runtime\c\runtime\src\iree\hal\drivers\vulkan\vma_allocator.cc:693: RESOURCE_EXHAUSTED; VK_ERROR_OUT_OF_DEVICE_MEMORY; vmaCreateBuffer; while invoking native function hal.device.queue.alloca; while calling import;
[ 1] native hal.device.queue.alloca:0 -
[ 0] bytecode module.forward:3000 [
    <eval_with_key>.13:7:18,
    <eval_with_key>.13:10:20,
    <eval_with_key>.13:12:27,
    <eval_with_key>.13:13:15,
    <eval_with_key>.13:21:10,
    <eval_with_key>.13:40:20,
    <eval_with_key>.13:37:11,
    <eval_with_key>.13:42:29,
    <eval_with_key>.13:43:17,
    <eval_with_key>.13:51:12,
    <eval_with_key>.13:67:13,
    <eval_with_key>.13:70:20,
    <eval_with_key>.13:71:12,
    <eval_with_key>.13:74:29,
    <eval_with_key>.13:75:17,
    <eval_with_key>.13:83:12,
    <eval_with_key>.13:94:12,
    <eval_with_key>.13:100:16,
    <eval_with_key>.13:117:12,
    <eval_with_key>.13:142:18,
    <eval_with_key>.13:110:12,
    <eval_with_key>.13:143:14,
    <eval_with_key>.13:144:15,
    <eval_with_key>.13:130:12,
    <eval_with_key>.13:146:12,
    <eval_with_key>.13:154:12

(This continues for many lines.....)

Other errors I noticed in PowerShell:

RuntimeError: Error registering modules: D:\a\SHARK-Runtime\SHARK-Runtime\c\runtime\src\iree\hal\drivers\vulkan\native_executable.cc:157: INTERNAL; VK_ERROR_DEVICE_LOST; while invoking native function hal.executable.create; while calling import;
WARNING: [Loader Message] Code 0 : windows_read_data_files_in_registry: Registry lookup failed to get layer manifest files.
Tuned models are currently not supported for this setting.
Using target triple -iree-vulkan-target-triple=rdna2-unknown-windows from command line args

Screenshot of AMD driver:

Please set the PowerShell environment variable $env:AMD_ENABLE_LLPC='1' and run from the terminal.

Thank you, that mostly worked, but with some issues.

Short Version: Works up to 768x768, but I can't change Image size, Models, or switch between Text/Image-to-Image tabs without it causing an error.

Long Version:

After setting "$Env:AMD_ENABLE_LLPC=1" in PowerShell and running again with "--no-use_tuned --use_base_vae --vulkan_large_heap_block_size=0", I was able to generate a 512x512 image. I generated around five in a row.

Then I tried a 512x768 and it gave me a memory error. After that, it wouldn't let me generate 512x512 images again, but it would let me do 384x384.

Then I pressed Ctrl+C in PowerShell to quit Shark and restarted it with the same command line as above. This time I tried to generate a 512x768 first, and it worked. I generated around five in a row, although it was lagging my system. Then I switched back to 512x512, and it stopped working and gave a memory error.

As a final test, I quit Shark again and restarted it. This time I used 768x768. It worked, but it was slow and lagged my system. 784x784 did not work, though, and gave a different error.

Also, changing only the Models dropdown (but keeping the same image size) causes an error and I need to quit/restart Shark.

So it seems that whatever settings I use for my first generation work, but I can't change them after generating my first image without causing errors.

Definitely a step in the right direction though.

In general, larger image sizes are going to take longer and use more resources, so this is largely expected.
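To give a rough sense of why resource use grows faster than the image side length, here is a small sketch comparing the sizes tried in this thread against 384x384. It is not based on SHARK internals and makes two assumptions: Stable Diffusion latents are 8x smaller per side than the image (true for the SD v1/v2 VAE), and a naive self-attention layer over the latent stores a full tokens x tokens score matrix. The outputs are element-count ratios, not measured VRAM figures; the names `latent_tokens` and `scaling_table` are purely illustrative.

```python
# Rough scaling sketch for the image sizes tried in this thread.
# Assumptions (illustrative, not SHARK/IREE internals):
#   - the SD VAE downsamples each side by 8 (512x512 image -> 64x64 latent)
#   - naive self-attention over the latent stores a tokens x tokens score matrix

VAE_DOWNSAMPLE = 8

SIZES = [(384, 384), (416, 416), (512, 512), (512, 768), (768, 768), (784, 784)]


def latent_tokens(width: int, height: int) -> int:
    """Number of spatial positions in the latent (the attention sequence length)."""
    return (width // VAE_DOWNSAMPLE) * (height // VAE_DOWNSAMPLE)


def scaling_table(sizes, base=(384, 384)):
    """Return (label, pixel ratio, attention-score ratio) for each size, relative to `base`."""
    base_pixels = base[0] * base[1]
    base_attn = latent_tokens(*base) ** 2
    rows = []
    for w, h in sizes:
        pixel_ratio = (w * h) / base_pixels
        # Score-matrix elements grow with the square of the token count.
        attn_ratio = latent_tokens(w, h) ** 2 / base_attn
        rows.append((f"{w}x{h}", pixel_ratio, attn_ratio))
    return rows


if __name__ == "__main__":
    for label, px, attn in scaling_table(SIZES):
        print(f"{label:>8}  pixels x{px:5.2f}  attention scores x{attn:6.2f}")
```

Under these assumptions, going from 384x384 to 512x512 is about 1.78x the pixels but about 3.16x the attention-score elements, and 768x768 is 4x the pixels and 16x the attention-score elements. How much of that actually lands in VRAM at once depends on how SHARK/IREE compiles and schedules the model.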

A fix went in recently that should allow for switching from img2img back to txt2img.

I'm not able to reproduce the error on changing sizes, but it does take some time to recompile. Could you post your error messages?