nextflow

resume: cached results sometimes are processed again

Bug report

Re-running the pipeline several times with the -resume option causes some cached tasks to be processed again.

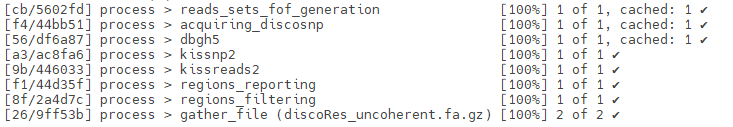

For example, once the pipeline has finished, I change something in the LAST task. After re-running the pipeline with the -resume option, I see a nicely cached list:

[71/bf7732] process > bwa_rmdup (g37) [100%] 2 of 2, cached: 2 ✔

[61/65d78a] process > hap_call (merged_flank100.27) [100%] 60 of 60, cached: 60 ✔

[c5/cd472e] process > gath_hcbams (pat1) [100%] 2 of 2, cached: 2 ✔

[ae/829f44] process > gath_gvcfs_gatk (pat1) [100%] 2 of 2, cached: 2 ✔

[ee/26c340] process > gvcf_to_vcf (pat1) [100%] 2 of 2, cached: 2 ✔

Then I change something in the last task again, and again, resuming every time, and occasionally, at random, the pipeline starts recomputing from an earlier step, e.g. the hap_call process.

I do use .groupTuple(), so I guess the bug is similar to the one reported here: https://groups.google.com/g/nextflow/c/zA9f4bfbwwE/m/fSL4k4HeBAAJ?utm_medium=email&utm_source=footer. However, I don't know the status of that issue. Will it be fixed?

Environment

N E X T F L O W version 21.10.5 build 5658 created 08-12-2021 14:39 UTC (15:39 CEST)

openjdk version "1.8.0_282" OpenJDK Runtime Environment (Zulu 8.52.0.23-CA-linux64) (build 1.8.0_282-b08) OpenJDK 64-Bit Server VM (Zulu 8.52.0.23-CA-linux64) (build 25.282-b08, mixed mode)

Ubuntu 20.04.3 LTS

GNU bash, version 5.0.17(1)-release (x86_64-pc-linux-gnu)

I've experienced this too. Many times, in fact, and it's annoying. If any of the preceding processes that are re-run, apparently unnecessarily, take a significant time to complete then many hours can be wasted. If there is a solution to this it would be most appreciated.

I also have an issue with groupTuple: it sometimes mixes files from different tuples, which causes an error in the next process.

Hi @thedam @bobamess , could either of you post a minimal example that reproduces this bug? It would be good if we can pinpoint groupTuple as the culprit.

@nvnieuwk your issue seems different enough that it might be good to submit an issue for it, also with a minimal example.

Thanks for the response. It hasn't happened to me again since I posted here, and I don't really know how to reproduce it. If I encounter it again, I'll open an issue for it.

Hi @bentsherman, sorry, but it's hard to reproduce as it happens randomly.

My code is a simple scatter-gather pipeline:

hap_call(BamCh, BedSplits, BamCh.last()) //BamCh.last() = we are sure all bams are created

HcBams = hap_call.out.HCbams.groupTuple()

HcGvcfs = hap_call.out.GVCFsParts.groupTuple()

gath_hcbams(HcBams)

gath_gvcfs_gatk(HcGvcfs)

gvcf_to_vcf(gath_gvcfs_gatk.out.RawGvcfs)

RawVCFs = gvcf_to_vcf.out.RawVCF.groupTuple().join(BamCh)

filter_raw_gatk(RawVCFs)

////// FreeBayes

free_call(BamCh, BedSplits, BamCh.last())

FbRawVcfs = free_call.out.RawVCFsParts.groupTuple()

free_gather_vcfs(FbRawVcfs)

////// Merge VCFs

FreebayesFinalVCFgz = free_gather_vcfs.out.FreebayesFinalVCFgz

GatkFinalVCFgz = filter_raw_gatk.out.GatkFinalVCFgz

MergedVCFgz = GatkFinalVCFgz.join(FreebayesFinalVCFgz, remainder: true)

merge_final_vcfs(MergedVCFgz)

Now, inside the merge_final_vcfs script, just add echo "aaa" so that the process is executed again after re-running with -resume.

If everything runs correctly, change merge_final_vcfs again (e.g. remove echo "aaa") and re-run the pipeline.

After doing this a few times, you may see the pipeline start again from hap_call, free_call, etc.

I don't know how to report or describe it more specifically, or which parts of the log files to copy here...

Cheers Damian
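One thing that may be worth ruling out in a pipeline like the one above: groupTuple collects items in the order they arrive, and that order can differ between runs when upstream tasks finish in a different order. Whether this is the cause of the behaviour reported here is not confirmed anywhere in this thread. A minimal sketch, using made-up sample and file names in place of the real hap_call outputs, of making the grouped order deterministic with the sort option:

```nextflow
nextflow.enable.dsl = 2

workflow {
    // Hypothetical (sample, part) pairs standing in for hap_call.out.GVCFsParts;
    // deliberately listed out of order.
    parts = Channel.of(
        ['pat1', 'part_b.g.vcf'],
        ['pat2', 'part_a.g.vcf'],
        ['pat1', 'part_a.g.vcf']
    )

    // sort: true orders the grouped values, so each emitted tuple has the
    // same content regardless of the order in which the items arrived.
    parts.groupTuple(sort: true).view()
}
```

In the pipeline above, this would amount to writing hap_call.out.GVCFsParts.groupTuple(sort: true), and likewise for the other groupTuple calls.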

Hi

I too do not know how to reproduce this. Not sure exactly when I last saw this, but it would have been on an older version than the latest Nextflow.

When it does happen, it’s as though the cache is incomplete.

Is the cache solely dependent on the contents of the workDir? Or does it also depend on .nextflow/cache/* ?

I suspect the latter.

I’m sure all of the workDir directories and their contents are there, but could there sometimes be a delay in the cache being written to .nextflow/cache/* when errors occur, so that some of it is missing?

I’ve never thought to check this and am not sure I would know what to look for.

Or, is it that some value or parameter produced by an earlier process, or channel, only exists in memory while the workflow is running, and so the workflow resumes at that earlier process, or channel, to re-create it?

Sent in reply to Ben Sherman's comment of 21 July 2022, 23:36, on nextflow-io/nextflow issue #3005 (resume: cached results sometimes are processed again).


@thedam @bobamess - a quick request: could you please add the -dump-hashes option to the Nextflow command line for

- a fresh run

- a resumed run

This can give us more insight into why this might be happening.

Hey, I finally caught this issue with -dump-hashes.

However, I don't want to post my full pipeline here. I can send you the dumps privately. Let me know how I can send you the files.

Cheers

Hi @thedam ,

Sure, feel free to ping me on the Nextflow slack channel (abhi18av).

Hi,

I think I hit a similar issue while experimenting. If required, I have a pipeline in which the same processes consistently fail to use their cache, even on a test dataset.

I'm currently checking whether the problem is on my side, but so far I have found nothing (still, I may be dumb and missing something). If needed, I could send the pipeline + test data (privately).

Hi @AxVE ,

I'm currently working on a content piece which can be used as guidance for debugging these issues better and it should be out this week/early next week.

In the meantime, I encourage you to refer to these blog posts:

- https://www.nextflow.io/blog/2019/demystifying-nextflow-resume.html

- https://www.nextflow.io/blog/2019/troubleshooting-nextflow-resume.html

@abhi18av

I set the caching mode to lenient and it works. I had already tried this, but I had not run the pipeline twice to confirm it was working... (silly me).

So thanks !
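For anyone wanting to try the same workaround, lenient caching can be set with the cache process directive. A minimal sketch for nextflow.config, assuming the setting should apply to every process in the pipeline (the comment above does not say how it was scoped):

```nextflow
// nextflow.config
// Assumption: lenient caching is applied to all processes. Set the cache
// directive inside individual processes instead if only some are affected.
process {
    cache = 'lenient'
}
```

In lenient mode the cache key is built from each input file's path and size only, ignoring the last-modified timestamp, which is why it can help on shared file systems that report inconsistent timestamps.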

This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.