nats-server

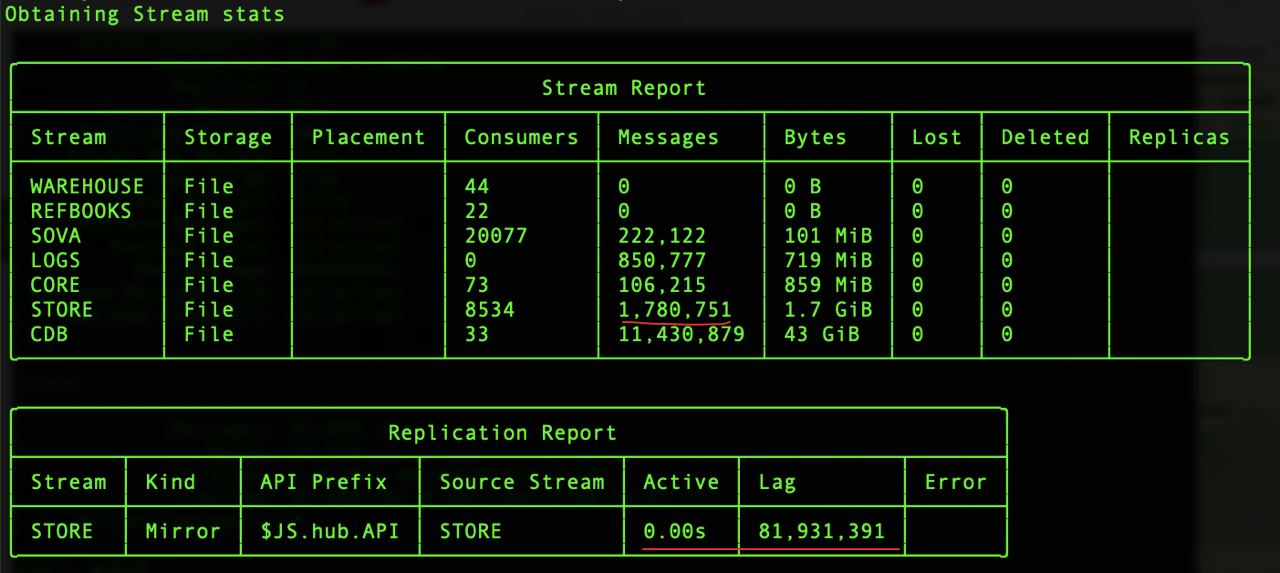

nats str report: the replication report shows lag != 0

How can I speed up writes to a configured mirror on a leaf node? In our case, data arrives in the hub's STORE stream faster than it appears in the leaf mirror; the mirror is much slower. What are the recommendations for setting up mirrors on leaf nodes?

To me it looks like the mirror is not active at all, so no mirroring is happening within your setup.

You would need to share your setup configs so we can double-check for a misconfiguration.

I also noticed a very large set of consumers for SOVA and STORE. What is the role of these consumers? Consumers are not as lightweight as NATS core subscriptions.

The mirror is active, but replication is very slow when there are many consumers. With only a few consumers, replication is very fast.

Config of my mirror stream on leaf1:

    nats str info STORE --js-domain=leaf1

    Information for Stream STORE created 2022-04-23T11:52:03+03:00

    Configuration:

      Acknowledgements: true
      Retention: File - Limits
      Replicas: 1
      Discard Policy: Old
      Duplicate Window: 2m0s
      Allows Msg Delete: true
      Allows Purge: true
      Allows Rollups: false
      Maximum Messages: unlimited
      Maximum Bytes: 93 GiB
      Maximum Age: 30d0h0m0s
      Maximum Message Size: unlimited
      Maximum Consumers: unlimited
      Sources: STORE, API Prefix: $JS.hub.API, Delivery Prefix:

    Source Information:

      Stream Name: STORE
      Lag: 195,875,433
      Last Seen: 0.00s
      Ext. API Prefix: $JS.hub.API

    State:

      Messages: 47,099,356
      Bytes: 46 GiB
      FirstSeq: 1 @ 2022-04-23T08:52:04 UTC
      LastSeq: 47,099,356 @ 2022-04-25T15:35:47 UTC
      Active Consumers: 8122
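
For reference, the leaf1 settings above can be written as a JetStream StreamConfig payload. Note that the output lists `Sources: STORE`, so this stream is currently configured as a source rather than a mirror. The Python sketch below builds both variants as plain dictionaries. The JSON field names (`mirror`, `sources`, `external.api`, `external.deliver`, `max_age` and `duplicate_window` in nanoseconds, -1 for "unlimited") follow the JetStream API as we understand it and should be checked against your server version. The function name `leaf_store_config` is hypothetical, and nothing here talks to a server.

```python
import json

NS_PER_SECOND = 1_000_000_000
GIB = 1024 ** 3


def leaf_store_config(upstream: str = "STORE",
                      api_prefix: str = "$JS.hub.API",
                      as_mirror: bool = True) -> dict:
    """Hypothetical helper: leaf1's STORE settings as a StreamConfig-shaped dict."""
    # Cross-domain origin: the hub's JetStream API is reached via its API prefix.
    origin = {"name": upstream, "external": {"api": api_prefix, "deliver": ""}}
    cfg = {
        "name": "STORE",
        "storage": "file",
        "retention": "limits",
        "num_replicas": 1,
        "discard": "old",
        "duplicate_window": 2 * 60 * NS_PER_SECOND,        # 2m0s
        "max_bytes": 93 * GIB,                              # 93 GiB
        "max_age": 30 * 24 * 3600 * NS_PER_SECOND,          # 30d
        "max_msgs": -1,                                     # unlimited
        "max_consumers": -1,                                # unlimited
    }
    if as_mirror:
        cfg["mirror"] = origin        # a mirror copies one stream and has no subjects
    else:
        cfg["sources"] = [origin]     # what leaf1 currently shows ("Sources: STORE ...")
    return cfg


print(json.dumps(leaf_store_config(as_mirror=False), indent=2))
```

Switching `as_mirror` shows the one structural difference between the two setups being compared in this thread: a single `mirror` object versus a `sources` list.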

    nats str info STORE --js-domain=hub

    Information for Stream STORE created 2022-04-16T17:34:39+03:00

    Configuration:

      Subjects: STORE.>
      Acknowledgements: true
      Retention: File - Limits
      Replicas: 1
      Discard Policy: Old
      Duplicate Window: 2m0s
      Allows Msg Delete: true
      Allows Purge: true
      Allows Rollups: false
      Maximum Messages: unlimited
      Maximum Bytes: unlimited
      Maximum Age: 3d0h0m0s
      Maximum Message Size: unlimited
      Maximum Consumers: unlimited

    State:

      Messages: 234,335,999
      Bytes: 216 GiB
      FirstSeq: 8,638,792 @ 2022-04-22T15:35:43 UTC
      LastSeq: 242,974,790 @ 2022-04-24T20:52:13 UTC
      Active Consumers: 0
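
Comparing the two State sections gives a rough idea of throughput. The sketch below computes the average messages per second implied by each stream's first and last sequence numbers and timestamps. These are averages over each stream's stored window, not instantaneous rates, and on leaf1 the sequence numbers belong to the leaf stream itself, not the hub. `avg_rate` is a hypothetical helper.

```python
from datetime import datetime, timezone

TS_FORMAT = "%Y-%m-%dT%H:%M:%S"


def avg_rate(first_seq: int, first_ts: str, last_seq: int, last_ts: str) -> float:
    """Average messages/second between two (sequence, UTC timestamp) points."""
    t0 = datetime.strptime(first_ts, TS_FORMAT).replace(tzinfo=timezone.utc)
    t1 = datetime.strptime(last_ts, TS_FORMAT).replace(tzinfo=timezone.utc)
    return (last_seq - first_seq) / (t1 - t0).total_seconds()


# Values copied from the two "State:" sections above.
leaf_rate = avg_rate(1, "2022-04-23T08:52:04", 47_099_356, "2022-04-25T15:35:47")
hub_rate = avg_rate(8_638_792, "2022-04-22T15:35:43", 242_974_790, "2022-04-24T20:52:13")

print(f"leaf1 STORE: ~{leaf_rate:.0f} msgs/s")
print(f"hub STORE:   ~{hub_rate:.0f} msgs/s")
```

With these numbers, leaf1 averages roughly 239 messages per second against roughly 1,222 per second arriving at the hub, which is consistent with the lag growing rather than shrinking.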

@derekcollison What is the recommended maximum number of consumers per stream (or per leaf node) for my hardware: a 64-core Xeon with 32 GB of RAM? As for why we have so many consumers: 40,000 offices are distributed across 8 leaf nodes, and each office has 2 consumers on its leaf.
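The consumer count described here can be checked with simple arithmetic. Assuming the 40,000 offices are spread evenly over the 8 leaf nodes (an assumption; the actual distribution is not stated), each leaf carries about 10,000 consumers, in the same range as the 8,122 active consumers reported on leaf1. The helper name is hypothetical.

```python
def consumers_per_leaf(offices: int, leafs: int, consumers_per_office: int) -> float:
    """Consumers per leaf node, assuming offices are split evenly across leafs."""
    return offices / leafs * consumers_per_office


per_leaf = consumers_per_leaf(offices=40_000, leafs=8, consumers_per_office=2)
total = per_leaf * 8  # across all 8 leaf nodes

print(f"{per_leaf:.0f} consumers per leaf, {total:.0f} across all leafs")
```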

So you can see the mirror's message count increasing over time?

8122 consumers is quite a lot. Consumers are not like NATS core subscriptions; each one is an active, individual piece of state that uses much more resources.

What are you trying to accomplish with over 8k consumers? You are trying to consume the messages by each of 40,000 offices such that each has their own consumer and state, is that correct?

@derekcollison

Well, yes.

- We see that the mirror is being updated but very slowly

- Yes, each of our 40,000 offices has its own messages and states.

What number of consumers is considered acceptable if we want to increase throughput? Can the mirror speed be increased through settings, for example ack all or ack none between the leaf and the hub?

What is the ingest rate to the main stream that is being mirrored and fanned out? Are all leafnode mirrors exhibiting the same behavior? Are the streams R1 or R>1 and if so what R value? Are the consumers that are attached to the leafnode mirrors durable? Are they push or pull?

Thanks.

What is the ingest rate to the main stream that is being mirrored and fanned out? About 1,000 messages per second.

Are all leafnode mirrors exhibiting the same behavior? On one leaf we configured a source; the others use mirrors. In general the behavior is approximately the same, but the source seems to be faster.

Are the streams R1 or R>1 and if so what R value? We have one stream, flowing in one direction from the hub to leaf1 through leaf8.

Are the consumers that are attached to the leafnode mirrors durable? Yes

Are they push or pull? Pull

Sincerely, andy
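Given an ingest rate of about 1,000 messages per second, whether the lag can ever close depends on how fast the leaf copies messages. The sketch below takes the reported lag of 195,875,433, the stated ingest rate, and an assumed replication rate (an input you would measure; roughly 240 msgs/s is what leaf1's stream state averages out to). It returns the catch-up time, or None when replication is slower than ingest, and compares the backlog, expressed as seconds of hub traffic, with the hub's 3-day Maximum Age: under limits retention, messages older than that are removed from the hub whether or not the leaf has copied them. Function names are hypothetical, and the backlog figure is only an approximation of how far behind the leaf is.

```python
from typing import Optional

HUB_MAX_AGE_S = 3 * 24 * 3600  # hub STORE "Maximum Age: 3d0h0m0s"


def catch_up_seconds(lag: int, ingest_rate: float,
                     replication_rate: float) -> Optional[float]:
    """Seconds until the lag reaches zero, or None if replication never catches up."""
    net = replication_rate - ingest_rate  # msgs/s by which the backlog shrinks
    if net <= 0:
        return None
    return lag / net


def backlog_seconds(lag: int, ingest_rate: float) -> float:
    """Approximate backlog size expressed as seconds of hub ingest."""
    return lag / ingest_rate


lag = 195_875_433  # "Lag" from leaf1's Source Information
print(catch_up_seconds(lag, ingest_rate=1_000, replication_rate=240))  # None
behind = backlog_seconds(lag, ingest_rate=1_000)
print(f"backlog ~{behind / 3600:.1f} h of hub traffic; "
      f"hub max age {HUB_MAX_AGE_S / 3600:.0f} h")
```

Under these inputs the backlog is about 54 hours of traffic, still inside the 72-hour window, but it keeps growing for as long as replication is slower than ingest.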

Is the stream replicated? Meaning R3? Thanks.

@perestoronin Closing since there hasn't been a response for some time. If you can reproduce the issue with the latest version of the server, feel free to re-open.