`ghost_batchnorm` with a single normalization op

🚀 Feature Request

An implementation that made only one call to F.batch_norm or F.layer_norm would be more performant than the one we have now. Before implementing the change, some investigation / benchmarking should be done to verify this on current Composer benchmarks.

Some subtleties here learned from spending a couple hours on #342:

- We need to ensure identical behavior to vanilla batchnorm for inference, so that onnx export doesn't get messed up. This mostly just means calling F.batch_norm instead of F.layer_norm, or any custom sequence of ops. This is easy to do by subclassing

_BatchNormand overridingforward()to start with:

if not self.train:

return super().forward(...)

- Getting consistent behavior with variable batch sizes is tricky. And variable batch sizes happen anytime

drop_last=Falsein the dataloader. You can reshape the inputs in the common case that the layout is NCHW and the ghost batch size is a factor of the batch size, but you need a clean story for handling straggler elements and/or NHWC.

Hi @dblalock! Is this still a valid issue? If so I would love to give it a try.

Yes. Go for it!

Hi @dblalock! I have had a little time to take a look at this issue. I am currently facing the problem with updating optimizer parameters with update_params_in_optimizer in some test cases. I am not familiar with MagicMock but I have done some debugging and in the previous version len(remove_params) and len(added_params) equaled 0 which was expected with the older version. But with the new implementation the memory addresses differ which results in going further into update_params_in_optimizer method. My question is regarding validity of the current tests using mocked optimizer. There is some weird behavior that in this function we have both added_parms and removed params in the optimizer which would suggest that the optimizer itself has some param_groups (I have checked the the test for module_surgery itself, namly test_update_params_in_optimizer and that was the case). But in the test cases for ghost_batchnorm the param_groups for the mocked optimizer is empty which would suggest that it has not been initalized with any parameters, hence I am confused why the added and removed params are also not empty. Could you clarify it for me a bit?

Hi @Bearnardd,

Could you help me understand the exact errors you're seeing and what elicits them? I'm looking at the GhostBatchNorm tests and MagicMock is only used to let us access state.model without having to instantiate a full State object. But it looks like you're referring to some other tests?

Hi @dblalock,

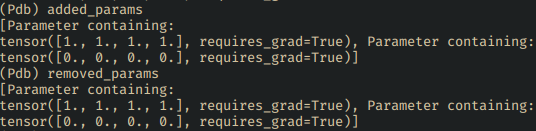

Yeah, sure. So I am talking about the test_normalization_correct_functional. During this test their is a call to the replace_module_classes method which invokes update_params_in_optimizer method during which test case fails with the RuntimeError: Parameter groups [-1, -1] are not in the optimizer. I have done some debugging and the results does not make much sense to me. Let's hop in and debug this method during execution of test_normalization_correct_functional. Firstly we can see that in fact there are some parameters of the optimizer to be removed and added:

So in my understanding if there are some parameters to be removed from the optimizer that implies that this optimizer already has some parameters allocated. But if we take a look at its

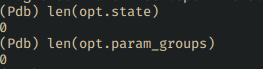

So in my understanding if there are some parameters to be removed from the optimizer that implies that this optimizer already has some parameters allocated. But if we take a look at its param_groups we can see that it is empty:

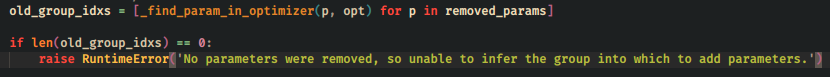

As the result later in the code it triggers the error:

As the result later in the code it triggers the error:

In the previous version of the

In the previous version of the ghost_batchnorm this problem did not occur since there was no removing/adding parameters to the optimizer.

Okay, just spent some time going through all the relevant code and verifying that the test passes with the current GhostBatchNorm implementation. If I'm understanding correctly, something in your branch has changed the behavior such that there's now an optimizer passed into the apply_ghost_batchnorm() function.

It's not surprising that there are params to add and remove--since the module surgery function is just passing in all the params from the original and new batchnorm modules. It's just weird that there's an optimizer object being passed in with no param groups (I don't think pytorch optimizers even let you do this). Is the optimizer object a MagicMock somehow?

I haven't seen your branch (link?) or your full stack trace so it's hard to say much more than that. Also, I'm a bit confused about the error you're encountering since the error message doesn't come from the lines of code in your screenshot; I'm assuming the RuntimeError: Parameter groups... error is the correct one.

Hi @dblalock!

First of all thanks for the detailed answer. Here is the branch. Yes exactly, the optimizer is a MagicMock(<MagicMock name='mock.optimizers.param_groups' id='139701712347920'>). I had the same feelings about optimizer without the param groups. And yeah I pasted the wrong error.

So I checked out your branch and don't seem to be getting any error:

~/code/bearnardd-composer single_op_ghost_batchnorm ❯ python -c 'import composer; print(composer.__file__)'

/Users/davis/code/bearnardd-composer/composer/__init__.py

~/code/bearnardd-composer single_op_ghost_batchnorm ❯ pytest tests/algorithms/test_ghost_batchnorm.py::TestGhostBatchesNormalized::test_normalization_correct_functional

=================================================================== test session starts ====================================================================

platform darwin -- Python 3.8.6, pytest-7.2.1, pluggy-0.13.1

rootdir: /Users/davis/code/bearnardd-composer, configfile: pyproject.toml

plugins: pytest_codeblocks-0.16.1, anyio-3.6.1, httpserver-1.0.5, timeout-2.1.0, mock-3.7.0, cov-4.0.0

collected 1 item

tests/algorithms/test_ghost_batchnorm.py . [100%]

==================================================================== 1 passed in 0.11s =====================================================================

~/code/bearnardd-composer single_op_ghost_batchnorm ❯

Also, based on the magicmock getting passed in as the State, I'm guessing the test code changed somehow? Are there changes to test_ghost_batchnorm.py that aren't pushed to github? The current code doesn't pass in a State object anywhere AFAICT:

def test_normalization_correct_functional(self, num_dims: int, ghost_batch_size: int, batch_size: int) -> None:

module = ModuleWithBatchnorm(num_dims=num_dims)

ghostbn.apply_ghost_batchnorm(module, ghost_batch_size=ghost_batch_size)

self._assert_ghost_batches_normalized(module=module, ghost_batch_size=ghost_batch_size, batch_size=batch_size)

Another guess is that the removal of the _already_ghost_batchnormed checks in apply_ghost_batchnorm, along with making GhostBatchnorm subclass _BatchNorm might be causing unwanted recursion.

Hi @dblalock!

Sorry for all the confusion, I guess working on the vacation was not the best idea. The one that is failing is test_normalization_correct_algorithm. So the description of the problem I have made before was about this one.

Closing. Tracking elsewhere as low pri. We're open to community suggestions!