libnetwork

libnetwork copied to clipboard

libnetwork copied to clipboard

Fix bridge connection reset due to invalid packets

Add drop of conntrack INVALID packets in input such that invalid packets due to TCP window overflow do not cause a connection reset.

Due to some netfilter/conntrack limitations, invalid packets are never treated as NAT'ed but reassigned to the host and considered martians. This causes a RST response from the host and resets the connection. As soon as NAT is setup, for bridge networks for instance, invalid packets have to be dropped in input.

The implementation adds a generic DOCKER-INPUT chain prefilled with a rule for dropping invalid packets and a return rule. As soon as some bridge network is setup, the DOCKER-INPUT chain call is inserted in the filter table INPUT chain.

Fixes #1090.

Signed-off-by: Christophe Guillon [email protected]

Note that this pull request was never treated, while it fixes an unresolved (but closed) issue #1090.

Maybe one should re-open the issue #1090, or should I add a new issue ?

ping @arkodg @euanh ptal

@arkodg

would be a right place to add the invalid rule only for the specified interface in the

FORWARDchain

Unfortunately, invalid packets, including benign out-of-window packets must be treated (actually dropped) in the input rule, before the FORWARD chain is processed. Otherwise, netfilter will reset connection before passing the packet to the FORWARD chain. Which mean that we cannot be more specific, any invalid INPUT packet has to be dropped. I've been trying the proposed solution (add the rule to forward chain) and it does not work indeed.

Can you please add test cases as well in https://github.com/docker/libnetwork/blob/master/drivers/bridge/setup_ip_tables_test.go

Yes, I will allocate some time as soon as possible to add tests.

@guillon I have not tried this out, but kube-proxy fixed this issue by inserting a similar rule in the FORWARD chain

https://github.com/kubernetes/kubernetes/pull/74840

Seems odd, but I will verify this again, thanks for the pointer.

I can confirm that adding the rules in the FORWARD chain does not work.

From my experiments the fix done in kube-proxy does not solve the issue, either they did not really test it, or their initial issue is different then ours.

@guillon if you can share your reproduction steps, I'd be happy to take a stab at it as well

I can confirm that adding the rules in the FORWARD chain does not work. From my experiments the fix done in kube-proxy does not solve the issue, either they did not really test it, or their initial issue is different then ours.

Should we open a ticket for that in the kubernetes issue tracker?

@arkodg I have forked a testbench for the kubernetes issue which I modified to exhibit the issue solved by this pull request. Get it at : https://github.com/guillon/k8s-issue-74839 If you can play with it and possibly test yourself some variants of iptables and the pull request it would be great!

@thaJeztah I still have to exhibit the problem under kubernetes, in order to sort things out, I'm working on it... Actually with the initial testbench from k8s, as the server and client are both NATed (i.e. specifically because the server is NATed in the setup), the problem should not appear, hence I still do not understand how they produced the problem with the testbench, I have to investigate on a local kubernetes setup (which I just started...).

Actually, when installing kubernetes on some physical Ubuntu hosts as described in https://vitux.com/install-and-deploy-kubernetes-on-ubuntu/, the kubernetes networking does not use NAT, hence I can't reproduce the issue over kubernetes. I may have to search for a kubernetes setup with bridge network...

@guillon awesome work with the repro steps and the comments, was able to recreate this issue very easily

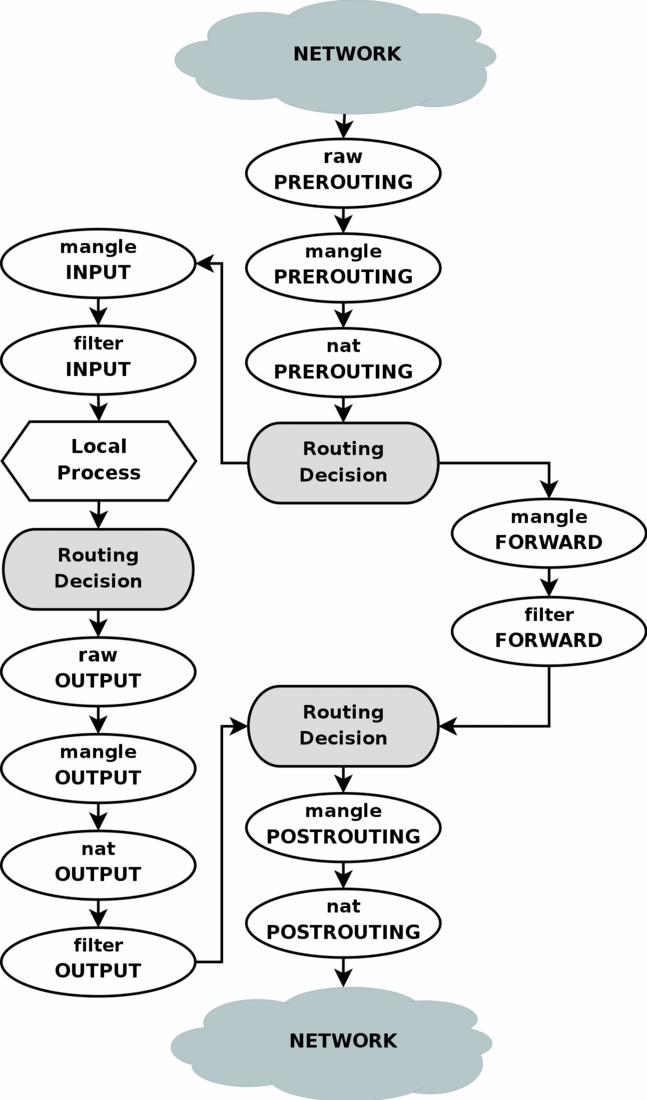

I observed that these INAVLID packets traverse from the PREROUTING to INPUT chain instead of the FORWARD chain . This is the part that does not add up .

My hunch is that for some reason INVALID packets do not get matched in nat-PREROUTING where they should based on this pretty picture

which is why adding this rule did the trick

sudo iptables -I PREROUTING 1 -t mangle -m conntrack --ctstate INVALID -j DROP

I verified the above using rules such as

sudo iptables -t mangle -A PREROUTING -j LOG -m state --state INVALID

and

sudo iptables -A INPUT -j LOG -m state --state INVALID

I'm hesitant to add these rules to the INPUT chain because you cannot even filter it on the docker0 interface and maybe for a particular use-case the user might want to send a RST out . Here's the log -

IN=ens3 OUT= MAC=02:4d:90:46:c6:ce:02:69:d2:4b:17:24:08:00 SRC=172.31.34.149 DST=172.31.42.122 LEN=49 TOS=0x00 PREC=0x00 TTL=64 ID=17787 DF PROTO=TCP SPT=9000 DPT=34945 WINDOW=229 RES=0x00 ACK PSH URGP=0)

Indeed the filtering can't be done on docker0, all invalid packets in conntrack need to be dropped. The first routing decision in your diagram is that an invalid packet in prerouting is routed to the host as fallback and DNAT is abandonned, From what I understood at the time of the fix, it's the only way to setup the bridge interface correctly.

Note that since last year all our services (swarms + bare docker clients) are working perfectly with the fix.

Don't remember if I mentionned it, but the overflow window error is typical in WAN setups due to WAN optimizations at the WAN routers level (packets deduplication in particular and also some aggressive packet streaming optimization strategies). For instance with WAN deduplication, the local optimizing router has cache of IP packet fragments based on content hashing, hence, from a client downloading for instance a large artifact, the input packet rate can suddenly become very high, and the client interface does not even has the time to adjust it's receiving window.

The use case which revealed this issue was dockerized clients downloading from a distant artifacts database service, where WAN deduplication is quite effective. The RST issue was causing very frequent and hard to understand - until I got it - download stalls+aborts (caused because the server receives the RST and stop trnasmiting, while the client wait forever at 0 b/s until some timeout occurs).

I have made at that time also a test over a WAN where I can also produce this by "artificially" making a client very slow, actually a bare curl with a limited input throughput to download a big artifact. This is sufficient to produce the issue. Download once, and again a second time (the WAN optimization being active), then the throughput rapidly reached over 50 Mbytes/s. If the client is too slow, suddenly the download stops to 0 b/s as the server received the infamous RST from the conntrack engine.

Thanks for describing the background, in this case is there any way to capture packets on both ends and determine if the WAN router and host are abiding by the TCP Congestion Control RFCs or not

Dropping these packets might solve your issue, but it still masks the problem because TCP is supposed to handle this case and the workaround causes more retransmissions on the network eating up the bandwidth

I'm afraid I can't tell whether the WAN routers behave correctly, I'm not expert enough. Actually it happens that the WAN is loaded, the routers are being updated or [badly] tuned by the IT departement or other events over which I have no control. Probably, indeed, in such cases the WAN routers behaves sub-optimally and perhaps incorrectly.

In the facts, when the networks behaves incorrectly, containerized services fail, while bare services remain functional. This has been reported multiple times in other contexts and even in IaaS vendors setups when we follow cross references from the issue #1090.

What I've seen recommended is that as soon as setting up NAT tables, INVALID packets should be dropped. I agree that invalid packets can be created by bogus WAN routers, but I think we shouldn't care. For instance: https://www.spinics.net/lists/netfilter/msg51409.html also referred here: https://serverfault.com/questions/309691/why-is-our-firewall-ubuntu-8-04-rejecting-the-final-packet-fin-ack-psh-wit/312687#312687

This seems to be a surprising flaw in the design of the iptables conntrack module, I couldn't really find an explanation for this weird behavior which makes NAT less stable when INVALID packet are not dropped explicitly.

Would it be possible to raise this issue with the conntreck folks, and see if this behavior is intentional or not before we consider adding such a rule

This is still an issue, and still something that needs to be fixed, is there any other place to subscribe for potential changes and or fixes?

Did someone take the time to discuss this with the conntrack people? Reading the discussion above, that's what's currently stalling this PR

Is there any progress in this PR? I've been having problems as well, and I've found that adding the following lines helps.

iptables -I INPUT -m conntrack --ctstate INVALID -j DROP

There seems to be a widespread way to add the above as a workaround, as shown in the link below. I don't know if it's bad network equipment manner or a libnetwork issue, but I think it would be in the community's best interest to provide solution or conclusion.

https://imbstack.com/2020/05/03/debugging-docker-connection-resets.html https://medium.com/swlh/fix-a-random-network-connection-reset-issue-in-docker-kubernetes-5c57a11de170

Any plan to merge this fix? as it has been outstanding for more than 4 years, and ppl are wasting tons of time in tracing down such issues.