pyodbc

pyodbc copied to clipboard

pyodbc copied to clipboard

Setting to return rows as dictionaries

Useful for some when returning values directly as JSON

Should this be a flag that causes all fetch calls to return dictionaries or a separate set of functions?

In my opion it would be undesirable to return real dictionaries because it doubles the code paths in pyodbc. Would it not make more sense to extend pyODBC row functionality to support getitem['fieldname'] in addition to getitem[columno] and then provide a custom JSON encoder like below.

import json class pyODBCEncoder(json.JSONEncoder): ... def default(self, obj): ... if isinstance(obj, pyodbc.Row): ... return obj.to_json() ... # Let the base class default method raise the TypeError ... return json.JSONEncoder.default(self, obj) ... dumps(myPyODBCRow, cls=pyODBCEncoder)

@Lexcon Good point, but Rows already support getitem. Unfortunately that isn't enough to make the JSON encoder turn it into a JSON object.

This was a suggestion via email. I wish I'd asked them to open the issue so they'd be in the conversation.

Another simple option, however, is for user's to wrap them. I often do this for logging or debug purposes. By wrapping the cursor from my db.connect() function (usually I have a db module in each project to consolidate connection handling).

I'll look at the JSON code again to see if there is something else that it might recognize that wouldn't double the code. Also, Rows are somewhat optimized for space.

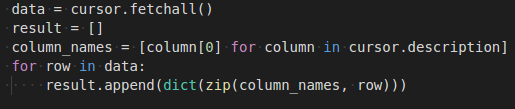

Hi guys, I am creating dict like below.

I am not 100% sure whether columns order is always same in both cursor.description and row.

Any further tough here? sqlite3 implements this which make it very convenient to use

yes, this would be a nice addition. (hello, I'm a pymssql refugee)

is there any progress with this?

Yep it would be nice to have this feature

I am facing a similar problem in one of my projects, I need to send the Nested JSON generated from the SQL Server database (using the FOR JSON Clause) via an API. Again since Row object is not JSON serializable, I need to convert it into Dict or List which ends up double encoding the JSON. Please let me know if you find a fix to this

But for simply converting the TSQL query output to JSON, the following code works just fine

This would be very handy. Consider a generic Flask route that should return a record fetched from pyodbc. Flask will automatically convert a returned dict to JSON, so it would be nice and clean to do something such as:

@app.route('/record/<key>')

def record_by_key(key):

cx = pyodbc.connect('DSN=MYDSN')

cursor = cx.cursor()

#the odbc driver I use is obscure and has bugs with parameterized queries so using ? doesn't work reliably

sql = """

select * from my_table where key='{0}'

""".format(key)

cursor.execute(sql)

row = cursor.fetchone(as_dict=True)

return row

Checking to see if there's been any progress on this. I'm also migrating from pymssql, which has this feature.

Yes please. Rows as dictionaries. 👍