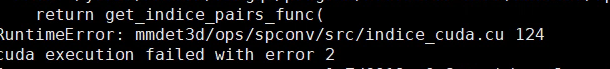

cuda execution failed with error

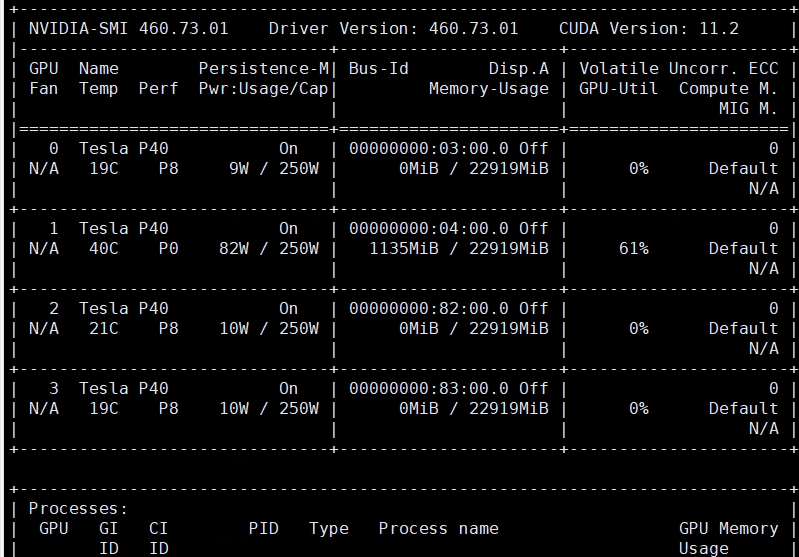

The code works fine when I set the gpu <=2. When I set the gpu ==4, the error occurs. What can I do to slove it ?

Typically this is an error related to OOM. Please check whether there are other processes running on the GPUs you are using. Here is a solution that can reduce the inference memory.

By the way, We will release a new version of the codebase that consumes significantly less memory than the current version in the near future.

I use the A100 48G to inference. But I get the same result.

It's better if you can provide more details about your experiments. For example: command used, full error log, the environment (versions of key libraries, type of GPU), etc. I will work on a docker version soon to avoid these problems in the future.

python -m torch.distributed.launch --nproc_per_node=$GPUS --master_port=$PORT tools/test_mmdet3d.py $CONFIG --launcher pytorch ${@:3}

I just replaced the test.py with the one from mmdet3d. The program works fine when the number of gpus is less than 3. I think it should have nothing to do with the test.py.

I just replaced the test.py with the one from mmdet3d. The program works fine when the number of gpus is less than 3. I think it should have nothing to do with the test.py.

I think you probably want to try removing lines 22-25 in this file and rerun python setup.py develop. Our current code is not compiled for P40 by default (should be sm61/compute61).

Sorry, It's all my fault. I replaced the test.py with the one from mmdet3d. But it doesn't open the muti tasks. However, adding -H localhost:port with different port to avoid port conflicts didn't work.

I print the tcp. From the results, setting the port does not seem to have any effect

I print the tcp. From the results, setting the port does not seem to have any effect

For the TCP problem, I would suggest you to open a separate issue and clearly describe the problem. I remember that in this issue we do have similar discussions and the problem was solved at that time.