milvus

[Bug]: collection has not been loaded to memory or load failed while loading 50M-100M vectors

Is there an existing issue for this?

- [X] I have searched the existing issues

Environment

- Milvus version: 2.2.0

- Deployment mode(standalone or cluster): cluster

- SDK version(e.g. pymilvus v2.0.0rc2): 2.2.0

- OS(Ubuntu or CentOS): ubuntu 20.04 5.15.0

- CPU/Memory:

HOST:

CPU(s): 80

Thread(s) per core: 2

NUMA node(s): 2

CPU max MHz: 3900.0000

Memory:

(8 channel * 2 socket) * 3200MT/s 1TB

CONTAINER:

limited in k8s to 20+ cores and 300 GiB per pod

- GPU:

not used

- Others:

Current Behavior

- create cluster with milvus2.2.0-release

- insert 50M 256-dim float vectors

- build HNSW index with params: ({ 'index_type': 'HNSW','metric_type': 'IP','params': {'M': 16, 'efConstruction':128} })

- load with 15 replicas

then error occurred:

pymilvus.exceptions.MilvusException: <MilvusException: (code=1, message=collection 437543825052155869 has not been loaded to memory or load failed)>

here is the log we exported: milvus220.zip

Note that with 1M vectors, everything works.

Expected Behavior

load index success

Steps To Reproduce

1. create cluster with milvus2.2.0-release

2. insert 50M 256-dim float vectors

3. build HNSW index with params: ({ 'index_type': 'HNSW','metric_type': 'IP','params': {'M': 16, 'efConstruction':128} })

4. load with 15 replicas
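For anyone trying to reproduce this, steps 3 and 4 can be sketched roughly as below. This is only a sketch: `collection` is assumed to behave like a `pymilvus.Collection` that already holds the 50M vectors, and the field name `embedding` is hypothetical (the report does not name the vector field).

```python
# Index parameters copied from the report.
HNSW_INDEX = {
    "index_type": "HNSW",
    "metric_type": "IP",
    "params": {"M": 16, "efConstruction": 128},
}


def build_and_load(collection, vector_field="embedding", replicas=15):
    """Build the HNSW index, then load the collection with N replicas.

    `collection` is expected to behave like pymilvus.Collection;
    `vector_field` is a hypothetical name, not taken from the report.
    """
    collection.create_index(field_name=vector_field, index_params=HNSW_INDEX)
    # In the report, this is the call that fails for 50M vectors.
    collection.load(replica_number=replicas)
```

Calling `build_and_load(collection, replicas=1)` first may help to tell a replica-count problem apart from a data-size problem.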

Milvus Log

Anything else?

No response

Hi @neiblegy, this error means the load was canceled. Since v2.2.0 there is a default load timeout (10 min): loading is canceled if a collection that is being loaded reports no progress update for 10 minutes.

The short answer: increase the loadTimeoutSeconds config value.

We need to adjust the way we calculate progress. For now, a segment contributes to progress only once it has been loaded in all replicas, but the loading tasks are added replica by replica, so with large data and many replicas it takes a long time to load a segment into all replicas.
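To illustrate why the progress can sit still for so long, here is a toy model of the behaviour described above. It is not Milvus code: it simply assumes tasks complete one at a time, replica by replica, and that a segment counts only once every replica holds it. The function name and the tiny segment/replica counts are made up for the illustration.

```python
from fractions import Fraction


def progress_trace(num_segments, num_replicas):
    """Return the reported progress after each completed load task.

    Toy model: tasks run one at a time, replica by replica, and a segment
    counts as loaded only once every replica holds it.
    """
    loaded = [set() for _ in range(num_replicas)]
    trace = []
    for replica in range(num_replicas):  # tasks are queued replica by replica
        for segment in range(num_segments):
            loaded[replica].add(segment)
            done = sum(
                all(seg in held for held in loaded)
                for seg in range(num_segments)
            )
            trace.append(Fraction(done, num_segments))
    return trace


trace = progress_trace(num_segments=3, num_replicas=2)
print([str(p) for p in trace])  # ['0', '0', '0', '1/3', '2/3', '1']
```

In this model the progress stays at zero until the last replica starts receiving segments, so with 15 replicas the first 14/15 of the work produces no progress update at all, which is how a no-progress timeout can fire on a load that is actually advancing.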

/assign

We will also add an executor per QueryNode so that loading scales well.

/assign @neiblegy

The resources we can supply are more than enough: we have 400 cores and 5 TB of memory in total, and we need 100M vectors to reach 2K QPS for queries. I changed the loading timeout, but loading is very slow.

Xiaofan wrote (Wednesday, 2022-11-23 11:45):

@neiblegy

You have to check whether you have enough memory. For 50M 256-dim vectors * 12 replicas you will need at least 500 GB+ of memory.
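The memory figure quoted above can be sanity-checked with some back-of-the-envelope arithmetic. The sketch below assumes float32 vectors (4 bytes per value) and counts only the HNSW layer-0 neighbour lists (2*M links of 4 bytes each per vector); upper layers, scalar fields and runtime overhead are ignored, so the real footprint will be higher. The function names are made up for this example.

```python
def raw_vector_bytes(num_vectors, dim, bytes_per_value=4):
    """Bytes needed for the raw float32 vectors of one replica."""
    return num_vectors * dim * bytes_per_value


def hnsw_link_bytes(num_vectors, m, bytes_per_link=4):
    """Rough size of the HNSW layer-0 neighbour lists (2*M links per vector).

    Assumption: upper layers and bookkeeping are ignored.
    """
    return num_vectors * 2 * m * bytes_per_link


GB = 10**9
per_replica = raw_vector_bytes(50_000_000, 256) + hnsw_link_bytes(50_000_000, 16)
for replicas in (1, 12, 15):
    print(f"{replicas:>2} replica(s): ~{per_replica * replicas / GB:.1f} GB")
```

Under these assumptions one replica comes to roughly 57.6 GB, 12 replicas to about 691 GB and 15 replicas to about 864 GB, which is in line with the "at least 500 GB+" estimate.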

Milvus seems to be stuck in a retry loop with these errors:

[2022/11/23 11:04:08.535 +00:00] [WARN] [storage/minio_chunk_manager.go:236] ["failed to stat object"] [path=stats_log/437543825052155869/437543825052155870/437543825058760097/100/437543825058760104] [error="The specified key does not exist."]
[2022/11/23 11:04:08.580 +00:00] [WARN] [storage/minio_chunk_manager.go:236] ["failed to stat object"] [path=stats_log/437543825052155869/437543825052155870/437543825058760097/100/437543825058960234] [error="The specified key does not exist."]
[2022/11/23 11:04:08.634 +00:00] [WARN] [storage/minio_chunk_manager.go:236] ["failed to stat object"] [path=stats_log/437543825052155869/437543825052155870/437543825058760097/100/437543825059160331] [error="The specified key does not exist."]
[2022/11/23 11:04:08.700 +00:00] [WARN] [storage/minio_chunk_manager.go:236] ["failed to stat object"] [path=stats_log/437543825052155869/437543825052155870/437543825058760097/100/437543825059360430] [error="The specified key does not exist."]
[2022/11/23 11:04:08.742 +00:00] [WARN] [storage/minio_chunk_manager.go:236] ["failed to stat object"] [path=stats_log/437543825052155869/437543825052155870/437543825058760097/100/437543825059360537] [error="The specified key does not exist."]
[2022/11/23 11:04:08.769 +00:00] [WARN] [storage/minio_chunk_manager.go:236] ["failed to stat object"] [path=stats_log/437543825052155869/437543825052155870/437543825058760097/100/437543825059760643] [error="The specified key does not exist."]
[2022/11/23 11:04:08.818 +00:00] [WARN] [storage/minio_chunk_manager.go:236] ["failed to stat object"] [path=stats_log/437543825052155869/437543825052155870/437543825058760097/100/437543825059760742] [error="The specified key does not exist."]
[2022/11/23 11:04:08.870 +00:00] [WARN] [storage/minio_chunk_manager.go:236] ["failed to stat object"] [path=stats_log/437543825052155869/437543825052155870/437543825058760097/100/437543825060160844] [error="The specified key does not exist."]
[2022/11/23 11:04:09.558 +00:00] [INFO] [gc/gc_tuner.go:81] ["GC Tune done"] ["previous GOGC"=200] ["heapuse "=169] ["total memory"=114338] ["next GC"=436] ["new GOGC"=200]
[2022/11/23 11:04:09.590 +00:00] [ERROR] [querynode/segment_loader.go:178] ["load segment failed when load data into memory"] [collectionID=437543825052155869] [segmentType=Sealed] [partitionID=437543825052155870] [segmentID=437543825058760097] [error="All attempts results:\nattempt #1:NoSuchKey(key=stats_log/437543825052155869/437543825052155870/437543825058760097/100/437543825058760104)\nattempt #2:NoSuchKey(key=stats_log/437543825052155869/437543825052155870/437543825058760097/100/437543825058960234)\nattempt #3:NoSuchKey(key=stats_log/437543825052155869/437543825052155870/437543825058760097/100/437543825059160331)\nattempt #4:NoSuchKey(key=stats_log/437543825052155869/437543825052155870/437543825058760097/100/437543825059360430)\nattempt #5:NoSuchKey(key=stats_log/437543825052155869/437543825052155870/437543825058760097/100/437543825059360537)\nattempt #6:NoSuchKey(key=stats_log/437543825052155869/437543825052155870/437543825058760097/100/437543825059760643)\nattempt #7:NoSuchKey(key=stats_log/437543825052155869/437543825052155870/437543825058760097/100/437543825059760742)\nattempt #8:NoSuchKey(key=stats_log/437543825052155869/437543825052155870/437543825058760097/100/437543825060160844)\n"] [stack="github.com/milvus-io/milvus/internal/querynode.(*segmentLoader).LoadSegment.func3\n\t/go/src/github.com/milvus-io/milvus/internal/querynode/segment_loader.go:178\ngithub.com/milvus-io/milvus/internal/util/funcutil.ProcessFuncParallel.func3\n\t/go/src/github.com/milvus-io/milvus/internal/util/funcutil/parallel.go:83"]
[2022/11/23 11:04:09.590 +00:00] [ERROR] [funcutil/parallel.go:85] [loadSegmentFunc] [error="All attempts results:\nattempt #1:NoSuchKey(key=stats_log/437543825052155869/437543825052155870/437543825058760097/100/437543825058760104)\nattempt #2:NoSuchKey(key=stats_log/437543825052155869/437543825052155870/437543825058760097/100/437543825058960234)\nattempt #3:NoSuchKey(key=stats_log/437543825052155869/437543825052155870/437543825058760097/100/437543825059160331)\nattempt #4:NoSuchKey(key=stats_log/


@neiblegy will this collection eventually finish loading? The NoSuchKey error is caused by compaction, and v2.2.0 should be able to handle it. How long does loading take after you changed the timeout?

We need remote assistance for this problem. Could you send me your contact information by email so that I can reach you? My email is [email protected]

@neiblegy

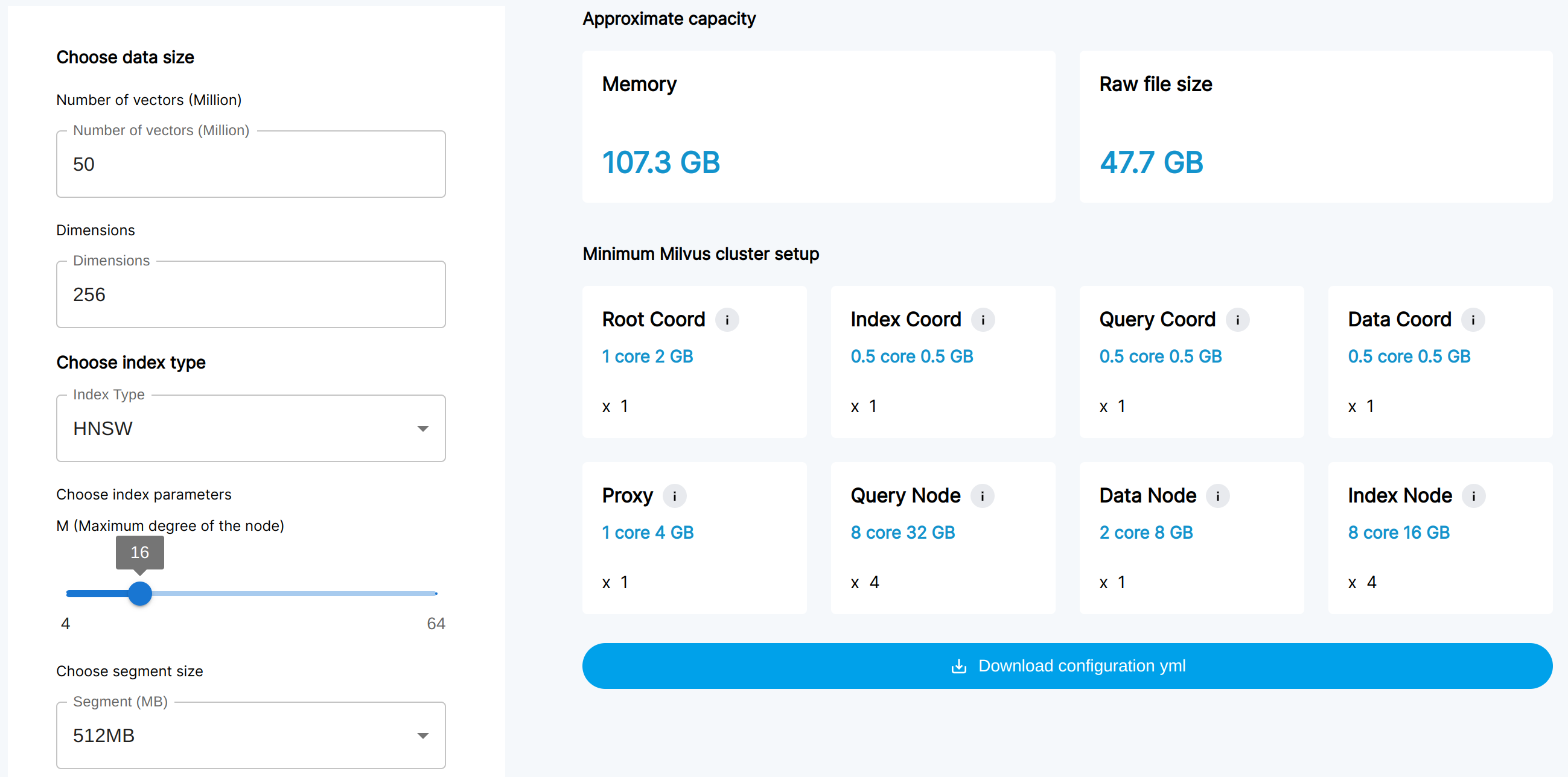

@neiblegy according to the sizing tool (https://milvus.io/tools/sizing), it may be impossible to load 15 replicas with 1 TB of memory.


No. It took a very long time and the logs kept showing the retry loop, so I gave up.


I have 5 physical machines, each with 80 cores and 1 TB of memory, so 400 cores and 5 TB of memory in total. Each pod is limited to 20 cores and 300 GiB, and I created 15 replicas with these resources.


I also encountered this after upgrading to 2.2.0.

Use this snippet before using the collection (for example, I run it after reloading my web app):

    # get_my_collection() and build_index() are the application's own helpers
    collection = get_my_collection()
    # make sure an index exists before loading
    if not collection.has_index():
        build_index()
    collection.load()

@neiblegy hope it helps

NoSuchKey for stats log:

[2022/12/05 08:34:23.378 +00:00] [WARN] [storage/minio_chunk_manager.go:236] ["failed to stat object"] [path=stats_log/437836752123592712/437836752123592713/437836752144599604/100/437836752144599609] [error="The specified key does not exist."]
[2022/12/05 08:34:23.414 +00:00] [WARN] [storage/minio_chunk_manager.go:236] ["failed to stat object"] [path=stats_log/437836752123592712/437836752123592713/437836752144599604/100/437836752144599627] [error="The specified key does not exist."]
[2022/12/05 08:34:23.452 +00:00] [WARN] [storage/minio_chunk_manager.go:236] ["failed to stat object"] [path=stats_log/437836752123592712/437836752123592713/437836752144599604/100/437836752144599637] [error="The specified key does not exist."]
[2022/12/05 08:34:23.497 +00:00] [WARN] [storage/minio_chunk_manager.go:236] ["failed to stat object"] [path=stats_log/437836752123592712/437836752123592713/437836752144599604/100/437836752144799647] [error="The specified key does not exist."]
[2022/12/05 08:34:23.517 +00:00] [WARN] [storage/minio_chunk_manager.go:236] ["failed to stat object"] [path=stats_log/437836752123592712/437836752123592713/437836752144599604/100/437836752144799657] [error="The specified key does not exist."]
[2022/12/05 08:34:23.556 +00:00] [WARN] [storage/minio_chunk_manager.go:236] ["failed to stat object"] [path=stats_log/437836752123592712/437836752123592713/437836752144599604/100/437836752144799667] [error="The specified key does not exist."]
[2022/12/05 08:34:23.604 +00:00] [WARN] [storage/minio_chunk_manager.go:236] ["failed to stat object"] [path=stats_log/437836752123592712/437836752123592713/437836752144599604/100/437836752144799694] [error="The specified key does not exist."]

[2022/12/05 08:34:23.653 +00:00] [WARN] [querynode/shard_cluster.go:651] ["follower load segment failed"] [collectionID=437836752123592712] [channel=by-dev-rootcoord-dml_1_437836752123592712v1] [replicaID=437836784121151489] [dstNodeID=7] [segmentIDs="[437836752144599604]"] [reason="All attempts results:\nattempt #1:NoSuchKey(key=stats_log/437836752123592712/437836752123592713/437836752144599604/100/437836752144599609)\nattempt #2:NoSuchKey(key=stats_log/437836752123592712/437836752123592713/437836752144599604/100/437836752144599627)\nattempt #3:NoSuchKey(key=stats_log/437836752123592712/437836752123592713/437836752144599604/100/437836752144599637)\nattempt #4:NoSuchKey(key=stats_log/437836752123592712/437836752123592713/437836752144599604/100/437836752144799647)\nattempt #5:NoSuchKey(key=stats_log/437836752123592712/437836752123592713/437836752144599604/100/437836752144799657)\nattempt #6:NoSuchKey(key=stats_log/437836752123592712/437836752123592713/437836752144599604/100/437836752144799667)\nattempt #7:NoSuchKey(key=stats_log/437836752123592712/437836752123592713/437836752144599604/100/437836752144799694)\n"]
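When checking the bucket for these objects, it can help to pull the missing keys out of the "All attempts results" message instead of reading them by eye. The sketch below is a hypothetical helper, not part of Milvus. It assumes the key layout is stats_log/<collection>/<partition>/<segment>/<field>/<log>; the first three IDs match the collectionID, partitionID and segmentID printed in the logs above, while the meaning of the last two components is an assumption.

```python
import re

# Matches the object keys listed in a "NoSuchKey(key=...)" retry summary.
NO_SUCH_KEY = re.compile(r"NoSuchKey\(key=([^)]+)\)")


def missing_stats_logs(message):
    """Return (segment_id, key) pairs for every NoSuchKey in a log message.

    Assumes keys look like
    stats_log/<collection>/<partition>/<segment>/<field>/<log>.
    Truncated keys (no closing parenthesis) are skipped.
    """
    pairs = []
    for key in NO_SUCH_KEY.findall(message):
        parts = key.split("/")
        if len(parts) == 6 and parts[0] == "stats_log":
            pairs.append((parts[3], key))
    return pairs


# Two attempts copied from the log above ("\\n" is the literal backslash-n
# that appears inside the logged error string).
sample = (
    "attempt #1:NoSuchKey(key=stats_log/437836752123592712/437836752123592713/"
    "437836752144599604/100/437836752144599609)\\n"
    "attempt #2:NoSuchKey(key=stats_log/437836752123592712/437836752123592713/"
    "437836752144599604/100/437836752144599627)\\n"
)
for segment_id, key in missing_stats_logs(sample):
    print(segment_id, key)
```

The resulting keys can then be compared with what is actually stored in the MinIO/S3 bucket for that segment.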

We've seen the NoSuchKey issue in other production environments as well; promoting this to critical/urgent.


In version 2.2.2, I found the same NoSuchKey problem in a loaded collection, and the bucket does not contain the corresponding path. It seems to occur only occasionally, but I think it is a hidden risk. Do you have any idea what causes this problem and how to avoid it?


Exactly. I'm investigating it; failure recovery will fail because of the corrupted file. @yah01 any clue yet?

Other users encountered the same problem: https://github.com/milvus-io/milvus/discussions/22303 https://github.com/milvus-io/milvus/discussions/22300

This should have been fixed @neiblegy

OK, got it.


I'll close this issue. Please feel free to open a new one if it is not fixed on v2.2.13.