vscode-remote-release


Conditional mounting

Hello there!

I have some mounts in my devContainer defined like this:

"mounts": [
    "source=${localEnv:HOME}${localEnv:USERPROFILE}/.config/openstack/clouds.yaml,target=/root/.config/openstack/clouds.yaml,type=bind,consistency=cached",
    "source=${localEnv:HOME}${localEnv:USERPROFILE}/.kube/config,target=/root/.kube/config,type=bind,consistency=cached"
],

Some users might not have those files. They will get an error in that case.

Feature Request: Mark mounts as optional or check if they exist on startup.

(Happy about any other solution to that as well)

Thanks in advance!

//cc @chrmarti @bamurtaugh as FYI. This is actually something we could do that docker itself does not support. I've hit this myself in the kubernetes situation.

This feature request is now a candidate for our backlog. The community has 60 days to upvote the issue. If it receives 10 upvotes we will move it to our backlog. If not, we will close it. To learn more about how we handle feature requests, please see our documentation.

Happy Coding!

@max06 Behind the scenes, Docker volume mounts, like any OCI mounts, are either plain Linux mounts or, in the best case, FUSE mounts. If you have Linux or WSL at hand, you can run mount --help or man mount to see what is possible.

That would be an awesome feature! I run into this issue a lot, as everyone on the team has a somewhat different structure for storing their kubeconfigs, but we want to share the same Dev Container for development. What usually happens is that everyone starts the container, the first build fails, and people modify their devcontainer.json and rebuild so it works again. Then, when we change the devcontainer.json and people update it, they sometimes forget to merge their changes with the upstream version and just overwrite the devcontainer.json, because they can't really remember why they changed this line, and it fails again. Since we don't update the devcontainer.json that often, this happens from time to time for some people.

Would really love to see this feature! Happy to beta-test as well

@PavelSosin-320 That's the point: You can't mount something that's not there. And that's perfectly fine in a regular docker context. On the other hand, in the devContainer context, it might be perfectly fine to have missing mounts, like for configuration files. They won't break the container functionality if they are not there. And they don't bug the user to create empty files/directories just for a small component of the container.

@max06 In a pure Dockerfile or Compose file it is possible to define volume mount points as targets and provide the source as a run option. As with a regular Linux mount, the source can be a local directory subtree (bind mount), a named Docker volume, a directory on a remote filesystem, or even infrastructure block storage. There is a variety of "drivers" that can be used. The mount target is never "missing" until the container is run. The volume "source" (persistent storage) is always conditional and can change from run to run. In other words, it is always a source -> target mapping (in JSON format), independent of the container definition.

@PavelSosin-320 Yes, until you don't want to use a source.

For clarification: This is not about the Dockerfile or a compose setup. This is about the vscode remote extension calling docker with a non-existent mount source. This is about the devContainer json configuration file.

@max06 It can be a separate file. Actually, deferred volume source definition is the Docker "best practice". If a Docker image knows too much about the "volume source", it is easily breakable and hardly reusable. There are other methods to build and run a Docker (OCI) container, but today the devcontainer.json is translated into formats accepted by docker build and docker-compose, plus docker run options.

@PavelSosin-320 I'm pretty sure we're talking about 2 different things here, and it's probably my fault. But based on the initial response I've been able to address my issue clearly enough to be understood by the responsible devs, so I'm gonna await their judgment now.

:slightly_smiling_face: This feature request received a sufficient number of community upvotes and we moved it to our backlog. To learn more about how we handle feature requests, please see our documentation.

Happy Coding!

So, what is the meaning of the word "conditional"? In the world of containerized applications, deployment is always conditional because the "build" and "deploy" descriptors are different files or different sections of the same file. The "build" descriptor is very stable and doesn't need any validation against the deployment target. The deployment descriptor is "conditional" and reflects the runtime configuration, capabilities, and even user considerations, i.e. it can be "conditioned" by simple things and checked to avoid "broken" applications, i.e. a broken dev container deployment. It doesn't need any source control, git, etc. It is tested using a "dry run". The result of the dry run is the condition: if the dry run says that the deployment descriptor is OK for a certain deployment target, then it's OK to proceed. Maybe a dry run, as a simple (plain validator) and proven solution, is more reliable?

P.S. Maybe using docker create as a dry run to test everything possible is even better and makes all "conditional" definitions redundant?

A simple "stat" on the source would be enough. Does it exist? Great. If not, is it optional? Great, not adding that one to the run command. Not optional? Here, have an error message.
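For illustration, the check described above could be sketched in POSIX shell roughly as follows. This is not how the Remote - Containers extension works: `mount_source` and `mount_args` are hypothetical helper names, and the `required`/`optional` marker is an assumed convention, since devcontainer.json has no such setting. The sketch also assumes that paths contain no commas.

```sh
#!/bin/sh
# Sketch only: decide which --mount arguments to pass to `docker run`.

# Print the value of the source= (or src=) field of a mount spec.
mount_source() {
    printf '%s\n' "$1" | tr ',' '\n' |
        sed -n -e 's/^source=//p' -e 's/^src=//p' | head -n 1
}

# Usage: mount_args KIND SPEC [KIND SPEC ...]
# KIND is "required" or "optional" (a hypothetical marker).
# Prints one "--mount SPEC" line per mount that should be used and
# returns non-zero if a required bind source is missing.
mount_args() {
    while [ "$#" -ge 2 ]; do
        kind=$1
        spec=$2
        shift 2
        # Only bind mounts name a host path; pass everything else through.
        case ",$spec," in
            *,type=bind,*) ;;
            *) printf '%s %s\n' --mount "$spec"; continue ;;
        esac
        src=$(mount_source "$spec")
        if [ -e "$src" ]; then
            printf '%s %s\n' --mount "$spec"
        elif [ "$kind" = "optional" ]; then
            printf 'skipping optional mount, source not found: %s\n' "$src" >&2
        else
            printf 'required mount source not found: %s\n' "$src" >&2
            return 1
        fi
    done
}
```

Called as `mount_args optional "type=bind,source=$HOME/.kube/config,target=/root/.kube/config"`, it prints the `--mount` line only when the file exists, which is the "skip instead of fail" behavior requested here.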

@max06 A simple stat is not enough if the Docker engine is running on a remote Linux machine. The path may exist but not be accessible by the container's user (OMG, UID/GID mapping), exceed the link depth, be labeled by SELinux in some way, and so on, along with other things related to the Linux FS and Docker security. Why does the VS Code plugin have to check all these things if Docker will do it itself? The docker create command produces the same diagnostics as docker run, and the user's repair will work in both cases: change devcontainer.json or create the directory.
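A rough sketch of the dry-run idea, for readers who want to try it: create the container with the candidate mounts, let Docker report any mount error, and remove the container again without ever starting it. `dry_run_mounts` is a hypothetical helper name, and the `DOCKER` variable is an assumption added so that another CLI or a stub can be substituted. Note that this only detects a bad mount; it does not skip it.

```sh
#!/bin/sh
# Sketch only: use `docker create` as a dry run for a set of mounts.
# DOCKER may be overridden; it defaults to the real `docker` CLI.

# Usage: dry_run_mounts IMAGE SPEC [SPEC ...]
dry_run_mounts() {
    image=$1
    shift
    # Rewrite the argument list so that each SPEC becomes "--mount SPEC".
    for spec in "$@"; do
        set -- "$@" --mount "$spec"
        shift
    done
    # Per the thread, `docker create` is expected to report the same
    # mount errors as `docker run`, without starting the container.
    if id=$("${DOCKER:-docker}" create "$@" "$image"); then
        "${DOCKER:-docker}" rm "$id" >/dev/null   # remove the unused container
        return 0
    fi
    return 1
}
```

For example, `dry_run_mounts alpine "type=bind,source=$HOME/.kube/config,target=/root/.kube/config"` would return non-zero when the source file is missing (the image name here is only a placeholder).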

Hey @PavelSosin-320,

I strongly believe that you and @max06 are talking about entirely different things. Yes, you are right when we're talking about Docker in general.

I think the point that @max06 is trying to make is the following:

Imagine you have a mount defined in your devcontainer.json like the following:

"mounts": [
    "source=${localEnv:HOME}${localEnv:USERPROFILE}/.kube/config2,target=/root/.kube/config,type=bind,consistency=cached"
],

What happens if the source ${localEnv:HOME}${localEnv:USERPROFILE}/.kube/config2 does not exist (maybe because the file is called config instead of config2)?

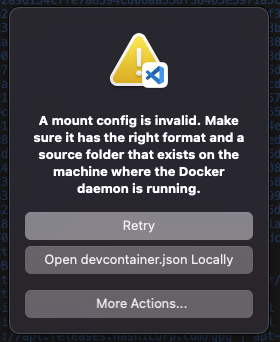

The answer is:

And the respective log is

[2021-07-14T08:55:50.769Z] Stop (221830 ms): Run: docker build -f /Users/cedi/src/myproject/.devcontainer/Dockerfile -t vsc-myproject-18bb504b6cbce31b3d1a66f5503ab02c --build-arg VARIANT=3 --build-arg INSTALL_NODE=false --build-arg NODE_VERSION=lts/* /Users/cedi/src/myproject

[2021-07-14T08:55:50.772Z] Start: Run: docker events --format {{json .}} --filter event=start

[2021-07-14T08:55:50.775Z] Start: Starting container

[2021-07-14T08:55:50.776Z] Start: Run: docker run --sig-proxy=false -a STDOUT -a STDERR --mount source=/Users/cedi/.kube/config2,target=/root/.kube/config,type=bind,consistency=cached --mount type=volume,src=vscode,dst=/vscode -l vsch.local.folder=/Users/cedi/src/myproject -l vsch.quality=stable -l vsch.remote.devPort=0 --entrypoint /bin/sh vsc-myproject-18bb504b6cbce31b3d1a66f5503ab02c -c echo Container started

[2021-07-14T08:55:51.492Z] docker: Error response from daemon: invalid mount config for type "bind": bind source path does not exist: /Users/cedi/.kube/config2.

See 'docker run --help'.

[2021-07-14T08:55:51.500Z] Stop (724 ms): Run: docker run --sig-proxy=false -a STDOUT --mount source=/Users/cedi/.kube/config2,target=/root/.kube/config,type=bind,consistency=cached --mount type=volume,src=vscode,dst=/vscode -l vsch.local.folder=/Users/cedi/src/myproject -l vsch.quality=stable -l vsch.remote.devPort=0 --entrypoint /bin/sh vsc-myproject-18bb504b6cbce31b3d1a66f5503ab02c -c echo Container started

[2021-07-14T08:55:51.501Z] Start: Run: docker ps -q -a --filter label=vsch.local.folder=/Users/cedi/src/myproject --filter label=vsch.quality=stable

[2021-07-14T08:55:51.690Z] Stop (189 ms): Run: docker ps -q -a --filter label=vsch.local.folder=/Users/cedi/src/myproject --filter label=vsch.quality=stable

[2021-07-14T08:55:51.694Z] Command failed: docker run --sig-proxy=false -a STDOUT -a STDERR - --mount source=/Users/cedi/.kube/config2,target=/root/.kube/config,type=bind,consistency=cached --mount type=volume,src=vscode,dst=/vscode -l vsch.local.folder=/Users/cedi/src/myproject -l vsch.quality=stable -l vsch.remote.devPort=0 --entrypoint /bin/sh vsc-myproject-18bb504b6cbce31b3d1a66f5503ab02c -c echo Container started

trap "exit 0" 15

So the tl;dr is: in this configuration, my Devcontainer build fails, because source=${localEnv:HOME}${localEnv:USERPROFILE}/.kube/config2 does not exist on my local filesystem.

If I get it right, then @max06's point is to have an option to not fail the Devcontainer build, but to simply skip this mount.

I can imagine a world, where we have in the devcontainer.json two ways to specify mounts.

One like we currently have:

"mounts": [
    "source=${localEnv:HOME}${localEnv:USERPROFILE}/.kube/config2,target=/root/.kube/config,type=bind,consistency=cached"
],

which will work the same way it does currently -> fail the Docker-build when the source file is not there, and another option like

"optional_mounts": [
    "source=${localEnv:HOME}${localEnv:USERPROFILE}/.kube/config2,target=/root/.kube/config,type=bind,consistency=cached"
],

which will do a stat to see if the source file is there; only if this test is successful and the file exists does VS Code mount that file into the Docker container (i.e. include it in the docker run --mount ... command line).

I really hope this clears up the situation.

@cedi We are talking about different solutions for the same problem. But the best-practice solution to this problem is already described in the Docker documentation: Good use cases for volumes. A devcontainer solution based on Docker's solution will certainly work, is simple to implement, and requires minimal maintenance effort, because the container definition can be very stable without a volume source, with an anonymous volume only. The Docker documentation describes exactly the use case that justifies this feature. I am afraid that it is very hard to define a condition that ensures the mount will work unless the mount utility used by the container engine is tested with the given parameters.

@PavelSosin-320 can you make a recommendation of how the mount should look to take your solution into account?

I can't really follow your argumentation here to be honest.

A short example of what the mount would look like in the "mounts": [] would be very helpful here :)

The use case is: Mounting configuration files from the host system. The recommended way to do that is using bind mounts (see your link). It's not about copying config files into the container to be "stable" in case they disappear on the host.

If I send you a repository with a devcontainer definition, containing multiple (optional) mounts, I expect the container to work without you having to create all of the source files before or modifying the definition. Please show me a run command, that can actually do that.

Following the Docker documentation's recommendations, a mount point in the dev container definition can have an 'open-ended' volume definition. From the docs: "-v or --volume: Consists of three fields, separated by colon characters (:). The fields must be in the correct order, and the meaning of each field is not immediately obvious. In the case of named volumes, the first field is the name of the volume and is unique on a given host machine. For anonymous volumes, the first field is omitted." The container will be fully functional regardless of the deployment target, except for non-workspace targets. I suppose that the workspace always exists because it contains the devcontainer definition itself. Before using a specific engine running on a specific host or on Azure to run the dev container, the user has to create a separate file that is not part of the devcontainer definition, doesn't need source control, and only defines storage, i.e. Docker storage. It is up to the user to create the source files or directories for a bind mount, but this is easily testable using the docker create command, i.e. a dry run.

This is definitely a problem, given the use cases for remote-containers, and it is not solved. At one point -v would mount an empty folder if the source was missing, but there's a hard failure in recent versions, and there always has been for --mount. When you're deploying to production, that's a good thing. When you want to share configuration between people for local development environments, it becomes a challenge. The challenge is not getting a Docker volume, but bind mounting local folders.

A workaround is to have a script in initializeCommand for both Windows and macOS/Linux that creates the source folder if it is missing, but that's clunky.
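A minimal sketch of the macOS/Linux half of such a workaround is shown below. `ensure_source` is a hypothetical helper name, and the example paths are taken from the original post; a Windows equivalent would have to be written separately. The helper distinguishes files from directories because a placeholder for a file mount has to be a file.

```sh
#!/bin/sh
# Sketch only: create placeholder bind-mount sources before the container
# starts, so that `docker run` does not fail on a missing source.

# Usage: ensure_source file|dir PATH
ensure_source() {
    kind=$1
    path=$2
    [ -e "$path" ] && return 0        # never touch something that already exists
    case "$kind" in
        dir)  mkdir -p "$path" ;;
        file) mkdir -p "$(dirname "$path")" && : > "$path" ;;
        *)    printf 'unknown kind: %s\n' "$kind" >&2; return 2 ;;
    esac
}

# Example calls for the mounts from the original post (HOME assumed to be set):
# ensure_source file "$HOME/.kube/config"
# ensure_source file "$HOME/.config/openstack/clouds.yaml"
```

The drawback raised in the thread still applies: this leaves empty files or directories on the host for users who never needed them.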

This is a very old problem with a very old and simple solution: if the devcontainer definition contains only default or open-ended parameters, it can be "edited" using a local "configuration" file that overrides only those values that are not suitable for the local machine or user. If the source is open-ended, like run --mount type=volume, source="", target=/uservolume, then the Docker engine will create an anonymous volume for the user in any case. This behavior is ensured by the OCI standard, i.e. it works everywhere. The configuration file may contain only the local value for the source that overrides the anonymous volume: type=bind, source=$HOME/user-volume. The configuration file is git-ignored, never shared among developers, and optional because the default value works anywhere. For production deployment, this problem is more critical when an urgent bug fix is required. The cost of an error in the configuration file is minimal because, in the worst case, the container fails to run from the local image in less than a second, with no harm done. The value can be corrected, and every variant can be tested using the docker create command in a second, too.

and optional because the default value works anywhere.

Except for Codespaces, K8s and other "locked" systems where you can't create a configuration file. Also it's not very user friendly.

The configuration file is git-ignored, never shared among developers, and optional because the default value works anywhere.

It's not working everywhere.

In case you still missed the point: It's not about the OCI standard. It's not related to the Dockerfile at all, it doesn't affect how the image is built.

@max06 Indeed, not related at all, only to how volumes are mounted. Is that the issue that justifies your feature request? K8s is not a "locked system" because Kubernetes deployment works in the way described above. The configuration file is created on the client side. The pride of solution belongs to one of the famous E.G. co-authors. The benefit of this solution is that missing volumes and folders can be checked and created.

It's useless to discuss with you as long as you only accept your own opinion. I'm out of here.

I am familiar with this problem and know that a solution should be provided. This is a classic DevOps problem. The best solution is the best solution from the DevOps point of view. I am afraid that maintaining a devcontainer definition that contains if-logic is beyond DevOps capability. Maintaining a small config or values file is much easier, which is why tools like systemd, Helm, and Ansible are so popular: they are almost logic-less.

@bamurtaugh any updates on this backlog?

This would be a really nice improvement if we could get it -- maybe some explicit concept in the devcontainer.json spec that doesn't use Docker syntax directly, e.g.

{
    "optionalBindings": {
        "${localEnv:HOME}/.devcontainer-config/": "~/.devcontainer-config/"
    }
}

and then the devcontainer CLI could implement the "checking to see if that directory/file exists" logic in a cross-platform way. It's worth noting that @Chuxel's workaround won't work for anybody using "Clone Repository in Container Volume," since the initializeCommand runs in the bootstrap container rather than on the host proper. Since Docker file-sharing performance is (in my experience) still too slow on Mac for a working frontend development experience, we have to use volumes and I'm going to have to instruct my users to just create this directory by hand.

Another option would be to have docker-compose files conditionally added in. In my situation the problem is not mounts; it's that some development machines have GPUs and others don't. I have two docker-compose files: one is the basic service description and the other layers on the NVIDIA GPU support.

For a GPU enabled machine I would want devcontainer.json to have:

"dockerComposeFile": ["docker-compose.yaml", "docker-compose.gpu.yaml"],

but a non-gpu machine should be:

"dockerComposeFile": ["docker-compose.yaml"],

But anything (such as mounts) that can be described in a docker-compose file could be treated similarly. If there were a way to write a script to trigger inclusion of docker-compose files, it could be pretty general purpose.

Does it work to export COMPOSE_FILE in initializeCommand or is it too late at that point, @jbcpollak?

@carlthome - I'm not sure I follow how that would work. Would initializeCommand be able to modify the exported COMPOSE_FILE variable?

If I could write a shell script in initializeCommand that detected the presence of a GPU and then set (or modified) COMPOSE_FILE, that would solve the problem.

Effectively I'd like to be able to do:

"dockerComposeFileCmd": "./getComposeFiles.sh"

I think this could solve the conditional mounting as well since getComposeFiles.sh could layer on additional mount descriptions based on shell results.
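To make the idea concrete, here is a sketch of what such a script might contain. `dockerComposeFileCmd` is only a proposal in this thread, not an existing setting, and `compose_files`, `compose_file_value`, the overlay file name `docker-compose.kube.yaml`, and the use of `/dev/nvidia0` as a GPU indicator are all assumptions made for illustration. Each overlay is paired with a host path and is included only if that path exists.

```sh
#!/bin/sh
# Sketch only: print the compose files to layer, based on what exists on the host.

# Usage: compose_files BASE [OVERLAY:REQUIRED_PATH ...]
# Prints BASE, then every OVERLAY whose REQUIRED_PATH exists, one per line.
compose_files() {
    printf '%s\n' "$1"
    shift
    for pair in "$@"; do
        overlay=${pair%%:*}     # text before the first colon
        needs=${pair#*:}        # text after the first colon
        if [ -e "$needs" ]; then
            printf '%s\n' "$overlay"
        fi
    done
}

# Join the list into a COMPOSE_FILE-style value.
# ":" is the default path separator on Linux and macOS.
compose_file_value() {
    compose_files "$@" | paste -s -d ':' -
}
```

For example, `compose_file_value docker-compose.yaml "docker-compose.gpu.yaml:/dev/nvidia0" "docker-compose.kube.yaml:$HOME/.kube/config"` yields only the base file on a machine with neither a GPU device node nor a kubeconfig. Whether the Dev Containers tooling could consume such a value is exactly the open question in this thread.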

As an alternative, could the entire docker-compose launch be delegated? I don't know if that works within the framework of devcontainers, but something like this:

"dockerComposeCmd": "./launchDockerCompose.sh"

that just ran the entire docker compose -f .... -f .... -f ... would also work for our usecase.

I have another use case that this would be useful for.

I currently include the following mount in my devcontainer.json file in order to use a debugger for embedded development.

"mounts": ["type=bind,source=/dev/bus/usb,target=/dev/bus/usb"]

This mount, however, causes the CI build in GitHub Actions to fail, as the source, /dev/bus/usb, does not exist. Of course this is not required in a CI build, so simply skipping this mount somehow would be preferable.

It's been over a year. Is this ever going to be worked on? We need this too, as we want to load different repos in the container that might live at different paths on each person's machine.

Here is a general-purpose hacky solution we have been using to toggle GPU enable/disable. You should be able to extend this to mounts or other options as well.

In your devcontainer.json file, have these two lines:

"dockerComposeFile": ["docker-compose.yaml", "docker-compose.gpu.yaml"],

"initializeCommand": ".devcontainer/setup_container.sh",

Have docker-compose.yaml define your core functionality. Then, in the setup_container.sh shell script, we check for a GPU and run this as its last line: ln -sf docker-compose.gpu-${HAS_GPU}.yaml docker-compose.gpu.yaml

The contents of docker-compose.gpu-enable.yaml:

version: '3'
services:
  dill:
    environment:
      - NVIDIA_VISIBLE_DEVICES=all
      - NVIDIA_DRIVER_CAPABILITIES=all
    deploy:
      resources:
        reservations:
          devices:
            - driver: nvidia
              capabilities: [ gpu ]

and disable (this is basically a no-op, but we found an empty file doesn't work):

version: '3'
services:
  dill:
    environment:
      - HAS_GPU=disable
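The setup_container.sh script itself is not shown in the thread, so the following is only a guess at its shape. `detect_gpu` and `select_gpu_overlay` are hypothetical names, and treating a working `nvidia-smi` as proof of a usable GPU is an assumption. The sketch also assumes the compose files live in the directory passed as the first argument.

```sh
#!/bin/sh
# Sketch only: point docker-compose.gpu.yaml at the enable or disable variant.

# Print "enable" if an NVIDIA GPU seems usable, otherwise "disable".
# Assumption: a working `nvidia-smi` on the host means a GPU is available.
detect_gpu() {
    if command -v nvidia-smi >/dev/null 2>&1 && nvidia-smi >/dev/null 2>&1; then
        echo enable
    else
        echo disable
    fi
}

# Usage: select_gpu_overlay DIR [enable|disable]
# Without a second argument, the result of detect_gpu is used.
select_gpu_overlay() {
    dir=$1
    has_gpu=${2:-$(detect_gpu)}
    # The link target is relative, so it resolves inside DIR itself.
    ln -sf "docker-compose.gpu-${has_gpu}.yaml" "$dir/docker-compose.gpu.yaml"
}
```

A script used as initializeCommand could then end with `select_gpu_overlay "$(dirname "$0")"`. The same pattern should extend to optional mounts by linking to a compose file that either declares the volume or is a near-empty placeholder.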