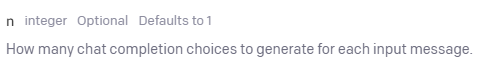

Support for chat completion choices parameter

The OpenAI API has a parameter `n` for the number of choices to be returned. Is it possible to add this parameter to SK?

At the moment, the ChatCompletionRequest class lacks it:

```csharp
public sealed class ChatCompletionRequest
{
    public string? Model { get; set; }

    public IList<Message> Messages { get; set; } = new List<Message>();

    public double? Temperature { get; set; }

    public double? TopP { get; set; }

    public double? PresencePenalty { get; set; }

    public double? FrequencyPenalty { get; set; }

    public object? Stop { get; set; }

    public int? MaxTokens { get; set; } = 256;
}
```

In addition, the GenerateMessageAsync method returns a single string value:

```csharp
public interface IChatCompletion
{
    public Task<string> GenerateMessageAsync(
        ChatHistory chat,
        ChatRequestSettings requestSettings,
        CancellationToken cancellationToken = default);
}
```
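To make the request concrete, here is a minimal sketch of how a request type could carry the parameter. The type name `MultiChoiceChatRequest` and the `N` property are hypothetical and not part of SK; only the `n` field name and its default of 1 come from the OpenAI API. The other fields are trimmed for brevity.

```csharp
using System;
using System.Text.Json;
using System.Text.Json.Serialization;

// Hypothetical request type for illustration only; not the actual SK class.
public sealed class MultiChoiceChatRequest
{
    [JsonPropertyName("model")]
    public string? Model { get; set; }

    [JsonPropertyName("max_tokens")]
    public int? MaxTokens { get; set; } = 256;

    // Number of choices to generate. Left out of the JSON when null,
    // in which case the OpenAI API falls back to its default of 1.
    [JsonPropertyName("n")]
    [JsonIgnore(Condition = JsonIgnoreCondition.WhenWritingNull)]
    public int? N { get; set; }
}

public static class Program
{
    public static void Main()
    {
        var request = new MultiChoiceChatRequest { Model = "gpt-3.5-turbo", N = 3 };

        // Prints the JSON payload, including "n":3.
        Console.WriteLine(JsonSerializer.Serialize(request));
    }
}
```

Keeping `N` nullable means existing callers that never set it would send the same payload as before.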

@dmitryPavliv we are considering exposing the full power of the underlying models, though it might be via a different set of interfaces. IChatCompletion and ITextCompletion are aimed at the critical scenarios like "I need a message, here's a string" and "I need to run a prompt, here's the result".

Returning a list (and all the other values, e.g. tokens, logprobs, streaming the result, etc.) means more code to handle the response, so we'll probably introduce a different set of ad-hoc methods.

I can't speak to the timeline yet, but it's high on the priority list.
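As a rough illustration of the "different set of methods" idea above, one possible shape is a companion interface that returns a list while IChatCompletion stays unchanged. Everything here is an assumption: the interface name, the method name, and the `resultCount` parameter are hypothetical, and the two empty classes are placeholders so the sketch compiles on its own.

```csharp
using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;

// Placeholder types so the sketch is self-contained; the real SK types differ.
public class ChatHistory { }
public class ChatRequestSettings { }

// Hypothetical companion interface; name and shape are assumptions, not SK API.
public interface IChatMultiCompletion
{
    // Returns up to resultCount candidate messages for the same chat history.
    Task<IReadOnlyList<string>> GenerateMessagesAsync(
        ChatHistory chat,
        ChatRequestSettings requestSettings,
        int resultCount,
        CancellationToken cancellationToken = default);
}
```

Keeping this separate would leave the simple "I need a message" path with a single string return, while callers who need every choice opt in to the list-handling code.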

Yes, I would really love to have multiple completions per prompt, which is already supported by the OpenAI and Azure OpenAI APIs. My use case is classifying and re-ranking multiple generations. Accomplishing this in a single API call instead of N calls would also drastically improve system performance. Is there any update on the timeline for this feature?
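To illustrate the re-ranking use case, here is a minimal sketch that orders the choices from one call by a caller-supplied score. The `ChoiceReRanker` helper, the sample strings, and the length-based scorer are all made up for the example; a real scorer would be a classifier or similar.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper; not part of SK.
public static class ChoiceReRanker
{
    // Orders candidates from highest to lowest score.
    public static IReadOnlyList<string> Rank(
        IEnumerable<string> choices, Func<string, double> score)
        => choices.OrderByDescending(score).ToList();
}

public static class Program
{
    public static void Main()
    {
        // Stand-in for the n choices returned by a single API call.
        var choices = new[] { "positive", "The sentiment is positive.", "pos" };

        // Toy scorer: shorter answers score higher.
        var ranked = ChoiceReRanker.Rank(choices, c => -c.Length);

        Console.WriteLine(ranked[0]); // prints "pos"
    }
}
```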