Added configs for OpenAI API.

Motivation and Context

- Semantic Kernel's OpenAIConfig class (in ai.open_ai.services.open_ai_config) was very limited in what it could configure.

- By adding the two new configs, users have an easy object to pass to their bots.

- In my particular case, and I suspect for others, I am initializing a chat bot with a config and some prompt text. I just pass these new objects in instead of tons of parameters.

Description

I needed to pass in API options such as model name, API key, temperature, etc., and I figured I would look to see if you guys had a config system already. You did, but it only had one config, OpenAIConfig, which could only store model_id, api_key, and org_id. I needed something with access to the full set of OpenAI API parameters, so I created two new ones: one for Chat Completions and one for generic Completions.

ChatCompletionConfig inherits from OpenAIConfig, and CompletionConfig inherits from ChatCompletionConfig because it is exactly the same but with 3 extra parameters. I followed the existing pattern as closely as possible. Additionally, I added the documentation for each parameter directly from the OpenAI API documentation.

There is no real logic, just objects that hold config options. I figured if I was going to make one anyway, I might as well add it to the repo.
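As a rough sketch of the hierarchy described above (not the code in this PR): the field names below are assumptions modeled on OpenAI API parameter names, the OpenAIConfig here is a simplified stand-in, and the choice of which three parameters are completion-only is a guess for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class OpenAIConfig:
    """Simplified stand-in for the existing config: model and account only."""
    model_id: str
    api_key: str
    org_id: Optional[str] = None


@dataclass
class ChatCompletionConfig(OpenAIConfig):
    """Adds sampling options (illustrative subset, hypothetical field names)."""
    temperature: float = 1.0
    top_p: float = 1.0
    n: int = 1
    max_tokens: Optional[int] = None
    presence_penalty: float = 0.0
    frequency_penalty: float = 0.0
    stop: List[str] = field(default_factory=list)
    logit_bias: Dict[str, float] = field(default_factory=dict)


@dataclass
class CompletionConfig(ChatCompletionConfig):
    """Adds three completion-only options (which three is an assumption)."""
    logprobs: Optional[int] = None
    echo: bool = False
    best_of: int = 1


# One object carries everything a bot needs instead of a long parameter list.
config = ChatCompletionConfig(
    model_id="gpt-3.5-turbo", api_key="sk-...", temperature=0.7
)
```

Because each class only adds fields, anything that accepts an OpenAIConfig keeps working when handed one of the richer configs.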

Contribution Checklist

- [x] The code builds clean without any errors or warnings

- [ ] The PR follows SK Contribution Guidelines (https://github.com/microsoft/semantic-kernel/blob/main/CONTRIBUTING.md)

- No test for it, there wasn't really a pattern for config tests. I can do this if you want.

- Did not warn you, my bad. I plan on adding quite a bit more functionality to the python branch so I will do this from now on.

- [x] All unit tests pass, and I have added new tests where possible.

- [x] I didn't break anyone :smile:

- Only inherits and makes new objects. Does not alter existing objects.

@microsoft-github-policy-service agree company="Asteres Technologies LLC"

Thank you @Codie-Petersen for this PR! We're actually introducing the ChatCompletions endpoint for Python in this PR: https://github.com/microsoft/semantic-kernel/pull/121

Can we merge what you're doing here with that one?

This is awesome! It'd be great to support more of these parameters --- I know, personally, I'd love to see support for multiple completions from the model and support for returning logits.

One issue, right now, is that SK is very much using a "text in" and "text out" model. This makes sense, text is the universal wire protocol :) However, it does create an issue when using this expanded set of parameters. If I set config.logits = 2, I now have a "text in" and "text + tokens + logits" out situation, and our function contracts aren't quite set up to support that as of now. Similarly, if I set config.n = 10, I now have "text in" and "array of 10 text completions" out.

@jjhenkel I see what you are saying. One solution to that problem is we could implement a callable to handle responses. This way we can force the response down to raw text again even if the user wants a bunch of extra info. So long as they follow the callable pattern, they could create their own custom function to handle response objects. I'm not super familiar with the code yet, but that's the idea off the top of my head, use something like a C# delegate for callbacks.
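To make the callable idea concrete, here is a minimal sketch. Nothing in it is SK's API: ResponseHandler, first_choice_text, join_choices, and complete are hypothetical names, and the response dict is a simplified imitation of a completions result with n=2.

```python
from typing import Any, Callable, Dict

# Hypothetical alias: takes the raw API response and returns plain text.
ResponseHandler = Callable[[Dict[str, Any]], str]


def first_choice_text(response: Dict[str, Any]) -> str:
    """Default handler: keep only the first completion's text."""
    return response["choices"][0]["text"]


def join_choices(response: Dict[str, Any]) -> str:
    """Custom handler: collapse all completions into one numbered string."""
    return "\n".join(
        f"{i + 1}. {choice['text']}"
        for i, choice in enumerate(response["choices"])
    )


def complete(
    response: Dict[str, Any], handler: ResponseHandler = first_choice_text
) -> str:
    """Stand-in for a backend call: whatever came back, the caller gets a str."""
    return handler(response)


# Simplified fake response, shaped like a completions result with n=2.
fake_response = {"choices": [{"text": "Hello"}, {"text": "Hi there"}]}

print(complete(fake_response))                # uses the default handler
print(complete(fake_response, join_choices))  # uses the custom handler
```

The "text in, text out" contract stays intact because the handler always returns a string; users who want the extra data decide how to flatten it.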

@alexchaomander Knowing some of @jjhenkel's concerns about breaking the text out pattern, do you still want this moved in #121? My thinking is maybe it will encourage people to use the full set, but they don't know it will break their pipeline. Up to you guys.

So from the core repo side, we will likely rewrite the whole model backend code so as to be better designed to handle multiple models and multi-modal inputs/outputs. @dluc and @dmytrostruk are handling this effort.

Since that'll probably change the Python code patterns as well, I'd say let's not try to solve this issue yet here.

If you're okay with that @Codie-Petersen, we can consolidate the work on #121 and bring up ChatGPT support so as to unblock the people who want to use that model in Python now.

@alexchaomander Sounds good. I think then in that case, @jjhenkel can use this command:

git pull https://github.com/Asteres-Digital-Engineering/semantic-kernel.git asteres-technologies/python-upgrades

to pull in my commit. It was only the one commit plus dluc's init commit, so I don't think there will be any merge conflicts.

Unless there is a different way you want it done, but to be honest, that's the only way I've done it.

One last request here if we're going to move forward with adding more parameters (ignoring the issue of some things like n and logits not really being possible right now based on contracts) --- @Codie-Petersen do you have an example of using these new config classes?

Based on my understanding, as of now we'd need to do some additional plumbing to actually use these new, more complete config classes --- like making it easy to configure a Kernel instance with a backend that has this more complete configuration object. I could be missing something here though; maybe there's an easier way to use the parameters in these new classes without additional plumbing.

For example, we'd probably also need changes here and here and a handful of other places to actually use the new configs.

I think, in the end, we may be better off just waiting for the redesign around multi-modal inputs/outputs. As a stopgap, I could go in and add any missing parameters that would have an effect if you have specific needs. (Like, say, custom logit biases for specific tokens.)

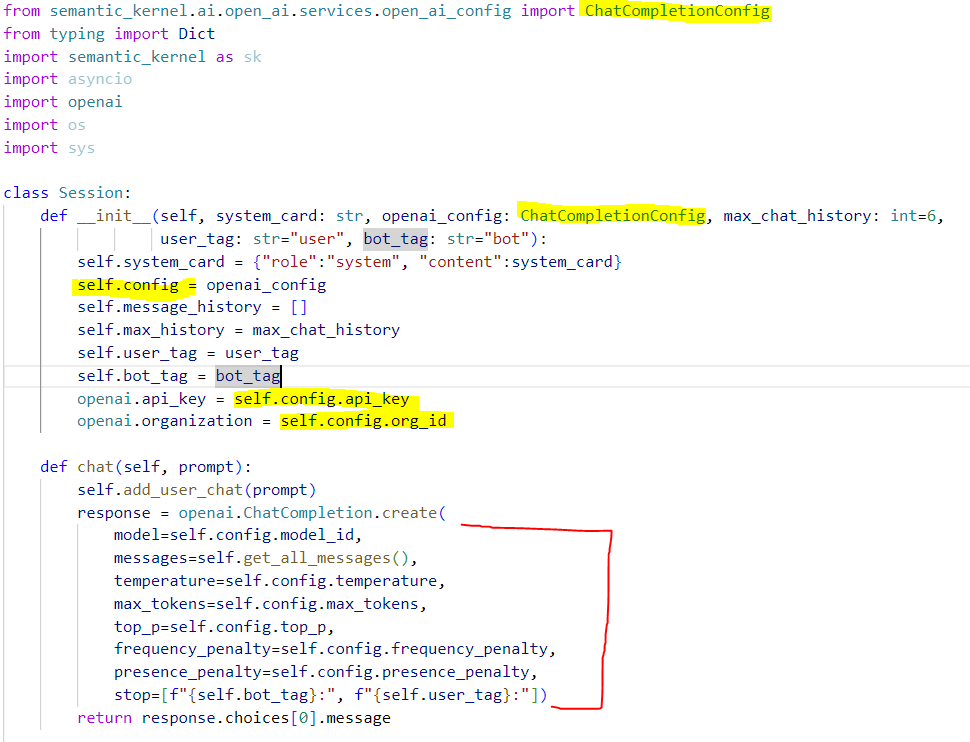

@jjhenkel Yeah, I was working on a bot last night. We have a lead generator on our website. It's pretty brittle with the single system card prompt. So I'm redoing it a bit right now to be more robust and helpful. Just started refactoring it last night. It's brand spanking new though, only about a week or so of development. I was going to make a class to handle all of the parameters, so I decided might as well contribute.

Ahh I see, this is helpful thank you! The good news is, that w/ the Chat API PR I opened today, you should be able to cover this use case (even without the extended parameters classes, all the params you use we support).

Here's an example of what things will look like once that PR is merged:

```python
import asyncio

import semantic_kernel as sk

system_message = """
Whatever system message you want to start with
"""

kernel = sk.create_kernel()

api_key, org_id = sk.openai_settings_from_dot_env()
kernel.config.add_openai_chat_backend("chat-gpt", "gpt-3.5-turbo", api_key, org_id)

prompt_config = sk.PromptTemplateConfig.from_completion_parameters(
    max_tokens=2000,
    temperature=0.7,
    top_p=0.8,
    presence_penalty=0.01,
    frequency_penalty=0.01,
    stop_sequences=["BotTag:", "UserTag:"]
)

prompt_template = sk.ChatPromptTemplate(
    "{{$user_input}}", kernel.prompt_template_engine, prompt_config
)

prompt_template.add_system_message(system_message)
prompt_template.add_user_message("Hi there, who are you?")
prompt_template.add_assistant_message("I'm a helpful chat bot.")

function_config = sk.SemanticFunctionConfig(prompt_config, prompt_template)
chat_function = kernel.register_semantic_function("ChatBot", "Chat", function_config)


async def chat() -> bool:
    context = sk.ContextVariables()

    try:
        user_input = input("User:> ")
        context["user_input"] = user_input
    except KeyboardInterrupt:
        print("\n\nExiting chat...")
        return False
    except EOFError:
        print("\n\nExiting chat...")
        return False

    if user_input == "exit":
        print("\n\nExiting chat...")
        return False

    answer = await kernel.run_on_vars_async(context, chat_function)
    print(f"ChatBot:> {answer}")
    return True


async def main() -> None:
    chatting = True
    while chatting:
        chatting = await chat()


if __name__ == "__main__":
    asyncio.run(main())
```

@jjhenkel I'll close out this request as it seems you guys are probably going to rewrite a bunch of this anyways. I'll just keep some custom code on my side and when you guys have a solid architecture you want to roll with I'll pull the changes and refactor my stuff. Won't be hard, it's pretty modular as it is.

@Codie-Petersen sounds good! Thanks for the discussion and examples --- do check out the Chat support when it gets merged, I think it covers your use case well (and shouldn't require too much custom code from your side). Happy hacking!

@jjhenkel Sounds good man, I might actually just refactor it tonight to use your changes. I'll keep an eye out for issues or bugs.

@Codie-Petersen @jjhenkel Thank you so much for the great discussion!