onnxruntime

engine decryption does not work in TensorRT EP

Describe the bug

I want to encrypt the TensorRT engine in the cache, so I set tensorrt_options->trt_engine_decryption_enable=true and trt_engine_decryption_lib_path. However, the program always deserializes from the unencrypted TensorRT engine; trt_engine_decryption_enable has no effect.

After I deleted the unencrypted TensorRT engine, the program entered the decryption function, but it did not find the file on disk.

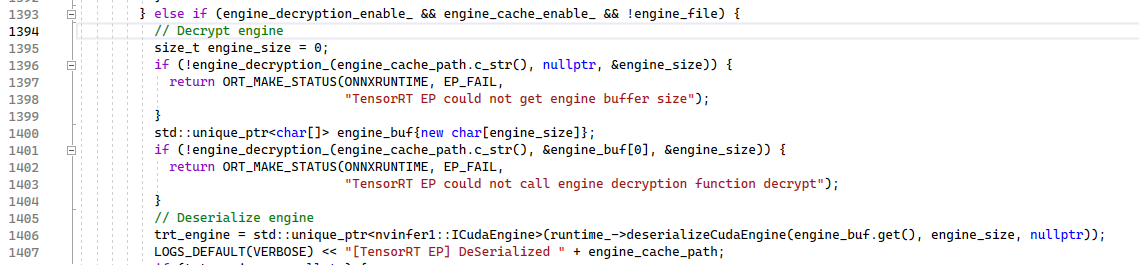

I have a question after reading the following source code: why is the file path of the encrypted engine the same as that of the unencrypted engine? The engine_decryption_ function is never entered when the engine_cache_path is valid.

Urgency none

System information

- OS Platform and Distribution ( Linux Ubuntu 16.04):

- ONNX Runtime installed from (source ):

- ONNX Runtime version: 1.12.1

- Python version:

- Visual Studio version (if applicable):

- GCC/Compiler version (5.4):

- CUDA/cuDNN version:8.4.1

- GPU model and memory:

Expected behavior

I want to know why the file paths of the unencrypted TensorRT engine and the encrypted TensorRT engine are the same, and how to encrypt a TensorRT engine.

engine_cache_path is the path for all TRT engines. If you enable trt_engine_decryption_enable, you should delete all unencrypted engines previously created in the engine cache directory and create an encrypted engine by providing your encryption library in trt_engine_decryption_lib_path. Once the encrypted engine is created, the next time you run the model, the encrypted engine will be decrypted and loaded for inference.

After I deleted the unencrypted TensorRT engine, onnxruntime had not yet created an encrypted engine but still entered the engine_decryption_ function, as shown in the picture above, and then it never found the file in the engine cache directory.

I see the same behavior as described by @luojung

@stevenlix I am also seeing the issue described by @luojung. I looked into the implementation in tensorrt_execution_provider.cc and it seems it probably never worked. Pseudocode (current implementation):

    if (engine_cache_enable && engine_file)
        deserialize(engine_file)  // <-- If engine_decryption_enable==true, this fails
    else if (engine_decryption_enable && engine_cache_enable && !engine_file)
        decrypted_engine_file = decrypt(engine_file)  // <-- There is no engine cache file yet, so this fails
        deserialize(decrypted_engine_file)
    else
        // build the engine
        ...

It seems to me it should look more like this:

    if (engine_cache_enable && engine_file)
        if (engine_decryption_enable)
            decrypted_engine_file = decrypt(engine_file)
            deserialize(decrypted_engine_file)
        else
            deserialize(engine_file)
    else
        // build the engine
        ...
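The restructured flow proposed above can be sketched as a small, self-contained C++ function. This is only an illustration: `CacheState`, `EngineSource`, and `SelectEngineSource` are hypothetical names, not identifiers from tensorrt_execution_provider.cc, and the function only reports which branch would run instead of calling TensorRT.

```cpp
#include <cassert>

// Hypothetical stand-in for the provider's flags; not the real ORT state.
struct CacheState {
  bool engine_cache_enable;
  bool engine_decryption_enable;
  bool engine_file_exists;
};

// Which branch of the proposed flow would be taken.
enum class EngineSource { kDecryptedCache, kPlainCache, kFreshBuild };

EngineSource SelectEngineSource(const CacheState& s) {
  if (s.engine_cache_enable && s.engine_file_exists) {
    // A cached file exists: the decryption flag alone decides how to load it.
    return s.engine_decryption_enable ? EngineSource::kDecryptedCache
                                      : EngineSource::kPlainCache;
  }
  // No usable cache file: build the engine from scratch.
  return EngineSource::kFreshBuild;
}
```

With this shape, enabling decryption on an empty cache directory falls through to the build branch instead of trying to decrypt a file that does not exist yet, which is the failure described earlier in the thread.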

I built onnxruntime 1.13.1 locally with these changes and it seems to fix the issue for me. Seems the current code still has the same structure/issue though.

I think you are right.

For non-dynamic shapes, there is code that decrypts an engine:

But it is missing from the block that loads a serialized engine:

I didn't find any example of an encryption lib. This is my primitive POC. It adds an "encrypted_" prefix during encryption and removes it on decryption:

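    #include <cstddef>
    #include <fstream>
    #include <string>

    extern "C" {

    // When engine_buf is nullptr, only the payload size is reported through engine_size.
    __declspec(dllexport) int decrypt(const char* engine_cache_path, char* engine_buf, size_t* engine_size) {
        std::ifstream engine_file(engine_cache_path, std::ios::binary | std::ios::in);
        if (!engine_file) return 0;  // file missing or unreadable (assumes 0 signals failure to the caller)

        // Remove prefix
        const std::string prefix = "encrypted_";
        engine_file.seekg(0, std::ios::end);
        *engine_size = (size_t)engine_file.tellg() - prefix.length();
        if (engine_buf != nullptr)
        {
            engine_file.seekg(prefix.length(), std::ios::beg);
            engine_file.read(engine_buf, *engine_size);
        }
        return 1;
    }

    // Writes the prefix followed by the raw engine bytes.
    __declspec(dllexport) int encrypt(const char* engine_cache_path, char* engine_buf, size_t engine_size) {
        std::ofstream engine_file(engine_cache_path, std::ios::binary | std::ios::out);
        if (!engine_file) return 0;  // cannot create the cache file (assumes 0 signals failure to the caller)

        const std::string prefix = "encrypted_";
        engine_file.write(prefix.c_str(), prefix.length());
        engine_file.write(engine_buf, engine_size);
        return 1;
    }

    }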

Another note: the "trt_engine_decryption_lib_path" parameter has to point to a DLL, i.e. the code has to live in a dynamic library.
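To make the calling convention implied by the POC easier to follow, here is a portable round-trip sketch. It is an assumption drawn from the POC's `engine_buf != nullptr` check that the caller invokes decrypt twice: once with a null buffer to learn the size, then again to fill the buffer. The names `poc_encrypt`, `poc_decrypt`, and `RoundTrip` are hypothetical and chosen so they do not clash with system symbols; they are not the names the TensorRT EP looks up.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdio>
#include <fstream>
#include <string>
#include <vector>

namespace {
const std::string kPrefix = "encrypted_";
}

// Same parameter list as the POC's encrypt; returns 1 on success, 0 on failure.
int poc_encrypt(const char* path, char* buf, std::size_t size) {
  std::ofstream out(path, std::ios::binary);
  if (!out) return 0;
  out.write(kPrefix.data(), static_cast<std::streamsize>(kPrefix.size()));
  out.write(buf, static_cast<std::streamsize>(size));
  return out.good() ? 1 : 0;
}

// Same parameter list as the POC's decrypt; a null buf means "report size only".
int poc_decrypt(const char* path, char* buf, std::size_t* size) {
  std::ifstream in(path, std::ios::binary);
  if (!in) return 0;
  in.seekg(0, std::ios::end);
  const std::streamoff total = in.tellg();
  if (total < static_cast<std::streamoff>(kPrefix.size())) return 0;
  *size = static_cast<std::size_t>(total) - kPrefix.size();
  if (buf != nullptr) {
    in.seekg(static_cast<std::streamoff>(kPrefix.size()), std::ios::beg);
    in.read(buf, static_cast<std::streamsize>(*size));
    if (!in) return 0;
  }
  return 1;
}

// Caller side: write the payload, query the size, then read it back.
bool RoundTrip(const std::string& path, const std::string& payload, std::string* out) {
  std::vector<char> plain(payload.begin(), payload.end());
  if (!poc_encrypt(path.c_str(), plain.data(), plain.size())) return false;
  std::size_t size = 0;
  if (!poc_decrypt(path.c_str(), nullptr, &size)) return false;  // pass 1: size
  std::vector<char> buf(size);
  if (!poc_decrypt(path.c_str(), buf.data(), &size)) return false;  // pass 2: data
  out->assign(buf.begin(), buf.end());
  std::remove(path.c_str());  // clean up the temporary file
  return true;
}
```

Calling `RoundTrip("engine.bin", "engine-bytes", &out)` should leave `out` equal to the original payload. A real library would replace the prefix trick with an actual cipher while keeping the same two-pass shape.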

@AndreyOrb I actually have a working fix for this issue but never took the time to create a pull request for it. I had a quick look at yours and it's missing some parts to have a full working solution. I am not sure what's the best way forward: should I create a new pull request or is there a way I can add to yours?

I can try adding the missing parts. Could you share it here or refer me to a commit in your forked repo?

I need to adapt my fix, the main branch evolved since 1.13.1. I'll commit and share once it's tested on my end.

Sorry for the mess with the commits, I'm not used to GitHub Desktop. It was easier to create a new pull request. Ignore the first commit showing here; it included an unneeded change to onnxruntime.cmake that I did not want to commit. edit: adding @AndreyOrb

https://github.com/yangxianpku/model_cryptor

    from enum import Enum, unique

    @unique
    class ModelType(Enum):
        MODEL_TORCH = "TorchModelCryptor"  # torch model
        MODEL_TORCHSCRIPT = "TorchScriptModelCryptor"  # TorchScript model, saved via torch.jit.script or torch.jit.trace
        MODEL_ONNX = "ONNXModelCryptor"  # onnx model
        MODEL_TENSORRT = "TensorRTModelCryptor"  # tensorrt model
        MODEL_TORCH2TRT = "Torch2TRTModelCryptor"  # torch2trt model
        MODEL_TENSORFLOW = "TensorFlowModelCryptor"  # tensorflow model
        MODEL_TF2TRT = "TF2TRTModelCryptor"  # tensorflow-to-tensorrt model
        MODEL_PADDLE = "PaddleModelCryptor"  # paddlepaddle model
        MODEL_PADDLE2TRT = "Paddle2TRTModelCryptor"  # paddle-to-tensorrt model

As I understand this thread, there is now support for engine encryption, but as far as I can tell there is no documentation on how to write an encryption DLL: what its functions should be named, what parameters they take, and what their semantics are.

Also if it works, why isn't this issue closed?

Good question. I think there is still a flow that is not handled. I can check it this week. Regarding the documentation, you are right, no information/examples.

Applying stale label due to no activity in 30 days