SynapseML

Training a LightGBMRegressor model with SynapseML raises exceptions

SynapseML version

0.10.2

System information

- Language version (e.g. python 3.9, scala 2.12):

- Spark Version (e.g. 3.2.3):

- Spark Platform (e.g. Synapse): yarn

Describe the problem

Training data size: 55G

Number of training samples: 40,000,000

Spark conf:

--deploy-mode cluster \
--master yarn \
--num-executors 400 \
--executor-cores 1 \
--executor-memory 3G \
--driver-memory 32G \
--queue baize-ugrowth \
--packages com.microsoft.azure:synapseml_2.12:0.10.0-6-4868e8bf-SNAPSHOT \
--repositories https://mmlspark.azureedge.net/maven \
--conf spark.driver.maxResultSize=20G \
--conf spark.speculation=true \
--conf spark.speculation.interval=100 \
--conf spark.speculation.multiplier=1.5 \
--conf spark.speculation.quantile=0.75 \
--conf spark.default.parallelism=4000 \
--conf spark.sql.shuffle.partitions=4000 \
--conf spark.yarn.tags=peta-agent_available \
--conf spark.kryo.referenceTracking=True \
--conf spark.rpc.askTimeout=3600s \
--conf spark.executor.pyspark.memory=3G \

1. Exception One (useBarrierExecutionMode is not enabled):

1.1 training params:

LightGBMRegressor(maxDepth=3, numIterations=100, labelCol=Y, featuresCol="features", numTasks=100)

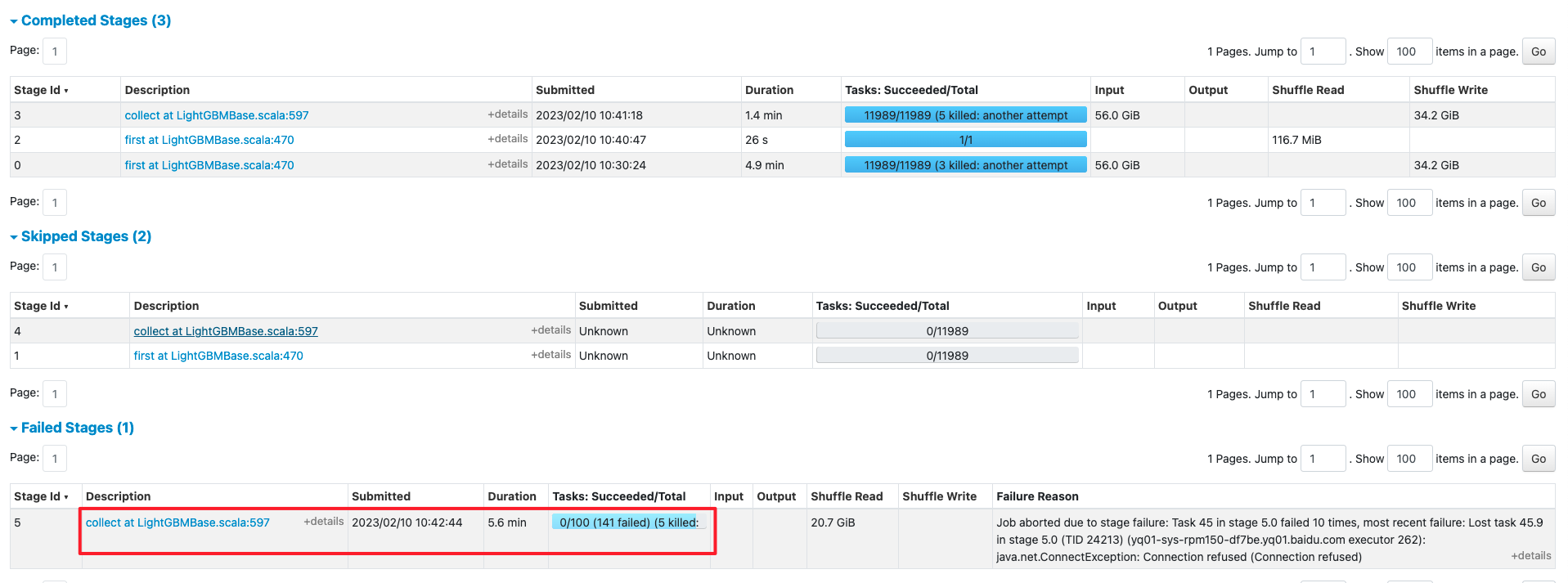

1.2 yarn display:

1.3 error log detail:

2023-02-10 10:48:19 [task-result-getter-3] ERROR [TaskSetManager:73]: Task 45 in stage 5.0 failed 10 times; aborting job

2023-02-10 10:48:20 [Thread-6] ERROR [LightGBMRegressor:94]: {"uid":"LightGBMRegressor_46bbdd8e51ed","className":"class com.microsoft.azure.synapse.ml.lightgbm.LightGBMRegressor","method":"train","buildVersion":"0.10.0-6-4868e8bf-SNAPSHOT"}

org.apache.spark.SparkException: Job aborted due to stage failure: Task 45 in stage 5.0 failed 10 times, most recent failure: Lost task 45.9 in stage 5.0 (TID 24213) (yq01-sys-rpm150-df7be.yq01.baidu.com executor 262): java.net.ConnectException: Connection refused (Connection refused)

at java.net.PlainSocketImpl.socketConnect(Native Method)

at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:350)

at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:206)

at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:188)

at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392)

at java.net.Socket.connect(Socket.java:607)

at java.net.Socket.connect(Socket.java:556)

at java.net.Socket.<init>(Socket.java:452)

at java.net.Socket.<init>(Socket.java:229)

at com.microsoft.azure.synapse.ml.lightgbm.NetworkManager$.getNetworkTopologyInfoFromDriver(NetworkManager.scala:129)

at com.microsoft.azure.synapse.ml.lightgbm.NetworkManager$.$anonfun$getGlobalNetworkInfo$2(NetworkManager.scala:116)

at com.microsoft.azure.synapse.ml.core.utils.FaultToleranceUtils$.retryWithTimeout(FaultToleranceUtils.scala:24)

at com.microsoft.azure.synapse.ml.core.utils.FaultToleranceUtils$.retryWithTimeout(FaultToleranceUtils.scala:29)

at com.microsoft.azure.synapse.ml.core.utils.FaultToleranceUtils$.retryWithTimeout(FaultToleranceUtils.scala:29)

at com.microsoft.azure.synapse.ml.core.utils.FaultToleranceUtils$.retryWithTimeout(FaultToleranceUtils.scala:29)

at com.microsoft.azure.synapse.ml.core.utils.FaultToleranceUtils$.retryWithTimeout(FaultToleranceUtils.scala:29)

at com.microsoft.azure.synapse.ml.lightgbm.NetworkManager$.$anonfun$getGlobalNetworkInfo$1(NetworkManager.scala:111)

at com.microsoft.azure.synapse.ml.core.env.StreamUtilities$.using(StreamUtilities.scala:28)

at com.microsoft.azure.synapse.ml.lightgbm.NetworkManager$.getGlobalNetworkInfo(NetworkManager.scala:107)

at com.microsoft.azure.synapse.ml.lightgbm.BasePartitionTask.initialize(BasePartitionTask.scala:179)

at com.microsoft.azure.synapse.ml.lightgbm.BasePartitionTask.mapPartitionTask(BasePartitionTask.scala:114)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMBase.$anonfun$executePartitionTasks$1(LightGBMBase.scala:589)

at org.apache.spark.sql.execution.MapPartitionsExec.$anonfun$doExecute$3(objects.scala:201)

at org.apache.spark.rdd.RDD.$anonfun$mapPartitionsInternal$2(RDD.scala:898)

at org.apache.spark.rdd.RDD.$anonfun$mapPartitionsInternal$2$adapted(RDD.scala:898)

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:52)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:373)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:337)

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:52)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:373)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:337)

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:52)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:373)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:337)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)

at org.apache.spark.scheduler.Task.run(Task.scala:131)

at org.apache.spark.executor.Executor$TaskRunner.$anonfun$run$3(Executor.scala:510)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1520)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:513)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Driver stacktrace:

at org.apache.spark.scheduler.DAGScheduler.failJobAndIndependentStages(DAGScheduler.scala:2503)

at org.apache.spark.scheduler.DAGScheduler.$anonfun$abortStage$2(DAGScheduler.scala:2452)

at org.apache.spark.scheduler.DAGScheduler.$anonfun$abortStage$2$adapted(DAGScheduler.scala:2451)

at scala.collection.mutable.ResizableArray.foreach(ResizableArray.scala:62)

at scala.collection.mutable.ResizableArray.foreach$(ResizableArray.scala:55)

at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:49)

at org.apache.spark.scheduler.DAGScheduler.abortStage(DAGScheduler.scala:2451)

at org.apache.spark.scheduler.DAGScheduler.$anonfun$handleTaskSetFailed$1(DAGScheduler.scala:1161)

at org.apache.spark.scheduler.DAGScheduler.$anonfun$handleTaskSetFailed$1$adapted(DAGScheduler.scala:1161)

at scala.Option.foreach(Option.scala:407)

at org.apache.spark.scheduler.DAGScheduler.handleTaskSetFailed(DAGScheduler.scala:1161)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.doOnReceive(DAGScheduler.scala:2691)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2633)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2622)

at org.apache.spark.util.EventLoop$$anon$1.run(EventLoop.scala:49)

at org.apache.spark.scheduler.DAGScheduler.runJob(DAGScheduler.scala:939)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2230)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2251)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2270)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2295)

at org.apache.spark.rdd.RDD.$anonfun$collect$1(RDD.scala:1030)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:112)

at org.apache.spark.rdd.RDD.withScope(RDD.scala:414)

at org.apache.spark.rdd.RDD.collect(RDD.scala:1029)

at org.apache.spark.sql.execution.SparkPlan.executeCollect(SparkPlan.scala:410)

at org.apache.spark.sql.execution.adaptive.AdaptiveSparkPlanExec.$anonfun$executeCollect$1(AdaptiveSparkPlanExec.scala:340)

at org.apache.spark.sql.execution.adaptive.AdaptiveSparkPlanExec.withFinalPlanUpdate(AdaptiveSparkPlanExec.scala:368)

at org.apache.spark.sql.execution.adaptive.AdaptiveSparkPlanExec.executeCollect(AdaptiveSparkPlanExec.scala:340)

at org.apache.spark.sql.Dataset.collectFromPlan(Dataset.scala:3715)

at org.apache.spark.sql.Dataset.$anonfun$collect$1(Dataset.scala:2971)

at org.apache.spark.sql.Dataset.$anonfun$withAction$1(Dataset.scala:3706)

at org.apache.spark.sql.execution.SQLExecution$.$anonfun$withNewExecutionId$5(SQLExecution.scala:103)

at org.apache.spark.sql.execution.SQLExecution$.withSQLConfPropagated(SQLExecution.scala:163)

at org.apache.spark.sql.execution.SQLExecution$.$anonfun$withNewExecutionId$1(SQLExecution.scala:90)

at org.apache.spark.sql.SparkSession.withActive(SparkSession.scala:775)

at org.apache.spark.sql.execution.SQLExecution$.withNewExecutionId(SQLExecution.scala:64)

at org.apache.spark.sql.Dataset.withAction(Dataset.scala:3704)

at org.apache.spark.sql.Dataset.collect(Dataset.scala:2971)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMBase.executePartitionTasks(LightGBMBase.scala:597)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMBase.executePartitionTasks$(LightGBMBase.scala:583)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMRegressor.executePartitionTasks(LightGBMRegressor.scala:39)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMBase.executeTraining(LightGBMBase.scala:573)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMBase.executeTraining$(LightGBMBase.scala:545)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMRegressor.executeTraining(LightGBMRegressor.scala:39)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMBase.trainOneDataBatch(LightGBMBase.scala:435)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMBase.trainOneDataBatch$(LightGBMBase.scala:392)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMRegressor.trainOneDataBatch(LightGBMRegressor.scala:39)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMBase.$anonfun$train$2(LightGBMBase.scala:61)

at com.microsoft.azure.synapse.ml.logging.BasicLogging.logVerb(BasicLogging.scala:62)

at com.microsoft.azure.synapse.ml.logging.BasicLogging.logVerb$(BasicLogging.scala:59)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMRegressor.logVerb(LightGBMRegressor.scala:39)

at com.microsoft.azure.synapse.ml.logging.BasicLogging.logTrain(BasicLogging.scala:48)

at com.microsoft.azure.synapse.ml.logging.BasicLogging.logTrain$(BasicLogging.scala:47)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMRegressor.logTrain(LightGBMRegressor.scala:39)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMBase.train(LightGBMBase.scala:42)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMBase.train$(LightGBMBase.scala:35)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMRegressor.train(LightGBMRegressor.scala:39)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMRegressor.train(LightGBMRegressor.scala:39)

at org.apache.spark.ml.Predictor.fit(Predictor.scala:151)

at org.apache.spark.ml.Predictor.fit(Predictor.scala:115)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:244)

at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:357)

at py4j.Gateway.invoke(Gateway.java:282)

at py4j.commands.AbstractCommand.invokeMethod(AbstractCommand.java:132)

at py4j.commands.CallCommand.execute(CallCommand.java:79)

at py4j.ClientServerConnection.waitForCommands(ClientServerConnection.java:182)

at py4j.ClientServerConnection.run(ClientServerConnection.java:106)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.net.ConnectException: Connection refused (Connection refused)

at java.net.PlainSocketImpl.socketConnect(Native Method)

at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:350)

at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:206)

at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:188)

at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392)

at java.net.Socket.connect(Socket.java:607)

at java.net.Socket.connect(Socket.java:556)

at java.net.Socket.<init>(Socket.java:452)

at java.net.Socket.<init>(Socket.java:229)

at com.microsoft.azure.synapse.ml.lightgbm.NetworkManager$.getNetworkTopologyInfoFromDriver(NetworkManager.scala:129)

at com.microsoft.azure.synapse.ml.lightgbm.NetworkManager$.$anonfun$getGlobalNetworkInfo$2(NetworkManager.scala:116)

at com.microsoft.azure.synapse.ml.core.utils.FaultToleranceUtils$.retryWithTimeout(FaultToleranceUtils.scala:24)

at com.microsoft.azure.synapse.ml.core.utils.FaultToleranceUtils$.retryWithTimeout(FaultToleranceUtils.scala:29)

at com.microsoft.azure.synapse.ml.core.utils.FaultToleranceUtils$.retryWithTimeout(FaultToleranceUtils.scala:29)

at com.microsoft.azure.synapse.ml.core.utils.FaultToleranceUtils$.retryWithTimeout(FaultToleranceUtils.scala:29)

at com.microsoft.azure.synapse.ml.core.utils.FaultToleranceUtils$.retryWithTimeout(FaultToleranceUtils.scala:29)

at com.microsoft.azure.synapse.ml.lightgbm.NetworkManager$.$anonfun$getGlobalNetworkInfo$1(NetworkManager.scala:111)

at com.microsoft.azure.synapse.ml.core.env.StreamUtilities$.using(StreamUtilities.scala:28)

at com.microsoft.azure.synapse.ml.lightgbm.NetworkManager$.getGlobalNetworkInfo(NetworkManager.scala:107)

at com.microsoft.azure.synapse.ml.lightgbm.BasePartitionTask.initialize(BasePartitionTask.scala:179)

at com.microsoft.azure.synapse.ml.lightgbm.BasePartitionTask.mapPartitionTask(BasePartitionTask.scala:114)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMBase.$anonfun$executePartitionTasks$1(LightGBMBase.scala:589)

at org.apache.spark.sql.execution.MapPartitionsExec.$anonfun$doExecute$3(objects.scala:201)

at org.apache.spark.rdd.RDD.$anonfun$mapPartitionsInternal$2(RDD.scala:898)

at org.apache.spark.rdd.RDD.$anonfun$mapPartitionsInternal$2$adapted(RDD.scala:898)

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:52)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:373)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:337)

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:52)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:373)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:337)

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:52)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:373)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:337)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)

at org.apache.spark.scheduler.Task.run(Task.scala:131)

at org.apache.spark.executor.Executor$TaskRunner.$anonfun$run$3(Executor.scala:510)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1520)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:513)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

... 1 more

2023-02-10 10:48:21 [Driver] ERROR [ApplicationMaster:73]: User application exited with status 1

2. Exception Two (useBarrierExecutionMode is enabled):

2.1 training params:

LightGBMRegressor(maxDepth=3, numIterations=100, labelCol=Y, featuresCol="features", numTasks=100).setUseBarrierExecutionMode(True)

2.2 yarn display:

2.3 error log detail:

2023-02-09 15:45:46 [Thread-6] ERROR [LightGBMRegressor:94]: {"uid":"LightGBMRegressor_43bc1cc78f91","className":"class com.microsoft.azure.synapse.ml.lightgbm.LightGBMRegressor","method":"train","buildVersion":"0.10.0-6-4868e8bf-SNAPSHOT"}

org.apache.spark.SparkException: Job aborted due to stage failure: Could not recover from a failed barrier ResultStage. Most recent failure reason: Stage failed because barrier task ResultTask(12, 39) finished unsuccessfully.

java.lang.Exception: Network init call failed in LightGBM with error: Binding port 12792 failed

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMUtils$.validate(LightGBMUtils.scala:18)

at com.microsoft.azure.synapse.ml.lightgbm.NetworkManager$.initLightGBMNetwork(NetworkManager.scala:192)

at com.microsoft.azure.synapse.ml.lightgbm.NetworkManager$.initLightGBMNetwork(NetworkManager.scala:203)

at com.microsoft.azure.synapse.ml.lightgbm.NetworkManager$.initLightGBMNetwork(NetworkManager.scala:203)

at com.microsoft.azure.synapse.ml.lightgbm.NetworkManager$.initLightGBMNetwork(NetworkManager.scala:203)

at com.microsoft.azure.synapse.ml.lightgbm.BasePartitionTask.mapPartitionTask(BasePartitionTask.scala:126)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMBase.$anonfun$executePartitionTasks$1(LightGBMBase.scala:589)

at org.apache.spark.rdd.RDDBarrier.$anonfun$mapPartitions$2(RDDBarrier.scala:51)

at org.apache.spark.rdd.RDDBarrier.$anonfun$mapPartitions$2$adapted(RDDBarrier.scala:51)

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:52)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:373)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:337)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)

at org.apache.spark.scheduler.Task.run(Task.scala:131)

at org.apache.spark.executor.Executor$TaskRunner.$anonfun$run$3(Executor.scala:510)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1520)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:513)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

at org.apache.spark.scheduler.DAGScheduler.failJobAndIndependentStages(DAGScheduler.scala:2503)

at org.apache.spark.scheduler.DAGScheduler.$anonfun$abortStage$2(DAGScheduler.scala:2452)

at org.apache.spark.scheduler.DAGScheduler.$anonfun$abortStage$2$adapted(DAGScheduler.scala:2451)

at scala.collection.mutable.ResizableArray.foreach(ResizableArray.scala:62)

at scala.collection.mutable.ResizableArray.foreach$(ResizableArray.scala:55)

at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:49)

at org.apache.spark.scheduler.DAGScheduler.abortStage(DAGScheduler.scala:2451)

at org.apache.spark.scheduler.DAGScheduler.handleTaskCompletion(DAGScheduler.scala:2075)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.doOnReceive(DAGScheduler.scala:2688)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2633)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2622)

at org.apache.spark.util.EventLoop$$anon$1.run(EventLoop.scala:49)

at org.apache.spark.scheduler.DAGScheduler.runJob(DAGScheduler.scala:939)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2230)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2251)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2270)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2295)

at org.apache.spark.rdd.RDD.$anonfun$collect$1(RDD.scala:1030)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:112)

at org.apache.spark.rdd.RDD.withScope(RDD.scala:414)

at org.apache.spark.rdd.RDD.collect(RDD.scala:1029)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMBase.executePartitionTasks(LightGBMBase.scala:595)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMBase.executePartitionTasks$(LightGBMBase.scala:583)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMRegressor.executePartitionTasks(LightGBMRegressor.scala:39)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMBase.executeTraining(LightGBMBase.scala:573)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMBase.executeTraining$(LightGBMBase.scala:545)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMRegressor.executeTraining(LightGBMRegressor.scala:39)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMBase.trainOneDataBatch(LightGBMBase.scala:435)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMBase.trainOneDataBatch$(LightGBMBase.scala:392)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMRegressor.trainOneDataBatch(LightGBMRegressor.scala:39)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMBase.$anonfun$train$2(LightGBMBase.scala:61)

at com.microsoft.azure.synapse.ml.logging.BasicLogging.logVerb(BasicLogging.scala:62)

at com.microsoft.azure.synapse.ml.logging.BasicLogging.logVerb$(BasicLogging.scala:59)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMRegressor.logVerb(LightGBMRegressor.scala:39)

at com.microsoft.azure.synapse.ml.logging.BasicLogging.logTrain(BasicLogging.scala:48)

at com.microsoft.azure.synapse.ml.logging.BasicLogging.logTrain$(BasicLogging.scala:47)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMRegressor.logTrain(LightGBMRegressor.scala:39)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMBase.train(LightGBMBase.scala:42)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMBase.train$(LightGBMBase.scala:35)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMRegressor.train(LightGBMRegressor.scala:39)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMRegressor.train(LightGBMRegressor.scala:39)

at org.apache.spark.ml.Predictor.fit(Predictor.scala:151)

at org.apache.spark.ml.Predictor.fit(Predictor.scala:115)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:244)

at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:357)

at py4j.Gateway.invoke(Gateway.java:282)

at py4j.commands.AbstractCommand.invokeMethod(AbstractCommand.java:132)

at py4j.commands.CallCommand.execute(CallCommand.java:79)

at py4j.ClientServerConnection.waitForCommands(ClientServerConnection.java:182)

at py4j.ClientServerConnection.run(ClientServerConnection.java:106)

at java.lang.Thread.run(Thread.java:748)

We have run SynapseML training applications on YARN more than 30 times. The two exceptions above occur very frequently (in more than 90% of runs, though not 100%). Could anyone please help us avoid them? Thanks! :)
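The "Binding port 12792 failed" message in Exception Two typically means that a task could not bind its listening port on the executor host, for example because another process already holds it. The following is a minimal diagnostic sketch using only the Python standard library; the helper name `can_bind` is hypothetical and is not part of SynapseML or LightGBM. It only reports whether a port is free at the moment it runs on the node where it runs, so it cannot prove what happened during the failed job.

```python
import socket


def can_bind(port: int, host: str = "0.0.0.0") -> bool:
    """Return True if a TCP socket can currently bind to (host, port)."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError:
            # Typically "address already in use" or a permission problem.
            return False
        return True


if __name__ == "__main__":
    # 12792 is the port named in the Exception Two log above.
    print(f"port 12792 bindable: {can_bind(12792)}")
```

Running this on an executor host while no training job is active can show whether an unrelated process is occupying ports in the range that the tasks try to use.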

Code to reproduce issue

Exception One:

LightGBMRegressor(maxDepth=3, numIterations=100, labelCol=Y, featuresCol="features", numTasks=100)

Exception Two:

LightGBMRegressor(maxDepth=3, numIterations=100, labelCol=Y, featuresCol="features", numTasks=100).setUseBarrierExecutionMode(True)

Other info / logs

No response

What component(s) does this bug affect?

- [ ] area/cognitive: Cognitive project
- [ ] area/core: Core project
- [ ] area/deep-learning: DeepLearning project
- [ ] area/lightgbm: Lightgbm project
- [ ] area/opencv: Opencv project
- [ ] area/vw: VW project
- [ ] area/website: Website
- [ ] area/build: Project build system
- [ ] area/notebooks: Samples under notebooks folder
- [ ] area/docker: Docker usage
- [ ] area/models: models related issue

What language(s) does this bug affect?

- [ ] language/scala: Scala source code
- [ ] language/python: Pyspark APIs
- [ ] language/r: R APIs
- [ ] language/csharp: .NET APIs
- [ ] language/new: Proposals for new client languages

What integration(s) does this bug affect?

- [ ] integrations/synapse: Azure Synapse integrations
- [ ] integrations/azureml: Azure ML integrations
- [ ] integrations/databricks: Databricks integrations

Hey @xglv1985 :wave:! Thank you so much for reporting the issue/feature request :rotating_light:. Someone from SynapseML Team will be looking to triage this issue soon. We appreciate your patience.

Several things to try:

- Your Spark config uses "com.microsoft.azure:synapseml_2.12:0.10.0-6-4868e8bf-SNAPSHOT", which is a 0.10.0 build. Can you try 0.10.2? com.microsoft.azure:synapseml_2.12:0.10.2

- Try using useSingleDatasetMode=true.

- Try using fewer partitions. You are using numTasks=100. The fewer tasks you have, the less likely you are to hit network errors (LightGBM sets up one "machine" per task, and each communicates over the network through its own endpoint).

- Use an even newer version, "com.microsoft.azure:synapseml_2.12:0.10.2-82-fbbb4336-SNAPSHOT", which supports a memory-efficient "streaming" mode (executionMode=streaming). This should also let you reduce numTasks, since more data can be processed on a single executor. It isn't officially released yet (soon), but you can try it.
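The estimator-side suggestions above could be combined roughly as follows. This is a sketch, not a verified fix: the helper names `build_lightgbm_params` and `make_regressor`, the label column name "label", and the task count of 20 are assumptions for illustration, and it assumes the installed SynapseML build accepts these keyword arguments (executionMode is only expected in the newer builds mentioned above).

```python
from typing import Any, Dict


def build_lightgbm_params(num_tasks: int, streaming: bool = False) -> Dict[str, Any]:
    """Collect the estimator settings discussed in this thread into one dict."""
    params: Dict[str, Any] = {
        "maxDepth": 3,
        "numIterations": 100,
        "labelCol": "label",          # hypothetical column name
        "featuresCol": "features",
        "numTasks": num_tasks,        # fewer tasks means fewer network endpoints
        "useSingleDatasetMode": True,
    }
    if streaming:
        # Assumption: only the newer builds mentioned above accept this parameter.
        params["executionMode"] = "streaming"
    return params


def make_regressor(params: Dict[str, Any]):
    """Build the estimator; imported lazily so the helper above runs without Spark."""
    from synapse.ml.lightgbm import LightGBMRegressor

    return LightGBMRegressor(**params)


if __name__ == "__main__":
    # 20 is an arbitrary illustrative value, lower than the numTasks=100 in the report.
    print(build_lightgbm_params(num_tasks=20))
```

Keeping the settings in a plain dict makes it easy to log exactly which combination was used for each of the repeated runs, which helps when the failure rate is high but not 100%.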

Thank you for your suggestions, but when we used "com.microsoft.azure:synapseml_2.12:0.10.2-82-fbbb4336-SNAPSHOT" version, we encountered an error. Have you ever updated the relevant version of GLIBC?

: java.lang.UnsatisfiedLinkError: /tmp/mml-natives6116134279440372765/lib_lightgbm.so: /opt/compiler/gcc-8.2/lib64/libm.so.6: version `GLIBC_2.27' not found (required by /tmp/mml-natives6116134279440372765/lib_lightgbm.so)

at java.lang.ClassLoader$NativeLibrary.load(Native Method)

at java.lang.ClassLoader.loadLibrary0(ClassLoader.java:1950)

at java.lang.ClassLoader.loadLibrary(ClassLoader.java:1832)

at java.lang.Runtime.load0(Runtime.java:811)

at java.lang.System.load(System.java:1088)

at com.microsoft.azure.synapse.ml.core.env.NativeLoader.loadLibraryByName(NativeLoader.java:66)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMUtils$.initializeNativeLibrary(LightGBMUtils.scala:33)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMBase.train(LightGBMBase.scala:36)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMBase.train$(LightGBMBase.scala:35)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMRegressor.train(LightGBMRegressor.scala:39)

at com.microsoft.azure.synapse.ml.lightgbm.LightGBMRegressor.train(LightGBMRegressor.scala:39)

at org.apache.spark.ml.Predictor.fit(Predictor.scala:151)

at org.apache.spark.ml.Predictor.fit(Predictor.scala:115)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:244)

at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:357)

at py4j.Gateway.invoke(Gateway.java:282)

at py4j.commands.AbstractCommand.invokeMethod(AbstractCommand.java:132)

at py4j.commands.CallCommand.execute(CallCommand.java:79)

at py4j.ClientServerConnection.waitForCommands(ClientServerConnection.java:182)

at py4j.ClientServerConnection.run(ClientServerConnection.java:106)

at java.lang.Thread.run(Thread.java:748)

I'm not sure what you mean. Are you using this in a custom YARN setting (as opposed to Databricks or Synapse)? glibc should be on your nodes and is not controlled by the SynapseML package. The glibc version that is required is determined by the LightGBM build, and SynapseML is just a wrapper around that. I'm not as familiar with Linux distros, so I could point you to someone else if that doesn't help.
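One way to see which glibc version a node provides, and whether it meets the GLIBC_2.27 requirement named in the error above, is sketched below with the Python standard library. The helper names `parse_version` and `glibc_satisfies` are hypothetical. Note that `platform.libc_ver()` reports the libc the Python interpreter is linked against, which may differ from the library the JVM actually resolves (the error above points at /opt/compiler/gcc-8.2/lib64/libm.so.6), so treat the result as a hint only.

```python
import platform
from typing import Tuple


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn a dotted version such as '2.27' into a comparable tuple (2, 27)."""
    return tuple(int(part) for part in text.split("."))


def glibc_satisfies(available: str, required: str = "2.27") -> bool:
    """Return True when the available glibc version is at least the required one."""
    return parse_version(available) >= parse_version(required)


if __name__ == "__main__":
    lib, version = platform.libc_ver()
    if lib == "glibc" and version:
        print(f"glibc {version}, satisfies 2.27: {glibc_satisfies(version)}")
    else:
        print("Could not determine a glibc version on this platform.")
```

Versions are compared as integer tuples rather than strings so that, for example, 2.9 is correctly treated as older than 2.27.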

We have released 0.11.2, which finally has the last of the planned streaming features.

I have the same error.

I have the same error.

Can you be more specific? And perhaps file a new issue since this is old. We are on version 0.11.2 now, and soon to be releasing 1.0.