SynapseML

How to see the number of iterations and loss changes when running a model in mmlspark lightgbm?

Is there a UI or log where I can see the number of iterations and the loss changes while a model is training in mmlspark lightgbm?

AB#1984522

1 round takes 1 minute?

@RainFung what is your dataset size and cluster configuration? 1 minute per iteration does seem like a lot. What are the parameters to lightgbm?

I increased the executor memory and that solved the slow iterations. How can I see the number of iterations and the loss changes, like in local mode?

@RainFung are you using pyspark or scala? In scala there are a ton of delegates you can override; you can even dynamically change the learning rate while training:

https://github.com/Azure/mmlspark/blob/master/lightgbm/src/main/scala/com/microsoft/ml/spark/lightgbm/LightGBMDelegate.scala

in pyspark that's a story that I need to implement.

"How can I detect the number of iterations and loss changes like in the local mode?"

Which functionality is this specifically, can you give more details? Could you point me to an example in python and I can try to mimic that in pyspark?

this is the delegate parameter:

https://github.com/Azure/mmlspark/blob/master/lightgbm/src/main/scala/com/microsoft/ml/spark/lightgbm/params/LightGBMParams.scala#L463

I think that LightGBMDelegate parameter could be wrapped in pyspark too...
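To make the idea concrete, here is a rough sketch of what a Python-side hook could look like. Everything in it is hypothetical: `MetricRecorder`, `after_train_iteration`, and the `valid_eval_results` dict are illustrative names, not an existing mmlspark or pyspark API, and the training loop is simulated with made-up numbers.

```python
class MetricRecorder:
    """Hypothetical per-iteration hook; NOT part of mmlspark or pyspark."""

    def __init__(self, metric, patience):
        self.metric = metric          # name of the validation metric to track
        self.patience = patience      # rounds without improvement before stopping
        self.history = []             # (iteration, value) pairs, in order
        self.best_iteration = None
        self.best_value = None

    def after_train_iteration(self, iteration, valid_eval_results):
        """Record the metric; return True when training should stop."""
        value = valid_eval_results[self.metric]
        self.history.append((iteration, value))
        # Higher is assumed to be better (true for auc, average_precision).
        if self.best_value is None or value > self.best_value:
            self.best_value = value
            self.best_iteration = iteration
        return iteration - self.best_iteration >= self.patience


# Simulated training loop with made-up validation scores.
recorder = MetricRecorder(metric="auc", patience=2)
fake_scores = [0.70, 0.75, 0.74, 0.73, 0.72]
for i, score in enumerate(fake_scores, start=1):
    if recorder.after_train_iteration(i, {"auc": score}):
        break
```

With a hook like this, the per-iteration history and the best iteration would be available on the driver after training, without recomputing anything.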

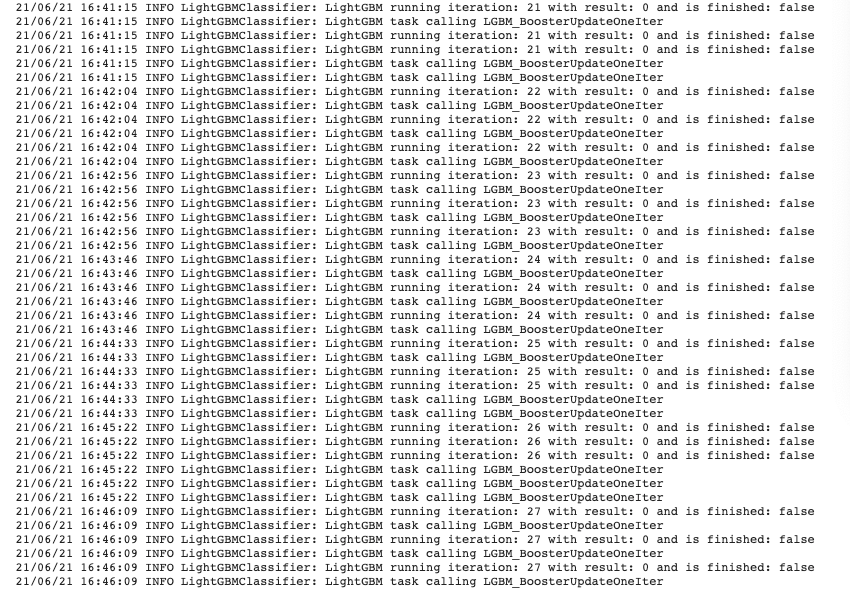

I want to see logs like this in pyspark

[LightGBM] [Warning] min_data_in_leaf is set=30, min_child_samples=20 will be ignored. Current value: min_data_in_leaf=30

[LightGBM] [Warning] Accuracy may be bad since you didn't explicitly set num_leaves OR 2^max_depth > num_leaves. (num_leaves=31).

Training until validation scores don't improve for 200 rounds

[500] train's auc: 0.786176 valid's auc: 0.785457

[1000] train's auc: 0.78646 valid's auc: 0.785479

Early stopping, best iteration is:

[858] train's auc: 0.786398 valid's auc: 0.785487
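If lines in this format do end up in a log somewhere, they are easy to turn into numbers. A minimal sketch, assuming the line layout shown above (`[iteration] dataset's metric: value ...`); `parse_eval_line` and `parse_log` are helper names made up for this example, not part of LightGBM or mmlspark:

```python
import re

# "[500] ..." at the start of an evaluation line; "[LightGBM] ..." does not match.
_ITER_RE = re.compile(r"^\[(\d+)\]\s+(.*)$")
# "train's auc: 0.786176" style pairs.
_METRIC_RE = re.compile(
    r"(\w+)'s\s+([\w@.\-]+):\s*([-+]?\d+(?:\.\d+)?(?:[eE][-+]?\d+)?)"
)


def parse_eval_line(line):
    """Return (iteration, {(dataset, metric): value}) or None for other lines."""
    match = _ITER_RE.match(line.strip())
    if match is None:
        return None
    metrics = {
        (dataset, name): float(value)
        for dataset, name, value in _METRIC_RE.findall(match.group(2))
    }
    if not metrics:
        return None
    return int(match.group(1)), metrics


def parse_log(text):
    """Parse every evaluation line in a block of log text, in order."""
    parsed = (parse_eval_line(line) for line in text.splitlines())
    return [record for record in parsed if record is not None]


sample = """Training until validation scores don't improve for 200 rounds
[500] train's auc: 0.786176 valid's auc: 0.785457
[1000] train's auc: 0.78646 valid's auc: 0.785479
Early stopping, best iteration is:
[858] train's auc: 0.786398 valid's auc: 0.785487"""
records = parse_log(sample)
```

Note that the last record is the "best iteration" summary line, so the records are in log order rather than iteration order.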

@imatiach-msft 👋

"in pyspark that's a story that I need to implement. I think that LightGBMDelegate parameter could be wrapped in pyspark too..."

I think it would be very useful. For instance, in my particular scenario I am using hyperopt to tune booster parameters with average_precision as the metric, using CV. From the code I see that in Scala I can override afterTrainIteration and gather metrics from validEvalResults, then pass them to hyperopt fmin / fmax.

But the same delegate is not available to pyspark users, so what I actually have to do is:

- use LightGBM's internal average_precision for early stopping
- then gather predictions for the validation set(s) and calculate average precision once again on my own in the hyperopt objective

It works, but for sure it is not optimal and it would be so much easier (and safer) just to use LightGBM's output.
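For reference, the "calculate it again myself" step looks roughly like the sketch below. It is a plain-Python stand-in, assuming binary 0/1 labels and predicted scores already collected to the driver; `average_precision` is a helper written for this example, and tied scores are ordered arbitrarily, so it can differ from library implementations on tied data.

```python
def average_precision(labels, scores):
    """Mean of precision@k over the ranks k at which a positive appears."""
    # Rank examples from highest to lowest predicted score.
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    hits = 0
    precision_sum = 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            hits += 1
            precision_sum += hits / rank  # precision at this rank
    if hits == 0:
        raise ValueError("average precision is undefined without positives")
    return precision_sum / hits


# Toy validation predictions: positives land at ranks 1 and 3.
example_ap = average_precision([1, 0, 1, 0], [0.9, 0.8, 0.7, 0.1])
```

This is exactly the duplicated work: the booster has already evaluated the same metric on the same validation data during early stopping.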

That said, I wonder whether there are plans to make LightGBMDelegate available for PySpark anytime soon?

Thanks!

I am resurrecting this issue because this is also a pain point of my workflow. Similarly to @denmoroz, I have to recompute the metric manually after early stopping in order to potentially prune a given trial. Instead of implementing LightGBMDelegate, which is apparently not in your plans, could we get an attribute getter that would return the evaluation metric from the LGBM model directly? That would save significant computation time for any form of external parameter tuning.