ValueError: unsupported pickle protocol: 5 while ensemble=True

Hi,

I am trying to use ensemble method while training a dataset as below-

The training ran fine, but at the end, after the following log line: [flaml.automl: 08-12 12:34:01] {3407} WARNING - Using passthrough=False for ensemble because the data contain categorical features.

I got the output below:

And then the following error occurred:

Without ensemble, I was able to complete the training.

My dataset info is as below-

Please help me to resolve this issue.

The error message is in this screenshot: https://user-images.githubusercontent.com/97145738/184356110-52f5f468-2d53-4e7d-bfea-c1943e18dc86.png It suggests that you don't have enough RAM to build the ensemble. You can try specifying a simple final_estimator, e.g.,

    automl.fit(
        X_train, y_train, task="classification",
        # "ensemble" is passed as a keyword argument whose value is a dict
        ensemble={
            "final_estimator": LogisticRegression(),
            "passthrough": False,
        },
    )

@sonichi is this syntax correct?

And what should I import to resolve this error with the above syntax?

I am able to proceed after adding from sklearn.linear_model import LogisticRegression, but since my problem is a regression I should use LinearRegression() as the final estimator.
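For a regression task, the same suggestion might look like the sketch below. It only reuses the two `ensemble` keys from the reply above and swaps in `LinearRegression`. The helper names `regression_ensemble_settings` and `fit_with_linear_stacker` are made up for illustration, and the default `time_budget` of 3600 is just the value mentioned later in this thread.

```python
def regression_ensemble_settings(final_estimator, time_budget=3600):
    """Collect keyword arguments for AutoML.fit() on a regression task.

    The "ensemble" keys mirror the suggestion above; nothing else is assumed.
    """
    return {
        "task": "regression",
        "time_budget": time_budget,  # seconds
        "ensemble": {
            "final_estimator": final_estimator,
            "passthrough": False,
        },
    }


def fit_with_linear_stacker(X_train, y_train, time_budget=3600):
    """Hypothetical wrapper: needs flaml and scikit-learn installed."""
    # Imports are kept local so the settings helper works without them.
    from flaml import AutoML
    from sklearn.linear_model import LinearRegression

    automl = AutoML()
    settings = regression_ensemble_settings(LinearRegression(), time_budget)
    automl.fit(X_train, y_train, **settings)
    return automl
```

Calling `fit_with_linear_stacker(X_train, y_train)` with your own training data would then run the search and build the stacked ensemble with a linear final estimator.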

Training now completes, but a RAM error occurs, as below:

It seems ensembling is not possible with FLAML.

How large is the dataset and how large is the free RAM? Ensemble requires more RAM than training each single model. One thing you can try is to use a smaller time budget for tuning such that small models will be used for ensembling.

The dataset is not very big, as you can see here:

With "time_budget": 10000*10, I was able to continue the training on Kaggle. It was stopped once its execution time crossed the 12-hour limit.

I will continue my experiment with Colab Pro+ which has Tesla P100 16 GB GPU and 54 GiB RAM. I will inform you once the investigation is done.

Thanks. Have you tried using a smaller time budget to test whether the ensemble works?

Another question: did you feed the raw data into AutoML, or did you apply one-hot encoding first? One-hot encoding could blow up the size of the dataset and should be avoided.
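To get a feel for how much one-hot encoding can inflate a dataset, here is a rough back-of-the-envelope sketch. It assumes dense 8-byte values (a sparse encoding would be much smaller), and the row count and category cardinalities are invented for illustration, not taken from this thread.

```python
def one_hot_width(numeric_columns, category_cardinalities):
    """Column count after one-hot encoding every categorical column."""
    return numeric_columns + sum(category_cardinalities)


def dense_size_gib(n_rows, n_columns, bytes_per_value=8):
    """Approximate size of a dense table in GiB."""
    return n_rows * n_columns * bytes_per_value / 2**30


# Hypothetical dataset: 10 numeric columns plus 3 categorical columns.
n_rows = 1_000_000
cardinalities = [50, 200, 1000]

raw_columns = 10 + len(cardinalities)
encoded_columns = one_hot_width(10, cardinalities)

print(f"raw:     {raw_columns} columns, {dense_size_gib(n_rows, raw_columns):.2f} GiB")
print(f"one-hot: {encoded_columns} columns, {dense_size_gib(n_rows, encoded_columns):.2f} GiB")
```

Under these assumptions the encoded table has roughly a hundred times as many columns as the raw one, which is the kind of growth the question above is checking for.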

Yes, I started the experiment with 3600, but then ensembling was covering 96%, so I increased the time budget.

And I am feeding raw data into FLAML.

Do you mean the ensemble works in 96% of cases, or that it uses 96% of the RAM, when using 3600?

I mean that with a lower time budget the search reached 96%, and the log message said to increase the time budget so that the search could reach 100%.

Did the ensemble succeed with the lower time budget?

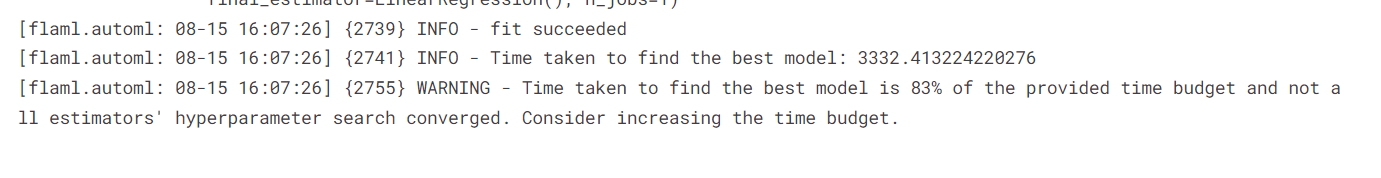

Yes, training completed with the message below:

It seems the hyperparameter search did not converge for all estimators, so the score of my prediction model is worse than that of the single best model found by FLAML. With the ensemble the score is 83.4, and with the single best model it was 83.55.

Please suggest some steps to improve the result using ensembling. My current settings are as below-

If you have enough RAM now, try removing the key "final_estimator" from "ensemble".

I have started the training with the changes below in Colab Pro+, which has 54 GiB RAM:

Can you please tell me whether there is a setting to save the complete logs to a file? Currently not all logs are being saved.

Use log_type="all". https://microsoft.github.io/FLAML/docs/Use-Cases/Task-Oriented-AutoML#log-the-trials
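As a small sketch of that suggestion: `log_type` can be passed to `fit()` together with `log_file_name`. The helper name `with_full_logging` and the file name `flaml_run.log` are placeholders chosen for this example.

```python
def with_full_logging(settings, log_path="flaml_run.log"):
    """Return a copy of the fit() settings that logs every trial to a file."""
    merged = dict(settings)  # leave the caller's dict untouched
    merged["log_file_name"] = log_path
    merged["log_type"] = "all"  # the default only records improving trials
    return merged


settings = with_full_logging({"task": "regression", "time_budget": 3600})
# Then, with your own AutoML instance and data:
# automl.fit(X_train, y_train, **settings)
```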

I ran an experiment with the ensemble line commented out, as below:

And the score dropped to 83.3.

@sonichi as per your suggestion with the below changes, training is completed in 3467.75 seconds-

And the score is 83.54 which is less than without an ensemble (83.55).

Can you please suggest any other steps to improve the ensembling?

@sonichi I tried adding more estimators with the ensemble as below-

And my score improved to 83.968 from 83.55. Is there anything I can try to improve the score?

What about using all the estimators, by removing the estimator_list argument?

After removing the estimator_list, the score dropped to 83.92 from 83.96.