DeepSpeedExamples

Trained BLOOM model saved differently compared to OPT model

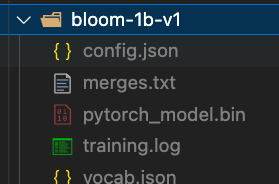

Hi, I followed the script to train the BLOOM model on my own dataset. However, I found that it saves the model differently from other models such as OPT. The screenshot above shows the saved files.

When I load the model and tokenizer using the following script:

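```python
from transformers import AutoModelForCausalLM, AutoTokenizer

# model_path is the DeepSpeed-Chat output directory (e.g. .../output/actor-models/bloom-1b-v1)
tokenizer = AutoTokenizer.from_pretrained(model_path, fast_tokenizer=True)

model = AutoModelForCausalLM.from_pretrained(
    model_path,
)
```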

I always get this error:

```
Exception has occurred: OSError
Can't load tokenizer for 'xxx/DeepSpeed/DeepSpeedExamples/applications/DeepSpeed-Chat/output/actor-models/bloom-1b-v1'. If you were trying to load it from 'https://huggingface.co/models', make sure you don't have a local directory with the same name. Otherwise, make sure 'xxx/DeepSpeed/DeepSpeedExamples/applications/DeepSpeed-Chat/output/actor-models/bloom-1b-v1' is the correct path to a directory containing all relevant files for a BloomTokenizerFast tokenizer.
```
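One plausible cause, not confirmed in this thread: BLOOM only ships a fast tokenizer (`BloomTokenizerFast` has no slow Python counterpart), so `from_pretrained` needs a `tokenizer.json` in the directory, whereas OPT's GPT-2-style tokenizer can also be rebuilt from `vocab.json` and `merges.txt`. If the save step only wrote the vocabulary files, BLOOM would fail to load while OPT would not. The sketch below uses a hypothetical helper, `missing_tokenizer_files` (standard library only), to check an output directory for the file a BLOOM tokenizer is assumed to need.

```python
from pathlib import Path

# Assumption: BloomTokenizerFast can only be built from a serialized fast
# tokenizer, so tokenizer.json must be present in the model directory.
BLOOM_TOKENIZER_FILES = ("tokenizer.json",)


def missing_tokenizer_files(model_dir, required=BLOOM_TOKENIZER_FILES):
    """Return the names in `required` that are not regular files in `model_dir`."""
    model_dir = Path(model_dir)
    return [name for name in required if not (model_dir / name).is_file()]
```

For example, `missing_tokenizer_files("output/actor-models/bloom-1b-v1")` returns `["tokenizer.json"]` if that file is absent. If so, one workaround to try (an assumption, untested here) is to load the tokenizer from the base BLOOM checkpoint the model was fine-tuned from and write it into the output directory with `tokenizer.save_pretrained(model_path)`, which saves `tokenizer.json` for fast tokenizers.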