New training: Alpaca-lora-zero3 on 2080Ti

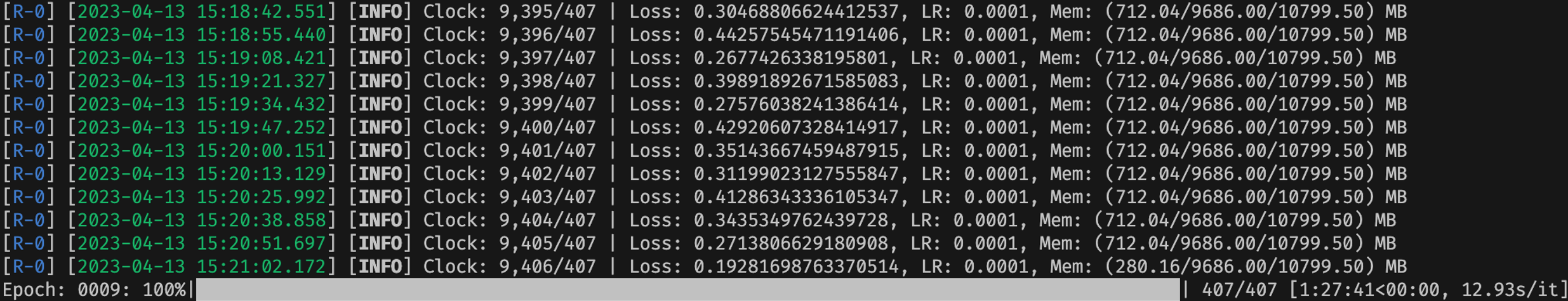

We added a new example for fine-tuning LLaMA on 2080Ti-class GPUs. In my environment, with eight 2080Ti GPUs, LLaMA-7B can be fine-tuned on the alpaca-52k dataset at a speed of 1.5 hours per epoch.

@microsoft-github-policy-service agree

Hi @bigeagle, we want to confirm one thing before reviewing your PR: does your example use the DeepSpeed-Chat framework, or is it more of a standalone example that only uses some DeepSpeed features? If it is the latter, I'm afraid it would be hard for us to merge it: this repo is mainly intended for users to use and learn the DeepSpeed-Chat framework, and an example that doesn't actually use DeepSpeed-Chat would confuse other users. Our roadmap mentions LLaMA support: https://github.com/microsoft/DeepSpeedExamples/tree/master/applications/DeepSpeed-Chat#-deepspeed-chats-roadmap-. It would be great if you could adapt your example to the DeepSpeed-Chat framework once LLaMA support is available. Thank you.

Hi @conglongli, this PR does not use the deepspeed-chat framework. I think it's a bit confusing that this repo is named deepspeed-examples rather than deepspeed-chat, especially for those who are interested in deepspeed itself (and not in chat).

Anyway, if ds-chat is what this repo will focus on, I think it's appropriate to put this PR on hold. I will check whether I can contribute to ds-chat development directly.

@bigeagle Yes, it's true that overall this DeepSpeedExamples repo hosts many different examples of how to use DeepSpeed in general. But for RLHF training (no matter whether it is step 1 only or all 3 steps), we would like to focus on showcasing how to do so via the DeepSpeed-Chat framework. Thank you for your understanding, and we very much welcome your contributions in the future.

@bigeagle I found the training pipeline pretty intuitive. One naive question: did you fine-tune the LLaMA model and find similar performance to the open-source Alpaca model? Also, I'm assuming that the LoRA weights are written out by your training. How would you then load the fine-tuned weights on top of the LLaMA model? Do you just pass both the model and the weights to peft?

> How would you then load the finetuned weights on top of the llama model?

Step 1. Load the original LLaMA model and its original weights.

Step 2. Use peft to add the LoRA adapter and load the LoRA weights.
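A minimal sketch of these two steps, assuming the Hugging Face `transformers` and `peft` packages and an adapter directory saved in peft's own format (for example via `save_pretrained`). The function name and the paths are hypothetical and not taken from this PR; a checkpoint saved in another layout would need converting first.

```python
def load_lora_model(base_model_path: str, lora_weights_path: str):
    """Load a base causal LM, then attach LoRA adapter weights with peft.

    Both arguments are hypothetical placeholders: a directory holding the
    original LLaMA weights in Hugging Face format, and a directory holding
    an adapter saved in peft's format.
    """
    # Imported lazily so this helper can be defined without the heavy
    # dependencies being loaded up front.
    from transformers import AutoModelForCausalLM
    from peft import PeftModel

    # Step 1: build the original model and load the original weights.
    base_model = AutoModelForCausalLM.from_pretrained(base_model_path)

    # Step 2: wrap the base model with the LoRA adapter and load its weights.
    model = PeftModel.from_pretrained(base_model, lora_weights_path)

    # Switch to inference mode (disables dropout, including LoRA dropout).
    model.eval()
    return model


# Example call (paths are placeholders):
# model = load_lora_model("path/to/llama-7b", "path/to/lora-adapter")
```

If a single set of plain weights is preferred for inference, peft's `merge_and_unload()` can fold the LoRA update into the base weights after loading; whether that fits depends on how the adapter was trained and saved.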

Thanks. Also, is there a reason you decided to create your own tokenizer class rather than use the Hugging Face tokenizer class for LLaMA? I do see that the Hugging Face tokenizer class doesn't have eos_token_id or pad_token_id set. Was that the reason?