[CPU] Support Intel CPU inference

Summary:

This PR adds Intel CPU support to DeepSpeed by extending the DeepSpeedAccelerator interface. It allows users to run LLM inference on Intel CPUs with Auto Tensor Parallelism (AutoTP) or kernel injection (kernel injection is currently experimental and only supports BLOOM). This PR comes with the following features:

- CPUAccelerator

- Low-latency communication backend CCLBackend that calls the oneCCL collective communication library directly instead of going through torch.distributed.

- Kernel injection fallback path to aid development of a DeepSpeed accelerator, allowing the kernel injection path to function before the kernels are fully developed.

- (Merged) ~~Reduce peak memory usage during the AutoTP model loading stage. (Also in separate PR https://github.com/microsoft/DeepSpeed/pull/3102)~~

- (Merged) ~~BF16 inference datatype support.~~

- (Merged) ~~LLAMA auto tensor parallel. (Also in PR https://github.com/microsoft/DeepSpeed/pull/3170)~~

- (Merged) ~~Efficient distributed inference on a host with multiple CPU NUMA clusters, including multiple CPU sockets or sub-NUMA clusters (SNC). (Also in separate PR https://github.com/microsoft/DeepSpeed/pull/2881)~~

- (Merged) ~~BLOOM AutoTP and/or tensor parallelism through user specified policy. (Also in PR https://github.com/microsoft/DeepSpeed/pull/3035)~~

Note: this PR keeps all the pieces together for testing. The other PRs mentioned here are meant to be reviewed separately to allow small steps of work.

Major components:

- core binding support -- changes under deepspeed/launcher

- CCLBackend -- changes under deepspeed/com

- CPUAccelerator -- files from the following places:

  - accelerator/cpu_accelerator.py

  - op_builder/cpu/

  - csrc/cpu/

- Accelerator selection logic -- accelerator/real_accelerator.py

- Kernel injection fallback path -- changes from deepspeed/ops/transformer/inference/op_binding/

- bfloat16 inference support -- changes scattered around

CPU Inference example

This section is work in progress

@tjruwase we plan to add a new workflow to validate installation on CPU and run some inference-related tests. If there is anything we should know before adding the new workflow, just let us know. Thanks!

The CPU workflow requires a machine with AVX2 or above. Are there any self-hosted keywords I can use to get a machine with AVX2 or above?

We are discussing this internally, and will respond asap. In the meantime, you can try https://github.com/microsoft/DeepSpeed/blob/master/.github/workflows/nv-torch-latest-cpu.yml

Thanks @delock, this sounds great. Will GitHub-provided runner(s) work for this? If so, please extend the existing nv-torch-latest-cpu.yml workflow that @tjruwase referenced above.

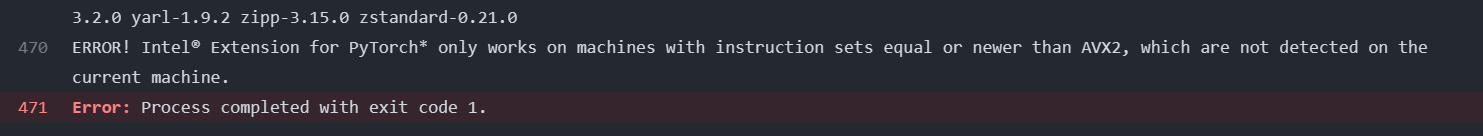

Thanks @tjruwase @jeffra! We did some investigation and found that at least one instance used to run the workflow comes with the AVX2 instruction set, yet Intel Extension for PyTorch complains about missing AVX2 support. https://github.com/microsoft/DeepSpeed/actions/runs/4498976192/jobs/7916249285?pr=3041#step:6:1115

I talked with an engineer who worked on PyTorch; he has some ideas about that behavior and will investigate early next week. Once we have a conclusion, we will give you an update.

Hi @tjruwase, we have investigated this issue and found that the root cause is that some old hypervisors do not emulate the XCR register used by Intel Extension for PyTorch, which checks XSAVE status when detecting AVX2. We plan to remove this detection in the next release. Until then, we will use a temporary Intel Extension for PyTorch wheel to build the CPU workflow.

To summarize, the issue is not that the machine lacks AVX2 support. I think we can build the workflow using the default ubuntu-20.04 instance for GitHub and see whether we always get an instance with AVX2 or above.
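
For readers unfamiliar with the XCR dependency, here is a minimal sketch of the kind of check involved. It is written from the documented x86 rules (AVX state is enabled by the OS when XCR0 bits 1 and 2 are set, and CPUID reports OSXSAVE), not from the Intel Extension for PyTorch source. The function name and the `trust_xcr0` switch are hypothetical and only illustrate how a hypervisor that returns a wrong XCR0 value leads to a false "no AVX2" result.

```python
# XCR0 bits per the x86 architecture: bit 1 = SSE (XMM) state, bit 2 = AVX (YMM) state.
XCR0_SSE = 1 << 1
XCR0_AVX = 1 << 2


def avx2_usable(cpuid_avx2: bool, cpuid_osxsave: bool, xcr0: int,
                trust_xcr0: bool = True) -> bool:
    """Decide whether AVX2 is usable from CPUID bits and the XCR0 value.

    trust_xcr0 is a hypothetical switch: when False, the XCR0/OSXSAVE check
    is skipped, mimicking a workaround that does not rely on XCR.
    """
    if not cpuid_avx2:
        return False
    if not trust_xcr0:
        return True
    if not cpuid_osxsave:
        return False
    needed = XCR0_SSE | XCR0_AVX
    return (xcr0 & needed) == needed


# Typical machine: XCR0 = 0b111 (x87, SSE and AVX state enabled).
print(avx2_usable(True, True, 0b111))
# Hypervisor that does not emulate XCR0 and reports 0: AVX2 is wrongly rejected.
print(avx2_usable(True, True, 0))
# Same machine with the XCR0 check skipped.
print(avx2_usable(True, True, 0, trust_xcr0=False))
```

Skipping the XCR0 check trades strictness for robustness on such hypervisors; whether that is appropriate depends on the environments being targeted.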

@delock, @mrwyattii,

I notice the cpu-inference CI is failing for the following reason:

Is it possible to have a filter to exclude this CI based on hardware requirements?

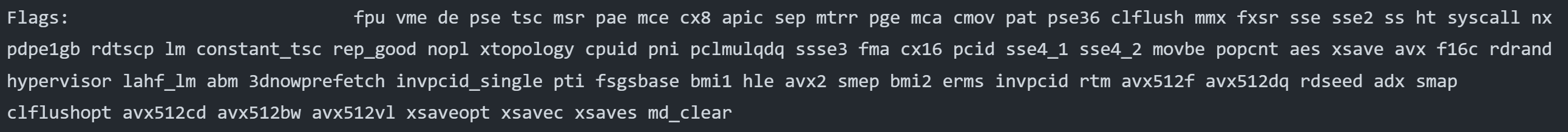

The detection part in this test shows this machine actually has AVX2 instruction set.

https://github.com/microsoft/DeepSpeed/actions/runs/4804701965/jobs/8550432875?pr=3041#step:4:48

However, the control register is not correctly emulated by the hypervisor, which causes this problem. Note that the XCR value is not correct here.

https://github.com/microsoft/DeepSpeed/actions/runs/4804701965/jobs/8550432875?pr=3041#step:4:74
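
The CPU-flag half of that detection can be reproduced with a short script. This is a sketch of one common approach on x86 Linux (reading the `flags` line of `/proc/cpuinfo`), not necessarily what the workflow step runs; the helper names are illustrative.

```python
from pathlib import Path


def cpu_flags(cpuinfo_text: str) -> set:
    """Collect the feature flags from the first 'flags' line of /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()


def has_avx2(cpuinfo_text: str) -> bool:
    """True when the kernel lists avx2 among the CPU feature flags."""
    return "avx2" in cpu_flags(cpuinfo_text)


if __name__ == "__main__":
    path = Path("/proc/cpuinfo")  # present on Linux only
    if path.exists():
        print("avx2 reported by kernel:", has_avx2(path.read_text()))
```

A machine can pass this check and still fail a library's own detection if that library additionally consults XCR0, which matches the behavior described above.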

Currently we are using a hotfix for Intel Extension for PyTorch to get around this issue by not relying on XCR, which should make Intel Extension for PyTorch more robust. However, it is not effective for this specific run. We will need some investigation to understand why.

The Intel Extension for PyTorch we used here is a hotfix for the AVX2 instruction set detection. In this particular run, it seems the public wheel was downloaded from PyPI. We will have a new public release containing the AVX2 instruction set detection fix; after that, a default installation of Intel Extension for PyTorch won't show this error.

Hi @tjruwase, we need your input about UT data formats. Currently on the PyTorch CPU backend we only support BF16 training and inference. This will break UTs that use the FP16 data format. We are thinking about ways to let UTs skip certain data formats based on backend capability. There are two options:

- Add a new interface to the abstract accelerator to declare accelerator capability (a combination of FP16/BF16 training/inference, and maybe int8/FP8 as well). UTs would then be able to make skip decisions based on the return value of this interface. Model code can also use this capability to decide which datatype to use for training or inference.

- Check and skip based on accelerator name. This will not require an accelerator interface change, but the implementation in UTs will not be general enough and may require more changes if other accelerators are involved.

Which one do you prefer? We plan to add UT skipping in a separate PR, after CPU support is merged. After that we will be able to run more unit tests for the CPU backend.

@delock, thanks for raising this question. I prefer option 1 of adding new interface to abstract accelerator.

For datatypes, I think we can add an interface that returns a list of supported torch data types.

def supported_dtypes(self) -> List[torch.dtype]

This interface will be future-proof, allowing new data types without an interface change. Client code can query the returned list for specific types.
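
A minimal sketch of how such an interface could be used by a unit test follows. To stay runnable without PyTorch, it uses a small `DType` enum as a stand-in for `torch.dtype`; the class names `SketchAccelerator` and `SketchCpuAccelerator`, the `should_skip` helper, and the inclusion of FP32 in the CPU list are all assumptions made for illustration, not the actual DeepSpeed classes.

```python
from abc import ABC, abstractmethod
from enum import Enum
from typing import List


class DType(Enum):
    """Stand-in for torch.dtype so the sketch runs without PyTorch installed."""
    FP32 = "float32"
    FP16 = "float16"
    BF16 = "bfloat16"


class SketchAccelerator(ABC):
    """Hypothetical abstract accelerator exposing the proposed query."""

    @abstractmethod
    def supported_dtypes(self) -> List[DType]:
        """Return every data type this accelerator can run."""


class SketchCpuAccelerator(SketchAccelerator):
    def supported_dtypes(self) -> List[DType]:
        # BF16 without FP16 follows the discussion; FP32 is an assumption.
        return [DType.FP32, DType.BF16]


def should_skip(accelerator: SketchAccelerator, dtype: DType) -> bool:
    """A unit test can call this and skip itself when the dtype is unsupported."""
    return dtype not in accelerator.supported_dtypes()


cpu = SketchCpuAccelerator()
print(should_skip(cpu, DType.FP16))
print(should_skip(cpu, DType.BF16))
```

Because the method returns a list, supporting a new data type only means returning one more entry; callers and the interface signature stay unchanged.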

For training and inference, I don't think we need an accelerator interface for these capabilities. To me, the difference between training and inference is whether or not reduction is supported by the communication library. Please let me know if I am missing anything.

@jeffra, @mrwyattii FYI

@tjruwase The difference between training and inference is mainly a matter of software priority. Sometimes a backend implements the forward OPs for a data type first, then implements the backward of the same OPs in the next release, so it would make sense to let a backend 'claim' support of a certain datatype for inference only or for training+inference.

I was not aware that backward ops are more challenging for an accelerator than forward ops. Is this referring to availability of high-performance kernel implementations? Can you please explain a bit more?

I think gradient reduction is another requirement for training, and that is provided by a communication library like CCL. Is that not the case?

From my understanding, the challenge is more in engineering effort. The effort includes implementing backward OPs in the primitive library, then integrating these primitives into PyTorch, then doing extensive testing to ensure an OOB training experience for that datatype. It involves engineering effort in development, integration, and testing, so implementing inference and training step by step helps focus efforts.

Each datatype does need gradient reduction for that datatype, and that needs support from the communication library. If the communication library is developed in house, coordinating datatype support with backward ops seems a reasonable choice. It may vary by case.

@delock, thanks for the discussion. Perhaps we should handle datatype support and OP support differently in the UTs.

- For datatype support, let us use the proposed abstract accelerator interface:

def supported_dtypes(self) -> List[torch.dtype]

- For training/inference, let us use accelerator name to detect support.

In other words, going back to your original option 1 and option 2 proposals, we shall use option 1 for datatypes and option 2 for training/inference. What do you think?
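
As a rough illustration of combining the two options in a test helper: the sketch below takes the dtype list from the accelerator (option 1) and looks up training support by accelerator name (option 2). The helper name `skip_reason`, the string dtype names, and the `INFERENCE_ONLY_ACCELERATORS` table with its placeholder entry are hypothetical.

```python
from typing import Optional, Sequence

# Hypothetical table of accelerator names whose training path is not ready yet.
INFERENCE_ONLY_ACCELERATORS = {"example_inference_only"}


def skip_reason(accel_name: str, supported: Sequence[str], dtype: str,
                training: bool) -> Optional[str]:
    """Return a skip message for a unit test, or None when the test should run."""
    # Option 1: data type support comes from the accelerator's own dtype list.
    if dtype not in supported:
        return f"{dtype} not supported on {accel_name}"
    # Option 2: training support is decided from the accelerator name.
    if training and accel_name in INFERENCE_ONLY_ACCELERATORS:
        return f"training not supported on {accel_name}"
    return None


print(skip_reason("cpu", ["float32", "bfloat16"], "float16", training=False))
```

In a pytest-based suite, the returned message could be passed to `pytest.skip` when it is not `None`.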

Sounds good. We will add the new interface and enable more UTs in a separate pull request.

This still seems to be failing in CI. Are you running the new release with the AVX2 instruction set detection fix yet? https://github.com/microsoft/DeepSpeed/actions/runs/4936158586/jobs/8823357797?pr=3041

I believe this is the remaining issue blocking the merge of this PR.

We will have a new release of Intel Extension for PyTorch with the AVX2 detection fix very soon, and will update the install link to fix the CI workflow for CPU. A reference to the issue: https://github.com/intel/intel-extension-for-pytorch/issues/326

Intel Extension for PyTorch has been updated to the latest version and the AVX2 detection issue has been resolved.
