DeepSpeed

[BUG] Cumbersome and misleading optimizer configuration

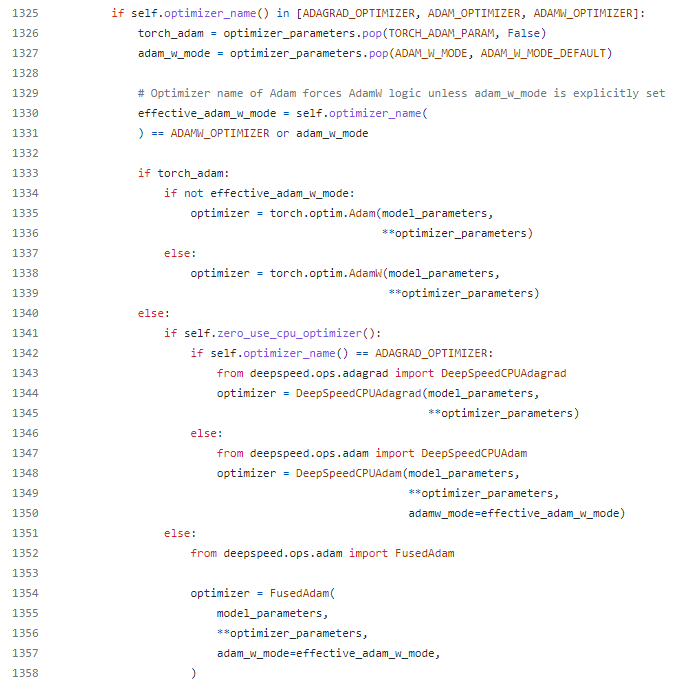

There is a discrepancy between the optimizer type (name) given in the DeepSpeedConfig JSON file and the optimizer actually chosen by the `_configure_basic_optimizer` function in `.../deepspeed/runtime/engine.py`:

https://github.com/microsoft/DeepSpeed/blob/master/deepspeed/runtime/engine.py

- When initializing the DeepSpeed engine, one can configure the basic optimizer either by supplying an optimizer object or by passing `model_parameters` and specifying the optimizer in the DeepSpeedConfig JSON file: https://www.deepspeed.ai/docs/config-json/
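For reference, here is a minimal sketch of the second route: an `"optimizer"` section in the config, written as a Python dict and serialized to JSON. The `"type"`/`"params"` layout follows the config-json docs linked above. The numeric values are placeholders, and `adam_w_mode` is assumed to be an accepted Adam parameter (it is the flag discussed in this issue).

```python
import json

# Minimal DeepSpeed-style config with an "optimizer" section.
# Values are illustrative placeholders, not recommendations.
ds_config = {
    "train_batch_size": 8,
    "optimizer": {
        "type": "Adam",
        "params": {
            "lr": 1e-4,
            "weight_decay": 0.01,
            # Assumed Adam-specific flag: False is meant to request plain Adam
            # rather than AdamW-style decoupled weight decay.
            "adam_w_mode": False,
        },
    },
}

config_text = json.dumps(ds_config, indent=2)
print(config_text)
```

The user only writes `"type": "Adam"` plus a few params here. Whether the engine then runs Adam, AdamW, or something else is exactly what this issue is about.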

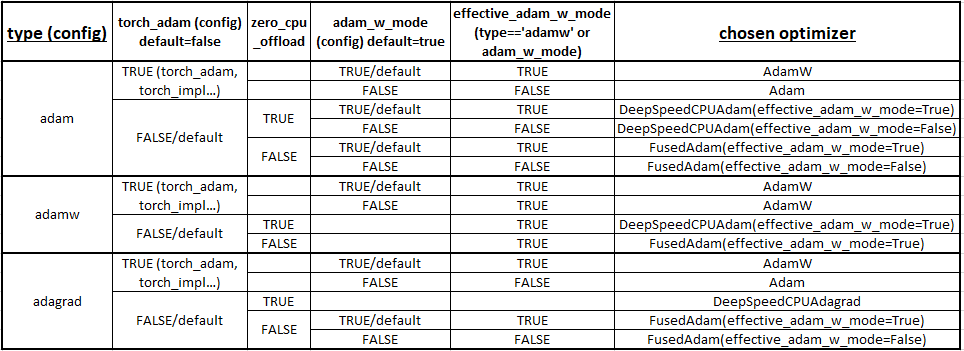

When the optimizer type is Adam, AdamW or Adagrad, we get the following branched decision tree for the optimizer, which contains many discrepancies between the requested optimizer type and the basic optimizer actually chosen:

It is written that:

> Optimizer name of Adam forces AdamW logic unless `adam_w_mode` is explicitly set
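To make the quoted rule concrete, here is a small model of it. It is a sketch, not DeepSpeed's code. It makes three assumptions: the default for a missing `adam_w_mode` behaves like `True`, an explicit `"AdamW"` type always wins over the flag, and only the Adam/AdamW names are modeled. The function name `effective_adam_w_mode` is hypothetical.

```python
from typing import Optional

# Assumption: value used when "adam_w_mode" is absent from the config.
# The quoted rule implies it acts like True for the "Adam" type.
ADAM_W_MODE_DEFAULT = True


def effective_adam_w_mode(optimizer_type: str, adam_w_mode: Optional[bool] = None) -> bool:
    """Hypothetical model of the quoted rule (Adam/AdamW only).

    Returns True when AdamW-style (decoupled) weight decay would be applied.
    Assumes an explicit "AdamW" type always overrides the flag.
    """
    name = optimizer_type.lower()
    if adam_w_mode is None:  # flag not given in the config
        adam_w_mode = ADAM_W_MODE_DEFAULT
    return name == "adamw" or (name == "adam" and adam_w_mode)


# Print every combination of type and flag (unset / True / False).
for opt_type in ("Adam", "AdamW"):
    for flag in (None, True, False):
        result = effective_adam_w_mode(opt_type, flag)
        print(f"{opt_type:6} adam_w_mode={flag!s:5} -> AdamW logic: {result}")
```

Under these assumptions, `"Adam"` with no flag already runs AdamW logic, and `"AdamW"` with `adam_w_mode: false` still runs AdamW. The type string alone therefore does not tell the user which algorithm runs.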

And yet there are too many variables feeding into this choice, which allows the discrepancies above, especially for the "adagrad" optimizer.

As can be seen from the table above, the optimizer configuration is cumbersome, non-trivial and confusing, and in some cases the chosen optimizer is not the one the user asked for. For example, a user asks for Adagrad but gets Adam or AdamW. Please check whether this configuration can be simplified, perhaps by reducing the number of arguments.
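One possible shape for such a simplification is sketched below. Everything in it is hypothetical and not an existing DeepSpeed API: `resolve_optimizer`, `OptimizerSpec`, the per-type parameter whitelist, and the backend names. The idea is that each `"type"` maps to exactly one algorithm, that mode flags such as `adam_w_mode` disappear, and that the implementation backend (torch, fused, CPU) is a separate choice that can never change the algorithm.

```python
from dataclasses import dataclass
from typing import Any, Dict, Set

# Hypothetical whitelist of "params" keys each optimizer type accepts.
# No mode flags: the type alone decides the algorithm.
ALLOWED_PARAMS: Dict[str, Set[str]] = {
    "adam": {"lr", "betas", "eps", "weight_decay"},
    "adamw": {"lr", "betas", "eps", "weight_decay"},
    "adagrad": {"lr", "eps", "weight_decay"},
}

# Hypothetical implementation backends; they never change the algorithm.
BACKENDS = {"torch", "fused", "cpu"}


@dataclass(frozen=True)
class OptimizerSpec:
    algorithm: str  # exactly what the user asked for
    backend: str    # which implementation runs it


def resolve_optimizer(opt_type: str, params: Dict[str, Any], backend: str = "fused") -> OptimizerSpec:
    """Map a config "type" to exactly one algorithm, rejecting ambiguous input."""
    name = opt_type.lower()
    if name not in ALLOWED_PARAMS:
        raise ValueError(f"unsupported optimizer type: {opt_type!r}")
    unknown = set(params) - ALLOWED_PARAMS[name]
    if unknown:
        raise ValueError(f"params {sorted(unknown)} are not valid for {opt_type!r}")
    if backend not in BACKENDS:
        raise ValueError(f"unknown backend: {backend!r}")
    return OptimizerSpec(algorithm=name, backend=backend)


print(resolve_optimizer("Adagrad", {"lr": 0.01}, backend="cpu"))
# OptimizerSpec(algorithm='adagrad', backend='cpu')

try:
    resolve_optimizer("Adagrad", {"lr": 0.01, "adam_w_mode": True})
except ValueError as err:
    print(err)
# params ['adam_w_mode'] are not valid for 'Adagrad'
```

With this design, asking for Adagrad can only ever yield Adagrad. A leftover Adam-only flag fails loudly instead of silently switching the algorithm.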