cli

cli copied to clipboard

cli copied to clipboard

State of the black box testing

Currently the black box testing can be found in:

https://github.com/microservices/oms/tree/df5ca63fa8633e0185a48a26f5cdca49b8080b25/tests/regression

As best as I can tell, these are split into a few parts:

- For all our non-event templates, run a simple

oms run oms runsome basic services (base64 and hashes)- For all Hub certified services that aren't internal

oms validate

I have a few concerns with the state in no particular order:

-

There's a bunch of commented code which confuses things (event tests, jwt) - can we remove this?

-

Because these tests only run on an

omsPR or commit and the large amount of dependencies, it's not unlikely that breaking changes may build up in the dependent artifacts that are hard to track down (did I break this, or did something else, and when?) - ideally we would have a repo independent solution (like concourse), but perhaps we could introduce a periodic build as a stopgap? -

These tests are really following many of the same paths, and don't seem to provide sufficient regression coverage for me. In an ideal world (I'm not saying we go this far), I should be able to rewrite the CLI leaning on the tests for confidence. That we didn't have this for Anees to rewrite the CLI was a bit of a failure that we should avoid in future.

In particular, we have a lot of tests that do simple oms run and oms validate, but there are many other interesting behaviours (just in the CLI) around validation options, building, listing, subscriptions, expected errors, etc that we don't seem to exercise.

As a gut feel, if a behaviour is important enough to include in the product, it's important enough to be sure that it continues to work. In particular, a common failure mode is that features that are less useful are considered less important to test because they are less well-trodden, but this really just results in obscure edge cases and investigations when problems do surface.

We could take inspiration from other systems that are building CLIs:

Thanks for reading and let me know your thoughts!

There's a bunch of commented code which confuses things (event tests, jwt) - can we remove this?

IIRC it was commented out because at the time OMS validated failed. It should not be removed, but fixed. It's probably just removing the comment.

Because these tests only run on an oms PR or commit and the large amount of dependencies, it's not unlikely that breaking changes may build up in the dependent artifacts that are hard to track down (did I break this, or did something else, and when?)

As you mentioned, we have to kinds of regression tests:

- validation only (we should be able to run this against all services on the hub theoretically)

oms run(we can only run this against a limited number of services). At the moment this is mostly limited to the "hello world" templates + a few static ones like e.g.base64.

Failures like the recent Clojure build dependency failure are annoying and ideally shouldn't happen. My take on this is to only include "stable" OMS packages into the build. We want to ensure that our official templates always work with OMS, so they are a good starting set. Though if a template breaks too often randomly (like e.g. Clojure), we should consider not maintaining it officially anymore.

Ideally we would have a repo independent solution (like concourse)

Well, ideally we implement sth. like oms test (i.e. https://github.com/microservices/oms/issues/120).

In other words: a package defines how it can be tested. Then we don't need to hard-code things here and can just run oms test on well-maintained packages. I still think that we should tests on EVERY oms PR, because that's the easiest way to avoid breakage. Before a-new-dawn the regression tests were working on master and it took quite some manual effort to get them working again.

FWIW on the D Language we have a project tester which runs on every PR (https://buildkite.com/dlang) and does the following things:

- build release distribution once

- parallel:

- git clone the ~ top 50 D packages

- run

dub test(it's similar to e.g.npm run test)

We have caught tons of regressions this way which is why I think continuing to test on every PR is very important. So I disagree on repo-independent solution (you only want to run the testsuite of all packages here), but I agree on the aspect of changing the infrastructure from a simple shell script to something more sophisticated.

These tests are really following many of the same paths, and don't seem to provide sufficient regression coverage for me. In an ideal world (I'm not saying we go this far), I should be able to rewrite the CLI leaning on the tests for confidence.

Agreed. The Hub SHOULD NOT accept invalid YAML, because then we could simply run the CLI against all packages.

we have a lot of tests that do simple oms run and oms validate, but there are many other interesting behaviours (just in the CLI) around validation options, building, listing, subscriptions, expected errors, etc that we don't seem to exercise.

Yes, at least master is doing a simple subscription event test for the events templates:

https://github.com/microservices/oms/blob/4ee2528a424990924c7f0893e557d90adef7ce2a/tests/regression/travis.sh#L20-L26

I don't know why it was removed/commented for 'a-new-dawn'.

Anyhow, oms run does an implicit regression test on building, so the most important things are covered. I agree with you that we should add more tests compared to master instead of removing them.

That we didn't have this for Anees to rewrite the CLI was a bit of a failure that we should avoid in future.

That's not entirely correct. Master already had working regression tests against some packages before the rewrite.

We could take inspiration from other systems that are building CLIs:

Master had CLI tests:

https://github.com/microservices/oms/blob/4ee2528a424990924c7f0893e557d90adef7ce2a/packages/cli/tests/src/cli/Cli.ts#L93-L106

I don't see anything wrong with that way.

Thanks Seb, agree with many things you said. A few notes:

We have caught tons of regressions this way which is why I think continuing to test on every PR is very important. So I disagree on repo-independent solution (you only want to run the testsuite of all packages here)

I think you might be misunderstanding me here. Actually I want to rely on repo-independent solution to run the tests more often. I'd prefer a pipeline that triggers on any change to oms or the dependent projects to catch issues faster and to have them discoverable in one central location.

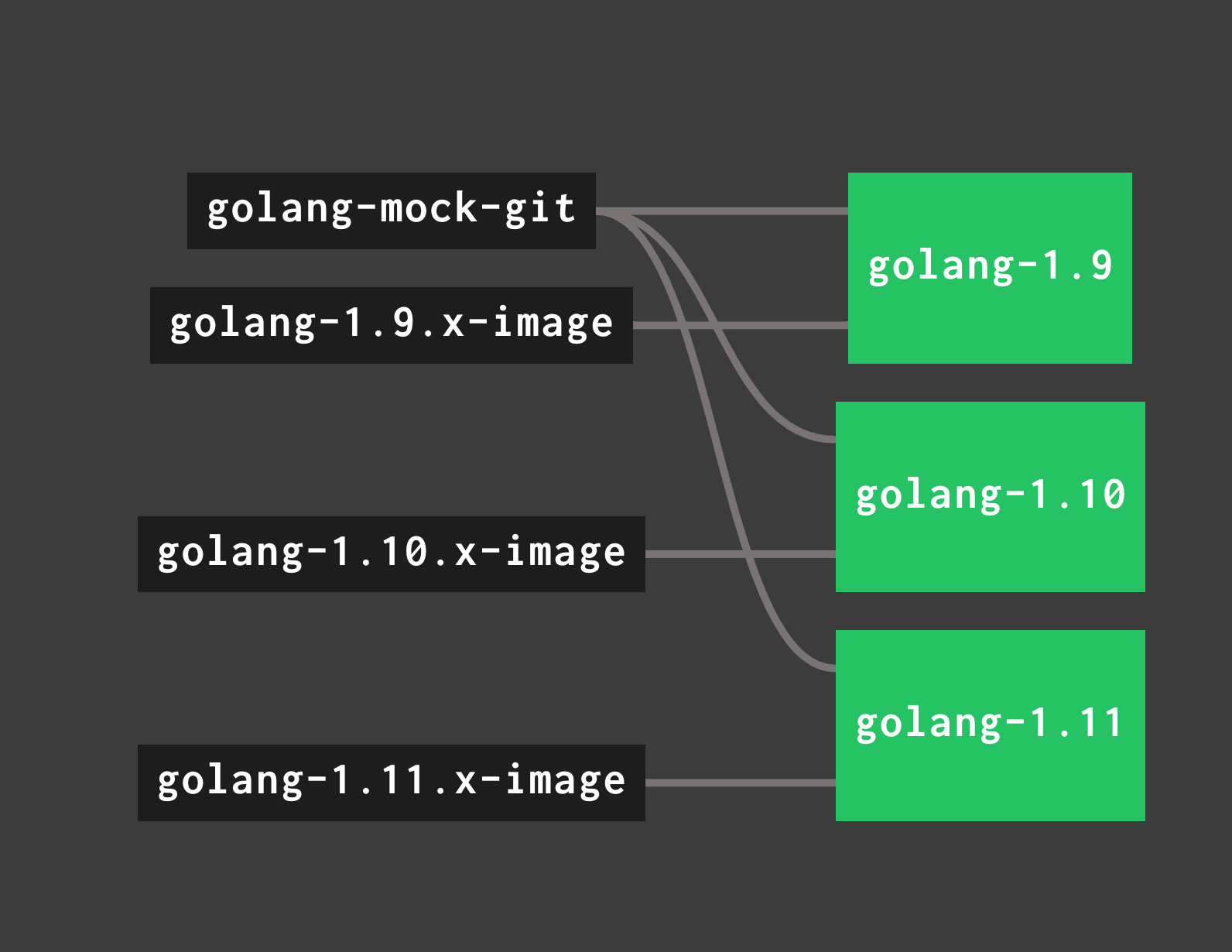

See for example this small pipeline which triggers builds on changes to different versions of Go, rather than waiting for a code change.

That's not entirely correct. Master already had working regression tests against some packages before the rewrite.

I recognise this - take it in context of the missing tests that you seem to have "Agreed" with as being missing.

Master had CLI tests. I don't see anything wrong with that way.

Nothing explicitly with regards to the approach. There's perhaps a bit too much mocking for my taste but I can live with that. The crux is the extensive coverage provided by the referenced tests in other projects compared to what we have.

I think you might be misunderstanding me here. Actually I want to rely on repo-independent solution to run the tests more often. I'd prefer a pipeline that triggers on any change to oms or the dependent projects to catch issues faster and to have them discoverable in one central location.

Ah sorry misunderstood you. Yes that would be nice. Though, it does sounds like a lot of work with little impact to our users that could be fixed for now with e.g. a six-hour cron for the OMS build.

The crux is the extensive coverage provided by the referenced tests in other projects compared to what we have.

:+1: Maybe better focus on this than building extensive pipelines ;-)

Ah sorry misunderstood you. Yes that would be nice. Though, it does sounds like a lot of work with little impact to our users that could be fixed for now with e.g. a six-hour cron for the OMS build.

Yes. See: but perhaps we could introduce a periodic build as a stopgap? - I think cron would give us a lot of what we want here and is cheaper.

Maybe better focus on this than building extensive pipelines ;-)

Yes. See: I have a few concerns with the state in no particular order - I think we should relatively prioritise these things, and I agree that the test coverage is more concerning.