nscp

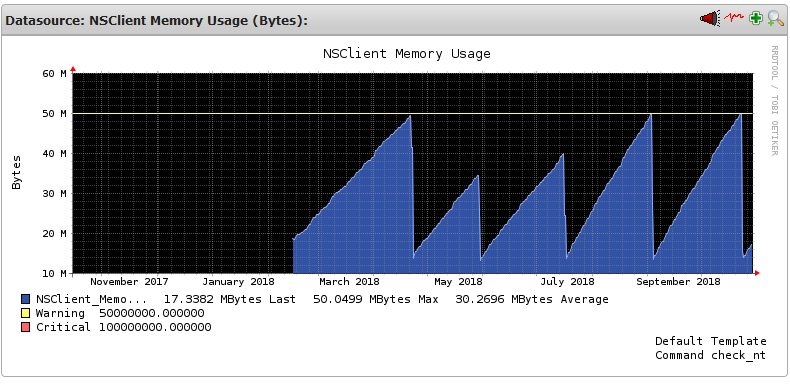

Memory Leak on Versions >= NSClient++ 0.5.2.35 2018-01-28

Issue and Steps to Reproduce

Upgrade from NSClient++ 0.5.1.31 2017-06-26 to NSClient++ 0.5.2.35 2018-01-28

Still exists in 0.5.2.41 2018-04-26

Expected Behavior

Memory usage should remain static

Actual Behavior

Memory usage grows over time, roughly in correlation with the number of checks. Upgrading to NSClient++ 0.5.2.39 2018-02-04 did not resolve it.

Details

- OS and Version: Windows Server 2008, 2008 R2 and 2012 R2

- Checking from: Open Monitoring Distribution running naemon core and gearman

- Checking with: check_nrpe, check_nt, check_nsc_web

Additional Details

NSClient++ log: Log is empty

Graphs

Memory leak is demonstrated in graphs here: https://imgur.com/a/APAYxAR

In the next week or so, I'll set up NSClient++ on an additional box and test different types of checks to try to narrow down the scope of the issue.

Since posting, I realise we are using check_nrpe for a very small number of checks and not ubiquitously across all Windows hosts, so that's ruled out.

I've now got three test boxes running to try to narrow down the scope of the problem:

- Aggressive: 3 check_nsc_web checks, plus check_nt for NSClient version and memory usage, on a 1 minute interval

- Aggressive: 3 check_nt checks, plus check_nt for NSClient version and memory usage, on a 1 minute interval

- Control: only check_nt for NSClient version and memory usage, on a 1 minute interval
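One way to compare boxes like these is to fit a straight line to the memory samples from each and compare the slopes. The sketch below is only an illustration: the function name and the sample data are made up, and it assumes you already have (minutes, bytes) pairs exported from the monitoring system.

```python
from statistics import mean


def leak_rate_bytes_per_minute(samples):
    """Least-squares slope of memory (bytes) against time (minutes).

    `samples` is a list of (minutes_since_start, memory_bytes) pairs.
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    times = [t for t, _ in samples]
    mems = [m for _, m in samples]
    t_bar, m_bar = mean(times), mean(mems)
    denom = sum((t - t_bar) ** 2 for t in times)
    if denom == 0:
        raise ValueError("samples must cover more than one point in time")
    return sum((t - t_bar) * (m - m_bar) for t, m in samples) / denom


# Hypothetical data for illustration only, not measurements from this issue.
leaky_box = [(t, 20_000_000 + 500 * t) for t in range(60)]
control_box = [(t, 20_000_000) for t in range(60)]

print(leak_rate_bytes_per_minute(leaky_box))
print(leak_rate_bytes_per_minute(control_box))
```

A slope near zero on the control box and a clearly positive slope on a test box would point at the checks that only the test box runs.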

I will share findings in due course.

The results are in. It's fairly conclusively an issue in check_nsc_web:

https://imgur.com/a/nc2vFHp

I'll see what I can do to help narrow down the problem further still.

When you say an issue in check_nsc_web do you mean a problem when check_nsc_web is used? I.e. there's a memory leak in NSClient's REST API?

@mintsoft yes, a problem when check_nsc_web is used with NSClient's REST API.

Over the weekend we left two tests running, one invoking check_ok and the other invoking check_critical, both via check_nsc_web (and thus using NSClient's REST API).

Both resulted in NSClient leaking memory in the same manner as other checks.

To confirm, the nsclient.ini used during testing was the stock configuration:

; alias_mem - Alias for alias_mem. To configure this item add a section called: /settings/external scripts/alias/alias_mem

alias_mem = checkMem MaxWarn=80% MaxCrit=90% ShowAll=long type=physical type=virtual type=paged type=page

The commands being used were:

Leaks memory:

/omd/sites/default/lib/nagios/plugins/check_nsc_web -p password -u https://servername:8443 -k alias_mem

Doesn't leak memory:

/omd/sites/default/lib/nagios/plugins/check_nt -H servername -p 12489 -v MEMUSE -w 80 -c 90

The memory leak seen with check_nsc_web is very slow, but it is definitely present, at a rate of about 360 bytes/minute on our production servers.
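To put that figure in perspective, here is a rough back-of-the-envelope calculation. It assumes the rate stays constant at 360 bytes/minute, which the thread does not establish (the growth appears to depend on how many checks are run), so treat the output as an order-of-magnitude estimate only.

```python
LEAK_BYTES_PER_MINUTE = 360  # rate reported in the comment above
MIB = 1024 * 1024


def growth_bytes(minutes, rate=LEAK_BYTES_PER_MINUTE):
    """Total growth in bytes after `minutes`, assuming a constant leak rate."""
    return rate * minutes


per_day = growth_bytes(24 * 60)
per_year = growth_bytes(365 * 24 * 60)

print(f"{per_day} bytes/day (~{per_day / MIB:.2f} MiB)")    # 518400 bytes/day (~0.49 MiB)
print(f"{per_year} bytes/year (~{per_year / MIB:.0f} MiB)")  # 189216000 bytes/year (~180 MiB)
```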

Just to keep this updated: we've rolled out 0.5.2.41 company-wide to fix https://github.com/mickem/nscp/issues/550. We've had it on a few servers for nearly a year, and you can see the background memory leak:

Our workaround is to restart the service when NSClient hits 50MB. We only use check_nsc_web and check_nt against these servers, and there's nothing in the nsclient.log.

I've started looking at this, as I'm seeing the same behaviour while we convert our NRPE checks to use the REST API under 0.5.3.4.

A quick run with valgrind --tool=memcheck --log-file="valgrind.log" --leak-check=full --show-leak-kinds=all -v ./nscp test

then running 50 times: ./check_nsc_web -k -p "pass" -u "https://localhost:8443" check_version

Shows:

==26571== 50 errors in context 1 of 31:

==26571== Thread 24:

==26571== Syscall param socketcall.sendto(msg) points to uninitialised byte(s)

==26571== at 0x763495B: send (send.c:31)

==26571== by 0xCA5F567: mg_broadcast (mongoose.c:3054)

==26571== by 0xCA4F1B8: Mongoose::ServerImpl::request_reply_async(unsigned long, std::string) (ServerImpl.cpp:136)

==26571== by 0xCA4F48F: Mongoose::request_job::run() (ServerImpl.cpp:291)

==26571== by 0xCA50BB4: Mongoose::ServerImpl::request_thread_proc(int) (ServerImpl.cpp:90)

==26571== by 0x4E2E56: operator() (function_template.hpp:767)

==26571== by 0x4E2E56: void has_threads::runThread<boost::function<void ()> >(boost::function<void ()>, boost::thread*) (has-threads.hpp:122)

==26571== by 0x4E2C46: operator() (mem_fn_template.hpp:280)

==26571== by 0x4E2C46: operator()<boost::_mfi::mf2<void, has_threads, boost::function<void()>, boost::thread*>, boost::_bi::list0> (bind.hpp:392)

==26571== by 0x4E2C46: operator() (bind_template.hpp:20)

==26571== by 0x4E2C46: boost::detail::thread_data<boost::_bi::bind_t<void, boost::_mfi::mf2<void, has_threads, boost::function<void ()>, boost::thread*>, boost::_bi::list3<boost::_bi::value<has_threads*>, boost::_bi::value<boost::function<void ()> >, boost::_bi::value<boost::thread*> > > >::run() (thread.hpp:117)

==26571== by 0x52C6A49: ??? (in /usr/lib/x86_64-linux-gnu/libboost_thread.so.1.54.0)

==26571== by 0x762D183: start_thread (pthread_create.c:312)

==26571== by 0x794103C: clone (clone.S:111)

==26571== Address 0x184938e4 is on thread 24's stack

==26571== in frame #1, created by mg_broadcast (mongoose.c:3038)

It would appear that each invocation of the REST API results in an error with that stack trace.
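The "running 50 times" step of the reproduction above could be scripted along these lines. This is a minimal sketch: the command is copied from the comment, while `run_repeatedly` and its `runner` parameter are hypothetical names introduced here so the loop can be exercised without a live NSClient++ instance.

```python
import subprocess

# Command copied from the reproduction above; adjust path, password and URL.
CHECK_CMD = [
    "./check_nsc_web", "-k", "-p", "pass",
    "-u", "https://localhost:8443", "check_version",
]


def run_repeatedly(cmd, times, runner=subprocess.run):
    """Run `cmd` `times` times in sequence and return the exit codes."""
    codes = []
    for _ in range(times):
        result = runner(cmd, capture_output=True, text=True)
        codes.append(result.returncode)
    return codes


# Example (needs check_nsc_web and a running `nscp test` under valgrind):
# codes = run_repeatedly(CHECK_CMD, 50)
# print(len(codes), "runs,", sum(1 for c in codes if c != 0), "non-zero exits")
```

Keeping the run count as a parameter makes it easy to check whether the valgrind error count scales with the number of requests, as the "50 errors" for 50 runs suggests.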

+1 for this problem.

Another +1 for this problem. I have 79 Windows servers and have to restart NSClient++ on 2 or 3 of them each week.

@mickem is there any chance of getting this resolved? Seems pretty widespread!

Is there any news about this problem?