Mask_RCNN


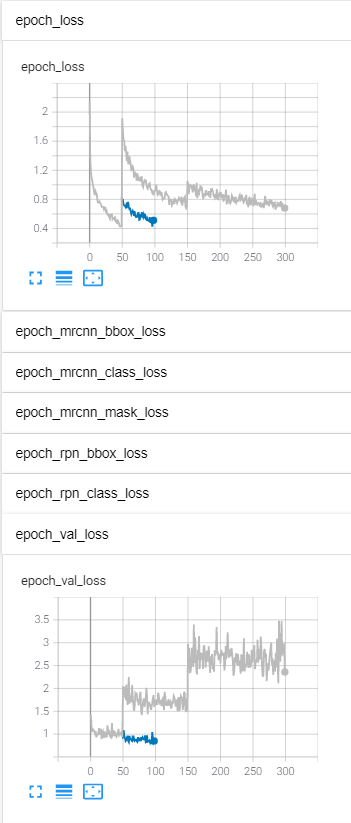

Huge jump in loss when training with multiple stages

If I train the model in a sequence of stages on different layers, there is a huge jump in loss at the transition from the heads stage to training more layers.

However, if I train the heads first and then start a completely new training run for more layers, there is no significant change in loss.

For example:

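```python
def train(model):
    # dataset_train, dataset_val, config and augmentation are defined at module level.
    # epoch_count must be initialised here; `+=` on an undefined local raises an error.
    # In Mask_RCNN, `epochs` is the total (absolute) epoch to train up to.
    epoch_count = 0

    # Training - Stage 1
    epoch_count += 50
    print("Training network heads")
    model.train(dataset_train, dataset_val,
                learning_rate=config.LEARNING_RATE * 100,
                epochs=epoch_count,
                layers='heads',
                augmentation=None)

    # Training - Stage 2
    epoch_count += 100
    print("Fine tune Resnet stage 3 and up")
    model.train(dataset_train, dataset_val,
                learning_rate=config.LEARNING_RATE * 50,
                epochs=epoch_count,
                layers='3+',
                augmentation=augmentation)

    # Training - Stage 3
    epoch_count += 150
    print("Fine tune all layers")
    model.train(dataset_train, dataset_val,
                learning_rate=config.LEARNING_RATE * 10,
                epochs=epoch_count,
                layers='all',
                augmentation=augmentation)
```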

Or, train the heads only:

```python
def train(model):
    # epoch_count must be initialised before it is incremented.
    epoch_count = 0

    # Training - Stage 1
    epoch_count += 50
    print("Training network heads")
    model.train(dataset_train, dataset_val,
                learning_rate=config.LEARNING_RATE * 100,
                epochs=epoch_count,
                layers='heads',
                augmentation=None)
```

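Let that finish, and then start a new training run:

```python
def train(model):
    # epoch_count must be initialised before it is incremented; 0 is a
    # placeholder, set it to the epoch this new run should count from.
    epoch_count = 0

    epoch_count += 100
    print("Fine tune Resnet stage 3 and up")
    model.train(dataset_train, dataset_val,
                learning_rate=config.LEARNING_RATE * 50,
                epochs=epoch_count,
                layers='3+',
                augmentation=augmentation)
```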

In the plot, the grey line is the run with all stages inside a single `train(model)` function, and the blue line is the second stage started manually as a new training run. (The blue run was stopped early; it is only shown as an example.)

What could be the problem?

Sorry, an unrelated question: what program do you use to view the loss curve?

@hanbangzou I am having the same problem. Did you find any solution to this? Thanks!!!

Every time a new stage starts (that is, a new set of layers is trained), the learning rate scheduler restarts its learning rate calculation. This may be one of the reasons.
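To illustrate that comment, here is a minimal sketch assuming a simple step-decay schedule. The `step_decay` and `per_stage_lrs` names and the decay parameters are hypothetical and not part of Mask_RCNN; the sketch only models the schedule arithmetic. If the schedule is evaluated with an epoch counter that restarts at 0 for each stage, the learning rate jumps back up to its base value at every stage boundary:

```python
def step_decay(base_lr, epoch, drop=0.5, every=10):
    """Hypothetical step decay: multiply base_lr by `drop` every `every` epochs."""
    return base_lr * drop ** (epoch // every)


def per_stage_lrs(stages):
    """Per-epoch learning rates when the schedule restarts at each stage.

    stages: list of (base_lr, num_epochs) tuples, one per training stage.
    """
    lrs = []
    for base_lr, num_epochs in stages:
        # The local epoch counter restarts at 0 for each stage,
        # so the accumulated decay is undone at every stage boundary.
        for local_epoch in range(num_epochs):
            lrs.append(step_decay(base_lr, local_epoch))
    return lrs


lrs = per_stage_lrs([(0.01, 20), (0.01, 20)])
print(lrs[19], lrs[20])  # 0.005 0.01: last epoch of stage 1 vs first epoch of stage 2
```

A sudden increase in the learning rate like this is one plausible way to get a loss spike right after a stage transition.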

@ydzat @hanbangzou I have five or six stages in my training, each training different layers with different learning rates. The loss jump at every stage transition is really hurting my final metric reports. I am also trying to save only the best-performing models, and because of this jump it ends up saving the first two epochs of every stage. It would be great if there were a solution to this behavior. Thank you!!
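The "first epochs of every stage get saved" symptom is what you would expect if the "best so far" value is reset whenever a new stage starts, for example if a fresh save-best checkpoint rule is set up on each `train()` call (an assumption about the setup described above). Below is a minimal, framework-free sketch comparing a per-stage best with a best tracked across all stages; the `saved_epochs` function and the loss values are hypothetical:

```python
def saved_epochs(stage_losses, reset_best_each_stage):
    """Return the global epoch indices at which a 'save if best' rule fires.

    stage_losses: list of per-stage lists of validation losses.
    reset_best_each_stage: if True, 'best so far' restarts at +inf every stage.
    """
    saved = []
    best = float("inf")
    epoch = 0
    for losses in stage_losses:
        if reset_best_each_stage:
            best = float("inf")  # mimics a new save-best rule per stage
        for loss in losses:
            if loss < best:
                best = loss
                saved.append(epoch)
            epoch += 1
    return saved


# Hypothetical losses: stage 2 starts with a jump above stage 1's minimum.
stages = [[1.0, 0.8, 0.6], [0.9, 0.7, 0.5]]
print(saved_epochs(stages, reset_best_each_stage=True))   # [0, 1, 2, 3, 4, 5]
print(saved_epochs(stages, reset_best_each_stage=False))  # [0, 1, 2, 5]
```

Carrying the best value across stages skips the post-jump epochs (3 and 4 here), although it does not remove the jump itself.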

I did not solve this problem. For now, I train one stage and start the next stage as a new run after the first one has finished.

> what program do you use to view the loss curve?

TensorBoard.


Try reducing the initial learning rate of each new stage: when the epoch at which a stage ends is reached, set (manually or in code) the next stage's initial learning rate to the learning rate used in the previous epoch.
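A minimal sketch of that suggestion, again assuming a hypothetical step-decay schedule (`step_decay` and `matched_start_lrs` are illustrative names, not Mask_RCNN functions). Each new stage starts no higher than the learning rate reached in the last epoch of the previous stage:

```python
def step_decay(base_lr, epoch, drop=0.5, every=10):
    """Hypothetical step decay: multiply base_lr by `drop` every `every` epochs."""
    return base_lr * drop ** (epoch // every)


def matched_start_lrs(stages):
    """Per-epoch learning rates where each new stage starts no higher than
    the learning rate of the previous epoch.

    stages: list of (base_lr, num_epochs) tuples, one per training stage.
    """
    lrs = []
    for base_lr, num_epochs in stages:
        # Reduce the new stage's initial learning rate to the last learning
        # rate of the previous stage (if there is a previous stage).
        start_lr = base_lr if not lrs else min(base_lr, lrs[-1])
        for local_epoch in range(num_epochs):
            lrs.append(step_decay(start_lr, local_epoch))
    return lrs


lrs = matched_start_lrs([(0.01, 20), (0.01, 20)])
print(lrs[19], lrs[20])  # 0.005 0.005: no upward jump at the boundary
```

Only the starting value is matched here; the decay counter still restarts within each stage. In Mask_RCNN, the matched value would be passed as the `learning_rate` argument of the next `model.train(...)` call.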


In addition: the learning rate scheduler is reset at the start of each new stage, so another option is to manually carry over the scheduler's value from the last epoch of the previous stage.
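A minimal sketch of carrying the scheduler state over, assuming a single base learning rate for all stages and the same hypothetical step-decay schedule (`step_decay` and `continuous_lrs` are illustrative names). Instead of restarting the epoch counter per stage, one global counter feeds the schedule, so the learning rate continues smoothly across stage boundaries:

```python
def step_decay(base_lr, epoch, drop=0.5, every=10):
    """Hypothetical step decay: multiply base_lr by `drop` every `every` epochs."""
    return base_lr * drop ** (epoch // every)


def continuous_lrs(base_lr, stage_lengths):
    """Per-epoch learning rates when the scheduler state is carried over.

    stage_lengths: number of epochs in each stage. A single global epoch
    counter feeds the schedule, so nothing is reset at a stage boundary.
    """
    lrs = []
    global_epoch = 0  # inherited by each stage from the previous one
    for num_epochs in stage_lengths:
        for _ in range(num_epochs):
            lrs.append(step_decay(base_lr, global_epoch))
            global_epoch += 1
    return lrs


lrs = continuous_lrs(0.01, [15, 15])
print(lrs[14], lrs[15])  # 0.005 0.005: no jump at the stage boundary
```

Compared with matching only the starting value, this also keeps the decay steps at the same global epochs as a single uninterrupted run would.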