memory leak since 1.53.0

Description

Since upgrade tomatrix-synapse-py3==1.53.0+focal1 from 1.49.2+bionic1 i observe memory leak on my instance. The upgrade is concomitant to OS upgrade from Ubuntu bionic => focal / Python 3.6 to 3.8

We didn't change homeserver.yaml during upgrade

Our machine had 3 GB memory for 2 years and now 10G isn't enough.

Steps to reproduce

root@srv-matrix1:~# systemctl status matrix-synapse.service ● matrix-synapse.service - Synapse Matrix homeserver Loaded: loaded (/lib/systemd/system/matrix-synapse.service; enabled; vendor preset: enabled) Active: active (running) since Fri 2022-03-04 10:21:05 CET; 4h 45min ago Process: 171067 ExecStartPre=/opt/venvs/matrix-synapse/bin/python -m synapse.app.homeserver --config-path=/etc/matrix-synapse/homese> Main PID: 171075 (python) Tasks: 30 (limit: 11811) Memory: 6.1G CGroup: /system.slice/matrix-synapse.service └─171075 /opt/venvs/matrix-synapse/bin/python -m synapse.app.homeserver --config-path=/etc/matrix-synapse/homeserver.yaml ->

I tried to change this config without success expiry_time: 30m

syslogs says oom killer killed synapse: Mar 4 10:20:54 XXXX kernel: [174841.111273] oom-kill:constraint=CONSTRAINT_NONE,nodemask=(null),cpuset=/,mems_allowed=0,global_oom,task_memcg=/system.slice/matrix-synapse.service,task=python,pid=143210,uid=114 Mar 4 10:20:54 srv-matrix1 kernel: [174841.111339] Out of memory: Killed process 143210 (python) total-vm:12564520kB, anon-rss:9073668kB, file-rss:0kB, shmem-rss:0kB, UID:114 pgtables:21244kB oom_score_adj:0

no further usefull information in homeserver.log

Version information

$ curl http://localhost:8008/_synapse/admin/v1/server_version {"server_version":"1.53.0","python_version":"3.8.10"}

-

Version: 1.53.0

-

Install method: Ubuntu apt repo

-

Platform: VMWare

I could be happy to help getting python stacktrack to debug this, if I have any lead how to do so.

(sorry for my english)

Hi, thanks for your report!

Out of interest, where is this graph from? The units are in K but I assume that's meant to be G.

In any case, anon-rss:9073668kB is clear that we're looking at 9+ GB.

Do you happen to have metrics set up? The graphs there would give us some indication as to where the memory is being used.

You could try reducing the cache factor, but if you've only needed 3 GB so far then I'm not sure this would help as it sounds like there's something wrong.

Are you able to downgrade to try a few versions and see which one introduces the problem?

If I understand correctly, you're using the packages.matrix.org repository, so you should be able to downgrade by specifying apt install matrix-synapse-py3==1.50.0 (for example).

It would be really useful to know which version introduces the problem if possible!

This is shinken + graphite, unit should be G not K yes :) We don't have graphana so metrics are not enabled.

No I can't do via apt because older version are not proposed:

# apt-cache policy matrix-synapse-py3

matrix-synapse-py3:

Installé : 1.53.0+focal1

Candidat : 1.53.0+focal1

Table de version :

*** 1.53.0+focal1 500

500 https://packages.matrix.org/debian focal/main amd64 Packages

100 /var/lib/dpkg/status

I just downgraded to 1.50.2, let's see if there is still mem leaks.

wget https://packages.matrix.org/debian/pool/main/m/matrix-synapse-py3/matrix-synapse-py3_1.50.2%2Bfocal1_amd64.deb

dpkg -i matrix-synapse-py3_1.50.2+focal1_amd64.deb

Memory increases very regulary even during the night with no are little activity, could it be a cleanup process ? I will update this issue in few hours.

V1.50.2 downgraded after 12AM

I can do one update per day, will continue

updated:

1.53.0 service is consuming more than 600M after 22 minutes

I'm not entirely sure if this is related, but the expiry_time config flag is now deprecated and should be removed from your config. From the 1.53.0 release notes: "Enable cache time-based expiry by default. The expiry_time config flag has been superseded by expire_caches and cache_entry_ttl."

If the memory leak is the result of the caches never expiring, removing the deprecated flag from the config and restarting should fix it. Synapse should then expire caches after 30 mins by default, or you can use the new flags to set a different time-to-live for the caches.

I added the flag expiry_time in purpose of testing theses last days by I didn't had it before I noticed the problem. Looks like to me a memory leak or cache not expiring on default behavior, but the regular growth even during the night makes me think" or the first one. I'm wondering why am i the only one to report such a problem, our conf is standart, except for SAML2. Will try to set expire_caches / cache_entry_ttl, but tomorrow. Thanks

tried 1.54.0 with

expire_caches: 2m

cache_entry_ttl: 2m

i still need rolling back to 1.52

Hi there thanks for getting back to us. One thing: expire_caches is a boolean flag, so the only valid values are true or false. Thus, if you wanted to the ttl on the caches to be 2m, the configuration would be

expire_caches: true

cache_entry_ttl: 2m

And just to be crystal clear, the expiry_time option needs to be deleted from the config. It seems like something else is probably going on here, but it's good to eliminate what we can.

retried with 1.54.0

expire_caches: true

cache_entry_ttl: 2m

same problem. Looks related to (lot of / second) queries done by pantalaimon + maubot client. I stopped them and the memory stopped increasing, but memory weren't freed so I still think there is a memory leak somewhere. Synapse memory usage is back to normal despite memory footprint increases but very slowly now. Our bots were very old (version > 1y), it could be an old API.

We don't really use our bots so I can stay like that, but this could be problematic one some installations (ore nasty clients) and could be use to do a DOS.

I can see an error on logs, but i don't think this is related since this error happened on 1.52.0

mars 12 18:48:25 srv-matrix1 matrix-synapse[2117]: 2022-03-12 18:48:25,014 - synapse.http.server - 100 - ERROR - GET-396 - Failed handle request via 'SyncRestServlet': <XForwardedForRequest at 0x7f12201fe340>

mars 12 18:48:25 srv-matrix1 matrix-synapse[2117]: Traceback (most recent call last):

mars 12 18:48:25 srv-matrix1 matrix-synapse[2117]: File "/opt/venvs/matrix-synapse/lib/python3.8/site-packages/twisted/internet/defer.py", line 1660, in _inlineCallbacks

mars 12 18:48:25 srv-matrix1 matrix-synapse[2117]: result = current_context.run(gen.send, result)

mars 12 18:48:25 srv-matrix1 matrix-synapse[2117]: StopIteration: SyncResult(next_batch=StreamToken(room_key=RoomStreamToken(topological=None, stream=445658, instance_map=frozendict.frozendict({})), presence>

mars 12 18:48:25 srv-matrix1 matrix-synapse[2117]: During handling of the above exception, another exception occurred:

mars 12 18:48:25 srv-matrix1 matrix-synapse[2117]: Traceback (most recent call last):

mars 12 18:48:25 srv-matrix1 matrix-synapse[2117]: File "/opt/venvs/matrix-synapse/lib/python3.8/site-packages/synapse/http/server.py", line 269, in _async_render_wrapper

mars 12 18:48:25 srv-matrix1 matrix-synapse[2117]: callback_return = await self._async_render(request)

mars 12 18:48:25 srv-matrix1 matrix-synapse[2117]: File "/opt/venvs/matrix-synapse/lib/python3.8/site-packages/synapse/http/server.py", line 471, in _async_render

mars 12 18:48:25 srv-matrix1 matrix-synapse[2117]: callback_return = await raw_callback_return

mars 12 18:48:25 srv-matrix1 matrix-synapse[2117]: File "/opt/venvs/matrix-synapse/lib/python3.8/site-packages/synapse/rest/client/sync.py", line 219, in on_GET

mars 12 18:48:25 srv-matrix1 matrix-synapse[2117]: response_content = await self.encode_response(

mars 12 18:48:25 srv-matrix1 matrix-synapse[2117]: File "/opt/venvs/matrix-synapse/lib/python3.8/site-packages/synapse/logging/opentracing.py", line 794, in _trace_inner

mars 12 18:48:25 srv-matrix1 matrix-synapse[2117]: return await func(*args, **kwargs)

mars 12 18:48:25 srv-matrix1 matrix-synapse[2117]: File "/opt/venvs/matrix-synapse/lib/python3.8/site-packages/synapse/rest/client/sync.py", line 242, in encode_response

mars 12 18:48:25 srv-matrix1 matrix-synapse[2117]: joined = await self.encode_joined(

mars 12 18:48:25 srv-matrix1 matrix-synapse[2117]: File "/opt/venvs/matrix-synapse/lib/python3.8/site-packages/synapse/logging/opentracing.py", line 794, in _trace_inner

mars 12 18:48:25 srv-matrix1 matrix-synapse[2117]: return await func(*args, **kwargs)

mars 12 18:48:25 srv-matrix1 matrix-synapse[2117]: File "/opt/venvs/matrix-synapse/lib/python3.8/site-packages/synapse/rest/client/sync.py", line 363, in encode_joined

mars 12 18:48:25 srv-matrix1 matrix-synapse[2117]: joined[room.room_id] = await self.encode_room(

mars 12 18:48:25 srv-matrix1 matrix-synapse[2117]: File "/opt/venvs/matrix-synapse/lib/python3.8/site-packages/synapse/rest/client/sync.py", line 558, in encode_room

mars 12 18:48:25 srv-matrix1 matrix-synapse[2117]: serialized_timeline = serialize(

mars 12 18:48:25 srv-matrix1 matrix-synapse[2117]: File "/opt/venvs/matrix-synapse/lib/python3.8/site-packages/synapse/rest/client/sync.py", line 532, in serialize

mars 12 18:48:25 srv-matrix1 matrix-synapse[2117]: return self._event_serializer.serialize_events(

mars 12 18:48:25 srv-matrix1 matrix-synapse[2117]: File "/opt/venvs/matrix-synapse/lib/python3.8/site-packages/synapse/events/utils.py", line 530, in serialize_events

mars 12 18:48:25 srv-matrix1 matrix-synapse[2117]: return [

mars 12 18:48:25 srv-matrix1 matrix-synapse[2117]: File "/opt/venvs/matrix-synapse/lib/python3.8/site-packages/synapse/events/utils.py", line 531, in <listcomp>

mars 12 18:48:25 srv-matrix1 matrix-synapse[2117]: self.serialize_event(event, time_now=time_now, **kwargs) for event in events

mars 12 18:48:25 srv-matrix1 matrix-synapse[2117]: File "/opt/venvs/matrix-synapse/lib/python3.8/site-packages/synapse/events/utils.py", line 419, in serialize_event

mars 12 18:48:25 srv-matrix1 matrix-synapse[2117]: self._inject_bundled_aggregations(

mars 12 18:48:25 srv-matrix1 matrix-synapse[2117]: File "/opt/venvs/matrix-synapse/lib/python3.8/site-packages/synapse/events/utils.py", line 513, in _inject_bundled_aggregations

mars 12 18:48:25 srv-matrix1 matrix-synapse[2117]: serialized_event["unsigned"].setdefault("m.relations", {}).update(

mars 12 18:48:25 srv-matrix1 matrix-synapse[2117]: KeyError: 'unsigned'

Louis

Looks related to (lot of / second) queries done by pantalaimon + maubot client.

Are these /sync queries, or something else? Knowing which endpoint is involved can help us greatly narrow down what might have changed in v1.53.0 to cause this.

yes, maubot is doing a lot of sync queries. Memleaks looks like proportional with number of queries.

since 12/03, synapse has grow up to 3,8G of memory

since 12/03, synapse has grow up to 3,8G of memory

@lchanouha I'm guessing the spiky part of the graph from 2022-03-02 to 2022-03-08 is Synapse 1.53/1.54? Which version of Synapse were you running from 2022-03-13 onwards / what changed between the spiky part and now?

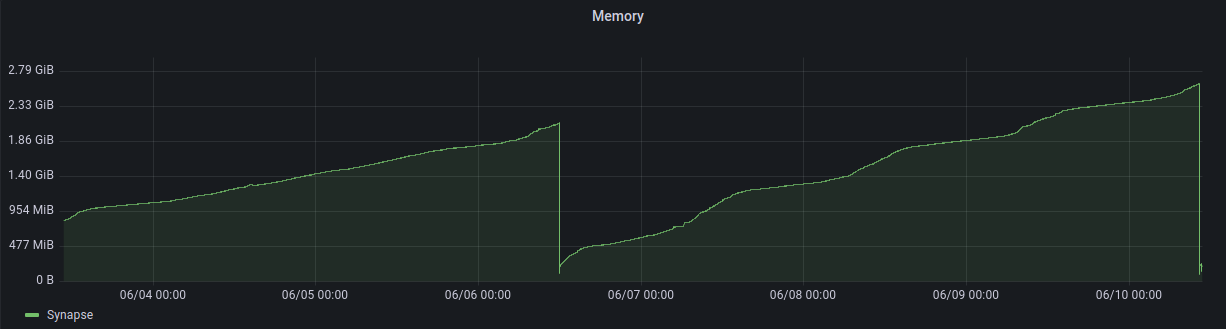

It's interesting to see that matrix.org's memory usage also shows a linear rise in memory over time, until restarted:

That's like to just be caches filling up though. We no longer have pre-1.53 data unfortunately.

That's like to just be caches filling up though. We no longer have pre-1.53 data unfortunately.

It's interesting to see that matrix.org's memory usage also shows a linear rise in memory over time, until restarted

Are all of matrix.org's cache's configured with time-based expiry?

Are all of matrix.org's cache's configured with time-based expiry?

I think so?

There appear to be three caches that are not subject to time-based expiry in Synapse:

get_users_in_room, with a base size of 100,000.get_rooms_for_user_with_stream_ordering, with a base size of 500,000.get_users_who_share_room_with_user, with a base size of 500,000.

In the case of matrix.org, get_rooms_for_user_with_stream_ordering's size tends to increase linearly but never hits capacity before a restart.

The only change to these caches that I can see in 1.53 is that we reduced the frequency at which get_users_in_room gets cleared (#11878).

@lchanouha Would you be willing to try setting the per_cache_factors for these three caches to something small like 0.01 for a couple of days, to see if the memory usage hits a ceiling?

@squahtx that's done, lets see the behaviour next days.

per_cache_factors:

get_users_in_room: 0.01

get_rooms_for_user_with_stream_ordering: 0.01

get_users_who_share_room_with_user: 0.01

Here is the updated graph, (service was running since Sat 2022-03-12 18:43:33 CET; 3 weeks 5 days ago, v1.54.0) and used 4,1G

We may have a ceiling here, but 5G of cache would be very high on our little installation. We have 560 users (probably less than 100 active) & 2,8G in /var/lib/postgresql

We have an experimental option to track the memory usage of caches, though it does add load to the server so I'm not sure its feasible for you to leave it running? If you want to try it then add the following to your config:

caches:

track_memory_usage: true

# .. rest of the caches config

You'll need to install the pympler python library in your virtual env.

This will add the following Prometheus metric synapse_util_caches_cache_size_bytes, which will give the estimated total size of the cache in bytes.

Is this still an issue/has this been resolved?

We are still seeing this. We upgraded to 1.54.0 a few months ago and we've been having to bounce synapse daily since then.

@byanes what version are you on now though? The current version is 1.60.0.

@aaronraimist We haven't upgraded off of 1.54.0 yet. Is there anything specific that you think might have fixed this? We can try upgrading later today. If it helps, below is what we see day-to-day. We don't have very many active users, but they are generally active between 7am and 4pm ET every weekday. If we don't bounce the container, it will consume all 8G of memory in 7-8 days.

@tomsisk I'm not the developer so I don't know if specific changes fixed it but saying "We are still seeing this" while behind 6 versions behind (technically 11 counting patch releases) doesn't seem like a very helpful comment. I would recommend you at least see if the current version improves it and if not then enable https://github.com/matrix-org/synapse/issues/12160#issuecomment-1097993084 and provide more information so they can fix it. (I'm assuming you operating the same server as @byanes?)

Just glancing at the changelog there are several changes that specifically call out reducing memory usage. https://github.com/matrix-org/synapse/pull/12544 https://github.com/matrix-org/synapse/pull/12656 https://github.com/matrix-org/synapse/pull/12554 https://github.com/matrix-org/synapse/pull/10533

Our last upgrade unleashed a pretty large memory leak so we're not exactly excited to live on the bleeding edge when there isn't a lot of time to commit to this at the moment. You have a point that we haven't done anything, but I think @byanes comment was just so this didn't get blindly closed out as fixed when the actual issue was never diagnosed in the first place. We'll try upgrading and see where that gets us.

Looks like this is still an issue in 0.60.0. I'll try to turn on track_memory_usage to see if there's anything helpful there.

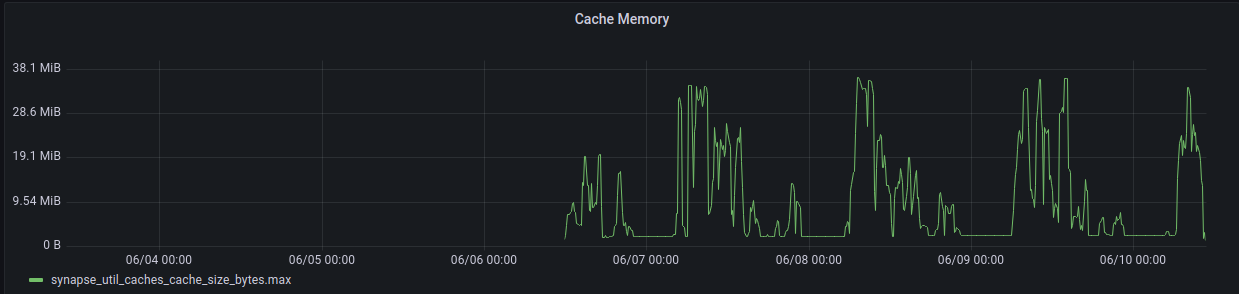

Our issue doesn't look related to cache, the cache usage is consistently very low.

> Our issue doesn't look related to cache, the cache usage is consistently very low.

synapse_util_caches_cache_size_bytes has a label for each individual cache. Does that chart show the sum over all caches? Those numbers look impossibly low.

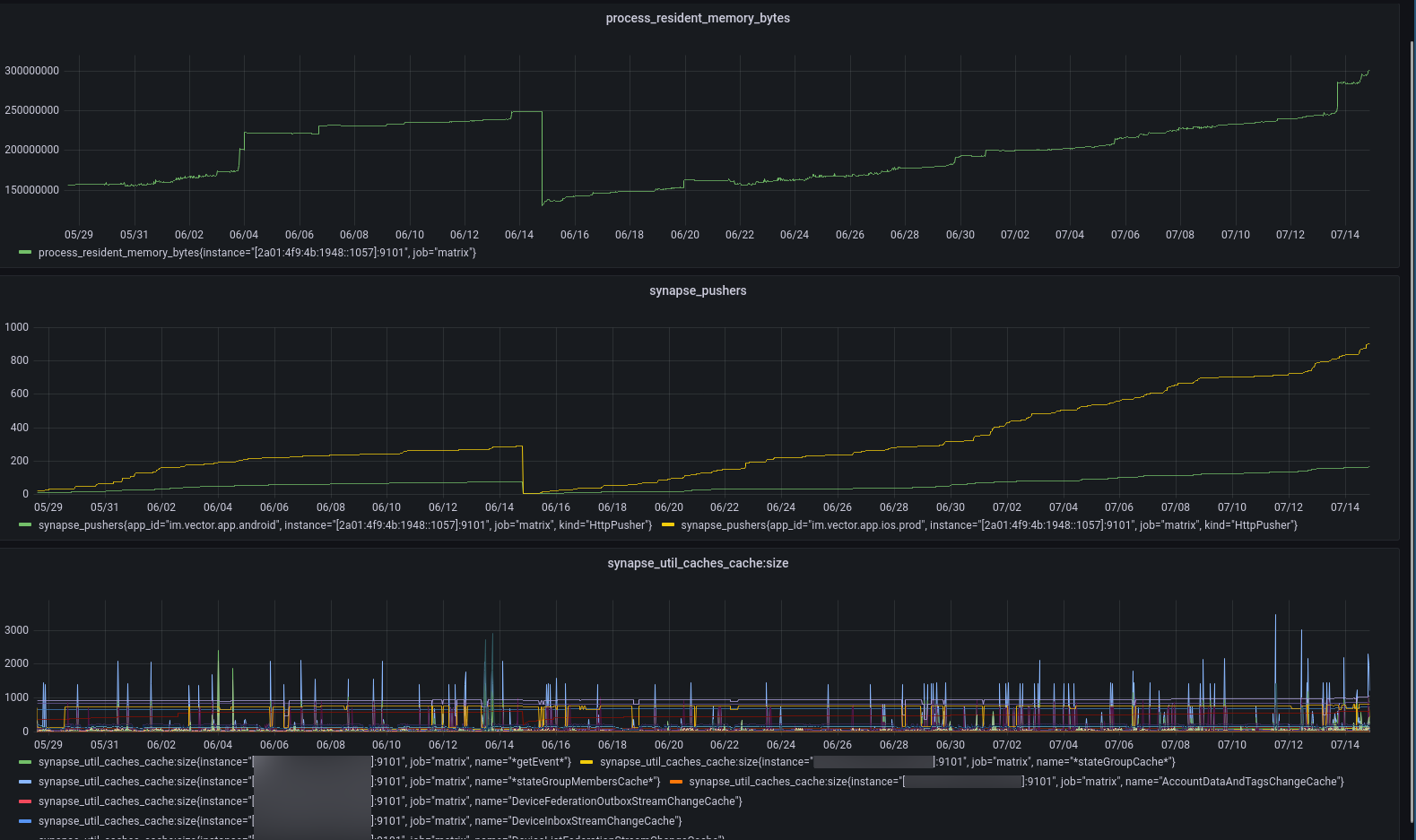

I don't want to hijack this issue, but it looks like we have a very similar memleak pattern (also a few hundred MB per month):

Apart from the used memory (first chart), I've also discovered that the continuous increase seems to be correlated with

Apart from the used memory (first chart), I've also discovered that the continuous increase seems to be correlated with synapse_pushers in our case (second chart). I've also added the requested synapse_util_caches_cache:size graphs for all individual caches, those all seem not to be correlated.

@felixdoerre Would you be willing to turn on track_memory_usage (see https://github.com/matrix-org/synapse/issues/12160#issuecomment-1097993084 for instructions) and get a chart of synapse_util_caches_cache_size_bytes instead?

It'd be very useful to see the largest caches by memory usage instead of number of entries.