LibRecommender


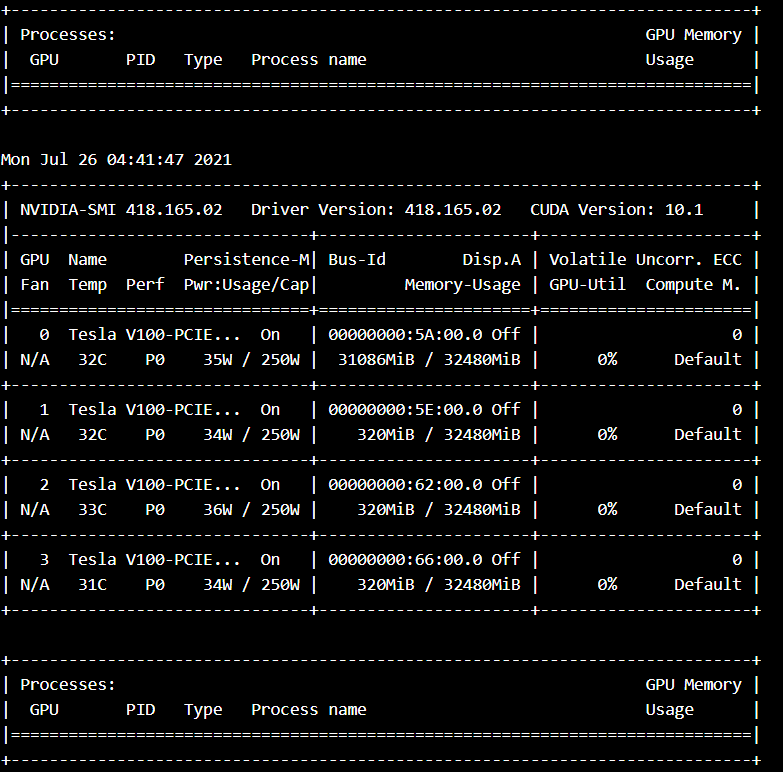

Multi-GPU training is not supported

How can I train models on multiple GPUs on the same machine? The current generic TensorFlow solution for the Keras API is to use `tf.distribute.MirroredStrategy()`, as below:

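```python
import tensorflow as tf
from libreco.algorithms import WideDeep

# data_info and args come from the surrounding training script
strategy = tf.distribute.MirroredStrategy()

# Build the model inside the strategy scope
with strategy.scope():
    model = WideDeep("ranking", data_info, embed_size=16, n_epochs=args.n_epochs,
                     lr={"wide": 0.01, "deep": 1e-4},
                     lr_decay=False, reg=None, batch_size=args.batch_size,
                     num_neg=1, use_bn=False,
                     dropout_rate=None,
                     hidden_units="128,64,32", tf_sess_config=None)
```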

However, since the models in this library do not use the Keras API, this solution does not work here. Do you have any suggestions?

Sorry, I have just noticed that `tf.distribute.MirroredStrategy()` does work with LibRecommender models, so I am closing this issue.

I have realized that using `tf.distribute.MirroredStrategy()` with this library has a problem: most of the ops are placed on GPU:0. How can I distribute the work evenly across the GPUs?

Following the multi-GPU implementation at https://github.com/vahidk/EffectiveTensorflow/tree/v1#multi_gpu, I added a `make_parallel` method to the `WideDeep` class in `algorithms/wide_deep.py` for the Wide & Deep model with the ranking task:

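```python
# Added to the WideDeep class in algorithms/wide_deep.py; tf here is the
# TF1-style graph API (tf.variable_scope, tf.get_variable_scope) used in that file.
@staticmethod
def make_parallel(fn, num_gpus, **kwargs):
    # Split every input tensor into num_gpus equal shards along axis 0
    # (tf.split requires the batch size to be divisible by num_gpus).
    in_splits = {}
    for k, v in kwargs.items():
        in_splits[k] = tf.split(v, num_gpus)

    out_split = []
    for i in range(num_gpus):
        # Place the i-th shard's computation on GPU i, reusing the same variables.
        with tf.device(tf.DeviceSpec(device_type="GPU", device_index=i)):
            with tf.variable_scope(tf.get_variable_scope(), reuse=tf.AUTO_REUSE):
                out_split.append(fn(**{k: v[i] for k, v in in_splits.items()}))

    # Concatenate the per-GPU outputs back into a single batch.
    return tf.concat(out_split, axis=0)
```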
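To make the data flow of `make_parallel` concrete without any GPUs, here is a minimal NumPy sketch of the same split, apply, concatenate pattern. `make_parallel_np` and the NumPy version of the loss are hypothetical stand-ins written for illustration, not LibRecommender or TensorFlow code, and device placement is omitted, so the shards simply run one after another. It checks that sharding an element-wise loss gives the same per-example values, and therefore the same mean, as the unsharded call.

```python
import numpy as np


def sigmoid_cross_entropy_with_logits(labels, logits):
    # Hypothetical NumPy stand-in using the numerically stable formula
    # documented for tf.nn.sigmoid_cross_entropy_with_logits:
    # max(x, 0) - x * z + log(1 + exp(-|x|))
    return np.maximum(logits, 0) - logits * labels + np.log1p(np.exp(-np.abs(logits)))


def make_parallel_np(fn, num_shards, **kwargs):
    # Split every keyword argument into num_shards equal pieces along axis 0,
    # mirroring tf.split(v, num_gpus); np.split raises ValueError if the
    # batch is not evenly divisible.
    in_splits = {k: np.split(v, num_shards) for k, v in kwargs.items()}
    # On real hardware each call would sit under its own tf.device(...)
    # scope; here the shards are processed sequentially.
    out_split = [fn(**{k: v[i] for k, v in in_splits.items()})
                 for i in range(num_shards)]
    # Reassemble the per-example outputs in the original batch order.
    return np.concatenate(out_split, axis=0)


rng = np.random.default_rng(0)
labels = rng.integers(0, 2, size=8).astype(np.float64)
logits = rng.normal(size=8)

full = sigmoid_cross_entropy_with_logits(labels=labels, logits=logits)
sharded = make_parallel_np(sigmoid_cross_entropy_with_logits, 4,
                           labels=labels, logits=logits)
print(np.allclose(full, sharded))               # True
print(np.isclose(full.mean(), sharded.mean()))  # True
```

Because the loss is computed independently per example, the order-preserving concatenation followed by a single mean is equivalent to computing the loss on the whole batch.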

Then I used `make_parallel` to parallelize the forward pass and backprop in the `_build_train_ops` method as follows:

```python
if num_gpu is None:
    self.loss = tf.reduce_mean(
        tf.nn.sigmoid_cross_entropy_with_logits(labels=self.labels,
                                                logits=self.output)
    )
else:
    # parallel forward pass: split labels and logits across the GPUs
    loss = self.make_parallel(tf.nn.sigmoid_cross_entropy_with_logits, num_gpu,
                              labels=self.labels, logits=self.output)
    self.loss = tf.reduce_mean(loss)

# parallel backward pass: try to place each gradient op on the same device
# as the forward op it differentiates
colocate_gradients_with_ops = num_gpu is not None

# wide_optimizer, total_loss, global_steps and var_dict are defined
# elsewhere in _build_train_ops
wide_optimizer_op = wide_optimizer.minimize(total_loss,
                                            global_step=global_steps,
                                            var_list=var_dict["wide"],
                                            colocate_gradients_with_ops=colocate_gradients_with_ops)

deep_optimizer = tf.train.AdamOptimizer(self.lr["deep"])
deep_optimizer_op = deep_optimizer.minimize(total_loss,
                                            global_step=global_steps,
                                            var_list=var_dict["deep"],
                                            colocate_gradients_with_ops=colocate_gradients_with_ops)
```
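Why a single `tf.reduce_mean` over the concatenated shard losses is enough: with equal-sized shards, the gradient of the full-batch mean loss equals the average of the per-shard gradients, so each GPU can compute its own shard's gradients locally (which `colocate_gradients_with_ops=True` encourages by placing gradient ops next to their forward ops) and the result matches single-device training. The check below is a NumPy sketch using a hypothetical linear logistic model (`logistic_grad`, `sharded_grad` are illustrative names), not the Wide & Deep network itself.

```python
import numpy as np


def logistic_grad(w, x, y):
    # Gradient of the mean sigmoid cross-entropy for a linear model with
    # logits = x @ w:  d/dw mean(loss) = x.T @ (sigmoid(logits) - y) / n
    p = 1.0 / (1.0 + np.exp(-(x @ w)))
    return x.T @ (p - y) / len(y)


def sharded_grad(w, x, y, num_shards):
    # Each "device" computes the mean-loss gradient on its own equal-sized
    # shard; averaging the shard gradients recovers the full-batch gradient.
    xs, ys = np.split(x, num_shards), np.split(y, num_shards)
    grads = [logistic_grad(w, xi, yi) for xi, yi in zip(xs, ys)]
    return np.mean(grads, axis=0)


rng = np.random.default_rng(42)
x = rng.normal(size=(12, 3))
y = rng.integers(0, 2, size=12).astype(np.float64)
w = rng.normal(size=3)

print(np.allclose(logistic_grad(w, x, y), sharded_grad(w, x, y, 4)))  # True
```

The equality relies on the shards having the same size; with uneven shards the per-shard gradients would need to be weighted by shard size instead of averaged uniformly.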

Note that `num_gpu` is passed to the `fit` method like this:

```python
model.fit(train_data, verbose=3, shuffle=True, eval_data=eval_data,
          patience_limit=2, metric_name_monitored='recall',
          k=args.num_retrieved_items,
          metrics=["precision", "recall", "map", "ndcg", "loss",
                   "balanced_accuracy", "roc_auc", "pr_auc"],
          num_gpu=num_gpu)
```
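One practical detail when passing `num_gpu`: `tf.split(v, num_gpus)` with an integer split count requires the batch dimension to be evenly divisible, so `batch_size` should be a multiple of `num_gpu`, and a smaller final batch (if the data loader yields one) would fail at run time. Below is a sketch of a hypothetical `trim_batch` helper, not part of LibRecommender, that drops the remainder before a batch is fed to the graph.

```python
import numpy as np


def trim_batch(num_gpu, **arrays):
    """Drop trailing rows so each array's length is a multiple of num_gpu.

    Hypothetical helper: tf.split(v, num_gpu) with an integer split count
    needs the batch dimension to divide evenly.
    """
    if not arrays or not num_gpu or num_gpu <= 1:
        return arrays
    n = len(next(iter(arrays.values())))
    usable = (n // num_gpu) * num_gpu
    return {k: v[:usable] for k, v in arrays.items()}


last_batch = {"labels": np.ones(10), "logits": np.zeros(10)}
trimmed = trim_batch(4, **last_batch)
print({k: len(v) for k, v in trimmed.items()})  # {'labels': 8, 'logits': 8}
```

Dropping a few examples per epoch is the simplest choice; a batch that trims to zero rows should be skipped rather than fed, and padding plus masking is an alternative when every example must be used.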

With these changes, training worked: the work is now distributed evenly across the GPUs, and the model trains much faster than before. However, this approach is model-specific and would have to be repeated for each of the other models. If there is a general way to apply it to all models, I would appreciate it.

I don't have multiple GPUs, so I didn't consider this when writing the library. Even if I wrote multi-GPU training code, I couldn't test it. But this is a really useful feature, and I'll take it into account if the resources become available someday.