"Communication link failure" state:"08S01" on a connection pool

Hi,

I have question about using connectionpool on my server.

I have defined a global variable named connection pool and when I want to query database I use it to query. that works fine but there is a problem that I think after a while being idle the server closes the connections and I face this error. "Communication link failure" state:"08S01" which the cause is explained here.

https://www.ibm.com/support/pages/odbc-client-connections-dropping-intermittently-error-communication-link-failure

Is there any way to workaround this problem in node-odbc?

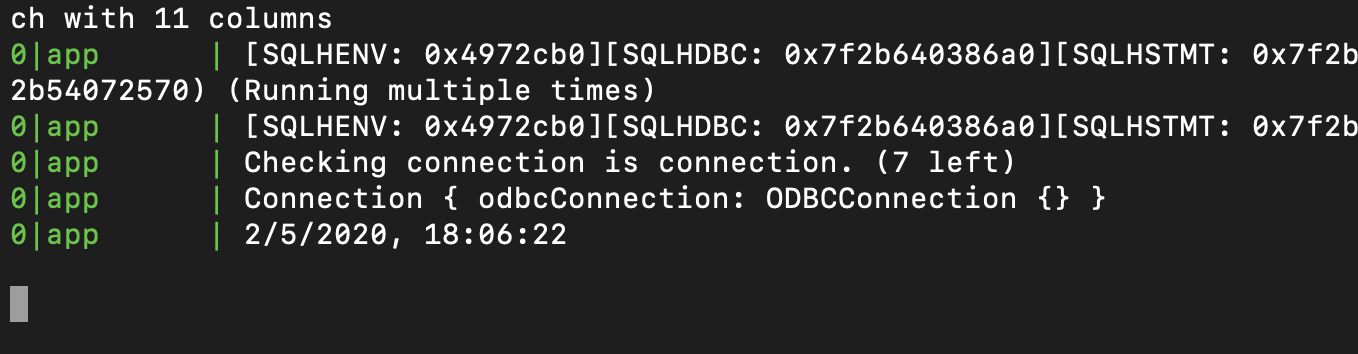

I am getting this issue too and it's urgent. Even with connectionTimeout as 0, after some idle time it seems to disconnect the connections. I went ahead and built node-odbc with DEBUG mode so we could see the log. You can also see a log of the pool object before the debug output.

0|app | Pool {

0|app | isOpen: true,

0|app | freeConnections: [

0|app | Connection { odbcConnection: ODBCConnection {} },

0|app | Connection { odbcConnection: ODBCConnection {} },

0|app | Connection { odbcConnection: ODBCConnection {} },

0|app | Connection { odbcConnection: ODBCConnection {} },

0|app | Connection { odbcConnection: ODBCConnection {} },

0|app | Connection { odbcConnection: ODBCConnection {} },

0|app | Connection { odbcConnection: ODBCConnection {} },

0|app | Connection { odbcConnection: ODBCConnection {} },

0|app | Connection { odbcConnection: ODBCConnection {} },

0|app | Connection { odbcConnection: ODBCConnection {} }

0|app | ],

0|app | connectionString: 'Driver=IBM i Access ODBC Driver;System=xxx;UID=xxx;Password=xxx',

0|app | initialSize: 10,

0|app | incrementSize: 10,

0|app | maxSize: null,

0|app | shrink: true,

0|app | connectionTimeout: 0,

0|app | loginTimeout: 0

0|app | }

0|app |

0|app | [SQLHENV: 0x4bad8c0][SQLHDBC: 0x7fe28c0173e0] ODBCConnection::Query()

0|app | [SQLHENV: 0x4bad8c0][SQLHDBC: 0x7fe28c0173e0] ODBCConnection::QueryAsyncWorker::Execute(): Running SQL '

0|app | select

0|app | --REDACTED--

0|app | '

0|app | [SQLHENV: 0x4bad8c0][SQLHDBC: 0x7fe28c0173e0] ODBCConnection::QueryAsyncWorker::Execute(): Running SQLAllocHandle(HandleType = SQL_HANDLE_STMT, InputHandle = 0x7fe28c0173e0, OutputHandlePtr = 0x4e5a560)

There is a long pause here before this error comes out:

0|app | [SQLHENV: 0x4bad8c0][SQLHDBC: 0x7fe28c0173e0] ODBCConnection::QueryAsyncWorker::Execute(): Running SQLAllocHandle(HandleType = SQL_HANDLE_STMT, InputHandle = 0x7fe28c0173e0, OutputHandlePtr = 0x4e5a560)

0|app | [SQLHENV: 0x4bad8c0][SQLHDBC: 0x7fe28c0173e0][SQLHSTMT: 0x7fe2a0021240] ODBCConnection::QueryAsyncWorker::Execute(): SQLPrepare returned -1

0|app | ODBC::GetSQLError : handleType=3, handle=0x7fe2a0021240

0|app | ODBC::GetSQLError : called SQLGetDiagField; ret=0, statusRecCount=1

0|app | ODBC::GetSQLError : calling SQLGetDiagRec; i=0, statusRecCount=1

0|app | ODBC::GetSQLError : errorMessage=[IBM][System i Access ODBC Driver]Communication link failure. comm rc=8413 - CWBCO1054 - A user-specified time-out occurred while sending or receiving data, errorSQLState=08S01

0|app | [SQLHENV: 0x4bad8c0][SQLHDBC: 0x7fe28c0173e0] ODBCConnection::Close()

@worksofliam so is it a kind of bug or we need to do some special kind of configurations?

Not sure. Waiting for a maintainer to suggest something :)

@worksofliam Did you try changing the TCP keepalive settings with CHGTCPA as I suggested ?

https://github.com/markdirish/node-odbc/blob/ca5c37727522ea309f90327d59b0eb2938da8969/src/odbc.cpp#L291

On line 291 of src/odbc.cpp, if you change the truth check to if (options->connectionTimeout >= 0) {, does that solve the issue? Reading the ODBC documentation, it seems that 0 should be the default, and not setting it should result in the same behaviour as 0, but I could be mistaken.

If that doesn't solve the issue, I think it wouldn't be an issue with the Node.js odbc connector, but with some underlying layer. Could be the driver, but could also be the TCP settings as Richard pointed out. You can use CFGTCP and then do option 3 (Change TCP/IP attributes) and see what the values for TCP keep alive

It seems that TCP keep alives are an issue for other ODBC drivers as well. For example, the Simba Greenplum ODBC driver has DSN options to set TCP keep alive time https://www.simba.com/products/Greenplum/doc/ODBC_InstallGuide/mac/content/odbc/re/configuring/tcpkeepalives.htm. This functionality isn't available on the IBM i ODBC driver, but just shows that TCP timeouts are a thing that can affect ODBC connectivity

@markdirish so we just need to set ODBC timeout in driver setting?

@am2222 The ODBC timeout may work for the client side, but I don't know if the ODBC connections in a connection pool will send keep-alives which means the connections to the DB2 server jobs for each connection can still die even though they may stay active on the i. If you're running a remote server like I believe Liam is, I think the IBMi TCP keep-alive setting (on CHGTCPA) will be very important to set because of WAN/VPN connectivity and potential router timeout issue.. I think the default IBMi keepalive may be 120 minutes. I changed mine to 2 and it resolved issues I was having with JDBC and other connections types like RDI so I'm guessing it will help for you as well.

@markdirish I am going to make that change, but where is SQL_ATTR_CONNECTION_TIMEOUT coming from? I can't find it in the IBM i docs for SQLSetConnectAttr - unless it's from somewhere else?

Edit: built change. One notice

../src/odbc.cpp:291:41: warning: comparison of unsigned expression >= 0 is always true [-Wtype-limits]

if (options->connectionTimeout >= 0) {

These are the Db2 CLI docs, which is 90-95% the same as ODBC, but for some reason don't seem to have this timeout...

The option can be found at the ODBC docs (which are annoyingly merged with SQL Server docs): https://docs.microsoft.com/en-us/sql/odbc/reference/syntax/sqlsetconnectattr-function?view=sql-server-ver15

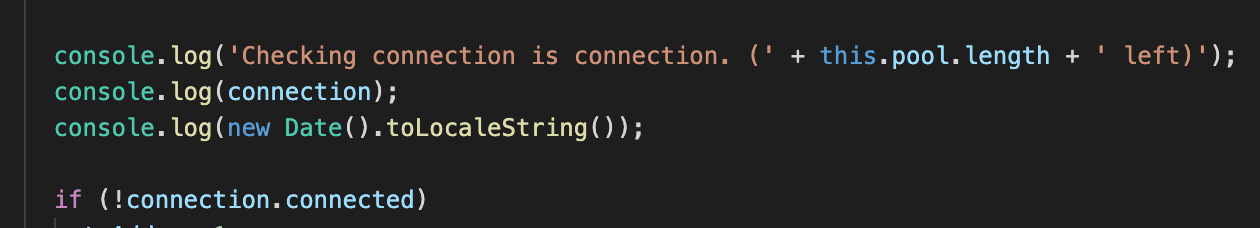

Any changes should just have to be done in JavaScript: Connections have a .connected property that calls SQLGetConnectAttr with the SQL_ATTR_CONNECTION_DEAD option. Returns true if SQL_ATTR_CONNECTION_DEAD returns false (since it makes much more sense for .connected to return true if its... connected). I imagine the code would just check:

if(!connection.connected) {

// close connection

// open a new connection and add it to pool

}

To me, it looks like connection.connected is actually just hanging for an indefinite amount of time if the connection does not exist. Here's the Getter for connected.

It's been hanging for 10 minutes now.

Some say it's still waiting for a response to this day

@worksofliam

That sort of makes sense, but I thought it would be smart enough to work around it.

SQL_ATTR_CONNECTION_DEAD, according to the ODBC spec, supposed to be a quick check of the last state of the connection. So for most drivers, it doesn't even check the connection, it just checks if you closed it. The previous maintainers of our ODBC driver decided to make ours not follow that, and instead actually send out a packet to see if it is dead. But it seems like it can't actually communicate over a dead connection and is just hanging... I would think it would be smart enough to timeout quickly and say "ok, no connection available", but apparently not. Will look into it more

Ok so maybe I have been understanding connection timeout ALL WRONG. It might be the time and query (or function) waits to complete before giving up:

"An SQLUINTEGER value corresponding to the number of seconds to wait for any request on the connection to complete before returning to the application. The driver should return SQLSTATE HYT00 (Timeout expired) anytime that it is possible to time out in a situation not associated with query execution or login.

If ValuePtr is equal to 0 (the default), there is no timeout."

So... maybe try setting it to like 5, and see if that helps your hang (with your .connected code)

@markdirish Hi, I am using netezza odbc driver, it works fine but I still have this issue with connection pool, IS there any way to check if connections are dead renew pool?

Just to revisit this, PHP uses in procedural ODBC and PDO_ODBC uses if the data source is read-only; it seems to be the most consistent check, unfortunately. (Procedural ODBC used it for a long time, and I added it to PDO_ODBC based on that.) However, I'm not sure of the methodology used to determine that, so it could be worth looking into alternatives.

@NattyNarwhal AFAICT they only use SQL_DATA_SOURCE_READ_ONLY since they want to support ODBC 2-only drivers and SQL_ATTR_CONNECTION_DEAD is ODBC 3.5 (and the comments claim some drivers implement it improperly, which for sure the IBM i Access driver did until the most recent fixpack -- though for our driver SQL_ATTR_CONNECTION_DEAD was in the same boat, problem-wise)

@kadler Do you think if they update their PASE ODBC driver the issue could go away by using the dead connection check ?

In other words, this should work:

if(!connection.connected) {

// close connection

// open a new connection and add it to pool

}

The latest IBM i Access ODBC driver now supports SQL_ATTR_LOGIN_TIMEOUT; and SQL_ATTR_CONNECTION_TIMEOUT on systems other than Windows. It also changes behavior of SQL_ATTR_CONNECTION_DEAD and SQL_DATA_SOURCE_READ_ONLY to only report the state of the connection (not actually try to ping the system).

This should help in solving a lot of the problems here, though there may be other issues involved. Certainly, on the previous driver you would have issues using either attribute if you were not on Windows since connection issues could prevent the ping from ever working and the attempt will never time out. Of course, even on the newer driver, you still need to do something to enable timeouts -- if the application doesn't set either attribute, then you can use cwbcopwr to set the send and receive timeouts (and also enable keep alives, as well).

So if I understand correctly, doing a dead connection check could still be problematic because the local connection could report good to go, but the IBM i service thread related to the connection could actually be dead if the job recycled. Or would the ODBC driver attempt to reconnect once a query happens and it sensed the dead connection ?

As long as the socket is still up, the dead connection check will still report it's good. That is all the ODBC spec says that should happen. If the remote job is dead, though, the socket should get disconnected.

However, if the connection has died between last use, that won't be known at check time, only once the connection is returned to the application and is attempted to be used. Of course, that's how the ODBC spec defines it to work.

A driver must implement this option efficiently or it will impair the connection pooling performance. Specifically, a call to get [SQL_ATTR_CONNECTION_DEAD] should not cause a round trip to the server. Instead, a driver should just return the last known state of the connection. The connection is dead if the last trip to the server failed, and not dead if the last trip succeeded.

https://docs.microsoft.com/en-us/sql/odbc/reference/develop-app/driver-manager-connection-pooling?view=sql-server-ver15

So Liam's scenario could still occur or will a low query timeout at least reduce latency now ?

My lowly brain is just trying to understand if the latest stuff actually made anything better. In my case that's why I have chosen not to use pooling.

I would think a short ping to the server with a short timeout would be a good thing in the dead connection check.

Just trying to get it right :-)

This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

This issue still seems to be occurring. Is the workaround to use connection instead of pools?

I never did achieve clarity on this after my comment from May 26. Maybe @markdirish and @kadler can provide a thought process on whether pooled connections are good or bad. I'm still thinking there may be some issues.

@phpdave did you change to non-pooled connections ? Describe what issue you're seeing.

I think this is basically the same issue as #255 and Mark's suggestion here would be helpful: https://github.com/markdirish/node-odbc/issues/63#issuecomment-581983751

This prevents handing out "known bad" connections, however there could still be issues:

- SQL_ATTR_CONNECTION_DEAD returns the last known connection state. If the socket has been disconnected since it was last used, you still have a problem

- retrieving SQL_ATTR_CONNECTION_DEAD on IBM i Access drivers prior to SP26 can cause hangs since it tries to ping the server*

*if the socket is still up, but the server job is unresponsive for some reason it will hang indefinitely unless socket timeouts are enabled (and socket timeouts were only supported on Windows prior to SP26)

To make this more foolproof, we could add some kind of check on top. Maybe you could register a callback which would be run prior to giving back a connection. From there you could run a validation query or something. Of course, for IBM i if the server job is unresponsive this will still hang without timeouts set.

@worksofliam Is this issue solved for you? I am running into it on Node/odbc/pools/IBMi as well.

This is what they did in PHP to fix this. https://github.com/php/php-src/commit/f3a14d1b1ad6cd60570b264f913536c4b28bc10f

Not sure where in this repo this would go

It looks like node is doing something similar here: https://github.com/markdirish/node-odbc/blob/69f49bd4703e569ef01693f4d9241bcdeffa5b00/src/odbc_connection.cpp#L199-L218

but its not checking the return value for SQL_SUCCESS, not sure if thats needed

SQLUINTEGER dead = SQL_CD_FALSE;

ret = SQLGetConnectAttr(db_conn->hdbc,

SQL_ATTR_CONNECTION_DEAD,

&dead, 0, NULL);

if (ret == SQL_SUCCESS && dead == SQL_CD_TRUE) {

/* Bail early here, since we know it's gone */

zend_hash_str_del(&EG(persistent_list), hashed_details, hashed_len);

goto try_and_get_another_connection;

}

/* If the driver doesn't support it, or returns

* false (could be a false positive), fall back

* to the old heuristic.

*/

maybe the default

SQLINTEGER connection = SQL_CD_TRUE;

should be SQL_CD_FALSE??? and we should check if the return value is SQL_SUCCESS. If the connection is bad/dead would it not return SQL_FALSE?

I think changing the default to SQL_CD_TRUE would be good. Not sure the benefit of checking the return value, unless you're going to call SQLGetDiagRec or something. Either the call succeeded and set the value appropriately or the call failed and we just assume it's dead.

Ah! sorry you're right this code looks right. I'll have to keep looking around to try and figure out why I'm getting

State 08S01 Code 10054 [IBM][System i Access ODBC Driver]Communication link failure. comm rc=10054 - CWBC01047 - The IBM i server application disconnected the connection.

After the application is running for an entire day and request is made the next day.

Maybe the issue is here: https://github.com/markdirish/node-odbc/blob/69f49bd4703e569ef01693f4d9241bcdeffa5b00/lib/Pool.js#L253

I think an assumption is being made that the connection that's being popped is a good connection, and perhaps connection.connected should be checked on the popped connection. This will make sure the connection is good and if its not, to create a new connection.

Unless the developer using the node-odbc pool is expected to try catch when they run pool.query and catch the connection error message

State 08S01 Code 10054 [IBM][System i Access ODBC Driver]Communication link failure. comm rc=10054 - CWBC01047 - The IBM i server application disconnected the connection.

Then reinstantiate the pool. Which seems odd to me since I feel the pool should be gracefully handling dead connections without the developer needing to do anything.

pool = await odbc.pool({

connectionString,

initialSize: 5,

maxSize: 10,

});

Going back to the php_odbc.c source comparison. node-odbc doesn't have a

goto try_and_get_another_connection;

It just has a function to check for a dead connection. It would be nice if node-odbc realized it had a dead connection and replaced it with a new connection.