Normalize a batch of tensors

I created a function to normalize a batch. It works when the batch contains arrays, but not when it contains TensorFlow.jl tensors.

First, I create a batch the same way as in my neural network:

```julia
batch_1 = [1.0 3 5 7 8 ; 6 8 10 11 12 ; 2 4 5 6 7] # Output values
batch_1 = reshape(batch_1, (3,1,5))
batch_2 = [1.0; 6; 2] # Input values

# With the following function, the output values end up in batch[1] and the input values in batch[2]
function create_batch(batch_1, batch_2)
    return (batch_1, batch_2)
end

batch = create_batch(batch_1, batch_2)
```

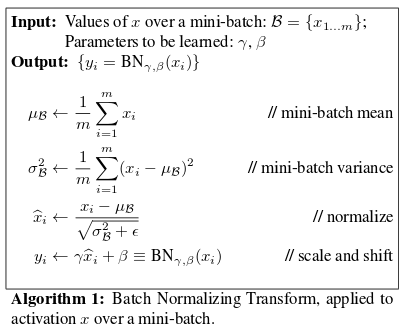

I'm working with luminance and temperature, which explains the variable names in my normalization function. I based the function on the following picture, from which I took gamma, beta and epsilon:

```julia
function Batch_norm(batch, gamma, beta, epsilon)
    lum_batch = batch[1]  # Luminance
    temp_batch = batch[2] # Temperature
    batch_size = length(temp_batch)

    # Luminance: mean over the batch dimension (Julia 0.6 syntax: sum(A, 1) sums along dim 1)
    mean_lum_base = sum(lum_batch, 1) / batch_size
    mean_lum = deepcopy(lum_batch)
    for i in 1:batch_size
        mean_lum[i,:,:] = mean_lum_base[:,1,:]
    end

    # Luminance: variance over the batch dimension
    v_lum_base = sum((lum_batch - mean_lum).^2, 1) / batch_size
    v_lum = deepcopy(lum_batch)
    for i in 1:batch_size
        v_lum[i,:,:] = v_lum_base[:,1,:]
    end

    # Luminance: normalize, then scale by gamma and shift by beta
    lum_batch_norm = (lum_batch - mean_lum) ./ sqrt.(v_lum + epsilon)
    lum_batch_new = (gamma .* lum_batch_norm) + beta

    # Temperature: mean over the batch dimension
    mean_temp_base = sum(temp_batch, 1) / batch_size
    mean_temp = deepcopy(temp_batch)
    for i in 1:batch_size
        mean_temp[i,:,:] = mean_temp_base[:,1,:]
    end

    # Temperature: variance over the batch dimension
    v_temp_base = sum((temp_batch - mean_temp).^2, 1) / batch_size
    v_temp = deepcopy(temp_batch)
    for i in 1:batch_size
        v_temp[i,:,:] = v_temp_base[:,1,:]
    end

    # Temperature: normalize, then scale and shift
    temp_batch_norm = (temp_batch - mean_temp) ./ sqrt.(v_temp + epsilon)
    temp_batch_new = (gamma .* temp_batch_norm) + beta

    return (lum_batch_new, temp_batch_new, temp_batch)
end
```
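Assuming the picture is the usual batch-normalizing transform (Algorithm 1 of Ioffe and Szegedy's batch normalization paper), `Batch_norm` computes the following for each feature, with $m$ = `batch_size` and the sums running over the batch dimension:

$$
\mu_B = \frac{1}{m}\sum_{i=1}^{m} x_i, \qquad
\sigma_B^2 = \frac{1}{m}\sum_{i=1}^{m} \left(x_i - \mu_B\right)^2,
$$

$$
\hat{x}_i = \frac{x_i - \mu_B}{\sqrt{\sigma_B^2 + \epsilon}}, \qquad
y_i = \gamma\,\hat{x}_i + \beta .
$$

The code divides by $m$ rather than $m - 1$, i.e. it uses the population variance, as in that transform.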

With the batch above, this gives:

```julia
julia> batch_norm = Batch_norm(batch, 1, 1, 0)
([0.0741799; 2.38873; 0.53709]

[0.0741799; 2.38873; 0.53709]

[0.292893; 2.41421; 0.292893]

[0.53709; 2.38873; 0.0741799]

[0.53709; 2.38873; 0.0741799], [0.0741799, 2.38873, 0.53709], [1.0, 6.0, 2.0])
```

Next, I convert batch_1 and batch_2 to tensors:

```julia
using TensorFlow

batch_1_tensor = convert(TensorFlow.Tensor{Float32}, batch_1)
batch_2_tensor = convert(TensorFlow.Tensor{Float32}, batch_2)
batch_tensor = create_batch(batch_1_tensor, batch_2_tensor)
```

However, I can't normalize this batch with my function: `batch_tensor_norm = Batch_norm(batch_tensor, 1, 0, 0)` returns the following error:

```
ERROR: MethodError: no method matching start(::TensorFlow.TensorRange)
Closest candidates are:
  start(::SimpleVector) at essentials.jl:258
  start(::Base.MethodList) at reflection.jl:560
  start(::ExponentialBackOff) at error.jl:107
  ...
Stacktrace:
 [1] Batch_norm(::Tuple{TensorFlow.Tensor{Float32},TensorFlow.Tensor{Float32}}, ::Int64, ::Int64, ::Int64) at ./REPL[14]:11
```

Moreover, I would like my function to return a tensor directly rather than an array.

For usage help, I recommend Stack Overflow, the Julia Slack, or the Julia Discourse forum. GitHub issues are best for bug reports and feature requests.

As a general statement, without a close look at your code, I think you might want to work through a tutorial or two. I say this because TensorFlow code normally builds up a graph using `placeholder` and `get_variable`, and then calls `run` on it. Remember, a TensorFlow `Tensor` is not a container type; it is a symbolic type. Code that works on arrays rarely translates trivially. Defining a graph that loops over parts of a tensor and sets individual elements is nontrivial, and generally not the right way to go about things. (You might have more luck with that kind of approach in, say, Flux.jl.)
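To illustrate the point about avoiding loops and element assignment, here is a minimal sketch of the same normalization written only with reductions and broadcasting. It assumes Julia 1.x and plain arrays (not TensorFlow.jl tensors), and `batch_norm_vec` is a hypothetical name; a TensorFlow.jl version would need the corresponding graph ops, which are not shown here.

```julia
using Statistics

# Hypothetical loop-free variant (Julia 1.x, plain arrays; not TensorFlow.jl).
# Normalizes `x` along dimension 1, the batch dimension, as Batch_norm does.
function batch_norm_vec(x::AbstractArray, gamma, beta, epsilon)
    mu = mean(x; dims=1)                          # per-feature mean over the batch
    sigma2 = var(x; dims=1, corrected=false)      # divide by m, like sum(...) / batch_size
    xhat = (x .- mu) ./ sqrt.(sigma2 .+ epsilon)  # broadcasting replaces the copy-and-fill loops
    return gamma .* xhat .+ beta                  # scale by gamma, shift by beta
end

# Same inputs as in the question: luminance as 3×1×5, temperature as a 3-vector.
lum = reshape([1.0 3 5 7 8; 6 8 10 11 12; 2 4 5 6 7], (3, 1, 5))
temp = [1.0, 6, 2]
lum_new = batch_norm_vec(lum, 1, 1, 0)
temp_new = batch_norm_vec(temp, 1, 1, 0)
```

For these inputs it should reproduce the values printed earlier in the thread (e.g. 0.0741799, 2.38873, 0.53709 for the temperature column). Because every step is a whole-array operation, there is no `deepcopy`, no indexed assignment and no loop over `1:batch_size`.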

Thank you for your answer, @oxinabox. I posted this question to Stack Overflow. Flux.jl does not work with placeholders (I opened an issue about this), so I can't use it yet. I have a complete network with placeholders and calls to `run`, but I think it is too long to post in full, so I created a minimal example.

Having only looked at the error in the original post: should we be defining `start(::TensorRange)`, @oxinabox?

So, to support this in TensorFlow.jl we'd need:

- indexed assignment (already an open issue);
- iteration for `TensorRange` (I'd leave this until Julia 0.7, since iteration is changing there).

And the code can't use `deepcopy`. We might be able to define `similar`, which would do, but it might be fiddly. Or do we have it already?

The result would be a very complicated graph.
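On plain arrays, the `similar` suggestion can be checked against the original code: `deepcopy` is only used there to get an array of the right shape, because the loop overwrites every element. The sketch below assumes Julia 1.x and ordinary arrays (it says nothing about whether TensorFlow.jl defines `similar` for tensors), and the variable names are hypothetical. It also shows that the fill loop is equivalent to a single `repeat` along the batch dimension.

```julia
# Plain-array illustration (Julia 1.x), not TensorFlow.jl.
x = reshape([1.0 3 5 7 8; 6 8 10 11 12; 2 4 5 6 7], (3, 1, 5))
m = size(x, 1)                        # batch size
mu_base = sum(x; dims=1) ./ m         # 1×1×5 per-feature means

mu = similar(x)                       # same element type and shape, contents uninitialized
for i in 1:m
    mu[i, :, :] = mu_base[1, :, :]    # every row is overwritten, so no copy of x is needed
end

# Loop-free equivalent: repeat the means along the batch dimension.
mu_repeat = repeat(mu_base, outer=(m, 1, 1))
```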