Graph differences between Python and Julia

I thought it would be useful to have an issue that tracks how the graphs produced by the Julia version differ from the graphs produced by the Python version. Let's update these bullet points as the package progresses and add more examples as they come up.

-

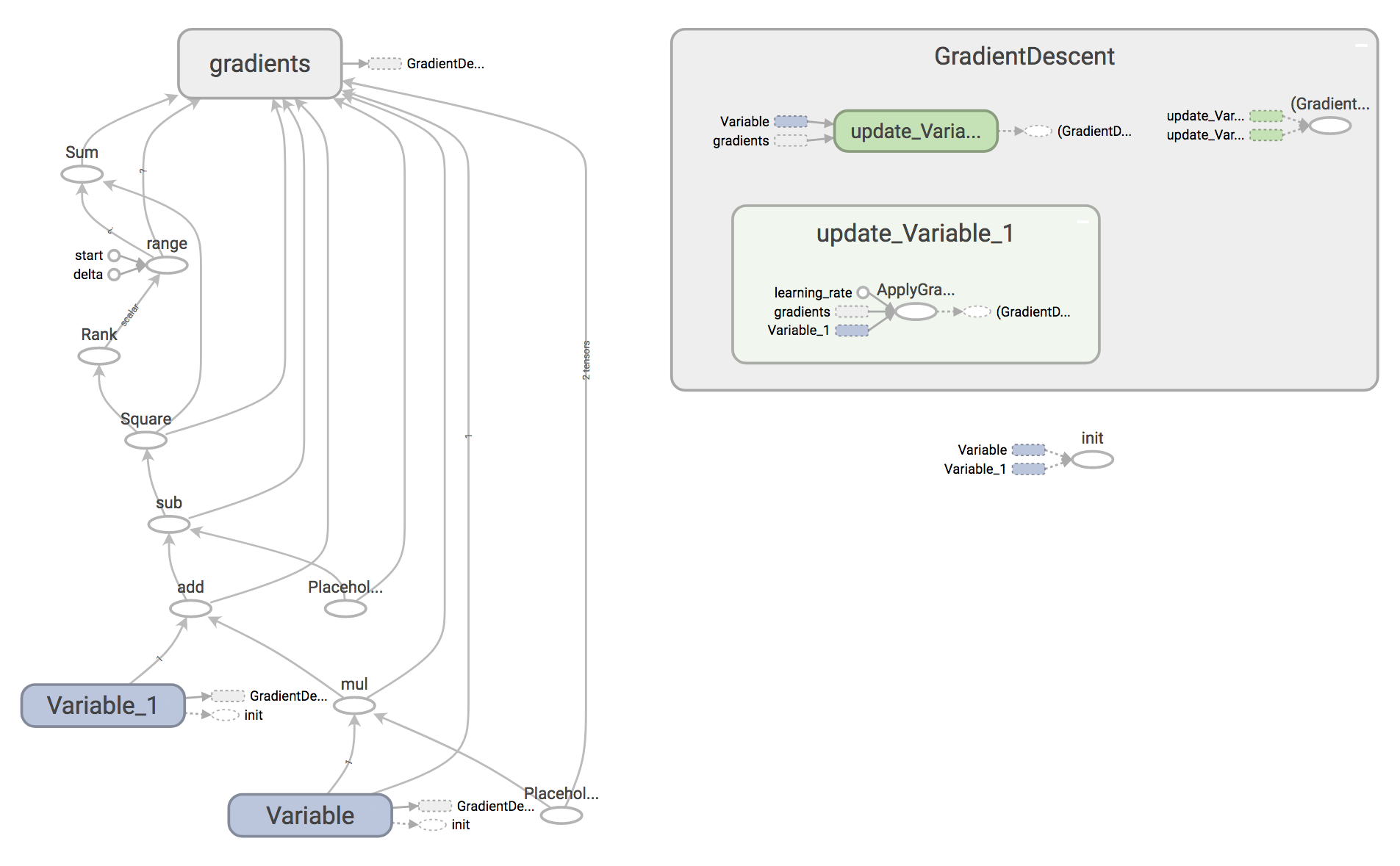

[ ] Group optimizer nodes. In the julia version, the operations of something like

GradientDescentOptimizerare not grouped together. -

[x]

TensorFlow.summary.FileWriter(dir)fails ifdirdoes not exists, while the python version creates it. (fixed by #331 )
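For anyone still on a version without the fix from #331, one possible workaround is to create the directory before constructing the writer. This is only a sketch: it relies on `mkpath` from Base Julia, and the `logdir` name is purely illustrative.

```julia
logdir = "testlog"   # illustrative directory name, not prescribed by the package

# mkpath creates the directory (including missing parents) and does nothing
# if it already exists, so it is safe to call unconditionally.
mkpath(logdir)

# The writer can then be created as in the example code further down:
# summary_writer = TensorFlow.summary.FileWriter(logdir)
```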

Linear Regression Example

Description: A simple LinReg example from the TensorFlow documentation

Source: https://www.tensorflow.org/get_started/get_started#complete_program

| Python (reference) | Julia |
|---|---|
| | |

-

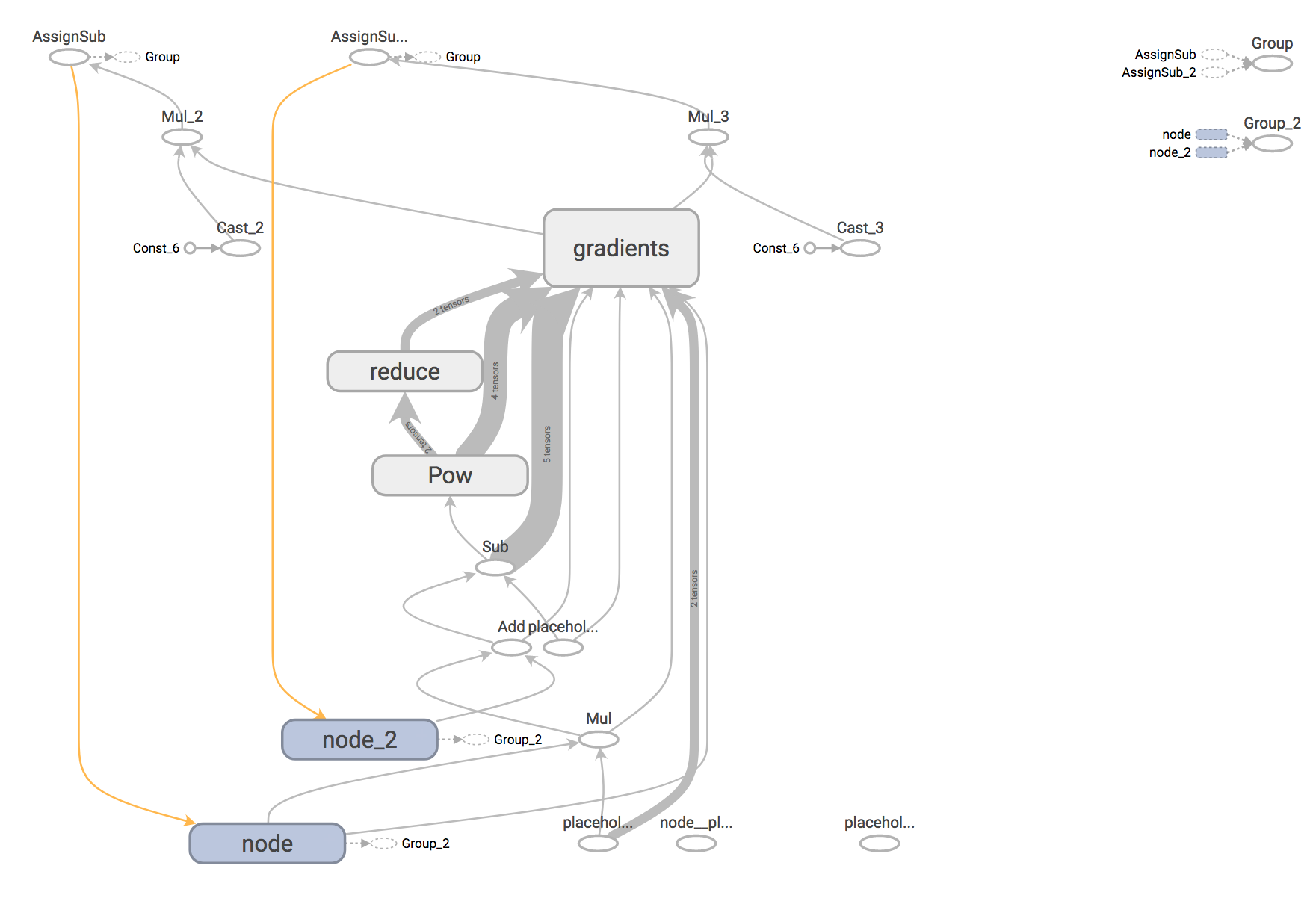

In Julia some of the operations are grouped into a

reduceblock, while python doesn't do that. That doesn't strictly seem like a bad thing that needs fixing though. -

Python groups

GradientDescentnicely together. All these free floating notes on the top left of the julia version are fromtrain(I checked that by usingwith_ops_name) -

The Julia version causes two dummy placeholder variables that are visible in the bottom right of the graph. They are named

node__placeholder__1_1andplaceholder__placeholder__1_1.

Code for the Julia version:

```julia
using TensorFlow

tfsession = Session(Graph())

# Model parameters
W = Variable([.3f0])
b = Variable([-.3f0])

# Model input and output
x = placeholder(Float32)
linear_model = W .* x + b
y = placeholder(Float32)

# loss
loss = reduce_sum((linear_model .- y).^2)

# optimizer
optimize = train.GradientDescentOptimizer(0.01)
alg = train.minimize(optimize, loss)

# training data
x_train = Float32[1, 2, 3, 4]
y_train = Float32[0, -1, -2, -3]

# training loop
run(tfsession, global_variables_initializer())
for i in 1:1000
    run(tfsession, alg, Dict(x=>x_train, y=>y_train))
end

# evaluate training accuracy
curr_W, curr_b, curr_loss = run(tfsession, [W, b, loss], Dict(x=>x_train, y=>y_train))
println("W: $(curr_W) b: $(curr_b) loss: $(curr_loss)")

summary_writer = TensorFlow.summary.FileWriter("testlog")
```

Example 2

...