depthai


Extremely confusing API

- What is the difference between `createMonoCamera` and `createColorCamera`? Aren't they both referring to the same monocular cameras on the right and the left? If it's just the grayscale that makes the difference, having a separate name such as `MonoCamera` is confusing, as the camera remains the same and the frames are just being converted from RGB to grayscale.

- The compiler warns that it 'can not find reference to MonoCameraProperties in depthai.py'. This makes it hard to navigate inside the API to see the possible settings or the calls that I could use. It makes development hard, as I need to search the documentation online for every setting that depthai supports.

- The code is too verbose. For instance, `depth_preview.py` has the following code:

    # Define a source - two mono (grayscale) cameras
    left = pipeline.createMonoCamera()
    left.setResolution(dai.MonoCameraProperties.SensorResolution.THE_400_P)
    left.setBoardSocket(dai.CameraBoardSocket.LEFT)

    right = pipeline.createMonoCamera()
    right.setResolution(dai.MonoCameraProperties.SensorResolution.THE_400_P)
    right.setBoardSocket(dai.CameraBoardSocket.RIGHT)

    # Create a node that will produce the depth map (using disparity output as it's easier to visualize depth this way)
    depth = pipeline.createStereoDepth()
    depth.setConfidenceThreshold(200)
    depth.setOutputDepth(False)

but wouldn't it be easier to create a depth node with a single line and retrieve the left and right frames as follows?

    cam.retrieve_image(left_matrix, View.LEFT)    # left rgb
    cam.retrieve_image(right_matrix, View.RIGHT)  # right rgb
    cam.retrieve_depth()                          # 16 bit depth image

- Please add a simplistic example to retrieve 1. RGB Left 2. RGB Right 3. Depth Image 4. Distance on mouse click. I see an example with open3d but having too many dependencies makes it hard to integrate.

Hi @Zumbalamambo ,

Sorry about the delay.

- What is the difference between createMonoCamera and createColorCamera? Aren't they both referring to the same monocular cameras on the right and the left? If it's just the grayscale that makes the difference, having a separate name such as MonoCamera is confusing, as the camera remains the same and the frames are just being converted from RGB to grayscale.

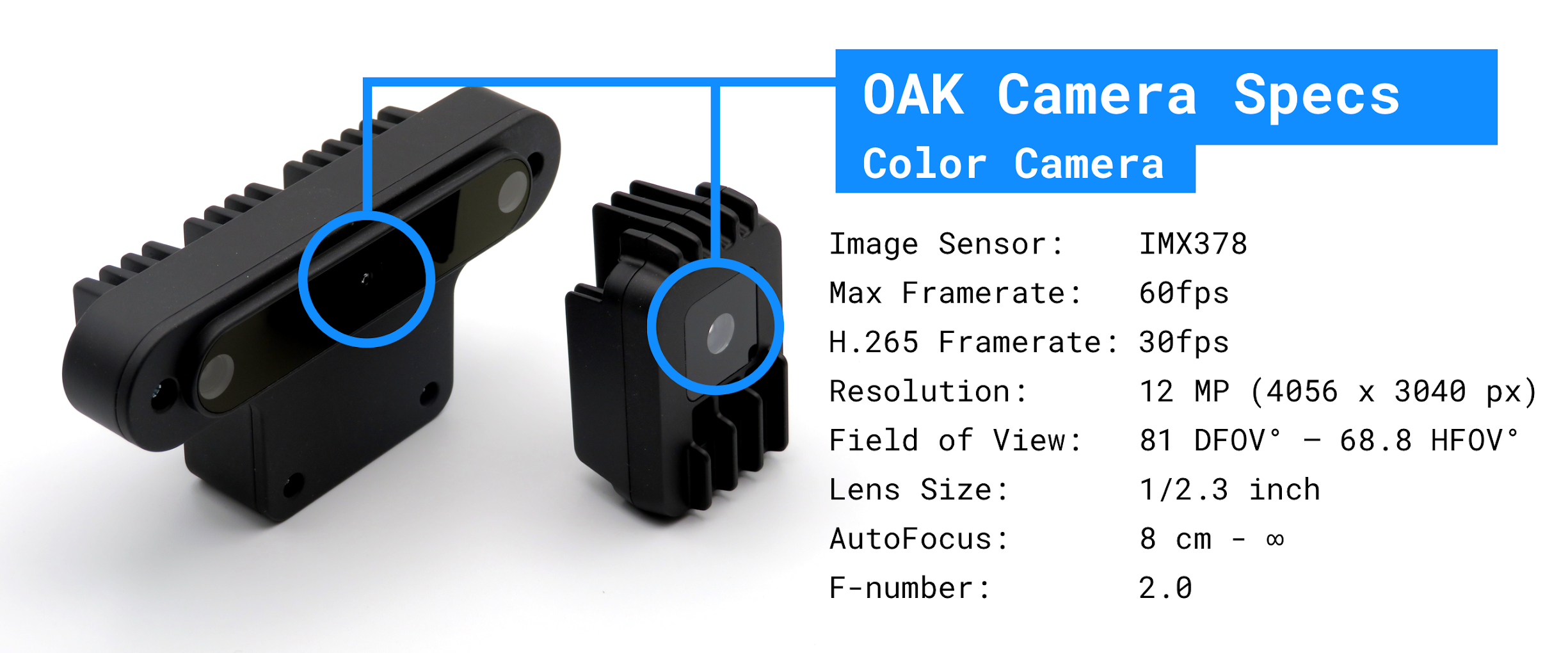

I'm not sure which DepthAI model you are running, but here's an overview of the cameras in 2 of the more popular Luxonis models (OAK-1, the single-camera, and OAK-D, the 3-camera):

So the MonoCamera node is mainly intended for global-shutter mono cameras (as are used here), whereas the ColorCamera node is used for color image sensors. The output formats, controls, etc. differ between the two because of their sensor formats.

But we're definitely up for suggestions on this if you think having separate color and mono definitions is confusing.

- The compiler warns that it 'can not find reference to MonoCameraProperties in depthai.py'. This makes it hard to navigate inside the API to see the possible settings or the calls that I could use. It makes development hard, as I need to search the documentation online for every setting that depthai supports.

Which development environment are you using in which you are seeing this? CC: @Erol444 informationally.

- The code is too verbose. For instance, depth_preview.py has the following code.

I am not deep into the code, so I cannot comment too much here, but I think the recommendation you give is actually for getting frames from the left or right cameras, whereas the code you are referencing is instantiating the cameras. The reason for allowing flexibility beyond just right/left is that our system actually supports 4x cameras, and they don't necessarily need to be right/left in this way. They could be something else and still be combined as the developer would like. For example, a grayscale camera could be used with an RGB camera of a different resolution, with its frames first put through the ImageManip node to adjust the resolution and lens parameters (i.e. to apply a transform) so that the rectification/resolution match.

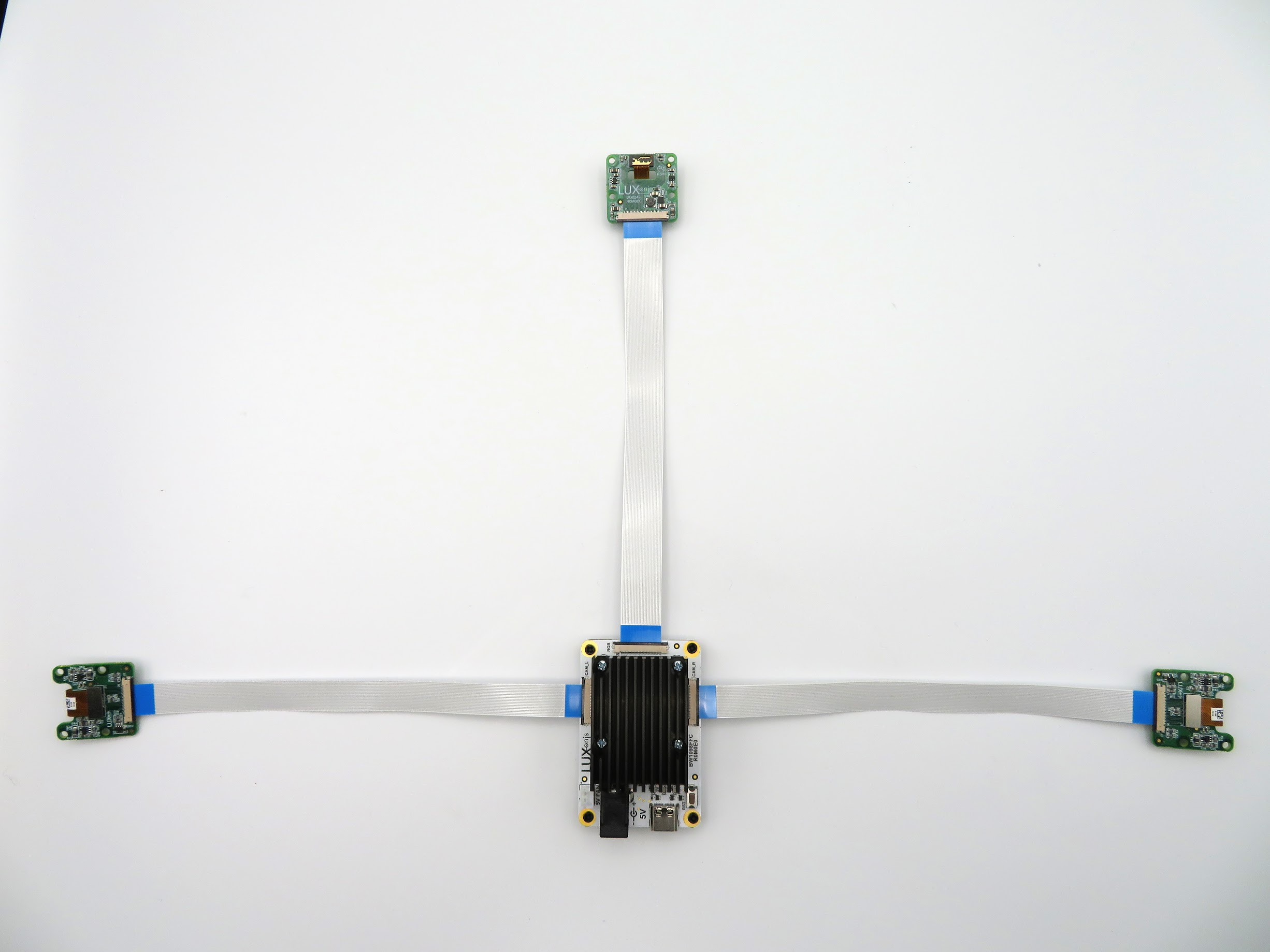

A 3-camera setup is shown below:

That said, we are planning on making abstraction layers on top of the given, more rudimentary functions to simplify things.

Thoughts on this @themarpe ? I don't know it deep enough to say if it makes sense in this case.

- Please add a simplistic example to retrieve 1. RGB Left 2. RGB Right 3. Depth Image 4. Distance on mouse click. I see an example with open3d but having too many dependencies makes it hard to integrate.

Ah, this makes sense. I'm not sure what product you're using (it could be a custom one for example). If you're using OAK-D (specs here, and above) then likely this is the source of confusion. There is no left RGB or right RGB camera.

There is a 1MP left grayscale, a 1MP right grayscale, and a center 12MP RGB.

But in terms of samples, here is the code sample for retrieving the (center) RGB camera image data:

And here is the sample for retrieving the left and right grayscale camera image data:

And for retrieving the depth image, there are a couple depending on exactly what you are looking to do:

- Depth Image alone, here: https://docs.luxonis.com/projects/api/en/latest/samples/03_depth_preview/

- Depth Image with reference code to pull XYZ location of a given region of interest: https://docs.luxonis.com/projects/api/en/latest/samples/27_spatial_location_calculator/

- Pulling the XYZ location of a detected object using MobileNetSSDv2: https://docs.luxonis.com/projects/api/en/latest/samples/26_2_spatial_mobilenet_mono/
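The samples above deal in both disparity and depth, so a short sketch of how the two relate may help. It uses the standard stereo relation `depth = focal_length_px * baseline / disparity`. The function names are hypothetical, and the numeric values (640 px wide 400P frames, a 71.9 degree horizontal field of view, a 75 mm baseline) are assumptions for illustration only; real values should be read from the device calibration.

```python
import math


def focal_length_px(image_width_px, hfov_deg):
    """Focal length in pixels derived from image width and horizontal field of view."""
    return image_width_px / (2.0 * math.tan(math.radians(hfov_deg) / 2.0))


def disparity_to_depth_mm(disparity_px, focal_px, baseline_mm):
    """Convert a disparity in pixels to a depth in millimetres.

    Returns None for zero or negative disparity, which carries no depth information.
    """
    if disparity_px <= 0:
        return None
    return focal_px * baseline_mm / disparity_px


# Assumed values, for illustration only (not read from a device).
ASSUMED_WIDTH_PX = 640
ASSUMED_HFOV_DEG = 71.9
ASSUMED_BASELINE_MM = 75.0

if __name__ == "__main__":
    focal = focal_length_px(ASSUMED_WIDTH_PX, ASSUMED_HFOV_DEG)
    for disparity in (5, 20, 80):
        depth_mm = disparity_to_depth_mm(disparity, focal, ASSUMED_BASELINE_MM)
        print(f"disparity {disparity:3d} px -> depth {depth_mm:8.1f} mm")
```

Because depth is inversely proportional to disparity, nearby objects produce large disparities and distant ones produce small disparities, which is why a disparity map is often the easier of the two to visualize.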

Thoughts?

Thanks, Brandon

- I'm using OAK-D

- I'm using PyCharm

- Of course, but what I meant was that I had to write 17 lines of code to retrieve the RGB and depth images. It makes the code look messy. The following is an example of instantiation:

    camRgb = pipeline.createColorCamera()
    camRgb.setResolution(dai.ColorCameraProperties.SensorResolution.THE_1080_P)

    xoutRgb = pipeline.createXLinkOut()
    xoutRgb.setStreamName("rgb")
    camRgb.video.link(xoutRgb.input)

    left = pipeline.createMonoCamera()
    left.setResolution(dai.MonoCameraProperties.SensorResolution.THE_400_P)
    left.setBoardSocket(dai.CameraBoardSocket.LEFT)

    right = pipeline.createMonoCamera()
    right.setResolution(dai.MonoCameraProperties.SensorResolution.THE_400_P)
    right.setBoardSocket(dai.CameraBoardSocket.RIGHT)

    depth = pipeline.createStereoDepth()
    left.out.link(depth.left)
    right.out.link(depth.right)

    xout = pipeline.createXLinkOut()
    xout.setStreamName("disparity")
    depth.disparity.link(xout.input)

Instead, wouldn't it be much better to simplify everything by having default parameters and changing them only as and when required, or maybe by creating yet another node, called RuntimeParameter, in which the parameters can be optionally configured? The current design might aid visual scripting, but most projects don't rely on visual scripting, where we link multiple nodes and are later left looking at the connections without any clue once the code has grown big.

- DepthAI has zero options to record an RGBD dataset that I can play back later, so I have to do it myself. The example that you've quoted has an option to save the depth and RGB images. But later on, during inference, how do I use them in conjunction with depthai to retrieve the depth for a given xy coordinate? Without playback functionality, it is extremely tough to record the production or industrial environment and develop the application in the lab.
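The default-parameter idea from the third point could be sketched roughly as follows. `RgbdDefaults` and `build_rgbd_pipeline` are hypothetical names, not part of depthai; the body only reuses the node calls already shown in the instantiation snippet above, plus `dai.Pipeline()` to create the pipeline. Treat it as one possible wrapper under those assumptions, not as the library's planned abstraction.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RgbdDefaults:
    """Hypothetical bundle of defaults; override only what needs to change."""
    color_resolution: str = "THE_1080_P"
    mono_resolution: str = "THE_400_P"
    rgb_stream: str = "rgb"
    disparity_stream: str = "disparity"


def build_rgbd_pipeline(cfg: Optional[RgbdDefaults] = None):
    """Build the RGB + disparity pipeline from the snippet above, driven by defaults."""
    # Imported lazily so the defaults can be inspected without depthai installed.
    import depthai as dai

    cfg = cfg or RgbdDefaults()
    pipeline = dai.Pipeline()

    # Color camera streamed to the host.
    cam_rgb = pipeline.createColorCamera()
    cam_rgb.setResolution(
        getattr(dai.ColorCameraProperties.SensorResolution, cfg.color_resolution))
    xout_rgb = pipeline.createXLinkOut()
    xout_rgb.setStreamName(cfg.rgb_stream)
    cam_rgb.video.link(xout_rgb.input)

    # Left and right mono cameras feeding the stereo depth node.
    depth = pipeline.createStereoDepth()
    for socket_name, depth_input in (("LEFT", depth.left), ("RIGHT", depth.right)):
        mono = pipeline.createMonoCamera()
        mono.setResolution(
            getattr(dai.MonoCameraProperties.SensorResolution, cfg.mono_resolution))
        mono.setBoardSocket(getattr(dai.CameraBoardSocket, socket_name))
        mono.out.link(depth_input)

    # Disparity streamed to the host.
    xout_disp = pipeline.createXLinkOut()
    xout_disp.setStreamName(cfg.disparity_stream)
    depth.disparity.link(xout_disp.input)
    return pipeline
```

With a wrapper like this, the common case becomes `pipeline = build_rgbd_pipeline()`, and a single changed setting becomes `build_rgbd_pipeline(RgbdDefaults(mono_resolution="THE_720_P"))`, while the node-level API stays available for anything the defaults do not cover.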

Hello @Zumbalamambo,

- as per the image that Brandon posted, those are actually different sensors. So the frames from the mono cameras aren't actually converted from RGB to grayscale - they are grayscale by default (from the sensor). That's why the cameras are also accessed differently in the API.

- What version of the `depthai` package are you using? Did you follow the installation instructions? If so, could you please provide the error that is returned and the script you are using? We would be more than happy to take a look.

- You are absolutely correct, and this is actually the next step we are taking. Funnily enough, we were just discussing this with another developer today. We want to make the API such that displaying an RGB image on the host requires max 10 lines of code. This means we will be working on a top-level abstraction and node grouping on top of the current API layer. However, there are currently tons of features that users are requesting, which we are focusing on. I estimate that we will start working on this top-level abstraction layer in the next few months :)

- From my knowledge of o3d, you can save the image/pcl from within the library, right? Here is the demo for RGBD to point cloud: gen1 and for gen2 (this is not on `main` because it needs the calibration to be read from gen1, manually stored on the host, and fed to the device). If that is not what you are looking for, please correct me and I can add it to my TODO list of experiments/demos to make :)

And apologies for the late reply!

@Erol444

Thank you!

2. I have installed v2.2.1.0 and followed the installation instructions. There isn't any error, but I am not able to navigate from the methods to the other available APIs just by clicking on the method names. It says "unknown reference....".

- I would highly appreciate an update to the API. In its current form, I just don't understand which nodes I need to connect to get things. I had to look at all 30 examples to find the possible connections, then try and replace them like wire connections to see what works on a trial-and-error basis.

- As I have mentioned here, I see an example with open3d, but having too many dependencies makes it hard to integrate. As I have said earlier, depthai has zero support for record and playback. My ideal solution would be something like ZED, where I can record an SVO file and load it with the same API. If I detect an object in the recorded data, I want to be able to measure its distance from the recorded data as well. Simply put, during playback I would like to measure the distance on a mouse click at a specific XY coordinate of the RGB image.

During this time, it's just not possible to be in the production environment in which the OAK-D is intended to be used, so we rely on recording all the data, which we can take home and develop against. Sadly, depthai doesn't have this much-needed record-and-playback functionality, with the ability to use the depthai APIs on played-back recordings.
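On the IDE navigation problem in point 2: one possible workaround, assuming the cause is that the package ships as a compiled extension module without type information the IDE can index, is to list the available names at runtime. The helper names below are hypothetical, and the sketch falls back to a standard-library module when depthai is not installed.

```python
import importlib


def public_members(obj):
    """Return the sorted public attribute names of a module, class, or object."""
    return sorted(name for name in dir(obj) if not name.startswith("_"))


def describe(module_name, dotted_attr=""):
    """Import a module, walk an optional dotted attribute path, and list its public names."""
    target = importlib.import_module(module_name)
    for part in filter(None, dotted_attr.split(".")):
        target = getattr(target, part)
    return public_members(target)


if __name__ == "__main__":
    try:
        # Enum path taken from the snippets earlier in this thread.
        print(describe("depthai", "MonoCameraProperties.SensorResolution"))
    except ImportError:
        print("depthai is not installed; demonstrating on the standard library instead")
        print(describe("json"))
```

Calling `help()` on the same objects in a Python console prints whatever docstrings the package exposes, which can stand in for click-through navigation until the IDE can resolve the names.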

Hi @Zumbalamambo ,

Thanks for the explanation here. And sorry for the trouble.

We want anyone who purchases DepthAI to be happy with it or get their money back. So we are more than happy to issue a full refund for your purchase if you would like. If you'd like to do so please email me at support at luxonis dot com.

That said, we will continue to improve the API, and what you mention in terms of being able to record data and then use the same API to interact with it is a core thing we intend to support. And actually we do support feeding in images from the host for both stereo depth and also neural inference. Actually every node in the pipeline builder supports image data (or videos) coming from the host instead.
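As a stopgap on the host side, recorded depth frames can be stored and queried without any device attached. The sketch below is a standard-library-only illustration: the file layout and the names `write_depth_frame`, `read_depth_frames` and `depth_at` are hypothetical, and it assumes each depth frame is a grid of 16-bit millimetre values, already aligned to the RGB image, with 0 meaning "no valid depth".

```python
import array
import io
import struct
import sys

# Per-frame header: width and height as little-endian unsigned 16-bit integers.
HEADER = struct.Struct("<HH")


def write_depth_frame(fh, rows):
    """Append one depth frame (a list of rows of uint16 millimetre values) to a binary file."""
    height, width = len(rows), len(rows[0])
    pixels = array.array("H", (value for row in rows for value in row))
    if sys.byteorder == "big":
        pixels.byteswap()  # keep the on-disk format little-endian
    fh.write(HEADER.pack(width, height))
    fh.write(pixels.tobytes())


def read_depth_frames(fh):
    """Yield the recorded frames as lists of rows, in the order they were written."""
    while True:
        header = fh.read(HEADER.size)
        if len(header) < HEADER.size:
            return
        width, height = HEADER.unpack(header)
        pixels = array.array("H")
        pixels.frombytes(fh.read(width * height * pixels.itemsize))
        if sys.byteorder == "big":
            pixels.byteswap()
        yield [pixels[r * width:(r + 1) * width].tolist() for r in range(height)]


def depth_at(frame, x, y):
    """Depth in millimetres at pixel (x, y), or None when the pixel has no valid depth."""
    value = frame[y][x]
    return value if value > 0 else None


if __name__ == "__main__":
    recording = io.BytesIO()  # stands in for a file opened in binary mode
    write_depth_frame(recording, [[0, 1200], [1500, 65535]])
    recording.seek(0)
    for frame in read_depth_frames(recording):
        print(depth_at(frame, 1, 0), depth_at(frame, 0, 0))
```

In a real recording the frames would come from the device's depth output queue and the matching RGB frames would be saved alongside them, so that a click on the played-back RGB image maps to the same pixel in the stored depth frame.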

But I think I'm at high risk of not understanding what you need here, so please correct me if I'm wrong on the examples below. And the offer of a full refund stands at any point: we did not meet your expectations with the device, and it is not incumbent on you to wait for us to do so. We do not expect you to wait for us to implement what you need (but we will implement it, as we completely agree that it is needed, as you mention).

So anyway, here goes WRT being able to do stereo depth, inference, etc. from the host:

- This example (here) shows how to get disparity results from images passed in from the host. (Also in docs, here, but doesn't show photos, yet).

- Running neural inference on recorded video (here).

- Running object tracker on video from the host (here).

And then an example of having DepthAI remap the depth and RGB together so they're aligned is here. On that, keep in mind that the stereo cameras are grayscale, so having RGB on top of depth is a reprojection that is done on-device, unlike if the stereo pair were RGB, in which case no such re-alignment calculations would be required. The reason the stereo pair is grayscale is that the low-light performance is a lot better this way than if they were RGB.

So with 29_rgb_depth_aligned.py in there, the depth map is aligned to RGB and can then be sent to SpatialCalculator to get the XYZ position of each RGB pixel (that has a valid depth). And this would work from the host as well.

Example below:

https://youtu.be/TuXbULqsG1A


I don't know if the existing example shows how to do this from the host. (I was about to check, but have to run now.)

But either way, we could write this example so that you can use recorded images and then flick through to get the XYZ location of each pixel you click on, or run object detection on it and get the XYZ shown for the object.

Would you like us to do so?

We are more than happy to issue a full refund, though, as I agree this should have been a default example. We are behind, and that is no excuse.

Thoughts?

Thanks, Brandon

@Luxonis-Brandon

Sorry for the late response. I would highly appreciate an example that shows how we could get the XYZ position from an xy position in the RGB image. Thank you! Also, please reduce the amount of code that it takes to grab the feeds.

Thanks. Yes we can make this. I think @saching13 would likely be the best to do the example.

Do you have any deadline for which you would need this?

Thanks, Brandon

Thank you! The product deployment is at the end of June. I would appreciate an update before the deployment so I can integrate this sensor.

Sounds good thanks.


Hello @Zumbalamambo,

I'm currently just working on this for another project. I will make a demo that shows how to achieve this and add that to depthai-experiments, hopefully, this week. I will circle back here when I push it:)

The code that converts an ROI to XYZ positions on the host (you could just provide a single pixel) is here. Note that the color and depth frames are aligned.
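For readers without the link, the general idea can be sketched with a generic pinhole camera model. This is not the linked code: the function names are hypothetical, the intrinsics `fx`, `fy`, `cx`, `cy` are assumed to come from the device calibration, and the depth frame is assumed to hold millimetre values aligned to the color frame, with out-of-range values treated as invalid.

```python
from statistics import mean


def roi_depth_mm(depth_frame, roi, min_mm=100, max_mm=10000):
    """Average the valid depth values inside roi = (x0, y0, x1, y1), end-exclusive."""
    x0, y0, x1, y1 = roi
    values = [
        depth_frame[y][x]
        for y in range(y0, y1)
        for x in range(x0, x1)
        if min_mm <= depth_frame[y][x] <= max_mm
    ]
    return mean(values) if values else None


def pixel_to_xyz_mm(u, v, z_mm, fx, fy, cx, cy):
    """Back-project pixel (u, v) at depth z_mm using the pinhole camera model.

    Y grows downward here, following image coordinates; flip its sign for a Y-up convention.
    """
    x_mm = (u - cx) * z_mm / fx
    y_mm = (v - cy) * z_mm / fy
    return x_mm, y_mm, z_mm


def roi_to_xyz_mm(depth_frame, roi, fx, fy, cx, cy):
    """XYZ of the ROI centre at the ROI's average valid depth, or None if no depth is valid."""
    z_mm = roi_depth_mm(depth_frame, roi)
    if z_mm is None:
        return None
    x0, y0, x1, y1 = roi
    u = (x0 + x1 - 1) / 2.0  # centre of the pixels covered by the ROI
    v = (y0 + y1 - 1) / 2.0
    return pixel_to_xyz_mm(u, v, z_mm, fx, fy, cx, cy)


if __name__ == "__main__":
    # Tiny synthetic 4x4 depth frame in millimetres; one pixel has no valid depth.
    frame = [[1000] * 4 for _ in range(4)]
    frame[0][0] = 0
    print(roi_to_xyz_mm(frame, (0, 0, 2, 2), fx=500.0, fy=500.0, cx=2.0, cy=2.0))
```

A single clicked pixel `(x, y)` is just the ROI `(x, y, x + 1, y + 1)`; with OpenCV, the click itself can be captured through `cv2.setMouseCallback` on the window showing the RGB image.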

Thanks, Erik